Pooling (neural Networks) on:

[Wikipedia]

[Google]

[Amazon]

In deep learning, a convolutional neural network (CNN, or ConvNet) is a class of

A convolutional neural network consists of an input layer, hidden layers and an output layer. In any feed-forward neural network, any middle layers are called hidden because their inputs and outputs are masked by the activation function and final

A convolutional neural network consists of an input layer, hidden layers and an output layer. In any feed-forward neural network, any middle layers are called hidden because their inputs and outputs are masked by the activation function and final

Phoneme Recognition Using Time-Delay Neural Networks

' IEEE Transactions on Acoustics, Speech, and Signal Processing, Volume 37, No. 3, pp. 328. - 339 March 1989. Thus, while also using a pyramidal structure as in the neocognitron, it performed a global optimization of the weights instead of a local one. TDNNs are convolutional networks that share weights along the temporal dimension. They allow speech signals to be processed time-invariantly. In 1990 Hampshire and Waibel introduced a variant which performs a two dimensional convolution.John B. Hampshire and Alexander Waibel,

Connectionist Architectures for Multi-Speaker Phoneme Recognition

', Advances in Neural Information Processing Systems, 1990, Morgan Kaufmann. Since these TDNNs operated on spectrograms, the resulting phoneme recognition system was invariant to both shifts in time and in frequency. This inspired

Backpropagation Applied to Handwritten Zip Code Recognition

AT&T Bell Laboratories

The feed-forward architecture of convolutional neural networks was extended in the neural abstraction pyramid by lateral and feedback connections. The resulting recurrent convolutional network allows for the flexible incorporation of contextual information to iteratively resolve local ambiguities. In contrast to previous models, image-like outputs at the highest resolution were generated, e.g., for semantic segmentation, image reconstruction, and object localization tasks.

The feed-forward architecture of convolutional neural networks was extended in the neural abstraction pyramid by lateral and feedback connections. The resulting recurrent convolutional network allows for the flexible incorporation of contextual information to iteratively resolve local ambiguities. In contrast to previous models, image-like outputs at the highest resolution were generated, e.g., for semantic segmentation, image reconstruction, and object localization tasks.

For example, in CIFAR-10, images are only of size 32×32×3 (32 wide, 32 high, 3 color channels), so a single fully connected neuron in the first hidden layer of a regular neural network would have 32*32*3 = 3,072 weights. A 200×200 image, however, would lead to neurons that have 200*200*3 = 120,000 weights.

Also, such network architecture does not take into account the spatial structure of data, treating input pixels which are far apart in the same way as pixels that are close together. This ignores

For example, in CIFAR-10, images are only of size 32×32×3 (32 wide, 32 high, 3 color channels), so a single fully connected neuron in the first hidden layer of a regular neural network would have 32*32*3 = 3,072 weights. A 200×200 image, however, would lead to neurons that have 200*200*3 = 120,000 weights.

Also, such network architecture does not take into account the spatial structure of data, treating input pixels which are far apart in the same way as pixels that are close together. This ignores

When dealing with high-dimensional inputs such as images, it is impractical to connect neurons to all neurons in the previous volume because such a network architecture does not take the spatial structure of the data into account. Convolutional networks exploit spatially local correlation by enforcing a sparse local connectivity pattern between neurons of adjacent layers: each neuron is connected to only a small region of the input volume.

The extent of this connectivity is a hyperparameter called the

When dealing with high-dimensional inputs such as images, it is impractical to connect neurons to all neurons in the previous volume because such a network architecture does not take the spatial structure of the data into account. Convolutional networks exploit spatially local correlation by enforcing a sparse local connectivity pattern between neurons of adjacent layers: each neuron is connected to only a small region of the input volume.

The extent of this connectivity is a hyperparameter called the

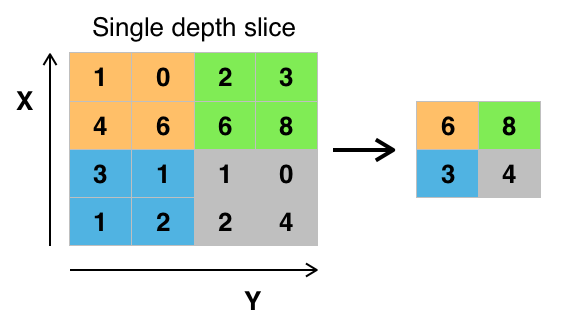

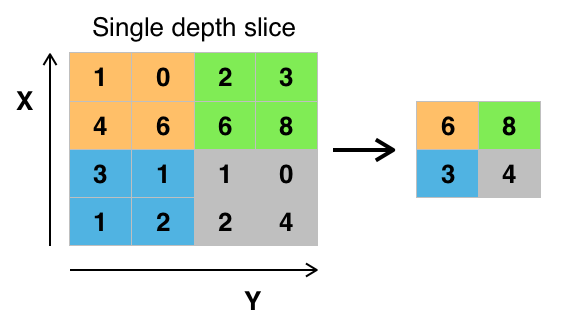

Another important concept of CNNs is pooling, which is a form of non-linear down-sampling. There are several non-linear functions to implement pooling, where ''max pooling'' is the most common. It partitions the input image into a set of rectangles and, for each such sub-region, outputs the maximum.

Intuitively, the exact location of a feature is less important than its rough location relative to other features. This is the idea behind the use of pooling in convolutional neural networks. The pooling layer serves to progressively reduce the spatial size of the representation, to reduce the number of parameters,

Another important concept of CNNs is pooling, which is a form of non-linear down-sampling. There are several non-linear functions to implement pooling, where ''max pooling'' is the most common. It partitions the input image into a set of rectangles and, for each such sub-region, outputs the maximum.

Intuitively, the exact location of a feature is less important than its rough location relative to other features. This is the idea behind the use of pooling in convolutional neural networks. The pooling layer serves to progressively reduce the spatial size of the representation, to reduce the number of parameters,  " Region of Interest" pooling (also known as RoI pooling) is a variant of max pooling, in which output size is fixed and input rectangle is a parameter.

Pooling is a downsampling method and an important component of convolutional neural networks for

" Region of Interest" pooling (also known as RoI pooling) is a variant of max pooling, in which output size is fixed and input rectangle is a parameter.

Pooling is a downsampling method and an important component of convolutional neural networks for

artificial neural network

Artificial neural networks (ANNs), usually simply called neural networks (NNs) or neural nets, are computing systems inspired by the biological neural networks that constitute animal brains.

An ANN is based on a collection of connected units ...

(ANN), most commonly applied to analyze visual imagery. CNNs are also known as Shift Invariant or Space Invariant Artificial Neural Networks (SIANN), based on the shared-weight architecture of the convolution

In mathematics (in particular, functional analysis), convolution is a mathematical operation on two functions ( and ) that produces a third function (f*g) that expresses how the shape of one is modified by the other. The term ''convolution' ...

kernels or filters that slide along input features and provide translation-equivariant

In mathematics, equivariance is a form of symmetry for functions from one space with symmetry to another (such as symmetric spaces). A function is said to be an equivariant map when its domain and codomain are acted on by the same symmetry gro ...

responses known as feature maps. Counter-intuitively, most convolutional neural networks are not invariant to translation, due to the downsampling operation they apply to the input. They have applications in image and video recognition, recommender system

A recommender system, or a recommendation system (sometimes replacing 'system' with a synonym such as platform or engine), is a subclass of information filtering system that provide suggestions for items that are most pertinent to a particular ...

s, image classification

Computer vision is an interdisciplinary scientific field that deals with how computers can gain high-level understanding from digital images or videos. From the perspective of engineering, it seeks to understand and automate tasks that the human ...

, image segmentation

In digital image processing and computer vision, image segmentation is the process of partitioning a digital image into multiple image segments, also known as image regions or image objects ( sets of pixels). The goal of segmentation is to simpl ...

, medical image analysis, natural language processing

Natural language processing (NLP) is an interdisciplinary subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to proc ...

, brain–computer interface

A brain–computer interface (BCI), sometimes called a brain–machine interface (BMI) or smartbrain, is a direct communication pathway between the brain's electrical activity and an external device, most commonly a computer or robotic limb. B ...

s, and financial time series

In mathematics, a time series is a series of data points indexed (or listed or graphed) in time order. Most commonly, a time series is a sequence taken at successive equally spaced points in time. Thus it is a sequence of discrete-time data. E ...

.

CNNs are regularized versions of multilayer perceptron

A multilayer perceptron (MLP) is a fully connected class of feedforward artificial neural network (ANN). The term MLP is used ambiguously, sometimes loosely to mean ''any'' feedforward ANN, sometimes strictly to refer to networks composed of mul ...

s. Multilayer perceptrons usually mean fully connected networks, that is, each neuron in one layer

Layer or layered may refer to:

Arts, entertainment, and media

*Layers (Kungs album), ''Layers'' (Kungs album)

*Layers (Les McCann album), ''Layers'' (Les McCann album)

*Layers (Royce da 5'9" album), ''Layers'' (Royce da 5'9" album)

*"Layers", the ...

is connected to all neurons in the next layer

Layer or layered may refer to:

Arts, entertainment, and media

*Layers (Kungs album), ''Layers'' (Kungs album)

*Layers (Les McCann album), ''Layers'' (Les McCann album)

*Layers (Royce da 5'9" album), ''Layers'' (Royce da 5'9" album)

*"Layers", the ...

. The "full connectivity" of these networks make them prone to overfitting

mathematical modeling, overfitting is "the production of an analysis that corresponds too closely or exactly to a particular set of data, and may therefore fail to fit to additional data or predict future observations reliably". An overfitt ...

data. Typical ways of regularization, or preventing overfitting, include: penalizing parameters during training (such as weight decay) or trimming connectivity (skipped connections, dropout, etc.) CNNs take a different approach towards regularization: they take advantage of the hierarchical pattern in data and assemble patterns of increasing complexity using smaller and simpler patterns embossed in their filters. Therefore, on a scale of connectivity and complexity, CNNs are on the lower extreme.

Convolutional networks were inspired

Inspiration, inspire, or inspired often refers to:

* Artistic inspiration, sudden creativity in artistic production

* Biblical inspiration, the doctrine in Judeo-Christian theology concerned with the divine origin of the Bible

* Creative inspir ...

by biological

Biology is the scientific study of life. It is a natural science with a broad scope but has several unifying themes that tie it together as a single, coherent field. For instance, all organisms are made up of cells that process hereditary ...

processes in that the connectivity pattern between neurons

A neuron, neurone, or nerve cell is an electrically excitable cell that communicates with other cells via specialized connections called synapses. The neuron is the main component of nervous tissue in all animals except sponges and placozoa. ...

resembles the organization of the animal visual cortex

The visual cortex of the brain is the area of the cerebral cortex that processes visual information. It is located in the occipital lobe. Sensory input originating from the eyes travels through the lateral geniculate nucleus in the thalamus and ...

. Individual cortical neuron

The cerebral cortex, also known as the cerebral mantle, is the outer layer of neural tissue of the cerebrum of the brain in humans and other mammals. The cerebral cortex mostly consists of the six-layered neocortex, with just 10% consisting of ...

s respond to stimuli only in a restricted region of the visual field

The visual field is the "spatial array of visual sensations available to observation in introspectionist psychological experiments". Or simply, visual field can be defined as the entire area that can be seen when an eye is fixed straight at a point ...

known as the receptive field

The receptive field, or sensory space, is a delimited medium where some physiological stimuli can evoke a sensory neuronal response in specific organisms.

Complexity of the receptive field ranges from the unidimensional chemical structure of odo ...

. The receptive fields of different neurons partially overlap such that they cover the entire visual field.

CNNs use relatively little pre-processing compared to other image classification algorithms. This means that the network learns to optimize the filters

Filter, filtering or filters may refer to:

Science and technology

Computing

* Filter (higher-order function), in functional programming

* Filter (software), a computer program to process a data stream

* Filter (video), a software component th ...

(or kernels) through automated learning, whereas in traditional algorithms these filters are hand-engineered. This independence from prior knowledge and human intervention in feature extraction is a major advantage.

Definition

Convolutional neural networks are a specialized type of artificial neural networks that use a mathematical operation calledconvolution

In mathematics (in particular, functional analysis), convolution is a mathematical operation on two functions ( and ) that produces a third function (f*g) that expresses how the shape of one is modified by the other. The term ''convolution' ...

in place of general matrix multiplication in at least one of their layers. They are specifically designed to process pixel data and are used in image recognition and processing.

Architecture

convolution

In mathematics (in particular, functional analysis), convolution is a mathematical operation on two functions ( and ) that produces a third function (f*g) that expresses how the shape of one is modified by the other. The term ''convolution' ...

. In a convolutional neural network, the hidden layers include layers that perform convolutions. Typically this includes a layer that performs a dot product

In mathematics, the dot product or scalar productThe term ''scalar product'' means literally "product with a scalar as a result". It is also used sometimes for other symmetric bilinear forms, for example in a pseudo-Euclidean space. is an alg ...

of the convolution kernel with the layer's input matrix. This product is usually the Frobenius inner product

In mathematics, the Frobenius inner product is a binary operation that takes two matrices and returns a scalar. It is often denoted \langle \mathbf,\mathbf \rangle_\mathrm. The operation is a component-wise inner product of two matrices as thou ...

, and its activation function is commonly ReLU. As the convolution kernel slides along the input matrix for the layer, the convolution operation generates a feature map, which in turn contributes to the input of the next layer. This is followed by other layers such as pooling layers, fully connected layers, and normalization layers.

Convolutional layers

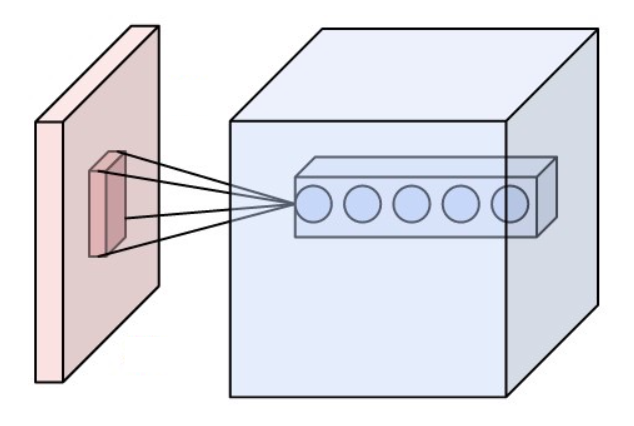

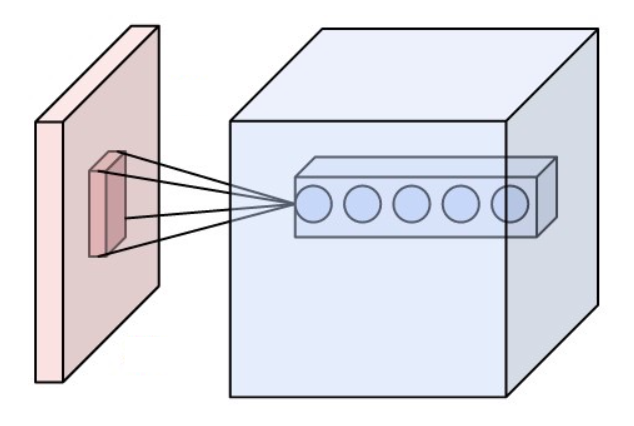

In a CNN, the input is atensor

In mathematics, a tensor is an algebraic object that describes a multilinear relationship between sets of algebraic objects related to a vector space. Tensors may map between different objects such as vectors, scalars, and even other tens ...

with a shape: (number of inputs) × (input height) × (input width) × (input channels). After passing through a convolutional layer, the image becomes abstracted to a feature map, also called an activation map, with shape: (number of inputs) × (feature map height) × (feature map width) × (feature map channels).

Convolutional layers convolve the input and pass its result to the next layer. This is similar to the response of a neuron in the visual cortex to a specific stimulus. Each convolutional neuron processes data only for its receptive field

The receptive field, or sensory space, is a delimited medium where some physiological stimuli can evoke a sensory neuronal response in specific organisms.

Complexity of the receptive field ranges from the unidimensional chemical structure of odo ...

. Although fully connected feedforward neural networks can be used to learn features and classify data, this architecture is generally impractical for larger inputs such as high-resolution images. It would require a very high number of neurons, even in a shallow architecture, due to the large input size of images, where each pixel is a relevant input feature. For instance, a fully connected layer for a (small) image of size 100 × 100 has 10,000 weights for ''each'' neuron in the second layer. Instead, convolution reduces the number of free parameters, allowing the network to be deeper. For example, regardless of image size, using a 5 × 5 tiling region, each with the same shared weights, requires only 25 learnable parameters. Using regularized weights over fewer parameters avoids the vanishing gradients and exploding gradients problems seen during backpropagation

In machine learning, backpropagation (backprop, BP) is a widely used algorithm for training feedforward artificial neural networks. Generalizations of backpropagation exist for other artificial neural networks (ANNs), and for functions gener ...

in traditional neural networks. Furthermore, convolutional neural networks are ideal for data with a grid-like topology (such as images) as spatial relations between separate features are taken into account during convolution and/or pooling.

Pooling layers

Convolutional networks may include local and/or global pooling layers along with traditional convolutional layers. Pooling layers reduce the dimensions of data by combining the outputs of neuron clusters at one layer into a single neuron in the next layer. Local pooling combines small clusters, tiling sizes such as 2 × 2 are commonly used. Global pooling acts on all the neurons of the feature map. There are two common types of pooling in popular use: max and average. ''Max pooling'' uses the maximum value of each local cluster of neurons in the feature map, while ''average pooling'' takes the average value.Fully connected layers

Fully connected layers connect every neuron in one layer to every neuron in another layer. It is the same as a traditionalmultilayer perceptron

A multilayer perceptron (MLP) is a fully connected class of feedforward artificial neural network (ANN). The term MLP is used ambiguously, sometimes loosely to mean ''any'' feedforward ANN, sometimes strictly to refer to networks composed of mul ...

neural network (MLP). The flattened matrix goes through a fully connected layer to classify the images.

Receptive field

In neural networks, each neuron receives input from some number of locations in the previous layer. In a convolutional layer, each neuron receives input from only a restricted area of the previous layer called the neuron's ''receptive field''. Typically the area is a square (e.g. 5 by 5 neurons). Whereas, in a fully connected layer, the receptive field is the ''entire previous layer''. Thus, in each convolutional layer, each neuron takes input from a larger area in the input than previous layers. This is due to applying the convolution over and over, which takes into account the value of a pixel, as well as its surrounding pixels. When using dilated layers, the number of pixels in the receptive field remains constant, but the field is more sparsely populated as its dimensions grow when combining the effect of several layers.Weights

Each neuron in a neural network computes an output value by applying a specific function to the input values received from the receptive field in the previous layer. The function that is applied to the input values is determined by a vector of weights and a bias (typically real numbers). Learning consists of iteratively adjusting these biases and weights. The vectors of weights and biases are called ''filters'' and represent particularfeature

Feature may refer to:

Computing

* Feature (CAD), could be a hole, pocket, or notch

* Feature (computer vision), could be an edge, corner or blob

* Feature (software design) is an intentional distinguishing characteristic of a software item ...

s of the input (e.g., a particular shape). A distinguishing feature of CNNs is that many neurons can share the same filter. This reduces the memory footprint

Memory footprint refers to the amount of main memory that a program uses or references while running.

The word footprint generally refers to the extent of physical dimensions that an object occupies, giving a sense of its size. In computing, the ...

because a single bias and a single vector of weights are used across all receptive fields that share that filter, as opposed to each receptive field having its own bias and vector weighting.

History

CNN are often compared to the way the brain achieves vision processing in livingorganisms

In biology, an organism () is any living system that functions as an individual entity. All organisms are composed of cells ( cell theory). Organisms are classified by taxonomy into groups such as multicellular animals, plants, and f ...

.

Receptive fields in the visual cortex

Work byHubel

Hubel, Hübel or Huebel is a German language topographic surname, denoting a person who lived near a hill (Middle High German ''hübel'' "hill") and may refer to:

* Allison Hubel, American mechanical engineer and cryobiologist

*David H. Hubel (1926 ...

and Wiesel in the 1950s and 1960s showed that cat visual cortices contain neurons that individually respond to small regions of the visual field

The visual field is the "spatial array of visual sensations available to observation in introspectionist psychological experiments". Or simply, visual field can be defined as the entire area that can be seen when an eye is fixed straight at a point ...

. Provided the eyes are not moving, the region of visual space within which visual stimuli affect the firing of a single neuron is known as its receptive field

The receptive field, or sensory space, is a delimited medium where some physiological stimuli can evoke a sensory neuronal response in specific organisms.

Complexity of the receptive field ranges from the unidimensional chemical structure of odo ...

. Neighboring cells have similar and overlapping receptive fields. Receptive field size and location varies systematically across the cortex to form a complete map of visual space. The cortex in each hemisphere represents the contralateral visual field

The visual field is the "spatial array of visual sensations available to observation in introspectionist psychological experiments". Or simply, visual field can be defined as the entire area that can be seen when an eye is fixed straight at a point ...

.

Their 1968 paper identified two basic visual cell types in the brain:

* simple cells, whose output is maximized by straight edges having particular orientations within their receptive field

* complex cells, which have larger receptive field

The receptive field, or sensory space, is a delimited medium where some physiological stimuli can evoke a sensory neuronal response in specific organisms.

Complexity of the receptive field ranges from the unidimensional chemical structure of odo ...

s, whose output is insensitive to the exact position of the edges in the field.

Hubel and Wiesel also proposed a cascading model of these two types of cells for use in pattern recognition tasks.

Neocognitron, origin of the CNN architecture

The "neocognitron

__NOTOC__

The neocognitron is a hierarchical, multilayered artificial neural network proposed by Kunihiko Fukushima in 1979. It has been used for Japanese Handwriting recognition, handwritten character recognition and other pattern recognition task ...

" was introduced by Kunihiko Fukushima in 1980.

It was inspired by the above-mentioned work of Hubel and Wiesel. The neocognitron introduced the two basic types of layers in CNNs: convolutional layers, and downsampling layers. A convolutional layer contains units whose receptive fields cover a patch of the previous layer. The weight vector (the set of adaptive parameters) of such a unit is often called a filter. Units can share filters. Downsampling layers contain units whose receptive fields cover patches of previous convolutional layers. Such a unit typically computes the average of the activations of the units in its patch. This downsampling helps to correctly classify objects in visual scenes even when the objects are shifted.

In a variant of the neocognitron called the cresceptron, instead of using Fukushima's spatial averaging, J. Weng et al. introduced a method called max-pooling where a downsampling unit computes the maximum of the activations of the units in its patch. Max-pooling is often used in modern CNNs.

Several supervised and unsupervised learning algorithms have been proposed over the decades to train the weights of a neocognitron. Today, however, the CNN architecture is usually trained through backpropagation

In machine learning, backpropagation (backprop, BP) is a widely used algorithm for training feedforward artificial neural networks. Generalizations of backpropagation exist for other artificial neural networks (ANNs), and for functions gener ...

.

The neocognitron

__NOTOC__

The neocognitron is a hierarchical, multilayered artificial neural network proposed by Kunihiko Fukushima in 1979. It has been used for Japanese Handwriting recognition, handwritten character recognition and other pattern recognition task ...

is the first CNN which requires units located at multiple network positions to have shared weights.

Convolutional neural networks were presented at the Neural Information Processing Workshop in 1987, automatically analyzing time-varying signals by replacing learned multiplication with convolution in time, and demonstrated for speech recognition.

Time delay neural networks

The time delay neural network (TDNN) was introduced in 1987 by Alex Waibel et al. and was one of the first convolutional networks, as it achieved shift invariance. It did so by utilizing weight sharing in combination withbackpropagation

In machine learning, backpropagation (backprop, BP) is a widely used algorithm for training feedforward artificial neural networks. Generalizations of backpropagation exist for other artificial neural networks (ANNs), and for functions gener ...

training. Alexander Waibel et al., Phoneme Recognition Using Time-Delay Neural Networks

' IEEE Transactions on Acoustics, Speech, and Signal Processing, Volume 37, No. 3, pp. 328. - 339 March 1989. Thus, while also using a pyramidal structure as in the neocognitron, it performed a global optimization of the weights instead of a local one. TDNNs are convolutional networks that share weights along the temporal dimension. They allow speech signals to be processed time-invariantly. In 1990 Hampshire and Waibel introduced a variant which performs a two dimensional convolution.John B. Hampshire and Alexander Waibel,

Connectionist Architectures for Multi-Speaker Phoneme Recognition

', Advances in Neural Information Processing Systems, 1990, Morgan Kaufmann. Since these TDNNs operated on spectrograms, the resulting phoneme recognition system was invariant to both shifts in time and in frequency. This inspired

translation invariance

In geometry, to translate a geometric figure is to move it from one place to another without rotating it. A translation "slides" a thing by .

In physics and mathematics, continuous translational symmetry is the invariance of a system of equatio ...

in image processing with CNNs. The tiling of neuron outputs can cover timed stages.

TDNNs now achieve the best performance in far distance speech recognition.

Max pooling

In 1990 Yamaguchi et al. introduced the concept of max pooling, which is a fixed filtering operation that calculates and propagates the maximum value of a given region. They did so by combining TDNNs with max pooling in order to realize a speaker independent isolated word recognition system. In their system they used several TDNNs per word, one for each syllable. The results of each TDNN over the input signal were combined using max pooling and the outputs of the pooling layers were then passed on to networks performing the actual word classification.Image recognition with CNNs trained by gradient descent

A system to recognize hand-written ZIP Code numbers involved convolutions in which the kernel coefficients had been laboriously hand designed.Y. LeCun, B. Boser, J. S. Denker, D. Henderson, R. E. Howard, W. Hubbard, L. D. JackelBackpropagation Applied to Handwritten Zip Code Recognition

AT&T Bell Laboratories

Yann LeCun

Yann André LeCun ( , ; originally spelled Le Cun; born 8 July 1960) is a French computer scientist working primarily in the fields of machine learning, computer vision, mobile robotics and computational neuroscience. He is the Silver Professor ...

et al. (1989) used back-propagation to learn the convolution kernel coefficients directly from images of hand-written numbers. Learning was thus fully automatic, performed better than manual coefficient design, and was suited to a broader range of image recognition problems and image types.

Wei Zhang et al. (1988) used back-propagation to train the convolution kernels of a CNN for alphabets recognition. The model was called Shift-Invariant Artificial Neural Network (SIANN) before the name CNN was coined later in the early 1990s. Wei Zhang et al. also applied the same CNN without the last fully connected layer for medical image object segmentation (1991) and breast cancer detection in mammograms (1994).

This approach became a foundation of modern computer vision

Computer vision is an Interdisciplinarity, interdisciplinary scientific field that deals with how computers can gain high-level understanding from digital images or videos. From the perspective of engineering, it seeks to understand and automate t ...

.

LeNet-5

LeNet-5, a pioneering 7-level convolutional network by LeCun et al. in 1998, that classifies digits, was applied by several banks to recognize hand-written numbers on checks () digitized in 32x32 pixel images. The ability to process higher-resolution images requires larger and more layers of convolutional neural networks, so this technique is constrained by the availability of computing resources.Shift-invariant neural network

A shift invariant neural network was proposed by Wei Zhang et al. for image character recognition in 1988. It is a modified Neocognitron by keeping only the convolutional interconnections between the image feature layers and the last fully connected layer. The model was trained with back-propagation. The training algorithm were further improved in 1991 to improve its generalization ability. The model architecture was modified by removing the last fully connected layer and applied for medical image segmentation (1991) and automatic detection of breast cancer in mammograms (1994). A different convolution-based design was proposed in 1988 for application to decomposition of one-dimensionalelectromyography

Electromyography (EMG) is a technique for evaluating and recording the electrical activity produced by skeletal muscles. EMG is performed using an instrument called an electromyograph to produce a record called an electromyogram. An electromyo ...

convolved signals via de-convolution. This design was modified in 1989 to other de-convolution-based designs.

Neural abstraction pyramid

The feed-forward architecture of convolutional neural networks was extended in the neural abstraction pyramid by lateral and feedback connections. The resulting recurrent convolutional network allows for the flexible incorporation of contextual information to iteratively resolve local ambiguities. In contrast to previous models, image-like outputs at the highest resolution were generated, e.g., for semantic segmentation, image reconstruction, and object localization tasks.

The feed-forward architecture of convolutional neural networks was extended in the neural abstraction pyramid by lateral and feedback connections. The resulting recurrent convolutional network allows for the flexible incorporation of contextual information to iteratively resolve local ambiguities. In contrast to previous models, image-like outputs at the highest resolution were generated, e.g., for semantic segmentation, image reconstruction, and object localization tasks.

GPU implementations

Although CNNs were invented in the 1980s, their breakthrough in the 2000s required fast implementations ongraphics processing unit

A graphics processing unit (GPU) is a specialized electronic circuit designed to manipulate and alter memory to accelerate the creation of images in a frame buffer intended for output to a display device. GPUs are used in embedded systems, mo ...

s (GPUs).

In 2004, it was shown by K. S. Oh and K. Jung that standard neural networks can be greatly accelerated on GPUs. Their implementation was 20 times faster than an equivalent implementation on CPU

A central processing unit (CPU), also called a central processor, main processor or just processor, is the electronic circuitry that executes instructions comprising a computer program. The CPU performs basic arithmetic, logic, controlling, an ...

. In 2005, another paper also emphasised the value of GPGPU

General-purpose computing on graphics processing units (GPGPU, or less often GPGP) is the use of a graphics processing unit (GPU), which typically handles computation only for computer graphics, to perform computation in applications traditiona ...

for machine learning

Machine learning (ML) is a field of inquiry devoted to understanding and building methods that 'learn', that is, methods that leverage data to improve performance on some set of tasks. It is seen as a part of artificial intelligence.

Machine ...

.

The first GPU-implementation of a CNN was described in 2006 by K. Chellapilla et al. Their implementation was 4 times faster than an equivalent implementation on CPU. Subsequent work also used GPUs, initially for other types of neural networks (different from CNNs), especially unsupervised neural networks.

In 2010, Dan Ciresan et al. at IDSIA showed that even deep standard neural networks with many layers can be quickly trained on GPU by supervised learning through the old method known as backpropagation

In machine learning, backpropagation (backprop, BP) is a widely used algorithm for training feedforward artificial neural networks. Generalizations of backpropagation exist for other artificial neural networks (ANNs), and for functions gener ...

. Their network outperformed previous machine learning methods on the MNIST handwritten digits benchmark. In 2011, they extended this GPU approach to CNNs, achieving an acceleration factor of 60, with impressive results. In 2011, they used such CNNs on GPU to win an image recognition contest where they achieved superhuman performance for the first time. Between May 15, 2011 and September 30, 2012, their CNNs won no less than four image competitions. In 2012, they also significantly improved on the best performance in the literature for multiple image database

In computing, a database is an organized collection of data stored and accessed electronically. Small databases can be stored on a file system, while large databases are hosted on computer clusters or cloud storage. The design of databases spa ...

s, including the MNIST database

The MNIST database (''Modified National Institute of Standards and Technology database'') is a large database of handwritten digits that is commonly used for training various image processing systems. The database is also widely used for training a ...

, the NORB database, the HWDB1.0 dataset (Chinese characters) and the CIFAR10 dataset (dataset of 60000 32x32 labeled RGB images

Color digital images are made of pixels, and pixels are made of combinations of primary colors represented by a series of code. A channel in this context is the grayscale image of the same size as a color image, made of just one of these primary co ...

).

Subsequently, a similar GPU-based CNN by Alex Krizhevsky et al. won the ImageNet Large Scale Visual Recognition Challenge 2012. A very deep CNN with over 100 layers by Microsoft won the ImageNet 2015 contest.

Intel Xeon Phi implementations

Compared to the training of CNNs usingGPU

A graphics processing unit (GPU) is a specialized electronic circuit designed to manipulate and alter memory to accelerate the creation of images in a frame buffer intended for output to a display device. GPUs are used in embedded systems, mob ...

s, not much attention was given to the Intel Xeon Phi

Xeon Phi was a series of x86 manycore processors designed and made by Intel. It was intended for use in supercomputers, servers, and high-end workstations. Its architecture allowed use of standard programming languages and application progra ...

coprocessor.

A notable development is a parallelization method for training convolutional neural networks on the Intel Xeon Phi, named Controlled Hogwild with Arbitrary Order of Synchronization (CHAOS).

CHAOS exploits both the thread- and SIMD

Single instruction, multiple data (SIMD) is a type of parallel processing in Flynn's taxonomy. SIMD can be internal (part of the hardware design) and it can be directly accessible through an instruction set architecture (ISA), but it should ...

-level parallelism that is available on the Intel Xeon Phi.

Distinguishing features

In the past, traditionalmultilayer perceptron

A multilayer perceptron (MLP) is a fully connected class of feedforward artificial neural network (ANN). The term MLP is used ambiguously, sometimes loosely to mean ''any'' feedforward ANN, sometimes strictly to refer to networks composed of mul ...

(MLP) models were used for image recognition. However, the full connectivity between nodes caused the curse of dimensionality

The curse of dimensionality refers to various phenomena that arise when analyzing and organizing data in high-dimensional spaces that do not occur in low-dimensional settings such as the three-dimensional physical space of everyday experience. Th ...

, and was computationally intractable with higher-resolution images. A 1000×1000-pixel image with RGB color

The RGB color model is an additive color model in which the red, green and blue primary colors of light are added together in various ways to reproduce a broad array of colors. The name of the model comes from the initials of the three addi ...

channels has 3 million weights per fully-connected neuron, which is too high to feasibly process efficiently at scale.

For example, in CIFAR-10, images are only of size 32×32×3 (32 wide, 32 high, 3 color channels), so a single fully connected neuron in the first hidden layer of a regular neural network would have 32*32*3 = 3,072 weights. A 200×200 image, however, would lead to neurons that have 200*200*3 = 120,000 weights.

Also, such network architecture does not take into account the spatial structure of data, treating input pixels which are far apart in the same way as pixels that are close together. This ignores

For example, in CIFAR-10, images are only of size 32×32×3 (32 wide, 32 high, 3 color channels), so a single fully connected neuron in the first hidden layer of a regular neural network would have 32*32*3 = 3,072 weights. A 200×200 image, however, would lead to neurons that have 200*200*3 = 120,000 weights.

Also, such network architecture does not take into account the spatial structure of data, treating input pixels which are far apart in the same way as pixels that are close together. This ignores locality of reference

In computer science, locality of reference, also known as the principle of locality, is the tendency of a processor to access the same set of memory locations repetitively over a short period of time. There are two basic types of reference localit ...

in data with a grid-topology (such as images), both computationally and semantically. Thus, full connectivity of neurons is wasteful for purposes such as image recognition that are dominated by spatially local input patterns.

Convolutional neural networks are variants of multilayer perceptrons, designed to emulate the behavior of a visual cortex

The visual cortex of the brain is the area of the cerebral cortex that processes visual information. It is located in the occipital lobe. Sensory input originating from the eyes travels through the lateral geniculate nucleus in the thalamus and ...

. These models mitigate the challenges posed by the MLP architecture by exploiting the strong spatially local correlation present in natural images. As opposed to MLPs, CNNs have the following distinguishing features:

* 3D volumes of neurons. The layers of a CNN have neurons arranged in 3 dimensions

Three-dimensional space (also: 3D space, 3-space or, rarely, tri-dimensional space) is a geometric setting in which three values (called ''parameters'') are required to determine the position of an element (i.e., point). This is the informal ...

: width, height and depth. Where each neuron inside a convolutional layer is connected to only a small region of the layer before it, called a receptive field. Distinct types of layers, both locally and completely connected, are stacked to form a CNN architecture.

* Local connectivity: following the concept of receptive fields, CNNs exploit spatial locality by enforcing a local connectivity pattern between neurons of adjacent layers. The architecture thus ensures that the learned "filters

Filter, filtering or filters may refer to:

Science and technology

Computing

* Filter (higher-order function), in functional programming

* Filter (software), a computer program to process a data stream

* Filter (video), a software component th ...

" produce the strongest response to a spatially local input pattern. Stacking many such layers leads to nonlinear filters that become increasingly global (i.e. responsive to a larger region of pixel space) so that the network first creates representations of small parts of the input, then from them assembles representations of larger areas.

* Shared weights: In CNNs, each filter is replicated across the entire visual field. These replicated units share the same parameterization (weight vector and bias) and form a feature map. This means that all the neurons in a given convolutional layer respond to the same feature within their specific response field. Replicating units in this way allows for the resulting activation map to be equivariant

In mathematics, equivariance is a form of symmetry for functions from one space with symmetry to another (such as symmetric spaces). A function is said to be an equivariant map when its domain and codomain are acted on by the same symmetry gro ...

under shifts of the locations of input features in the visual field, i.e. they grant translational equivariance - given that the layer has a stride of one.

* Pooling: In a CNN's pooling layers, feature maps are divided into rectangular sub-regions, and the features in each rectangle are independently down-sampled to a single value, commonly by taking their average or maximum value. In addition to reducing the sizes of feature maps, the pooling operation grants a degree of local translational invariance

In geometry, to translate a geometric figure is to move it from one place to another without rotating it. A translation "slides" a thing by .

In physics and mathematics, continuous translational symmetry is the invariance of a system of equatio ...

to the features contained therein, allowing the CNN to be more robust to variations in their positions.

Together, these properties allow CNNs to achieve better generalization on vision problems

Visual impairment, also known as vision impairment, is a medical definition primarily measured based on an individual's better eye visual acuity; in the absence of treatment such as correctable eyewear, assistive devices, and medical treatment� ...

. Weight sharing dramatically reduces the number of free parameter

A free parameter is a variable in a mathematical model which cannot be predicted precisely or constrained by the model and must be estimated experimentally or theoretically. A mathematical model, theory, or conjecture is more likely to be right a ...

s learned, thus lowering the memory requirements for running the network and allowing the training of larger, more powerful networks.

Building blocks

A CNN architecture is formed by a stack of distinct layers that transform the input volume into an output volume (e.g. holding the class scores) through a differentiable function. A few distinct types of layers are commonly used. These are further discussed below.

Convolutional layer

The convolutional layer is the core building block of a CNN. The layer's parameters consist of a set of learnablefilters

Filter, filtering or filters may refer to:

Science and technology

Computing

* Filter (higher-order function), in functional programming

* Filter (software), a computer program to process a data stream

* Filter (video), a software component th ...

(or kernels), which have a small receptive field, but extend through the full depth of the input volume. During the forward pass, each filter is convolved across the width and height of the input volume, computing the dot product

In mathematics, the dot product or scalar productThe term ''scalar product'' means literally "product with a scalar as a result". It is also used sometimes for other symmetric bilinear forms, for example in a pseudo-Euclidean space. is an alg ...

between the filter entries and the input, producing a 2-dimensional activation map of that filter. As a result, the network learns filters that activate when it detects some specific type of feature

Feature may refer to:

Computing

* Feature (CAD), could be a hole, pocket, or notch

* Feature (computer vision), could be an edge, corner or blob

* Feature (software design) is an intentional distinguishing characteristic of a software item ...

at some spatial position in the input., pp. 448When applied to other types of data than image data, such as sound data, "spatial position" may variously correspond to different points in the time domain

Time domain refers to the analysis of mathematical functions, physical signals or time series of economic or environmental data, with respect to time. In the time domain, the signal or function's value is known for all real numbers, for the ...

, frequency domain

In physics, electronics, control systems engineering, and statistics, the frequency domain refers to the analysis of mathematical functions or signals with respect to frequency, rather than time. Put simply, a time-domain graph shows how a ...

, or other mathematical spaces.

Stacking the activation maps for all filters along the depth dimension forms the full output volume of the convolution layer. Every entry in the output volume can thus also be interpreted as an output of a neuron that looks at a small region in the input and shares parameters with neurons in the same activation map.

Local connectivity

When dealing with high-dimensional inputs such as images, it is impractical to connect neurons to all neurons in the previous volume because such a network architecture does not take the spatial structure of the data into account. Convolutional networks exploit spatially local correlation by enforcing a sparse local connectivity pattern between neurons of adjacent layers: each neuron is connected to only a small region of the input volume.

The extent of this connectivity is a hyperparameter called the

When dealing with high-dimensional inputs such as images, it is impractical to connect neurons to all neurons in the previous volume because such a network architecture does not take the spatial structure of the data into account. Convolutional networks exploit spatially local correlation by enforcing a sparse local connectivity pattern between neurons of adjacent layers: each neuron is connected to only a small region of the input volume.

The extent of this connectivity is a hyperparameter called the receptive field

The receptive field, or sensory space, is a delimited medium where some physiological stimuli can evoke a sensory neuronal response in specific organisms.

Complexity of the receptive field ranges from the unidimensional chemical structure of odo ...

of the neuron. The connections are local in space (along width and height), but always extend along the entire depth of the input volume. Such an architecture ensures that the learnt filters produce the strongest response to a spatially local input pattern.

Spatial arrangement

Threehyperparameters

In Bayesian statistics, a hyperparameter is a parameter of a prior distribution; the term is used to distinguish them from parameters of the model for the underlying system under analysis.

For example, if one is using a beta distribution to m ...

control the size of the output volume of the convolutional layer: the depth, stride

Stride or STRIDE may refer to:

Computing

* STRIDE (security), spoofing, tampering, repudiation, information disclosure, denial of service, elevation of privilege

* Stride (software), a successor to the cloud-based HipChat, a corporate cloud-based ...

, and padding size:

* The ''depth'' of the output volume controls the number of neurons in a layer that connect to the same region of the input volume. These neurons learn to activate for different features in the input. For example, if the first convolutional layer takes the raw image as input, then different neurons along the depth dimension may activate in the presence of various oriented edges, or blobs of color.

*''Stride'' controls how depth columns around the width and height are allocated. If the stride is 1, then we move the filters one pixel at a time. This leads to heavily overlapping receptive fields between the columns, and to large output volumes. For any integer a stride ''S'' means that the filter is translated ''S'' units at a time per output. In practice, is rare. A greater stride means smaller overlap of receptive fields and smaller spatial dimensions of the output volume.

* Sometimes, it is convenient to pad the input with zeros (or other values, such as the average of the region) on the border of the input volume. The size of this padding is a third hyperparameter. Padding provides control of the output volume's spatial size. In particular, sometimes it is desirable to exactly preserve the spatial size of the input volume, this is commonly referred to as "same" padding.

The spatial size of the output volume is a function of the input volume size , the kernel field size of the convolutional layer neurons, the stride , and the amount of zero padding on the border. The number of neurons that "fit" in a given volume is then:

:

If this number is not an integer

An integer is the number zero (), a positive natural number (, , , etc.) or a negative integer with a minus sign ( −1, −2, −3, etc.). The negative numbers are the additive inverses of the corresponding positive numbers. In the language ...

, then the strides are incorrect and the neurons cannot be tiled to fit across the input volume in a symmetric

Symmetry (from grc, συμμετρία "agreement in dimensions, due proportion, arrangement") in everyday language refers to a sense of harmonious and beautiful proportion and balance. In mathematics, "symmetry" has a more precise definit ...

way. In general, setting zero padding to be when the stride is ensures that the input volume and output volume will have the same size spatially. However, it is not always completely necessary to use all of the neurons of the previous layer. For example, a neural network designer may decide to use just a portion of padding.

Parameter sharing

A parameter sharing scheme is used in convolutional layers to control the number of free parameters. It relies on the assumption that if a patch feature is useful to compute at some spatial position, then it should also be useful to compute at other positions. Denoting a single 2-dimensional slice of depth as a ''depth slice'', the neurons in each depth slice are constrained to use the same weights and bias. Since all neurons in a single depth slice share the same parameters, the forward pass in each depth slice of the convolutional layer can be computed as aconvolution

In mathematics (in particular, functional analysis), convolution is a mathematical operation on two functions ( and ) that produces a third function (f*g) that expresses how the shape of one is modified by the other. The term ''convolution' ...

of the neuron's weights with the input volume.hence the name "convolutional layer" Therefore, it is common to refer to the sets of weights as a filter (or a kernel

Kernel may refer to:

Computing

* Kernel (operating system), the central component of most operating systems

* Kernel (image processing), a matrix used for image convolution

* Compute kernel, in GPGPU programming

* Kernel method, in machine lea ...

), which is convolved with the input. The result of this convolution is an activation map, and the set of activation maps for each different filter are stacked together along the depth dimension to produce the output volume. Parameter sharing contributes to the translation invariance

In geometry, to translate a geometric figure is to move it from one place to another without rotating it. A translation "slides" a thing by .

In physics and mathematics, continuous translational symmetry is the invariance of a system of equatio ...

of the CNN architecture.

Sometimes, the parameter sharing assumption may not make sense. This is especially the case when the input images to a CNN have some specific centered structure; for which we expect completely different features to be learned on different spatial locations. One practical example is when the inputs are faces that have been centered in the image: we might expect different eye-specific or hair-specific features to be learned in different parts of the image. In that case it is common to relax the parameter sharing scheme, and instead simply call the layer a "locally connected layer".

Pooling layer

Another important concept of CNNs is pooling, which is a form of non-linear down-sampling. There are several non-linear functions to implement pooling, where ''max pooling'' is the most common. It partitions the input image into a set of rectangles and, for each such sub-region, outputs the maximum.

Intuitively, the exact location of a feature is less important than its rough location relative to other features. This is the idea behind the use of pooling in convolutional neural networks. The pooling layer serves to progressively reduce the spatial size of the representation, to reduce the number of parameters,

Another important concept of CNNs is pooling, which is a form of non-linear down-sampling. There are several non-linear functions to implement pooling, where ''max pooling'' is the most common. It partitions the input image into a set of rectangles and, for each such sub-region, outputs the maximum.

Intuitively, the exact location of a feature is less important than its rough location relative to other features. This is the idea behind the use of pooling in convolutional neural networks. The pooling layer serves to progressively reduce the spatial size of the representation, to reduce the number of parameters, memory footprint

Memory footprint refers to the amount of main memory that a program uses or references while running.

The word footprint generally refers to the extent of physical dimensions that an object occupies, giving a sense of its size. In computing, the ...

and amount of computation in the network, and hence to also control overfitting

mathematical modeling, overfitting is "the production of an analysis that corresponds too closely or exactly to a particular set of data, and may therefore fail to fit to additional data or predict future observations reliably". An overfitt ...

. This is known as down-sampling. It is common to periodically insert a pooling layer between successive convolutional layers (each one typically followed by an activation function, such as a ReLU layer) in a CNN architecture. While pooling layers contribute to local translation invariance, they do not provide global translation invariance in a CNN, unless a form of global pooling is used. The pooling layer commonly operates independently on every depth, or slice, of the input and resizes it spatially. A very common form of max pooling is a layer with filters of size 2×2, applied with a stride of 2, which subsamples every depth slice in the input by 2 along both width and height, discarding 75% of the activations:

In this case, every max operation is over 4 numbers. The depth dimension remains unchanged (this is true for other forms of pooling as well).

In addition to max pooling, pooling units can use other functions, such as average

In ordinary language, an average is a single number taken as representative of a list of numbers, usually the sum of the numbers divided by how many numbers are in the list (the arithmetic mean). For example, the average of the numbers 2, 3, 4, 7, ...

pooling or ℓ2-norm pooling. Average pooling was often used historically but has recently fallen out of favor compared to max pooling, which generally performs better in practice.

Due to the effects of fast spatial reduction of the size of the representation, there is a recent trend towards using smaller filters or discarding pooling layers altogether.

" Region of Interest" pooling (also known as RoI pooling) is a variant of max pooling, in which output size is fixed and input rectangle is a parameter.

Pooling is a downsampling method and an important component of convolutional neural networks for

" Region of Interest" pooling (also known as RoI pooling) is a variant of max pooling, in which output size is fixed and input rectangle is a parameter.

Pooling is a downsampling method and an important component of convolutional neural networks for object detection

Object detection is a computer technology related to computer vision and image processing that deals with detecting instances of semantic objects of a certain class (such as humans, buildings, or cars) in digital images and videos. Well-researched ...

based on the Fast R-CNN architecture. Channel Max Pooling

A CMP operation layer conducts the MP operation along the channel side among the corresponding positions of the consecutive feature maps for the purpose of redundant information elimination. The CMP makes the significant features gather together within fewer channels, which is important for fine-grained image classification that needs more discriminating features. Meanwhile, another advantage of the CMP operation is to make the channel number of feature maps smaller before it connects to the first fully connected (FC) layer. Similar to the MP operation, we denote the input feature maps and output feature maps of a CMP layer as F ∈ R(C×M×N) and C ∈ R(c×M×N), respectively, where C and c are the channel numbers of the input and output feature maps, M and N are the widths and the height of the feature maps, respectively. Note that the CMP operation only changes the channel number of the feature maps. The width and the height of the feature maps are not changed, which is different from the MP operation.ReLU layer

ReLU is the abbreviation ofrectified linear unit

In the context of artificial neural networks, the rectifier or ReLU (rectified linear unit) activation function is an activation function defined as the positive part of its argument:

: f(x) = x^+ = \max(0, x),

where ''x'' is the input to a n ...

, which applies the non-saturating activation function

In artificial neural networks, the activation function of a node defines the output of that node given an input or set of inputs.

A standard integrated circuit can be seen as a digital network of activation functions that can be "ON" (1) or " ...

. It effectively removes negative values from an activation map by setting them to zero. It introduces nonlinearities to the decision function and in the overall network without affecting the receptive fields of the convolution layers.

Other functions can also be used to increase nonlinearity, for example the saturating hyperbolic tangent

In mathematics, hyperbolic functions are analogues of the ordinary trigonometric functions, but defined using the hyperbola rather than the circle. Just as the points form a circle with a unit radius, the points form the right half of the ...

, , and the sigmoid function

A sigmoid function is a mathematical function having a characteristic "S"-shaped curve or sigmoid curve.

A common example of a sigmoid function is the logistic function shown in the first figure and defined by the formula:

:S(x) = \frac = \ ...

. ReLU is often preferred to other functions because it trains the neural network several times faster without a significant penalty to generalization

A generalization is a form of abstraction whereby common properties of specific instances are formulated as general concepts or claims. Generalizations posit the existence of a domain or set of elements, as well as one or more common character ...

accuracy.

Fully connected layer

After several convolutional and max pooling layers, the final classification is done via fully connected layers. Neurons in a fully connected layer have connections to all activations in the previous layer, as seen in regular (non-convolutional)artificial neural network

Artificial neural networks (ANNs), usually simply called neural networks (NNs) or neural nets, are computing systems inspired by the biological neural networks that constitute animal brains.

An ANN is based on a collection of connected units ...

s. Their activations can thus be computed as an affine transformation

In Euclidean geometry, an affine transformation or affinity (from the Latin, ''affinis'', "connected with") is a geometric transformation that preserves lines and parallelism, but not necessarily Euclidean distances and angles.

More generall ...

, with matrix multiplication

In mathematics, particularly in linear algebra, matrix multiplication is a binary operation that produces a matrix from two matrices. For matrix multiplication, the number of columns in the first matrix must be equal to the number of rows in the ...

followed by a bias offset (vector addition

In mathematics, physics, and engineering, a Euclidean vector or simply a vector (sometimes called a geometric vector or spatial vector) is a geometric object that has Magnitude (mathematics), magnitude (or euclidean norm, length) and Direction ( ...

of a learned or fixed bias term).

Loss layer

The "loss layer", or "loss function

In mathematical optimization and decision theory, a loss function or cost function (sometimes also called an error function) is a function that maps an event or values of one or more variables onto a real number intuitively representing some "co ...

", specifies how training

Training is teaching, or developing in oneself or others, any skills and knowledge or fitness that relate to specific useful competencies. Training has specific goals of improving one's capability, capacity, productivity and performance. I ...

penalizes the deviation between the predicted output of the network, and the true

True most commonly refers to truth, the state of being in congruence with fact or reality.

True may also refer to:

Places

* True, West Virginia, an unincorporated community in the United States

* True, Wisconsin, a town in the United States

* ...

data labels (during supervised learning). Various loss function

In mathematical optimization and decision theory, a loss function or cost function (sometimes also called an error function) is a function that maps an event or values of one or more variables onto a real number intuitively representing some "co ...

s can be used, depending on the specific task.

The Softmax

The softmax function, also known as softargmax or normalized exponential function, converts a vector of real numbers into a probability distribution of possible outcomes. It is a generalization of the logistic function to multiple dimensions, a ...

loss function is used for predicting a single class of ''K'' mutually exclusive classes.So-called categorical data

In statistics, a categorical variable (also called qualitative variable) is a variable that can take on one of a limited, and usually fixed, number of possible values, assigning each individual or other unit of observation to a particular group o ...

. Sigmoid cross-entropy loss is used for predicting ''K'' independent probability values in