Multilayer Perceptron

In deep learning, a multilayer perceptron (MLP) is a name for a modern feedforward neural network consisting of fully connected neurons with nonlinear activation functions, organized in layers, notable for being able to distinguish data that is not linearly separable (Cybenko, G. 1989. "Approximation by superpositions of a sigmoidal function." *Mathematics of Control, Signals, and Systems*, 2(4), 303–314). Modern neural networks are trained using backpropagation (Rumelhart, David E., Geoffrey E. Hinton, and R. J. Williams. "Learning Internal Representations by Error Propagation." In David E. Rumelhart, James L. McClelland, and the PDP Research Group (eds.), *Parallel Distributed Processing: Explorations in the Microstructure of Cognition, Volume 1: Foundations*. MIT Press, 1986) and are colloquially referred to as "vanilla" networks. MLPs grew out of an effort to improve single-layer perceptrons, which could only be applied to linearly separable data. A perceptron traditionally used ...
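As an illustration of the claim about data that is not linearly separable, the following minimal sketch builds a two-layer fully connected network that computes XOR, a function no single-layer perceptron can represent. The weights are hand-picked for the example rather than trained, and the names `dense`, `relu`, and `xor_mlp` are hypothetical helpers, not part of any library.

```python
def relu(z):
    """Nonlinear activation: pass positive values, clamp negatives to zero."""
    return max(0.0, z)


def dense(inputs, weights, biases, activation):
    """One fully connected layer: every output neuron sees every input."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hand-picked (not trained) parameters, chosen only for this illustration.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[1.0, -2.0]]
OUTPUT_B = [0.0]


def xor_mlp(x1, x2):
    """Forward pass: 2 inputs -> 2 hidden ReLU neurons -> 1 linear output."""
    hidden = dense([x1, x2], HIDDEN_W, HIDDEN_B, relu)
    output = dense(hidden, OUTPUT_W, OUTPUT_B, lambda z: z)
    return output[0]


if __name__ == "__main__":
    for a in (0.0, 1.0):
        for b in (0.0, 1.0):
            print(a, b, xor_mlp(a, b))
```

Removing the `relu` call from the hidden layer would collapse the two layers into a single linear map, which is why the nonlinear activation is essential here.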

Deep Learning

Deep learning is a subset of machine learning that focuses on utilizing multilayered neural networks to perform tasks such as classification, regression, and representation learning. The field takes inspiration from biological neuroscience and is centered around stacking artificial neurons into layers and "training" them to process data. The adjective "deep" refers to the use of multiple layers (ranging from three to several hundred or thousands) in the network. Methods used can be supervised, semi-supervised, or unsupervised. Some common deep learning network architectures include fully connected networks, deep belief networks, recurrent neural networks, convolutional neural networks, generative adversarial networks, transformers, and neural radiance fields. These architectures have been applied to fields including computer vision, speech recognition, natural language processing, machine translation, bioinformatics, drug design, medical image analysis, c ...

Alexey Grigorevich Ivakhnenko

Alexey Ivakhnenko (30 March 1913 – 16 October 2007) was a Soviet and Ukrainian mathematician most famous for developing the group method of data handling (GMDH), a method of inductive statistical learning, for which he is considered one of the founders of deep learning. Early life and education: Alexey was born in Kobelyaky, Poltava Governorate, into a family of teachers. In 1932 he graduated from the Electrotechnical college in Kyiv and worked for two years as an engineer on the construction of a large power plant in Berezniki. In 1938, after graduating from the Leningrad Electrotechnical Institute, Ivakhnenko worked at the All-Union Electrotechnical Institute in Moscow during wartime. There he investigated problems of automatic control in the laboratory led by Sergey Lebedev. He continued research in other institutions in Ukraine after returning to Kyiv in 1944. That year he received his Ph.D., and in 1954 he received his D.Sc. In 1964, he ...

Synaptic Weight

In neuroscience and computer science, synaptic weight refers to the strength or amplitude of a connection between two nodes, corresponding in biology to the amount of influence the firing of one neuron has on another. The term is typically used in artificial and biological neural network research. Computation: In a computational neural network, a vector or set of inputs $\mathbf{x}$ and outputs $\mathbf{y}$, or pre- and post-synaptic neurons respectively, are interconnected with synaptic weights represented by the matrix $w$, where for a linear neuron $y_j = \sum_i w_{ji} x_i$, or $\mathbf{y} = w\mathbf{x}$, where the rows of the synaptic matrix represent the vector of synaptic weights for the output indexed by $j$. The synaptic weight is changed by using a learning rule, the most basic of which is Hebb's rule, which is usually stated in biological terms as "Neurons that fire together, wire together." Computationally, this means that if a large signal from one of the input neurons results in a ...
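The linear-neuron computation described above can be sketched in a few lines. This is an illustrative example only: it assumes, as the text states, that each row of the weight matrix holds the synaptic weights feeding one output neuron, and the function name `linear_layer` and the numeric values are made up for the demonstration.

```python
def linear_layer(w, x):
    """Compute y = w x for linear neurons.

    w is a list of rows; row j holds the weights for output j (assumed layout).
    x is the list of pre-synaptic (input) activations.
    """
    return [sum(w_ji * x_i for w_ji, x_i in zip(row, x)) for row in w]


# Illustrative values: 3 input neurons feeding 2 output neurons.
w = [
    [0.5, -1.0, 2.0],   # weights into output neuron 0
    [1.0, 0.0, 0.25],   # weights into output neuron 1
]
x = [2.0, 1.0, 4.0]

y = linear_layer(w, x)

if __name__ == "__main__":
    print(y)  # [8.0, 3.0]
```

A zero entry such as `w[1][1]` corresponds to an input that has no influence on that output, which is the computational analogue of an absent synapse.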

Image Classification

Computer vision tasks include methods for acquiring, processing, analyzing, and understanding digital images, and extraction of high-dimensional data from the real world in order to produce numerical or symbolic information, e.g. in the form of decisions. "Understanding" in this context signifies the transformation of visual images (the input to the retina) into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory. The scientific discipline of computer vision is concerned with the theory behind artificial systems that extract information from images. Image data can take many forms, such as video sequences, views from multiple cameras, multi-dimensional data from a 3D scanner, 3D point clouds from LiDAR sensors, or medical scanning devices. ...

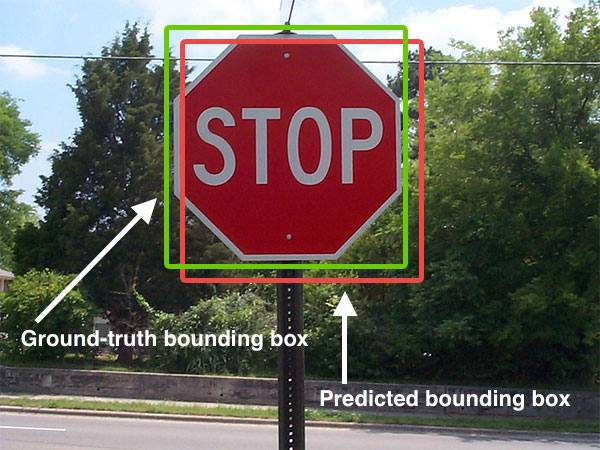

ImageNet

The ImageNet project is a large visual database designed for use in visual object recognition software research. More than 14 million images have been hand-annotated by the project to indicate what objects are pictured, and in at least one million of the images, bounding boxes are also provided. ImageNet contains more than 20,000 categories, with a typical category, such as "balloon" or "strawberry", consisting of several hundred images. The database of annotations of third-party image URLs is freely available directly from ImageNet, though the actual images are not owned by ImageNet. Since 2010, the ImageNet project has run an annual software contest, the ImageNet Large Scale Visual Recognition Challenge (ILSVRC), where software programs compete to correctly classify and detect objects and scenes. The challenge uses a "trimmed" list of one thousand non-overlapping classes. History: AI researcher Fei-Fei Li began working ...

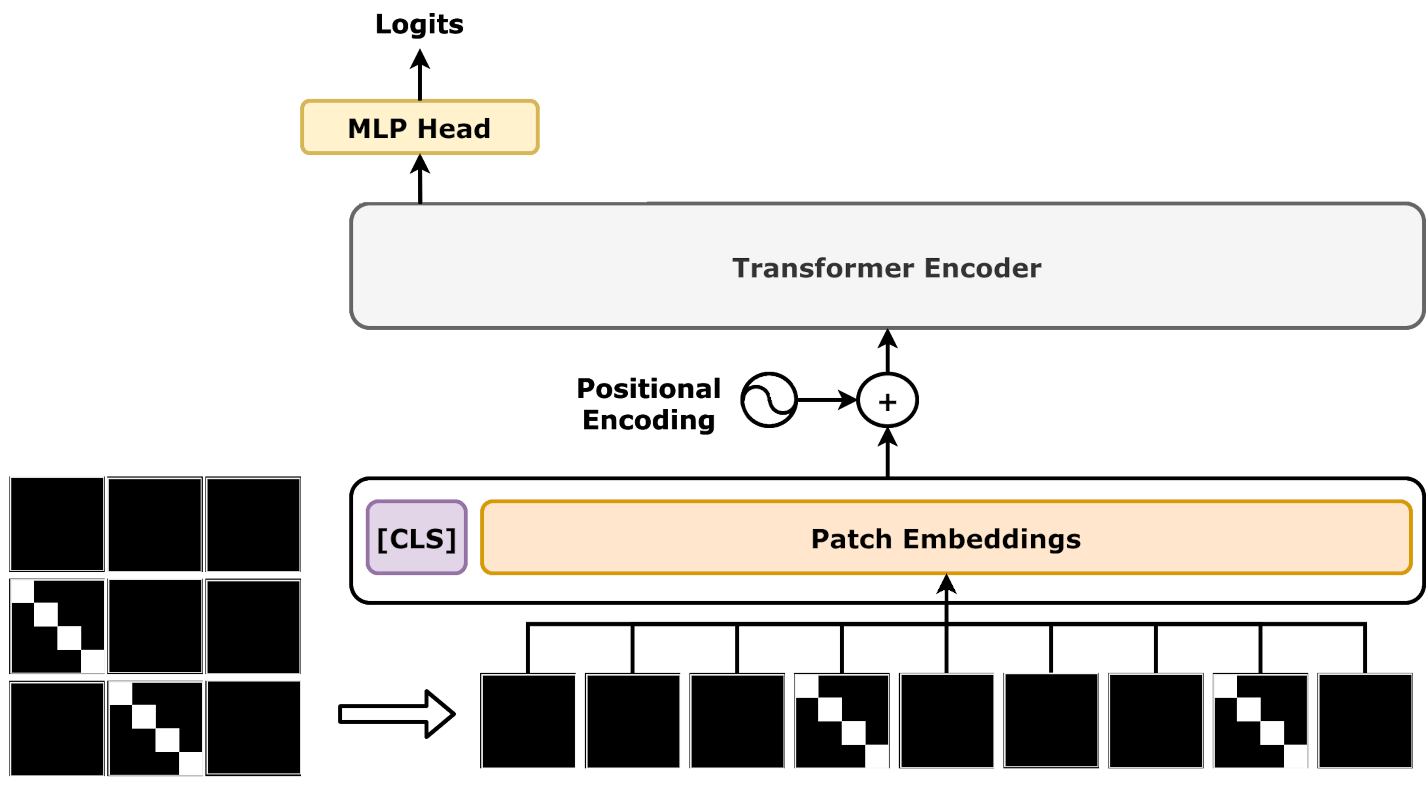

Vision Transformer

A vision transformer (ViT) is a transformer designed for computer vision. A ViT decomposes an input image into a series of patches (rather than text into tokens), serializes each patch into a vector, and maps it to a smaller dimension with a single matrix multiplication. These vector embeddings are then processed by a transformer encoder as if they were token embeddings. ViTs were designed as alternatives to convolutional neural networks (CNNs) in computer vision applications. They have different inductive biases, training stability, and data efficiency. Compared to CNNs, ViTs are less data efficient, but have higher capacity. Some of the largest modern computer vision models are ViTs, such as one with 22B parameters. Subsequent to its publication, many variants were proposed, including hybrid architectures that combine features of ViTs and CNNs. ViTs have found application in image recognition, ...
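The patch step described above (split the image, serialize each patch into a vector, project it with one matrix multiplication) can be sketched as follows. This is a toy illustration under stated assumptions: a single-channel image stored as a list of rows, a fixed hand-written projection matrix instead of learned weights, and hypothetical helper names `image_to_patches` and `embed_patches`. The transformer encoder itself is not shown.

```python
def image_to_patches(image, patch_size):
    """Split a 2D image (list of rows) into flattened square patches.

    Patches are returned in row-major order, each serialized to a flat list.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patches.append([
                image[r][c]
                for r in range(top, top + patch_size)
                for c in range(left, left + patch_size)
            ])
    return patches


def embed_patches(patches, projection):
    """Map each flattened patch to a smaller vector: embedding = patch @ projection."""
    embed_dim = len(projection[0])
    return [
        [sum(patch[k] * projection[k][d] for k in range(len(patch)))
         for d in range(embed_dim)]
        for patch in patches
    ]


# Toy 4x4 image split into four 2x2 patches (each serialized to length 4).
image = [
    [0, 1, 2, 3],
    [4, 5, 6, 7],
    [8, 9, 10, 11],
    [12, 13, 14, 15],
]
patches = image_to_patches(image, 2)

# Illustrative 4 -> 2 projection; in a real model these weights are learned.
projection = [[1, 0], [0, 1], [1, 0], [0, 1]]
embeddings = embed_patches(patches, projection)

if __name__ == "__main__":
    print(patches[0])     # [0, 1, 4, 5]
    print(embeddings[0])  # [4, 6]
```

Each resulting embedding plays the role that a token embedding plays in a text transformer.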

Yoshua Bengio

Yoshua Bengio (born March 5, 1964) is a Canadian-French computer scientist and a pioneer of artificial neural networks and deep learning. He is a professor at the Université de Montréal and scientific director of the AI institute MILA. Bengio received the 2018 ACM A.M. Turing Award, often referred to as the "Nobel Prize of Computing", together with Geoffrey Hinton and Yann LeCun, for their foundational work on deep learning. Bengio, Hinton, and LeCun are sometimes referred to as the "Godfathers of AI". Bengio is the most-cited computer scientist globally (by both total citations and *h*-index), and the most-cited living scientist across all fields (by total citations). In 2024, *TIME* Magazine included Bengio in its yearly list of the world's 100 most influential people. ...

Language Model

A language model is a model of the human brain's ability to produce natural language. Language models are useful for a variety of tasks, including speech recognition, machine translation (Andreas, Jacob, Andreas Vlachos, and Stephen Clark (2013). "Semantic parsing as machine translation". Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers)), natural language generation (generating more human-like text), optical character recognition, route optimization, handwriting recognition, grammar induction, and information retrieval. Large language models (LLMs), currently their most advanced form, are predominantly based on transformers trained on larger datasets (frequently using words scraped from the public internet). They have superseded recurrent neural network-based models, which had previously superseded purely statistical models such as the word *n*-gram language model. History: Noam Chomsky did pioneering work on lan ...
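To make the "purely statistical" family concrete, here is a minimal sketch of a word bigram model (an *n*-gram model with n = 2) that estimates next-word probabilities from raw counts. The tiny corpus, the absence of smoothing, and the function names `train_bigram` and `next_word_prob` are assumptions made for this illustration only.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_prob(counts, prev, nxt):
    """Maximum-likelihood estimate of P(nxt | prev); 0.0 if prev was never seen."""
    total = sum(counts[prev].values())
    return counts[prev][nxt] / total if total else 0.0


# Toy corpus, whitespace-tokenized (an assumption for the example).
tokens = "the cat sat on the mat the cat ran".split()
model = train_bigram(tokens)

if __name__ == "__main__":
    print(next_word_prob(model, "the", "cat"))  # 2 of the 3 words after "the"
    print(next_word_prob(model, "the", "mat"))  # 1 of the 3 words after "the"
```

A model like this assigns zero probability to any word pair absent from the training text, a sparsity problem that neural approaches address by generalizing across similar contexts.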

David E

David ("beloved one") was a king of ancient Israel and Judah and the third king of the United Monarchy, according to the Hebrew Bible and Old Testament. The Tel Dan stele, an Aramaic-inscribed stone erected by a king of Aram-Damascus in the late 9th/early 8th centuries BCE to commemorate a victory over two enemy kings, contains a phrase that is translated as "House of David" by most scholars. The Mesha Stele, erected by King Mesha of Moab in the 9th century BCE, may also refer to the "House of David", although this is disputed. According to Jewish works such as the *Seder Olam Rabbah*, *Seder Olam Zutta*, and *Sefer ha-Qabbalah* (all written over a thousand years later), David ascended the throne as the king of Judah in 885 BCE. Apart from this, all that is known of David comes from biblical literature, the historicity of which has been extensively challenged (Isaac Kalimi, *Writing and Rewriting the Story of Solomon in Ancient Israel*; pa ...

Paul Werbos

Paul John Werbos (born September 4, 1947) is an American social scientist and machine learning pioneer. He is best known for his 1974 dissertation, which first described the process of training artificial neural networks through backpropagation of errors. He was also a pioneer of recurrent neural networks. Werbos was one of the original three two-year Presidents of the International Neural Network Society (INNS). In 1995, he was awarded the IEEE Neural Network Pioneer Award for the discovery of backpropagation and other basic neural network learning frameworks such as Adaptive Dynamic Programming. Werbos has also written on quantum mechanics and other areas of physics. He is also interested in larger questions relating to consciousness, the foundations of physics, and human potential. He served as program director in the National Science Foundation (NSF) ...

Seppo Linnainmaa

Seppo Ilmari Linnainmaa (born 28 September 1945) is a Finnish mathematician and computer scientist known for creating the modern version of backpropagation. Biography: He was born in Pori. He received his MSc in 1970 and introduced a reverse mode of automatic differentiation in his MSc thesis. In 1974 he obtained the first doctorate ever awarded in computer science at the University of Helsinki. In 1976, he became Assistant Professor. From 1984 to 1985 he was Visiting Professor at the University of Maryland, USA. From 1986 to 1989 he was Chairman of the Finnish Artificial Intelligence Society. From 1989 to 2007, he was Research Professor at the VTT Technical Research Centre of Finland. He retired in 2007. Backpropagation: Explicit, efficient error backpropagation in arbitrary, discrete, possibly sparsely connected, neural-network-like networks was first described in Linnainmaa's 1970 master's thesis, albeit without reference to NNs (Jürgen Schmidhuber (2015), Who Invented Ba ...

Backpropagation

In machine learning, backpropagation is a gradient computation method commonly used for training a neural network to compute its parameter updates. It is an efficient application of the chain rule to neural networks. Backpropagation computes the gradient of a loss function with respect to the weights of the network for a single input–output example, and does so efficiently, computing the gradient one layer at a time, iterating backward from the last layer to avoid redundant calculations of intermediate terms in the chain rule; this can be derived through dynamic programming. Strictly speaking, the term *backpropagation* refers only to an algorithm for efficiently computing the gradient, not how the gradient is used; but the term is often used loosely to refer to the entire learning algorithm, including how the gradient is used, such as by stochastic gradient descent, or as an intermediate step in a more complicated optimizer, such as Adaptive Moment Estimation. The ...
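The backward, layer-by-layer use of the chain rule can be illustrated on the smallest possible network. The sketch below assumes a scalar input, one tanh hidden unit, one linear output, and a squared-error loss; these choices and the function names are illustrative and not taken from the text. A finite-difference estimate is included only as a sanity check on the analytic gradient.

```python
import math


def forward(w1, w2, x):
    """Forward pass: h = tanh(w1 * x), y = w2 * h."""
    h = math.tanh(w1 * x)
    y = w2 * h
    return h, y


def loss(w1, w2, x, target):
    """Squared-error loss for a single input-output example."""
    _, y = forward(w1, w2, x)
    return 0.5 * (y - target) ** 2


def backprop(w1, w2, x, target):
    """Gradient of the loss w.r.t. (w1, w2), computed from the last layer backward."""
    h, y = forward(w1, w2, x)
    dL_dy = y - target                  # derivative of the loss at the output
    dL_dw2 = dL_dy * h                  # output-layer weight gradient
    dL_dh = dL_dy * w2                  # error propagated back to the hidden unit
    dL_dw1 = dL_dh * (1.0 - h * h) * x  # tanh'(z) = 1 - tanh(z)^2, reusing dL_dh
    return dL_dw1, dL_dw2


def numeric_grad(w1, w2, x, target, eps=1e-6):
    """Central finite differences, used only to check the analytic result."""
    g1 = (loss(w1 + eps, w2, x, target) - loss(w1 - eps, w2, x, target)) / (2 * eps)
    g2 = (loss(w1, w2 + eps, x, target) - loss(w1, w2 - eps, x, target)) / (2 * eps)
    return g1, g2


if __name__ == "__main__":
    print(backprop(0.3, -0.7, 1.5, 0.2))
    print(numeric_grad(0.3, -0.7, 1.5, 0.2))
```

Note that `dL_dh` is computed once and reused for the earlier layer; in deeper networks this reuse of intermediate terms is what avoids the redundant calculations mentioned above. Applying the gradient (for example, a gradient descent step) is a separate concern and is not shown.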