
Per-comparison Error Rate

In statistics, the per-comparison error rate (PCER) is the probability of a Type I error in the absence of any multiple hypothesis testing correction. This is a liberal error rate relative to the false discovery rate and the family-wise error rate, in that it is always less than or equal to those rates.
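The ordering of the three rates can be illustrated with a small simulation. This is only a sketch under stated assumptions, none of which come from the entry itself: the tests are independent, a true null is rejected with probability `alpha`, a false null is rejected with probability `power`, and PCER is formalized as the expected number of false rejections divided by the number of tests. The function name and all parameters are hypothetical.

```python
import random


def simulate_error_rates(m=20, m_true_null=15, alpha=0.05, power=0.8,
                         n_rep=2000, seed=0):
    """Monte Carlo estimates of (PCER, FDR, FWER) for uncorrected tests.

    Assumptions (illustrative only): independent tests, each true null
    rejected with probability `alpha`, each false null with probability
    `power`.
    """
    rng = random.Random(seed)
    pcer_sum = fdr_sum = fwer_sum = 0.0
    for _ in range(n_rep):
        # v: false rejections (Type I errors); s: correct rejections.
        v = sum(rng.random() < alpha for _ in range(m_true_null))
        s = sum(rng.random() < power for _ in range(m - m_true_null))
        r = v + s  # total rejections in this replication
        pcer_sum += v / m                      # share of all tests that erred
        fdr_sum += v / r if r > 0 else 0.0     # share of rejections that erred
        fwer_sum += 1.0 if v >= 1 else 0.0     # at least one Type I error
    return pcer_sum / n_rep, fdr_sum / n_rep, fwer_sum / n_rep


pcer, fdr, fwer = simulate_error_rates()
print(f"PCER={pcer:.3f}  FDR={fdr:.3f}  FWER={fwer:.3f}")
```

Within each replication, `v / m` cannot exceed `v / r`, which in turn cannot exceed the indicator of at least one false rejection, so the estimated PCER never exceeds the estimated FDR or FWER.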

Statistics

Statistics (from German, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments. When census data (comprising every member of the target population) cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample ...

Type I Error

A Type I error, or false positive, is the erroneous rejection of a true null hypothesis in statistical hypothesis testing. A Type II error, or false negative, is the erroneous failure to reject a false null hypothesis. Type I errors can be thought of as errors of commission, in which the status quo is erroneously rejected in favour of new, misleading information. Type II errors can be thought of as errors of omission, in which a misleading status quo is allowed to remain because it was not identified as such. For example, if the assumption that people are "innocent until proven guilty" were taken as a null hypothesis, then finding an innocent person guilty would constitute a Type I error, while failing to find a guilty person guilty would constitute a Type II error. If the null hypothesis were inverted, such that people were by default presumed to be "guilty until proven innocent", then proving a guilty person's innocence would ...
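The two error types can be written as a small decision table. The following is a minimal sketch; the function name `classify_decision` and its arguments are hypothetical and chosen only for illustration.

```python
def classify_decision(null_is_true: bool, null_rejected: bool) -> str:
    """Label the outcome of one hypothesis test given the (unknown) truth."""
    if null_is_true and null_rejected:
        return "Type I error (false positive)"
    if not null_is_true and not null_rejected:
        return "Type II error (false negative)"
    return "correct decision"


# Courtroom example with the null hypothesis "the defendant is innocent".
innocent_convicted = classify_decision(null_is_true=True, null_rejected=True)
guilty_acquitted = classify_decision(null_is_true=False, null_rejected=False)
print(innocent_convicted)
print(guilty_acquitted)
```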

Multiple Comparisons

The multiple comparisons, multiplicity, or multiple testing problem occurs in statistics when one considers a set of statistical inferences simultaneously or estimates a subset of parameters selected based on the observed values. The larger the number of inferences made, the more likely erroneous inferences become. Several statistical techniques have been developed to address this problem, for example by requiring a stricter significance threshold for individual comparisons, so as to compensate for the number of inferences being made. The family-wise error rate is the probability of at least one false positive arising from the multiple comparisons.

History: The problem of multiple comparisons received increased attention in the 1950s with the work of statisticians such as Tukey and Scheffé. Over the ensuing decades, many procedures were developed to address the problem. In 1996, the first international conference on multiple comparison procedures took place in Tel Aviv. ...
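How quickly erroneous inferences accumulate can be sketched numerically. The example below assumes independent tests in which every null hypothesis is true, so the chance of at least one false positive among m tests at level alpha is 1 - (1 - alpha)^m. The stricter threshold shown is alpha / m (the Bonferroni correction), used here as one common choice rather than the only one; the function names are hypothetical.

```python
def fwer_independent(alpha: float, m: int) -> float:
    """P(at least one false positive) for m independent tests of true nulls."""
    return 1.0 - (1.0 - alpha) ** m


def bonferroni_threshold(alpha: float, m: int) -> float:
    """Per-comparison threshold that keeps the family-wise rate at or below alpha."""
    return alpha / m


alpha = 0.05
for m in (1, 5, 20, 100):
    uncorrected = fwer_independent(alpha, m)
    corrected = fwer_independent(bonferroni_threshold(alpha, m), m)
    print(f"m={m:3d}  uncorrected={uncorrected:.3f}  corrected={corrected:.3f}")
```

With no correction the family-wise rate grows toward 1 as m increases, while the per-comparison threshold alpha / m keeps it at or below alpha.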

Journal Of The Royal Statistical Society, Series B

A journal, from the Old French "journal" (meaning "daily"), may refer to:

* Bullet journal, a method of personal organization
* Diary, a record of personal thoughts or of what happened over the course of a day or other period
* Daybook, also known as a general journal, a daily record of financial transactions
* Logbook, a record of events important to the operation of a vehicle, facility, or otherwise
* Transaction log, a chronological record of data processing
* Travel journal, a record of the traveller's experience during the course of their journey

In publishing, "journal" can refer to various periodicals or serials:

* Academic journal, an academic or scholarly periodical
  * Scientific journal, an academic journal focusing on science
  * Medical journal, an academic journal focusing on medicine
  * Law review, a professional journal focusing on legal interpretation
* Magazine, non-academic or scho ...

False Discovery Rate

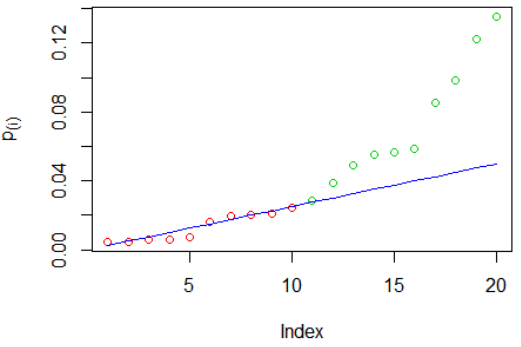

In statistics, the false discovery rate (FDR) is a method of conceptualizing the rate of type I errors in null hypothesis testing when conducting multiple comparisons. FDR-controlling procedures are designed to control the FDR, which is the expected proportion of "discoveries" (rejected null hypotheses) that are false (incorrect rejections of the null). Equivalently, the FDR is the expected ratio of the number of false positive classifications (false discoveries) to the total number of positive classifications (rejections of the null). The total number of rejections of the null includes both the number of false positives (FP) and true positives (TP). Simply put, FDR = FP / (FP + TP). FDR-controlling procedures provide less stringent control of Type I errors compared to family-wise error rate (FWER) controlling procedures (such as the Bonferroni correction), which control the probability of at least one Type I error. Thus, FDR-controlling procedures have greater power, at t ...
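The ratio FP / (FP + TP) can be computed directly when the truth of each null hypothesis is known, as in a simulation. The sketch below computes that ratio for a single set of decisions; the FDR is its expected value over repeated experiments. The function name, the convention of returning 0 when nothing is rejected, and the example data are all assumptions made for illustration.

```python
def false_discovery_proportion(rejected, is_true_null):
    """Return FP / (FP + TP) for one set of test decisions.

    rejected[i] is True if the i-th null was rejected; is_true_null[i] is
    True if that null is actually true. Returns 0.0 when nothing was
    rejected (an assumed convention).
    """
    pairs = list(zip(rejected, is_true_null))
    fp = sum(1 for r, h0 in pairs if r and h0)        # false discoveries
    tp = sum(1 for r, h0 in pairs if r and not h0)    # true discoveries
    total = fp + tp
    return fp / total if total else 0.0


# Hypothetical outcome of five tests: four rejections, one of them false.
rejected = [True, True, True, False, True]
is_true_null = [False, True, False, True, False]
fdp = false_discovery_proportion(rejected, is_true_null)
print(fdp)
```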

Family-wise Error Rate

In statistics, the family-wise error rate (FWER) is the probability of making one or more false discoveries, or type I errors, when performing multiple hypothesis tests.

Familywise and experimentwise error rates: John Tukey developed in 1953 the concept of a familywise error rate as the probability of making a Type I error among a specified group, or "family," of tests. Based on Tukey (1953), Ryan (1959) proposed the related concept of an experimentwise error rate, which is the probability of making a Type I error in a given experiment. Hence, an experimentwise error rate is a familywise error rate where the family includes all the tests that are conducted within an experiment. As Ryan (1959, Footnote 3) explained, an experiment may contain two or more families of multiple comparisons, each of which relates to a particular statistical inference and each of which has its own separate familywise error rate. Hence, familywise error rates are usually based on theoretically informative ...

Statistical Hypothesis Testing

A statistical hypothesis test is a method of statistical inference used to decide whether the data provide sufficient evidence to reject a particular hypothesis. A statistical hypothesis test typically involves a calculation of a test statistic. Then a decision is made, either by comparing the test statistic to a critical value or, equivalently, by evaluating a p-value computed from the test statistic. Roughly 100 specialized statistical tests are in use and noteworthy.

History: While hypothesis testing was popularized early in the 20th century, early forms were used in the 1700s. The first use is credited to John Arbuthnot (1710), followed by Pierre-Simon Laplace (1770s), in analyzing the human sex ratio at birth.

Choice of null hypothesis: Paul Meehl has argued that the epistemological importance of the choice of null hypothesis has gone largely unacknowledged. When the null hypothesis is predicted by the ...
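The equivalence between the critical-value decision and the p-value decision can be seen in a small worked case. The sketch below uses a two-sided one-sample z-test, chosen here only as a simple illustration and not taken from the entry; it assumes a normally distributed statistic with known standard deviation `sigma`, and the sample values are made up.

```python
from math import sqrt
from statistics import NormalDist, mean


def two_sided_z_test(sample, mu0, sigma, alpha=0.05):
    """One-sample z-test of H0: population mean == mu0, with sigma known."""
    n = len(sample)
    z = (mean(sample) - mu0) / (sigma / sqrt(n))      # test statistic
    std_normal = NormalDist()                         # mean 0, sd 1
    critical = std_normal.inv_cdf(1 - alpha / 2)      # two-sided critical value
    p_value = 2 * (1 - std_normal.cdf(abs(z)))        # two-sided p-value
    reject_by_critical = abs(z) > critical
    reject_by_p_value = p_value < alpha
    return z, critical, p_value, reject_by_critical, reject_by_p_value


# Hypothetical measurements, tested against mu0 = 5.0 with sigma = 0.5.
sample = [5.1, 4.9, 5.6, 5.8, 6.0, 5.5, 5.3, 5.7]
z, critical, p_value, by_critical, by_p = two_sided_z_test(sample, 5.0, 0.5)
print(f"z={z:.3f}  critical={critical:.3f}  p={p_value:.4f}")
print("reject H0:", by_critical, by_p)
```

Both decision rules give the same answer because the p-value falls below `alpha` exactly when the absolute test statistic exceeds the critical value.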