|

Vision Transformer

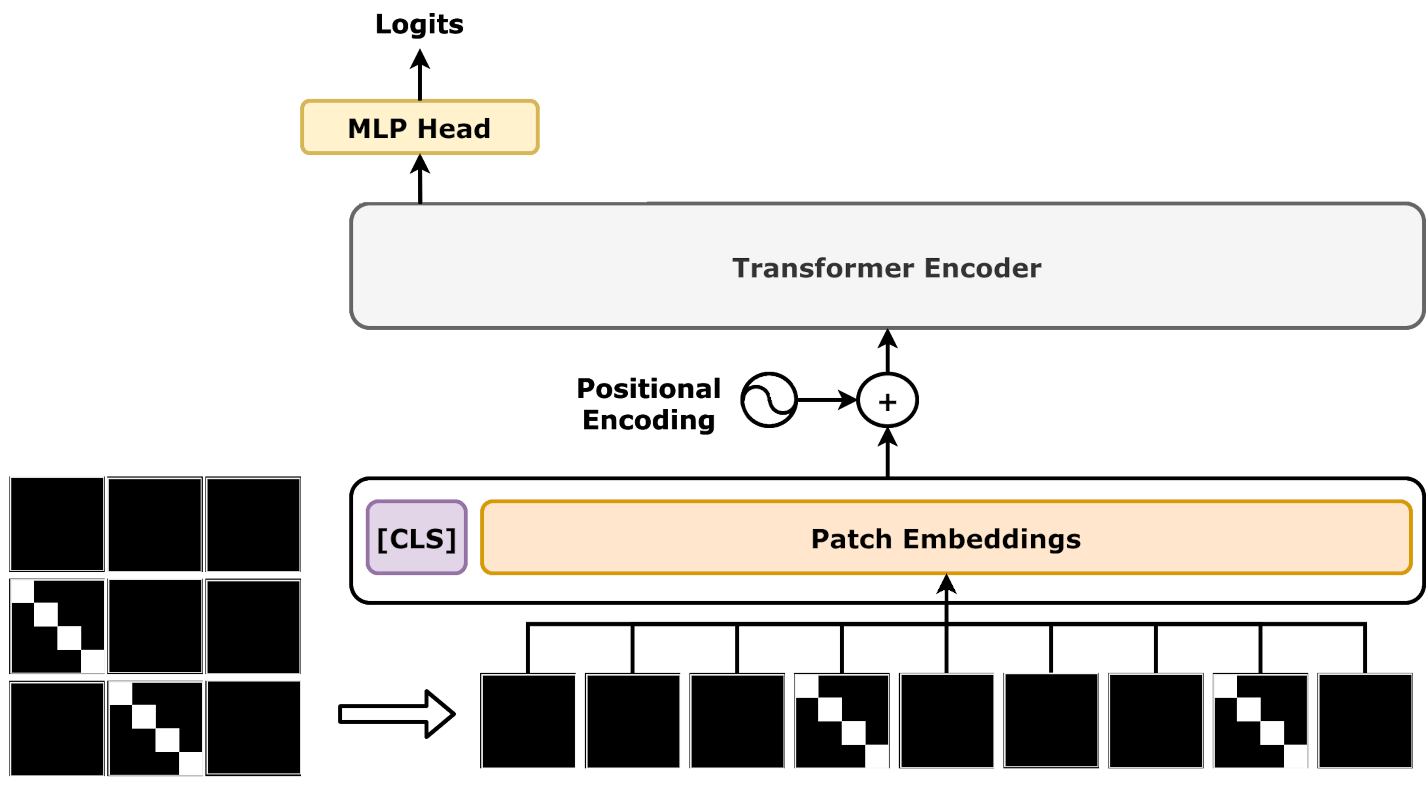

A vision transformer (ViT) is a Transformer (machine learning model), transformer designed for computer vision. A ViT decomposes an input image into a series of patches (rather than text into Byte pair encoding, tokens), serializes each patch into a vector, and maps it to a smaller dimension with a single matrix multiplication. These vector Latent space, embeddings are then processed by a BERT (language model), transformer encoder as if they were token embeddings. ViTs were designed as alternatives to convolutional neural networks (CNNs) in computer vision applications. They have different inductive biases, training stability, and data efficiency. Compared to CNNs, ViTs are less data efficient, but have higher capacity. Some of the largest modern computer vision models are ViTs, such as one with 22B parameters. Subsequent to its publication, many variants were proposed, with hybrid architectures with both features of ViTs and CNNs. ViTs have found application in image recognition, ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Pyramid (image Processing)

Pyramid, or pyramid representation, is a type of multi-scale signal representation developed by the computer vision, image processing and signal processing communities, in which a signal or an image is subject to repeated smoothing and subsampling. Pyramid representation is a predecessor to scale-space representation and multiresolution analysis. Pyramid generation There are two main types of pyramids: lowpass and bandpass. A lowpass pyramid is made by smoothing the image with an appropriate smoothing filter and then subsampling the smoothed image, usually by a factor of 2 along each coordinate direction. The resulting image is then subjected to the same procedure, and the cycle is repeated multiple times. Each cycle of this process results in a smaller image with increased smoothing, but with decreased spatial sampling density (that is, decreased image resolution). If illustrated graphically, the entire multi-scale representation will look like a pyramid, with the original ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Denoising Autoencoder

An autoencoder is a type of artificial neural network used to learn Feature learning, efficient codings of unlabeled data (unsupervised learning). An autoencoder learns two functions: an encoding function that transforms the input data, and a decoding function that recreates the input data from the encoded representation. The autoencoder learns an efficient representation (encoding) for a set of data, typically for dimensionality reduction, to generate lower-dimensional embeddings for subsequent use by other machine learning algorithms. Variants exist which aim to make the learned representations assume useful properties. Examples are regularized autoencoders (''sparse'', ''denoising'' and ''contractive'' autoencoders), which are effective in learning representations for subsequent Statistical classification, classification tasks, and Variational autoencoder, ''variational'' autoencoders, which can be used as generative models. Autoencoders are applied to many problems, includi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Masked Autoencoder

A vision transformer (ViT) is a transformer designed for computer vision. A ViT decomposes an input image into a series of patches (rather than text into tokens), serializes each patch into a vector, and maps it to a smaller dimension with a single matrix multiplication. These vector embeddings are then processed by a transformer encoder as if they were token embeddings. ViTs were designed as alternatives to convolutional neural networks (CNNs) in computer vision applications. They have different inductive biases, training stability, and data efficiency. Compared to CNNs, ViTs are less data efficient, but have higher capacity. Some of the largest modern computer vision models are ViTs, such as one with 22B parameters. Subsequent to its publication, many variants were proposed, with hybrid architectures with both features of ViTs and CNNs. ViTs have found application in image recognition, image segmentation, weather prediction, and autonomous driving. History Transformers wer ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

LayerNorm

In machine learning, normalization is a statistical technique with various applications. There are two main forms of normalization, namely ''data normalization'' and ''activation normalization''. Data normalization (or feature scaling) includes methods that rescale input data so that the features have the same range, mean, variance, or other statistical properties. For instance, a popular choice of feature scaling method is min-max normalization, where each feature is transformed to have the same range (typically ,1/math> or 1,1/math>). This solves the problem of different features having vastly different scales, for example if one feature is measured in kilometers and another in nanometers. Activation normalization, on the other hand, is specific to deep learning, and includes methods that rescale the activation of hidden neurons inside neural networks. Normalization is often used to: * increase the speed of training convergence, * reduce sensitivity to variations and featur ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Pixel

In digital imaging, a pixel (abbreviated px), pel, or picture element is the smallest addressable element in a Raster graphics, raster image, or the smallest addressable element in a dot matrix display device. In most digital display devices, pixels are the smallest element that can be manipulated through software. Each pixel is a Sampling (signal processing), sample of an original image; more samples typically provide more accurate representations of the original. The Intensity (physics), intensity of each pixel is variable. In color imaging systems, a color is typically represented by three or four component intensities such as RGB color model, red, green, and blue, or CMYK color model, cyan, magenta, yellow, and black. In some contexts (such as descriptions of camera sensors), ''pixel'' refers to a single scalar element of a multi-component representation (called a ''photosite'' in the camera sensor context, although ''wikt:sensel, sensel'' is sometimes used), while in yet ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Attention (machine Learning)

In machine learning, attention is a method that determines the importance of each component in a sequence relative to the other components in that sequence. In natural language processing, importance is represented b"soft"weights assigned to each word in a sentence. More generally, attention encodes vectors called lexical token, token Word embedding, embeddings across a fixed-width context window, sequence that can range from tens to millions of tokens in size. Unlike "hard" weights, which are computed during the backwards training pass, "soft" weights exist only in the forward pass and therefore change with every step of the input. Earlier designs implemented the attention mechanism in a serial recurrent neural network (RNN) language translation system, but a more recent design, namely the Transformer (machine learning model), transformer, removed the slower sequential RNN and relied more heavily on the faster parallel attention scheme. Inspired by ideas about attention, attent ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Inceptionv3

Inception is a family of convolutional neural network (CNN) for computer vision, introduced by researchers at Google in 2014 as GoogLeNet (later renamed Inception v1). The series was historically important as an early CNN that separates the stem (data ingest), body (data processing), and head (prediction), an architectural design that persists in all modern CNN. Version history Inception v1 In 2014, a team at Google developed the GoogLeNet architecture, an instance of which won the ImageNet Large-Scale Visual Recognition Challenge 2014 (ILSVRC14). The name came from the LeNet of 1998, since both LeNet and GoogLeNet are CNNs. They also called it "Inception" after a "we need to go deeper" internet meme, a phrase from ''Inception'' (2010) the film. Because later, more versions were released, the original Inception architecture was renamed again as "Inception v1". The models and the code were released under Apache 2.0 license on GitHub. The Inception v1 architecture is a ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

DenseNet

A residual neural network (also referred to as a residual network or ResNet) is a deep learning architecture in which the layers learn residual functions with reference to the layer inputs. It was developed in 2015 for image recognition, and won the ImageNet Large Scale Visual Recognition ChallengeILSVRC of that year. As a point of terminology, "residual connection" refers to the specific architectural motif of , where f is an arbitrary neural network module. The motif had been used previously (see Residual neural network#History, §History for details). However, the publication of ResNet made it widely popular for Feedforward neural network, feedforward networks, appearing in neural networks that are seemingly unrelated to ResNet. The residual connection stabilizes the training and convergence of deep neural networks with hundreds of layers, and is a common motif in deep neural networks, such as Transformer (deep learning architecture), transformer models (e.g., BERT (language ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

EfficientNet

EfficientNet is a family of convolutional neural networks (CNNs) for computer vision published by researchers at Google AI in 2019. Its key innovation is compound scaling, which uniformly scales all dimensions of depth, width, and resolution using a single parameter. EfficientNet models have been adopted in various computer vision tasks, including image classification, object detection, and segmentation. Compound scaling EfficientNet introduces compound scaling, which, instead of scaling one dimension of the network at a time, such as depth (number of layers), width (number of channels), or resolution (input image size), uses a compound coefficient \phi to scale all three dimensions simultaneously. Specifically, given a baseline network, the depth, width, and resolution are scaled according to the following equations: \begin \text d &= \alpha^ \\ \text w &= \beta^ \\ \text r &= \gamma^ \end subject to \alpha \cdot \beta^2 \cdot \gamma^2 \approx 2 and \alpha \ge 1, \beta \g ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

GPT-3

Generative Pre-trained Transformer 3 (GPT-3) is a large language model released by OpenAI in 2020. Like its predecessor, GPT-2, it is a decoder-only transformer model of deep neural network, which supersedes recurrence and convolution-based architectures with a technique known as "attention". This attention mechanism allows the model to focus selectively on segments of input text it predicts to be most relevant. GPT-3 has 175 billion parameters, each with 16-bit precision, requiring 350GB of storage since each parameter occupies 2 bytes. It has a context window size of 2048 tokens, and has demonstrated strong " zero-shot" and " few-shot" learning abilities on many tasks. On September 22, 2020, Microsoft announced that it had licensed GPT-3 exclusively. Others can still receive output from its public API, but only Microsoft has access to the underlying model. Background According to ''The Economist'', improved algorithms, more powerful computers, and a recent increase i ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Language Models

A language model is a model of the human brain's ability to produce natural language. Language models are useful for a variety of tasks, including speech recognition, machine translation,Andreas, Jacob, Andreas Vlachos, and Stephen Clark (2013)"Semantic parsing as machine translation". Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers). natural language generation (generating more human-like text), optical character recognition, route optimization, handwriting recognition, grammar induction, and information retrieval. Large language models (LLMs), currently their most advanced form, are predominantly based on transformers trained on larger datasets (frequently using words scraped from the public internet). They have superseded recurrent neural network-based models, which had previously superseded the purely statistical models, such as word ''n''-gram language model. History Noam Chomsky did pioneering work on lang ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |