Vision Transformer

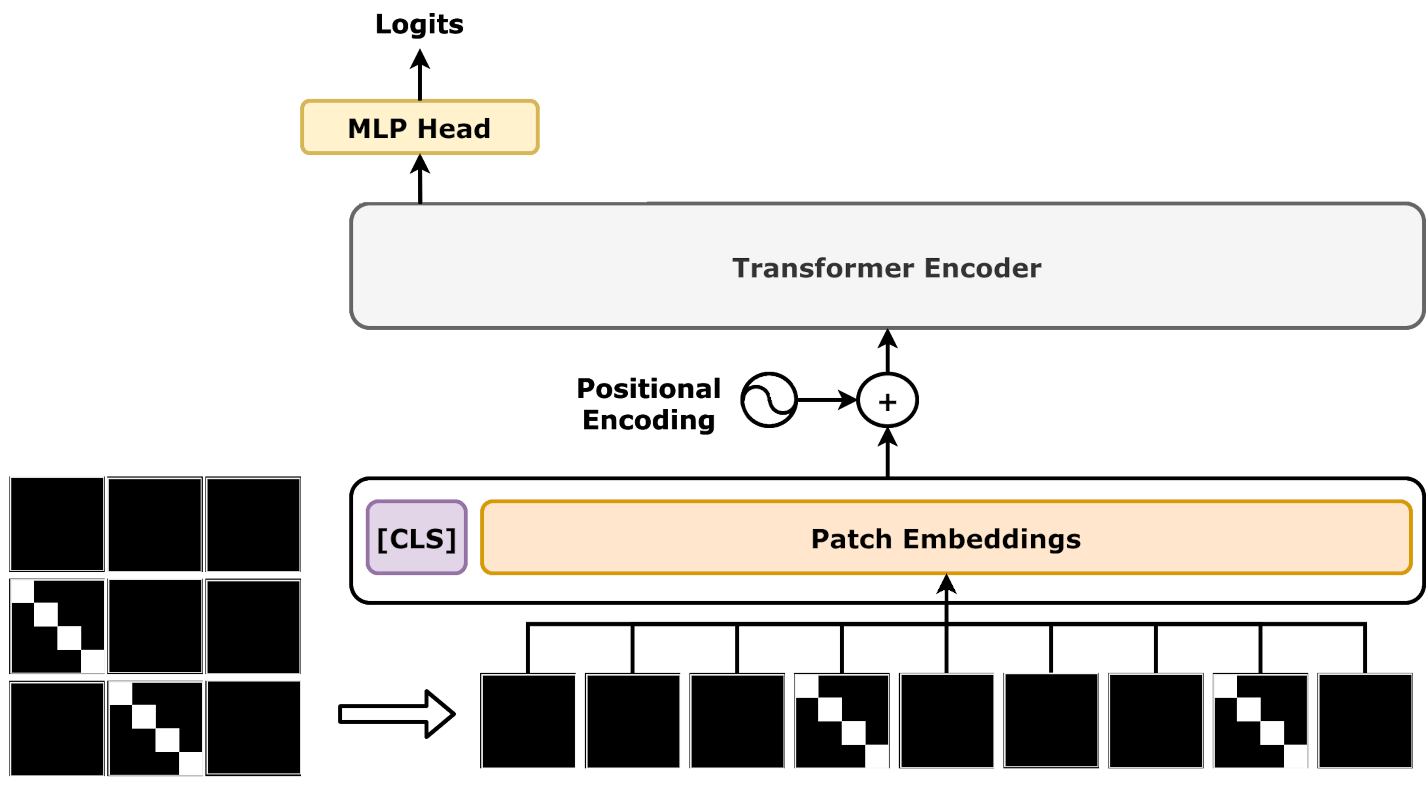

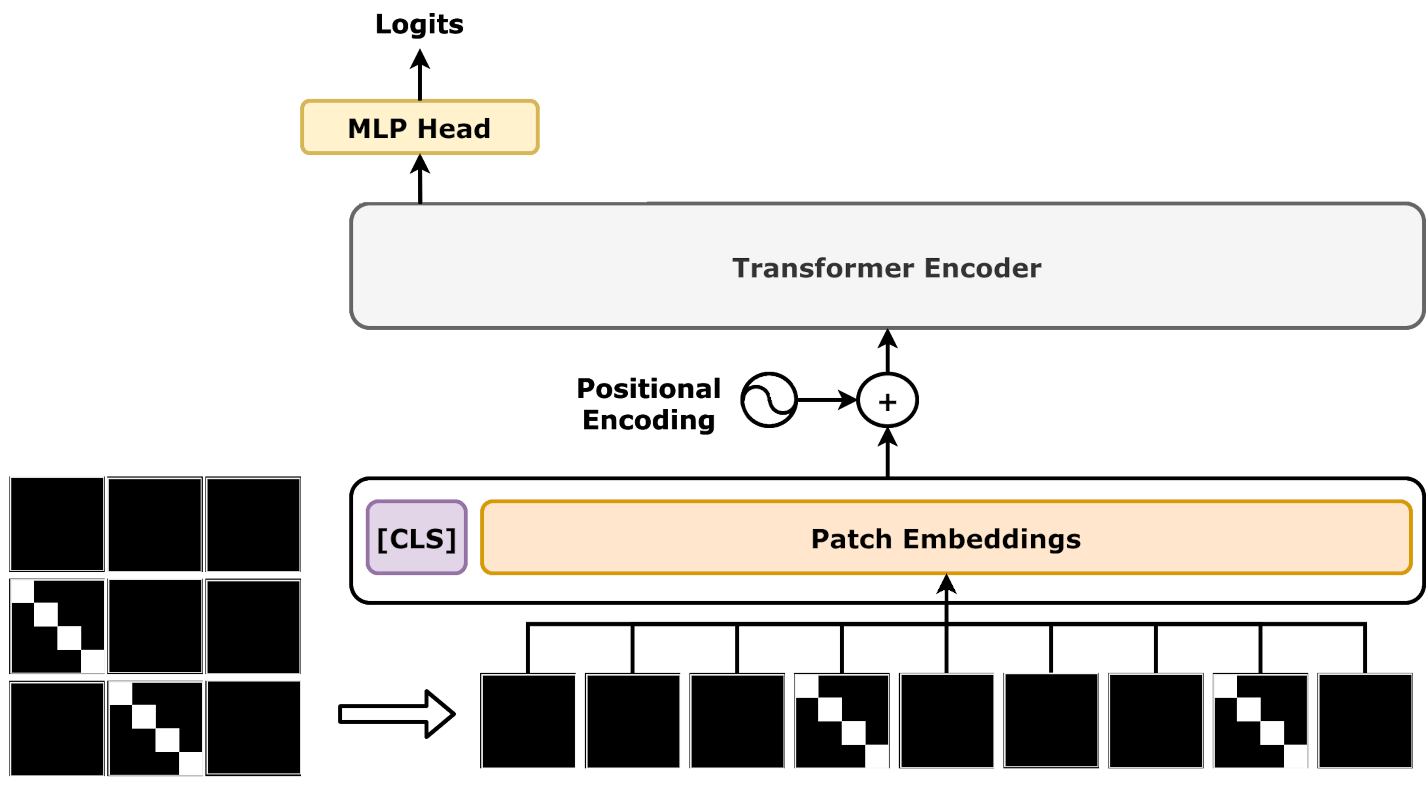

A vision transformer (ViT) is a transformer designed for computer vision. A ViT decomposes an input image into a series of patches (rather than text into tokens), serializes each patch into a vector, and maps it to a smaller dimension with a single matrix multiplication. These vector embeddings are then processed by a transformer encoder as if they were token embeddings.

ViTs were designed as alternatives to convolutional neural networks (CNNs) in computer vision applications. They have different inductive biases, training stability, and data efficiency. Compared to CNNs, ViTs are less data efficient, but have higher capacity. Some of the largest modern computer vision models are ViTs, such as one with 22B parameters.

After the original ViT was published, many variants were proposed, including hybrid architectures that combine features of ViTs and CNNs. ViTs have found application in image recognition, image segmentation, weather prediction, and autonomous driving.

History

Transformers were introduced in "Attention Is All You Need" (2017), and have found widespread use in natural language processing. A 2019 paper applied ideas from the Transformer to computer vision. Specifically, the authors started with a ResNet, a standard convolutional neural network used for computer vision, and replaced all convolutional kernels with the self-attention mechanism found in a Transformer. It resulted in superior performance. However, it is not a Vision Transformer.

In 2020, an encoder-only Transformer was adapted for computer vision, yielding the ViT, which reached state of the art in image classification, overcoming the previous dominance of CNNs. The masked autoencoder (2022) extended ViT to work with unsupervised training. The vision transformer and the masked autoencoder, in turn, stimulated new developments in convolutional neural networks.

Subsequently, there was cross-fertilization between the previous CNN approach and the ViT approach.

In 2021, some important variants of the Vision Transformer were proposed. These variants are mainly intended to be more efficient, more accurate, or better suited to a specific domain. Two studies improved the efficiency and robustness of ViT by adding a CNN as a preprocessor. The Swin Transformer achieved state-of-the-art results on some object detection datasets such as COCO, by using convolution-like sliding windows of attention and the pyramid process of classical computer vision.

Overview

The basic architecture, used by the original 2020 paper, is as follows. In summary, it is a BERT-like encoder-only Transformer.

The input image is of type $\mathbb{R}^{H \times W \times C}$, where $H, W, C$ are height, width, channel (RGB). It is then split into square-shaped patches of type $\mathbb{R}^{P \times P \times C}$.

Each patch is pushed through a linear operator to obtain a vector (the "patch embedding"). The position of the patch is also transformed into a vector by "position encoding". The two vectors are added, then pushed through several Transformer encoders.
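The patch-splitting and embedding steps can be sketched in a few lines. This is a minimal illustration rather than the original implementation: it uses nested Python lists instead of an array library, the sizes (`H`, `W`, `C`, `P`, `D`) are toy values, and the weights `W_embed` and `pos` are random stand-ins for parameters that would be learned.

```python
import random

def patchify(image, p):
    """Split an H x W x C nested-list image into flattened P x P x C patches."""
    h, w = len(image), len(image[0])
    patches = []
    for top in range(0, h, p):
        for left in range(0, w, p):
            patch = [channel
                     for row in image[top:top + p]
                     for pixel in row[left:left + p]
                     for channel in pixel]
            patches.append(patch)
    return patches

def linear(vec, weight):
    """Multiply a vector of length n by an n x d matrix."""
    return [sum(v * w_row[j] for v, w_row in zip(vec, weight))
            for j in range(len(weight[0]))]

random.seed(0)
H, W, C, P, D = 8, 8, 3, 4, 5   # toy sizes, chosen only for illustration
image = [[[random.random() for _ in range(C)] for _ in range(W)] for _ in range(H)]

patches = patchify(image, P)    # (H/P) * (W/P) patches, each of length P*P*C
# Hypothetical parameters: random stand-ins for learned weights.
W_embed = [[random.gauss(0, 0.02) for _ in range(D)] for _ in range(P * P * C)]
pos = [[random.gauss(0, 0.02) for _ in range(D)] for _ in patches]

# Patch embedding plus position vector gives one token per patch.
tokens = [[e + q for e, q in zip(linear(patch, W_embed), pos_vec)]
          for patch, pos_vec in zip(patches, pos)]
print(len(tokens), len(tokens[0]))   # 4 tokens of dimension 5
```

The resulting `tokens` list is what a Transformer encoder would consume in place of word embeddings.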

The attention mechanism in a ViT repeatedly transforms representation vectors of image patches, incorporating more and more semantic relations between image patches in an image. This is analogous to how in natural language processing, as representation vectors flow through a transformer, they incorporate more and more semantic relations between words, from syntax to semantics.

The above architecture turns an image into a sequence of vector representations. To use these for downstream applications, an additional head needs to be trained to interpret them.

For example, to use it for classification, one can add a shallow MLP on top of it that outputs a probability distribution over classes. The original paper uses a linear-GeLU-linear-softmax network.

Variants

Original ViT

The original ViT was an encoder-only Transformer trained with supervision to predict the image label from the patches of the image. As in the case of BERT, it uses a special token ([CLS]) whose output is used for classification.

Transformers found their initial applications in natural language processing tasks, as demonstrated by language models such as BERT and GPT-3. By contrast, the typical image processing system uses a convolutional neural network (CNN). Well-known projects include Xception, ResNet, EfficientNet, DenseNet, and Inception.

Transformers measure the relationships between pairs of input tokens (words in the case of text strings), termed attention. The cost is quadratic in the number of tokens. For images, the basic unit of analysis is the pixel. However, computing relationships for every pixel pair in a typical image is prohibitive in terms of memory and computation. Instead, ViT splits the image into small sections (e.g., 16x16 pixels) and computes relationships among the sections, at a drastically reduced cost. Each section is flattened into a vector and multiplied by the embedding matrix. A learnable position embedding is added to the result, and the resulting sequence is fed to the transformer.
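To make the cost argument concrete, the following sketch counts the token pairs that self-attention would compare at pixel level and at patch level. The 224x224 image size is an assumption chosen for illustration; the 16x16 patch size is the example from the text.

```python
def attention_pairs(height, width, patch):
    """Return (number of tokens, number of token pairs) for one image."""
    tokens = (height // patch) * (width // patch)
    return tokens, tokens * tokens

# Assumed 224x224 input; patch=1 means one token per pixel.
pixel_tokens, pixel_pairs = attention_pairs(224, 224, 1)
patch_tokens, patch_pairs = attention_pairs(224, 224, 16)

print(pixel_tokens, pixel_pairs)    # 50176 2517630976
print(patch_tokens, patch_pairs)    # 196 38416
print(pixel_pairs // patch_pairs)   # 65536
```

Because the cost is quadratic, reducing the token count by a factor of 256 reduces the number of pairs by a factor of 256 squared.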

Architectural improvements

Pooling

After the ViT processes an image, it produces some embedding vectors. These must be converted to a single class probability prediction by some kind of network. The original ViT and the Masked Autoencoder used a dummy [CLS] token, in emulation of the BERT language model. The output at [CLS] is the classification token, which is then processed by a LayerNorm-feedforward-softmax module into a probability distribution.

Global average pooling (GAP) does not use the dummy token, but simply takes the average of all output tokens as the classification token. It was mentioned in the original ViT as being equally good.

Multihead attention pooling (MAP) applies a multiheaded attention block to pooling. Specifically, it takes as input a list of vectors $x_1, x_2, \dots, x_n$, which might be thought of as the output vectors of a layer of a ViT. The output from MAP is $\mathrm{MultiheadedAttention}(q, V, V)$, where $q$ is a trainable query vector, and $V$ is the matrix with rows being $x_1, x_2, \dots, x_n$. This was first proposed in the Set Transformer architecture.
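The three pooling strategies can be compared side by side in a small sketch. It is simplified under stated assumptions: the dummy token is taken to sit at index 0, the attention pooling uses a single head with no learned key, value, or output projections, and `outputs` and `query` are toy values rather than trained parameters.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def cls_pool(outputs):
    """BERT-like pooling: take the output at the dummy token (assumed index 0)."""
    return outputs[0]

def gap_pool(outputs):
    """Global average pooling: the mean of all output vectors."""
    n = len(outputs)
    return [sum(col) / n for col in zip(*outputs)]

def attention_pool(outputs, query):
    """Single-head attention pooling with a query vector (projections omitted)."""
    scale = math.sqrt(len(query))
    scores = [sum(q * x for q, x in zip(query, vec)) / scale for vec in outputs]
    weights = softmax(scores)
    return [sum(w * vec[j] for w, vec in zip(weights, outputs))
            for j in range(len(query))]

outputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # toy output vectors
print(cls_pool(outputs))
print(gap_pool(outputs))
print(attention_pool(outputs, query=[0.0, 0.0]))  # zero query gives uniform weights
```

With a zero query, every score is equal, so attention pooling reduces to global average pooling; a trained query lets the model weight some vectors more than others.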

Later papers demonstrated that GAP and MAP both perform better than BERT-like pooling. A variant of MAP was proposed as class attention, which applies MAP, then feedforward, then MAP again.

Re-attention was proposed to allow training deep ViT. It changes the multiheaded attention module.

Masked Autoencoder

The Masked Autoencoder took inspiration from denoising autoencoders and context encoders. It has two ViTs put end-to-end. The first one ("encoder") takes in image patches with positional encoding, and outputs vectors representing each patch. The second one (called "decoder", even though it is still an encoder-only Transformer) takes in vectors with positional encoding and outputs image patches again. During training, both the encoder and the decoder ViTs are used. During inference, only the encoder ViT is used.

During training, each image is cut into patches, and their positional embeddings are added. Of these, only 25% of the patches are selected. The encoder ViT processes the selected patches; no mask tokens are used at this stage. Then, mask tokens are added back in, and positional embeddings are added again. These are processed by the decoder ViT, which outputs a reconstruction of the full image. The loss is the total mean-squared loss in pixel-space for all masked patches (reconstruction loss is not computed for non-masked patches).
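The masking step and the masked-only loss can be sketched as follows. This is an illustrative sketch, not the published training code: the encoder and decoder are left out entirely, and `target` and `pred` are toy stand-ins for the true and reconstructed pixel patches.

```python
import random

def random_masking(num_patches, keep_ratio, rng):
    """Return (kept, masked) patch indices for one image."""
    order = list(range(num_patches))
    rng.shuffle(order)
    num_keep = int(num_patches * keep_ratio)
    return sorted(order[:num_keep]), sorted(order[num_keep:])

def masked_mse(pred, target, masked):
    """Mean squared error computed over the masked patches only."""
    total, count = 0.0, 0
    for i in masked:
        for p, t in zip(pred[i], target[i]):
            total += (p - t) ** 2
            count += 1
    return total / count

rng = random.Random(0)
kept, masked = random_masking(num_patches=16, keep_ratio=0.25, rng=rng)
print(len(kept), len(masked))   # 4 12

target = [[float(i)] * 3 for i in range(16)]            # toy pixel patches
pred = [[v + 1.0 for v in patch] for patch in target]   # toy reconstruction
print(masked_mse(pred, target, masked))                 # 1.0
```

Only the `kept` indices would be passed to the encoder, which is why the encoder sees a quarter of the sequence during training.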

A similar architecture was BERT ViT (BEiT), published concurrently.

DINO

Like the Masked Autoencoder, the DINO (self-distillation with no labels) method is a way to train a ViT by self-supervision. DINO is a form of teacher-student self-distillation. In DINO, the student is the model itself, and the teacher is an exponential average of the student's past states. The method is similar to previous works like momentum contrast and bootstrap your own latent (BYOL).

The loss function used in DINO is the cross-entropy loss between the output of the teacher network ($f_{\theta'_t}$) and the output of the student network ($f_{\theta_t}$). The teacher network is an exponentially decaying average of the student network's past parameters: $\theta'_t = \alpha \theta_t + \alpha(1-\alpha)\theta_{t-1} + \cdots$. The inputs to the networks are two different crops of the same image, represented as $T(x)$ and $T'(x)$, where $x$ is the original image. The loss function is written as

$$L(f_{\theta'_t}(T(x)), f_{\theta_t}(T'(x)))$$

One issue is that the network can "collapse" by always outputting the same value ($y$), regardless of the input. To prevent this collapse, DINO employs two strategies:

* Sharpening: The teacher network's output is sharpened using a softmax function with a lower temperature. This makes the teacher more "confident" in its predictions, forcing the student to learn more meaningful representations to match the teacher's sharpened output.

* Centering: The teacher network's output is centered by averaging it with its previous outputs. This prevents the teacher from becoming biased towards any particular output value, encouraging the student to learn a more diverse set of features.
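The teacher update, centering, and sharpening can be put together in a small sketch. It is a simplification under stated assumptions: parameters and outputs are plain lists, the center is updated from a single output rather than a batch mean, and the momentum and temperature values are illustrative choices, not settings taken from this article.

```python
import math

def softmax(xs, temperature):
    """Softmax of xs / temperature, shifted by the max for numerical stability."""
    m = max(xs)
    exps = [math.exp((x - m) / temperature) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def ema_update(teacher, student, momentum=0.996):
    """Teacher parameters follow an exponential moving average of the student's."""
    return [momentum * t + (1.0 - momentum) * s for t, s in zip(teacher, student)]

def update_center(center, teacher_logits, momentum=0.9):
    """Centering: keep a running average of the teacher's raw outputs."""
    return [momentum * c + (1.0 - momentum) * x
            for c, x in zip(center, teacher_logits)]

def dino_loss(teacher_logits, student_logits, center,
              t_teacher=0.04, t_student=0.1):
    """Cross-entropy between the centered, sharpened teacher and the student."""
    centered = [x - c for x, c in zip(teacher_logits, center)]
    p_teacher = softmax(centered, t_teacher)   # lower temperature = sharper
    p_student = softmax(student_logits, t_student)
    return -sum(pt * math.log(ps) for pt, ps in zip(p_teacher, p_student))

teacher_logits = [2.0, 0.0, 0.0]
center = [0.0, 0.0, 0.0]
agree = dino_loss(teacher_logits, [2.0, 0.0, 0.0], center)
disagree = dino_loss(teacher_logits, [0.0, 2.0, 0.0], center)
print(agree < disagree)   # True
```

In a training loop, gradients would flow only through the student; the teacher would be refreshed with `ema_update` and the center with `update_center` after each step.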

In January 2024, Meta AI Research released an updated version called DINOv2, with improvements in architecture, loss function, and optimization technique. It was trained on a larger and more diverse dataset. The features learned by DINOv2 were more transferable, meaning it had better performance in downstream tasks.

Swin Transformer

The Swin Transformer ("Shifted windows") took inspiration from standard CNNs:

* Instead of performing self-attention over the entire sequence of tokens, one for each patch, it performs "shifted window based" self-attention, which means only performing attention over square-shaped blocks of patches. One block of patches is analogous to the receptive field of one convolution.

* After every few attention blocks, there is a "merge layer", which merges neighboring 2x2 tokens into a single token. This is analogous to pooling (by 2x2 convolution kernels, with stride 2). Merging means concatenation followed by multiplication with a matrix.
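The merge layer described above (concatenation of a 2x2 block of tokens followed by a matrix multiplication) can be sketched as below. The grid and the averaging `weight` matrix are toy values chosen so the result is easy to check by hand; in a trained model the matrix would be learned.

```python
def merge_2x2(grid, weight):
    """Concatenate each 2x2 block of tokens, then project with `weight`.

    grid:   H x W grid of tokens, each a list of length d (H and W even)
    weight: (4 * d) x d_out matrix
    """
    merged = []
    for i in range(0, len(grid), 2):
        row = []
        for j in range(0, len(grid[0]), 2):
            concat = (grid[i][j] + grid[i + 1][j]
                      + grid[i][j + 1] + grid[i + 1][j + 1])
            row.append([sum(c * w_row[k] for c, w_row in zip(concat, weight))
                        for k in range(len(weight[0]))])
        merged.append(row)
    return merged

# 4x4 grid of 1-dimensional tokens; this toy weight averages the four tokens.
grid = [[[float(4 * i + j)] for j in range(4)] for i in range(4)]
weight = [[0.25], [0.25], [0.25], [0.25]]
out = merge_2x2(grid, weight)
print(len(out), len(out[0]))   # 2 2
print(out[0][0])               # [2.5]
```

Each merge halves the grid along both axes, which is what produces the pyramid of resolutions.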

It was improved by Swin Transformer V2, which modifies the ViT with a different attention mechanism:

* LayerNorm immediately after each attention and feedforward layer ("res-post-norm");

* scaled cosine attention to replace the original dot product attention;

* log-spaced continuous relative position bias, which allows transfer learning across different window resolutions.
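As a rough illustration of the second item, the sketch below contrasts a dot-product attention logit with a cosine-based one. The exact form used here (cosine similarity divided by a temperature `tau`, plus a position `bias`) and the default values are assumptions for illustration, not details taken from this article.

```python
import math

def cosine_attention_logit(q, k, tau=0.1, bias=0.0):
    """cos(q, k) / tau + bias, used in place of the dot product q . k."""
    dot = sum(a * b for a, b in zip(q, k))
    norm = math.sqrt(sum(a * a for a in q)) * math.sqrt(sum(b * b for b in k))
    return dot / norm / tau + bias

q, k = [3.0, 4.0], [30.0, 40.0]
print(sum(a * b for a, b in zip(q, k)))   # dot product grows with the norms: 250.0
print(cosine_attention_logit(q, k))       # bounded by 1 / tau regardless of norm
```

Because the cosine is bounded, the logit cannot grow with the magnitude of the activations, which is the property that makes this variant attractive for very large models.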

TimeSformer

The TimeSformer was designed for video understanding tasks, and it applied a factorized self-attention, similar to the factorized convolution kernels found in the Inception CNN architecture. Schematically, it divides a video into frames, and each frame into a square grid of patches (same as ViT). Let each patch coordinate be denoted by $x, y, t$, denoting horizontal, vertical, and time.

* A space attention layer is a self-attention layer where each query patch $q_{x,y,t}$ attends to only the key and value patches $k_{x',y',t'}, v_{x',y',t'}$ such that $t = t'$.

* A time attention layer is where the requirement is $x = x', y = y'$ instead.

The TimeSformer also considered other attention layer designs, such as the "height attention layer" where the requirement is $x = x', t = t'$. However, they found empirically that the best design interleaves one space attention layer and one time attention layer.
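The factorization can be read as a rule for which key patches a query patch may attend to. The sketch below encodes that rule, taking space attention to mean "same frame" and time attention to mean "same spatial location"; the video dimensions are toy values chosen for illustration.

```python
from itertools import product

def allowed(query, key, kind):
    """Whether a query patch (x, y, t) may attend to a key patch (x', y', t')."""
    (x, y, t), (xk, yk, tk) = query, key
    if kind == "space":
        return t == tk                # same frame, any location
    if kind == "time":
        return x == xk and y == yk    # same location, any frame
    raise ValueError(kind)

# Toy video: a 2x2 grid of patches per frame, 3 frames.
coords = list(product(range(2), range(2), range(3)))
query = (0, 1, 2)
space_keys = [k for k in coords if allowed(query, k, "space")]
time_keys = [k for k in coords if allowed(query, k, "time")]
print(len(coords), len(space_keys), len(time_keys))   # 12 4 3
```

Full spatiotemporal attention would compare the query with all 12 patches; one space layer followed by one time layer compares it with 4 and then 3.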

ViT-VQGAN

In ViT-VQGAN, there are two ViT encoders and a discriminator. One encodes 8x8 patches of an image into a list of vectors, one for each patch. The vectors can only come from a discrete "codebook", as in vector quantization. Another encodes the quantized vectors back to image patches. The training objective attempts to make the reconstruction image (the output image) faithful to the input image. The discriminator (usually a convolutional network, but other networks are allowed) attempts to decide if an image is an original real image, or a reconstructed image by the ViT.
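The quantization step, in which each patch vector is snapped to its nearest codebook entry, can be sketched as a nearest-neighbour lookup. The codebook and the encoder outputs below are toy values; a real codebook would be learned jointly with the two ViTs.

```python
def quantize(vec, codebook):
    """Return (index, code) of the codebook entry nearest to `vec`."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    index = min(range(len(codebook)), key=lambda i: sq_dist(vec, codebook[i]))
    return index, codebook[index]

codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # toy codebook
patch_vectors = [[0.9, 0.1], [0.2, 0.8], [0.1, 0.1]]          # toy encoder outputs
symbols = [quantize(v, codebook)[0] for v in patch_vectors]
print(symbols)   # [1, 2, 0]
```

The list of indices is the discrete representation of the image: the second ViT receives the corresponding codebook vectors and maps them back to image patches.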

The idea is essentially the same as vector quantized variational autoencoder (VQVAE) plus generative adversarial network (GAN).

After such a ViT-VQGAN is trained, it can be used to code an arbitrary image into a list of symbols, and code an arbitrary list of symbols into an image. The list of symbols can be used to train a standard autoregressive transformer (like GPT), for autoregressively generating an image. Further, one can take a list of caption-image pairs, convert the images into strings of symbols, and train a standard GPT-style transformer. Then at test time, one can just give an image caption, and have it autoregressively generate the image. This is the structure of Google Parti.

Others

Other examples include the visual transformer, CoAtNet, CvT, the data-efficient ViT (DeiT), etc.

In the Transformer in Transformer architecture, each layer applies a vision Transformer layer on each image patch embedding, adds the resulting tokens back to the embedding, then applies another vision Transformer layer.

Comparison with CNNs

Typically, ViT uses patch sizes larger than standard CNN kernels (3x3 to 7x7). ViT is more sensitive to the choice of the optimizer, hyperparameters, and network depth. Preprocessing with a layer of smaller-size, overlapping (stride < size) convolutional filters helps with performance and stability.

This different behavior seems to derive from the different inductive biases they possess.

A CNN applies the same set of filters across the entire image. This allows CNNs to be more data efficient and less sensitive to local perturbations. A ViT applies self-attention, allowing it to easily capture long-range relationships between patches. ViTs require more data to train, but they can keep benefiting from more training data, whereas a CNN might not improve further once the training dataset is large enough. ViT also appears more robust to input image distortions such as adversarial patches or permutations.

Applications

ViTs have been used in many computer vision tasks with excellent results, in some cases reaching the state of the art. These tasks include image classification, object detection, video deepfake detection, image segmentation, anomaly detection, image synthesis, cluster analysis, and autonomous driving.

ViTs have been used for image generation as backbones for GANs and for diffusion models (diffusion transformer, or DiT).

DINO has been demonstrated to learn useful representations for clustering images and exploring morphological profiles on biological datasets, such as images generated with the Cell Painting assay.

In 2024, a 113 billion-parameter ViT model was proposed (the largest ViT to date) for weather and climate prediction, and trained on the Frontier supercomputer with a throughput of 1.6 exaFLOPs.

See also

* Transformer (machine learning model)

* Convolutional neural network

* Attention (machine learning)

* Perceiver

* Deep learning

* PyTorch

* TensorFlow
