
Topological Deep Learning

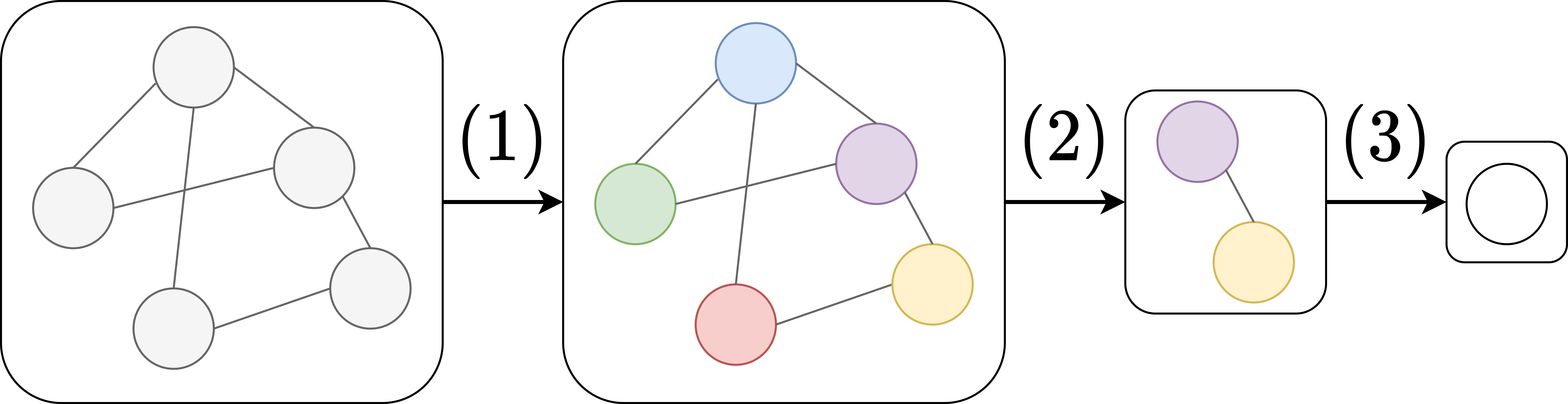

Topological deep learning (TDL) is a research field that extends deep learning to handle complex, non-Euclidean data structures. Traditional deep learning models, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), excel at processing data on regular grids and sequences. However, scientific and real-world data often live on more intricate domains, including point clouds, meshes, time series, scalar fields, graphs, or general topological spaces like simplicial complexes and CW complexes. TDL addresses this by incorporating topological concepts to process data with higher-order relationships, such as interactions among multiple entities and complex hierarchies. This approach leverages structures like simplicial complexes and hypergraphs to capture global dependencies and qu ...

Deep Learning

Deep learning is a subset of machine learning that focuses on utilizing multilayered neural networks to perform tasks such as classification, regression, and representation learning. The field takes inspiration from biological neuroscience and is centered around stacking artificial neurons into layers and "training" them to process data. The adjective "deep" refers to the use of multiple layers (ranging from three to several hundred or thousands) in the network. Methods used can be either supervised, semi-supervised or unsupervised. Some common deep learning network architectures include fully connected networks, deep belief networks, recurrent neural networks, convolutional neural networks, generative adversarial networks, transformers, and neural radiance fields. These architectures have been applied to fields including computer vision, speech recognition, natural language processing, machine translation, bioinformatics, drug design, medical image analysis, c ...

Differential Topology

In mathematics, differential topology is the field dealing with the topological properties and smooth properties of smooth manifolds. In this sense differential topology is distinct from the closely related field of differential geometry, which concerns the geometric properties of smooth manifolds, including notions of size, distance, and rigid shape. By comparison differential topology is concerned with coarser properties, such as the number of holes in a manifold, its homotopy type, or the structure of its diffeomorphism group. Because many of these coarser properties may be captured algebraically, differential topology has strong links to algebraic topology. The central goal of the field of differential topology is the classification of all smooth manifolds up to diffeomorphism. Since dimension is an invariant of smooth manifolds up to diffeomorphism type, this classification is often studied by classifying the (connected) manifolds in each dimension separately: * In ...

Feature Engineering

Feature engineering is a preprocessing step in supervised machine learning and statistical modeling which transforms raw data into a more effective set of inputs. Each input comprises several attributes, known as features. By providing models with relevant information, feature engineering significantly enhances their predictive accuracy and decision-making capability. Beyond machine learning, the principles of feature engineering are applied in various scientific fields, including physics. For example, physicists construct dimensionless numbers such as the Reynolds number in fluid dynamics, the Nusselt number in heat transfer, and the Archimedes number in sedimentation. They also develop first approximations of solutions, such as analytical solutions for the strength of materials in mechanics. Clustering: One of the applications of feature engineering has been the clustering of feature-objects or sample-objects in a dataset. In particular, feature engineering based on matrix decomposit ...
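As an illustration of the dimensionless-number example in the snippet above, the following minimal sketch turns four raw measurements into a single engineered feature, the Reynolds number Re = ρvL/μ. The function name, the dictionary keys, and the sample values are hypothetical and chosen only for this example.

```python
def reynolds_number(density, velocity, length, dynamic_viscosity):
    """Return the dimensionless Reynolds number Re = rho * v * L / mu."""
    if dynamic_viscosity <= 0:
        raise ValueError("dynamic_viscosity must be positive")
    return density * velocity * length / dynamic_viscosity


# Hypothetical raw samples in SI units: kg/m^3, m/s, m, Pa*s.
raw_samples = [
    {"density": 1000.0, "velocity": 0.5, "length": 0.02, "dynamic_viscosity": 1.0e-3},
    {"density": 1.2, "velocity": 10.0, "length": 0.1, "dynamic_viscosity": 1.8e-5},
]

# One engineered feature replaces four raw attributes per sample.
engineered = [reynolds_number(**sample) for sample in raw_samples]
```

The design choice here is the usual one in feature engineering: the combined quantity carries the physically relevant information in fewer inputs than the raw attributes.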

Random Forest

Random forests or random decision forests is an ensemble learning method for classification, regression and other tasks that works by creating a multitude of decision trees during training. For classification tasks, the output of the random forest is the class selected by most trees. For regression tasks, the output is the average of the predictions of the trees. Random forests correct for decision trees' habit of overfitting to their training set. The first algorithm for random decision forests was created in 1995 by Tin Kam Ho using the random subspace method, which, in Ho's formulation, is a way to implement the "stochastic discrimination" approach to classification proposed by Eugene Kleinberg. An extension of the algorithm was developed by Leo Breiman and Adele Cutler, who registered "Random Forests" as a trademark in 2006 (owned by Minitab, Inc.). The extension combines Breiman's ...
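The two aggregation rules described above (majority vote for classification, averaging for regression) can be sketched as follows. This is only the output-combination step, not tree training; the "trees" are assumed to be plain callables, and the function names are hypothetical.

```python
from collections import Counter
from statistics import mean


def forest_classify(trees, x):
    """Return the class predicted by the most trees (majority vote)."""
    votes = Counter(tree(x) for tree in trees)
    # On a tie, Counter.most_common keeps the class that was counted first.
    return votes.most_common(1)[0][0]


def forest_regress(trees, x):
    """Return the average of the individual tree predictions."""
    return mean(tree(x) for tree in trees)


# Hypothetical stand-ins for trained trees: simple threshold rules.
class_trees = [
    lambda x: "high" if x > 1.0 else "low",
    lambda x: "high" if x > 2.0 else "low",
    lambda x: "high" if x > 3.0 else "low",
]
value_trees = [lambda x: x + 1.0, lambda x: x + 2.0, lambda x: x + 3.0]

label = forest_classify(class_trees, 2.5)
estimate = forest_regress(value_trees, 0.0)
```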

Support Vector Machine

In machine learning, support vector machines (SVMs, also support vector networks) are supervised max-margin models with associated learning algorithms that analyze data for classification and regression analysis. Developed at AT&T Bell Laboratories, SVMs are one of the most studied models, being based on statistical learning frameworks of VC theory proposed by Vapnik (1982, 1995) and Chervonenkis (1974). In addition to performing linear classification, SVMs can efficiently perform non-linear classification using the kernel trick, representing the data only through a set of pairwise similarity comparisons between the original data points using a kernel function, which transforms them into coordinates in a higher-dimensional feature space. Thus, SVMs use the kernel trick to implicitly map their inputs into high-dimensional feature spaces, where linear classification can be performed. Being max-margin models, SVMs are resilient to noisy data (e.g., misclassified examples). ...
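The kernel trick mentioned above can be checked on a small case. The sketch below assumes a homogeneous degree-2 polynomial kernel on 2-D inputs, k(x, z) = (x · z)², and compares it with an inner product taken after an explicit map into a 3-D feature space. The kernel choice and all function names are illustrative assumptions, not taken from the snippet.

```python
import math


def dot(a, b):
    """Plain inner product of two equal-length sequences."""
    return sum(p * q for p, q in zip(a, b))


def poly2_kernel(x, z):
    """Degree-2 polynomial kernel, computed in the original 2-D space."""
    return dot(x, z) ** 2


def explicit_feature_map(x):
    """Explicit 3-D feature map whose inner product equals poly2_kernel."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2.0) * x1 * x2, x2 * x2)


x = (1.0, 2.0)
z = (3.0, -1.0)

implicit = poly2_kernel(x, z)                                  # never leaves 2-D
explicit = dot(explicit_feature_map(x), explicit_feature_map(z))  # works in 3-D
```

Both values agree up to floating-point error, which is the point of the trick: the similarity in the higher-dimensional space is obtained without ever constructing the mapped coordinates.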

Topological Data Analysis

In applied mathematics, topological data analysis (TDA) is an approach to the analysis of datasets using techniques from topology. Extraction of information from datasets that are high-dimensional, incomplete and noisy is generally challenging. TDA provides a general framework to analyze such data in a manner that is insensitive to the particular metric chosen and provides dimensionality reduction and robustness to noise. Beyond this, it inherits functoriality, a fundamental concept of modern mathematics, from its topological nature, which allows it to adapt to new mathematical tools. The initial motivation is to study the shape of data. TDA has combined algebraic topology and other tools from pure mathematics to allow mathematically rigorous study of "shape". The main tool is persistent homology, an adaptation of homology to point cloud data. Persistent homology has been applied to many types of data across many fields. Moreover, its mathematical foundation is also of theoretica ...

Signal Processing

Signal processing is an electrical engineering subfield that focuses on analyzing, modifying and synthesizing signals, such as sound, images, potential fields, seismic signals, altimetry processing, and scientific measurements. Signal processing techniques are used to optimize transmissions and digital storage efficiency, correct distorted signals, improve subjective video quality, and detect or pinpoint components of interest in a measured signal. History: According to Alan V. Oppenheim and Ronald W. Schafer, the principles of signal processing can be found in the classical numerical analysis techniques of the 17th century. They further state that the digital refinement of these techniques can be found in the digital control systems of the 1940s and 1950s. In 1948, Claude Shannon wrote the influential paper "A Mathematical Theory of Communication" which was publis ...

Graph Neural Network

Graph neural networks (GNNs) are specialized artificial neural networks that are designed for tasks whose inputs are graphs. One prominent example is molecular drug design. Each input sample is a graph representation of a molecule, where atoms form the nodes and chemical bonds between atoms form the edges. In addition to the graph representation, the input also includes known chemical properties for each of the atoms. Dataset samples may thus differ in length, reflecting the varying numbers of atoms in molecules, and the varying number of bonds between them. The task is to predict the efficacy of a given molecule for a specific medical application, like eliminating E. coli bacteria. The key design element of GNNs is the use of pairwise message passing, such that graph nodes iteratively update their representations by exchanging information with their neighbors. Several GNN architectures have been proposed, which implement different flavors of message passing, started ...
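A single round of the pairwise message passing described above can be sketched without any deep learning library. This is a simplified illustration under stated assumptions: node features are scalars, messages are averaged, and the update is a fixed 50/50 blend rather than a learned transformation as in an actual GNN layer. The function name and the toy graph are hypothetical.

```python
def message_passing_step(features, neighbors):
    """Update every node from the mean of its neighbors' current features."""
    updated = {}
    for node, value in features.items():
        messages = [features[n] for n in neighbors.get(node, [])]
        aggregated = sum(messages) / len(messages) if messages else 0.0
        # Fixed blend of own state and aggregated messages (no learned weights).
        updated[node] = 0.5 * value + 0.5 * aggregated
    return updated


# Toy path graph a - b - c with one scalar feature per node.
neighbors = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}
features = {"a": 1.0, "b": 0.0, "c": 3.0}

after_one_round = message_passing_step(features, neighbors)
after_two_rounds = message_passing_step(after_one_round, neighbors)
```

Repeating the step lets information travel further: after two rounds, node a has been influenced by node c even though they share no edge.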

Molecule

A molecule is a group of two or more atoms that are held together by attractive forces known as chemical bonds; depending on context, the term may or may not include ions that satisfy this criterion. In quantum physics, organic chemistry, and biochemistry, the distinction from ions is dropped and molecule is often used when referring to polyatomic ions. A molecule may be homonuclear, that is, it consists of atoms of one chemical element, e.g. two atoms in the oxygen molecule (O₂); or it may be heteronuclear, a chemical compound composed of more than one element, e.g. water (two hydrogen atoms and one oxygen atom; H₂O). In the kinetic theory of gases, the term molecule is often used for any gaseous particle regardless of its composition. This relaxes the requirement that a molecule contains two or more atoms, since the noble gases are individual atoms. Atoms and complexes connected by non-covalent interactions, such as hydrogen bonds or ionic ...

Euclidean Space

Euclidean space is the fundamental space of geometry, intended to represent physical space. Originally, in Euclid's Elements, it was the three-dimensional space of Euclidean geometry, but in modern mathematics there are Euclidean spaces of any positive integer dimension n, which are called Euclidean n-spaces when one wants to specify their dimension. For n equal to one or two, they are commonly called respectively Euclidean lines and Euclidean planes. The qualifier "Euclidean" is used to distinguish Euclidean spaces from other spaces that were later considered in physics and modern mathematics. Ancient Greek geometers introduced Euclidean space for modeling the physical space. Their work was collected by the ancient Greek mathematician Euclid in his Elements, with the great innovation of proving all properties of the space as theorems, by starting from a few fundamental properties, called postulates, which either were considered as evid ...

Convolutional Neural Network

A convolutional neural network (CNN) is a type of feedforward neural network that learns features via filter (or kernel) optimization. This type of deep learning network has been applied to process and make predictions from many different types of data including text, images and audio. Convolution-based networks are the de facto standard in deep learning-based approaches to computer vision and image processing, and have only recently been replaced, in some cases, by newer deep learning architectures such as the transformer. Vanishing gradients and exploding gradients, seen during backpropagation in earlier neural networks, are prevented by the regularization that comes from using shared weights over fewer connections. For example, for each neuron in the fully-connected layer, 10,000 weights would be required for processing an image sized 100 × 100 pixels. However, applying cascaded convolution (or cross-correlation) kernels, only 25 weights for each convolutio ...
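The weight counts quoted above can be reproduced with a short calculation. The sketch assumes a single-channel image and a 5 × 5 kernel; the kernel size is an assumption, since the snippet gives only the total of 25 weights. Bias terms are ignored, and the function names are hypothetical.

```python
def dense_weights_per_neuron(height, width, channels=1):
    """Weights needed by one fully connected neuron that sees every pixel."""
    return height * width * channels


def conv_kernel_weights(kernel_height, kernel_width, channels=1):
    """Weights in one convolution kernel, shared across all image positions."""
    return kernel_height * kernel_width * channels


dense = dense_weights_per_neuron(100, 100)  # 100 x 100 pixel image
shared = conv_kernel_weights(5, 5)          # assumed 5 x 5 kernel
reduction_factor = dense // shared
```

Because the kernel is reused at every position, its weight count does not grow with the image size, unlike the fully connected case.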

Image

An image or picture is a visual representation. An image can be two-dimensional, such as a drawing, painting, or photograph, or three-dimensional, such as a carving or sculpture. Images may be displayed through other media, including a projection on a surface, activation of electronic signals, or digital displays; they can also be reproduced through mechanical means, such as photography, printmaking, or photocopying. Images can also be animated through digital or physical processes. In the context of signal processing, an image is a distributed amplitude of color(s). In optics, the term image (or optical image) refers specifically to the reproduction of an object formed by light waves coming from the object. A volatile image exists or is perceived only for a short period. This may be a reflection of an object by a mirror, a projection of a camera obscura, or a scene d ...