A graph neural network (GNN) belongs to a class of artificial neural networks for processing data that can be represented as graphs.

In the more general subject of "geometric deep learning", certain existing neural network architectures can be interpreted as GNNs operating on suitably defined graphs. A convolutional neural network layer, in the context of computer vision, can be seen as a GNN applied to graphs whose nodes are pixels and in which only adjacent pixels are connected by edges. A transformer layer, in natural language processing, can be seen as a GNN applied to complete graphs whose nodes are words or tokens in a passage of natural language text.
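The two graph constructions mentioned above can be sketched in a few lines of Python. This is an illustrative sketch only: the function names `grid_graph` and `complete_graph` are hypothetical, and the choice of a 4-neighbour grid (up, down, left, right) is an assumption about what "adjacent pixels" means; a larger convolution kernel would correspond to a larger neighbourhood.

```python
from itertools import product


def grid_graph(height, width):
    """Adjacency lists for a pixel grid: each pixel is linked to its 4 direct neighbours."""
    neighbours = {}
    for r, c in product(range(height), range(width)):
        candidates = [(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]
        # Keep only the candidates that fall inside the image.
        neighbours[(r, c)] = [
            (i, j) for i, j in candidates if 0 <= i < height and 0 <= j < width
        ]
    return neighbours


def complete_graph(tokens):
    """Adjacency lists for a complete graph: every token position is linked to every other."""
    n = len(tokens)
    return {i: [j for j in range(n) if j != i] for i in range(n)}


image_graph = grid_graph(3, 3)
text_graph = complete_graph(["graphs", "are", "everywhere"])
print(image_graph[(1, 1)])  # neighbours of the centre pixel
print(text_graph[0])        # neighbours of the first token
```

In the grid graph the number of neighbours per node stays small regardless of image size, whereas in the complete graph it grows with the length of the text.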

The key design element of GNNs is the use of ''pairwise message passing'', such that graph nodes iteratively update their representations by exchanging information with their neighbors. Since their inception, several different GNN architectures have been proposed, which implement different flavors of message passing, starting with recursive or convolutional constructive approaches. Whether it is possible to define GNN architectures "going beyond" message passing, or whether every GNN can be built on message passing over suitably defined graphs, is an open research question.

Relevant application domains for GNNs include natural language processing, social networks, molecular biology, chemistry, physics and NP-hard combinatorial optimization problems.

Several open source libraries implementing graph neural networks are available, such as PyTorch Geometric (PyTorch), TensorFlow GNN (TensorFlow), jraph (Google JAX), and GraphNeuralNetworks.jl (Julia, Flux).

Architecture

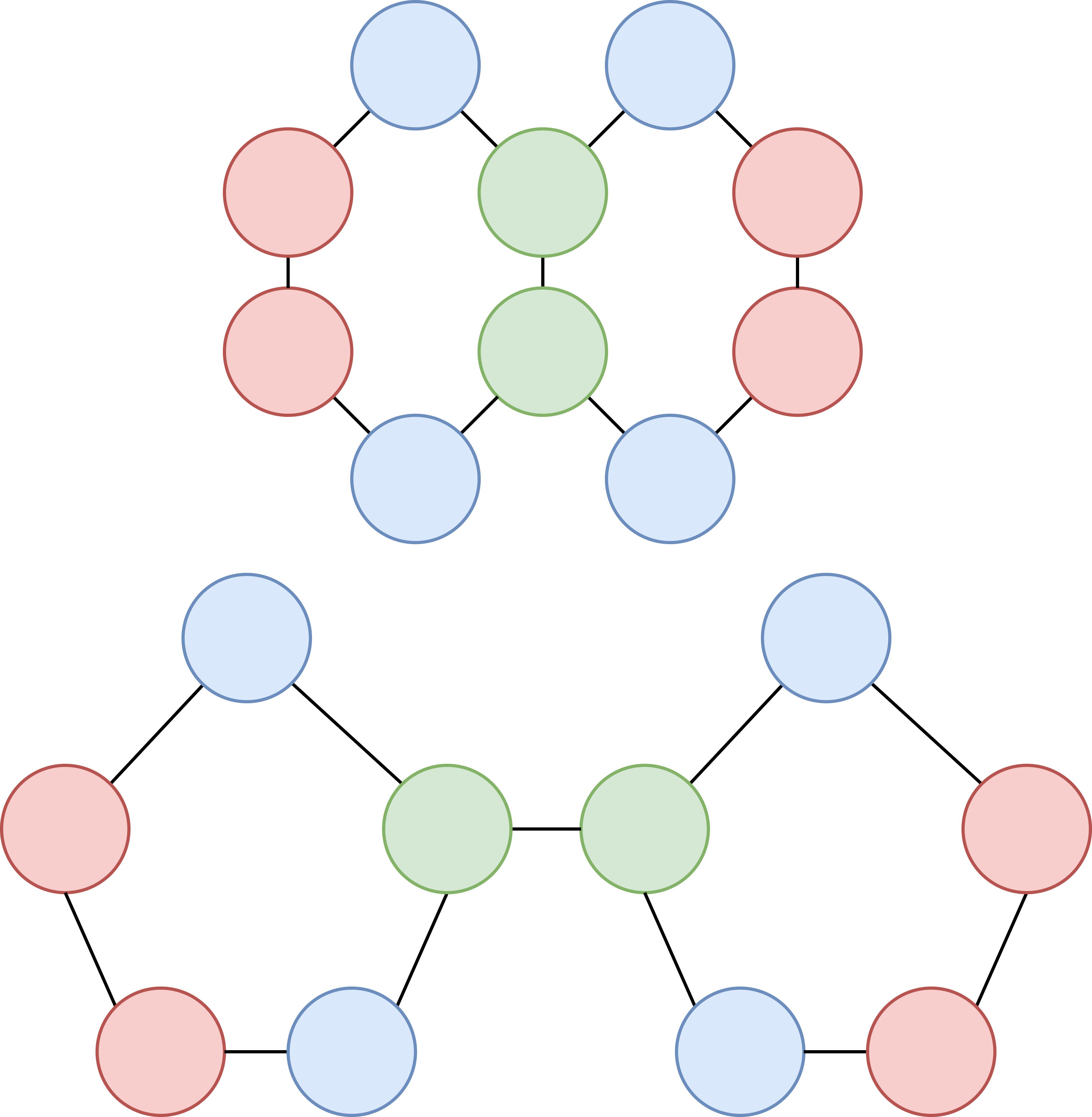

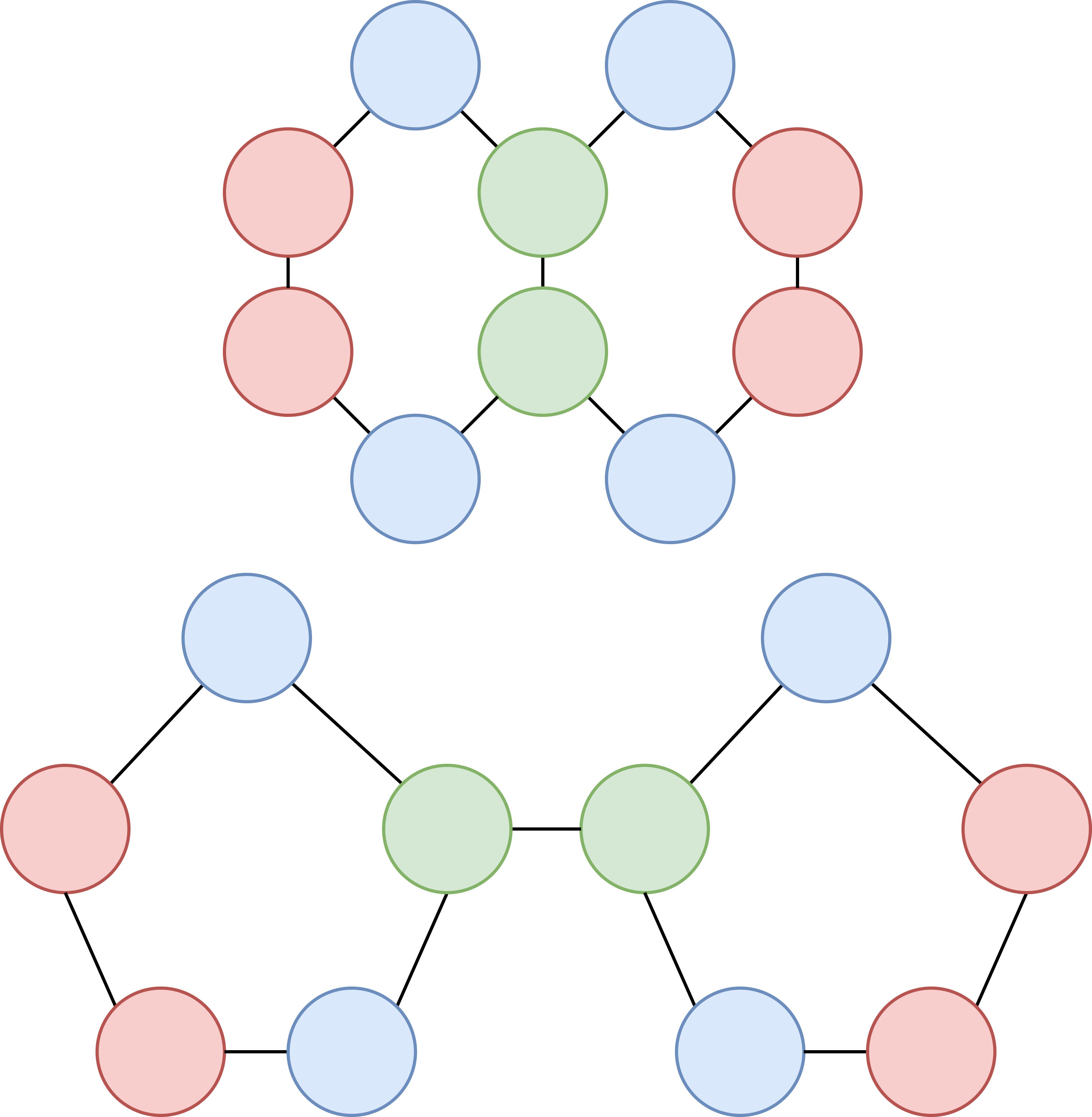

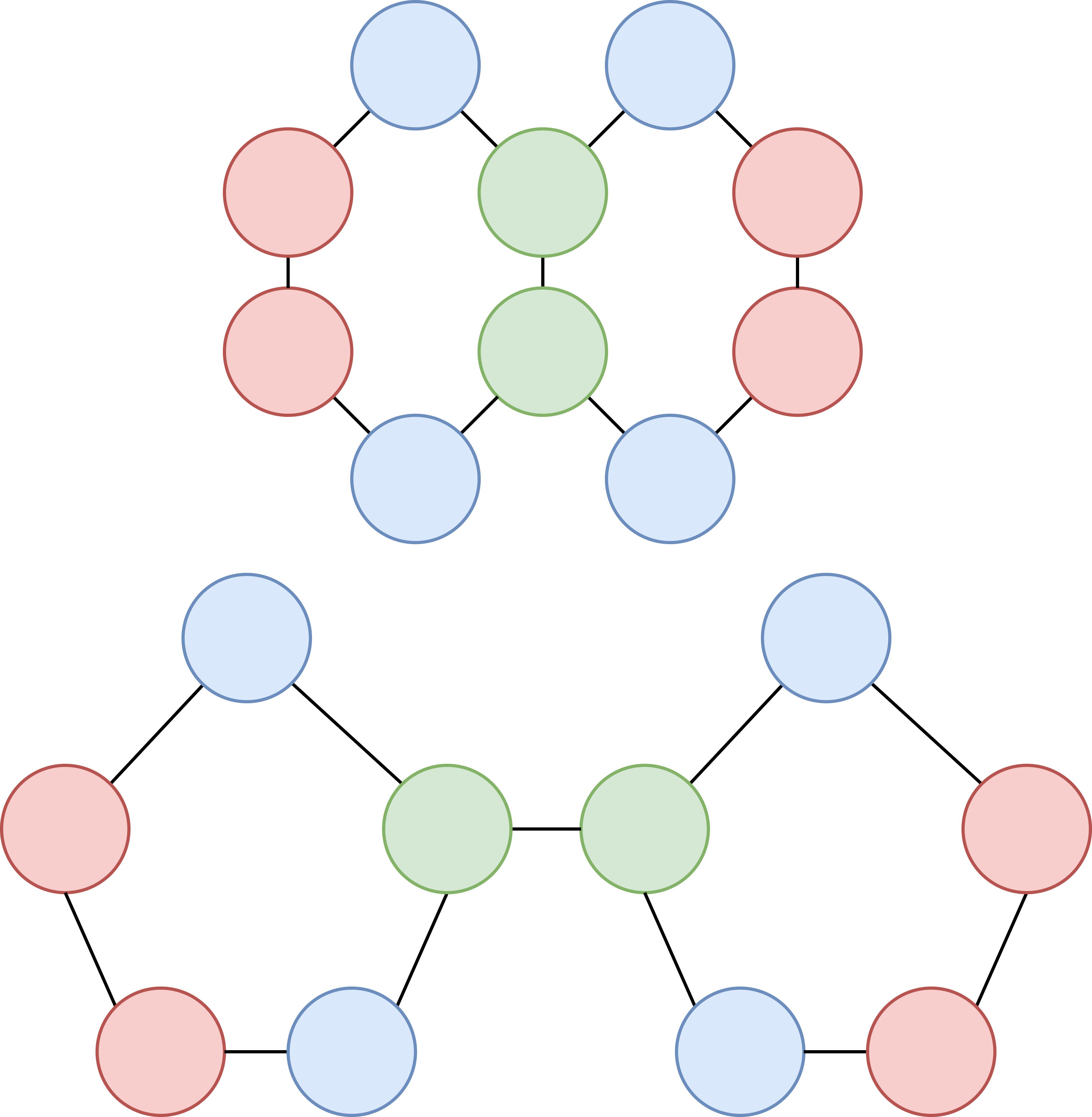

The architecture of a generic GNN implements the following fundamental layers:

Permutation equivariant: a permutation equivariant layer maps a representation of a graph into an updated representation of the same graph. In the literature, permutation equivariant layers are implemented via pairwise message passing between graph nodes.

Local pooling: a local pooling layer coarsens the graph via downsampling. Local pooling is used to increase the receptive field of a GNN, in a similar fashion to pooling layers in convolutional neural networks. Examples include k-nearest neighbours pooling and top-k pooling.

There exist different graph structures (e.g., molecules with the same atoms but different bonds) that cannot be distinguished by GNNs. More powerful GNNs operating on higher-dimension geometries such as simplicial complexes can be designed.
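A small experiment can make the indistinguishability claim concrete. The sketch below is not a GNN; it is a simplified colour refinement procedure (in the spirit of the Weisfeiler-Leman test) that mimics what neighbourhood aggregation can observe: in each round a node's label is replaced by its own label together with the multiset of its neighbours' labels. The function name `refine_colours` and the example graphs are assumptions chosen for illustration. A six-node ring and two disjoint triangles have the same number of nodes and edges but different connectivity, loosely analogous to molecules with the same atoms but different bonds.

```python
def refine_colours(adjacency, rounds):
    """Relabel each node by (own label, sorted neighbour labels) for a number of rounds.

    Returns the sorted list of final labels, which serves as a graph-level summary.
    """
    colours = {node: 0 for node in adjacency}  # all nodes start with identical features
    for _ in range(rounds):
        colours = {
            node: (colours[node], tuple(sorted(colours[v] for v in adjacency[node])))
            for node in adjacency
        }
    return sorted(colours.values())


# A ring of six nodes.
hexagon = {i: [(i - 1) % 6, (i + 1) % 6] for i in range(6)}
# Two disjoint triangles: same number of nodes and edges, different structure.
two_triangles = {0: [1, 2], 1: [0, 2], 2: [0, 1], 3: [4, 5], 4: [3, 5], 5: [3, 4]}
# A path of six nodes, included as a contrasting case.
path = {i: [j for j in (i - 1, i + 1) if 0 <= j < 6] for i in range(6)}

print(refine_colours(hexagon, 3) == refine_colours(two_triangles, 3))
print(refine_colours(path, 3) == refine_colours(hexagon, 3))
```

Every node in the ring and in the triangles has exactly two neighbours with identical labels, so the two summaries coincide, while the path differs because its end nodes have only one neighbour.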

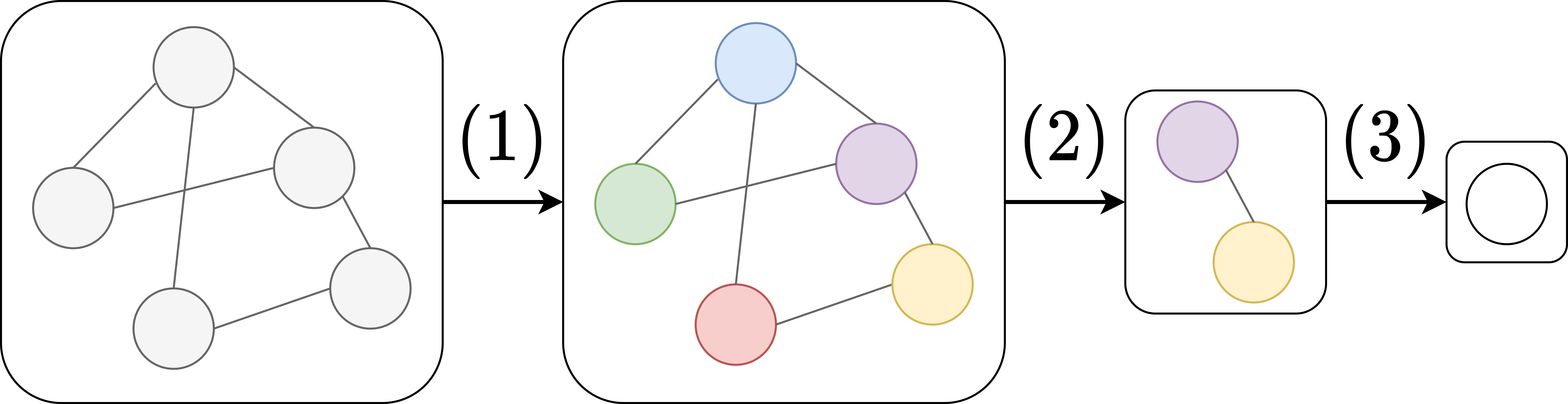

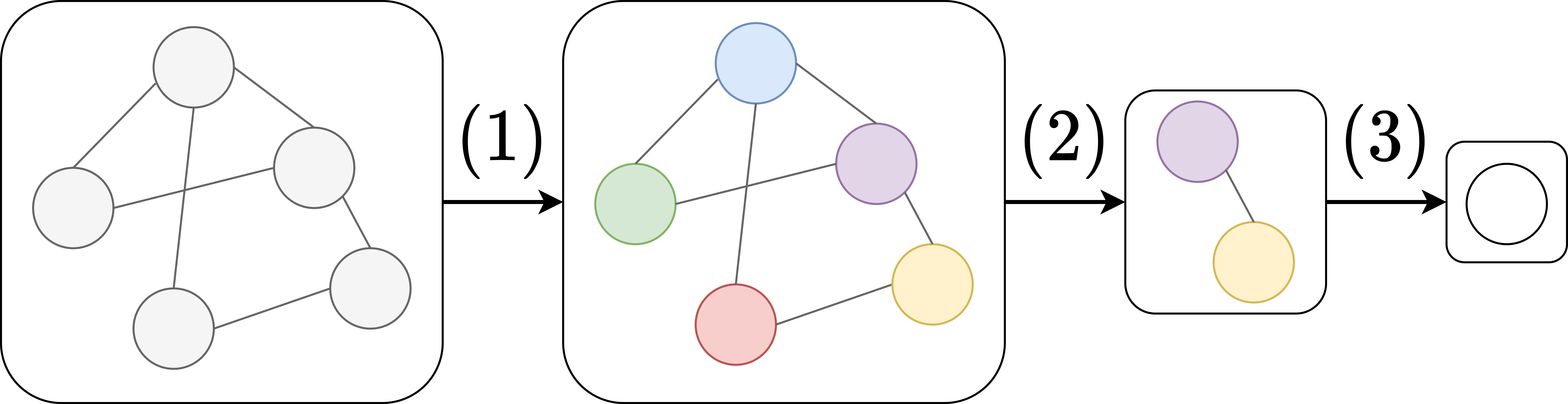

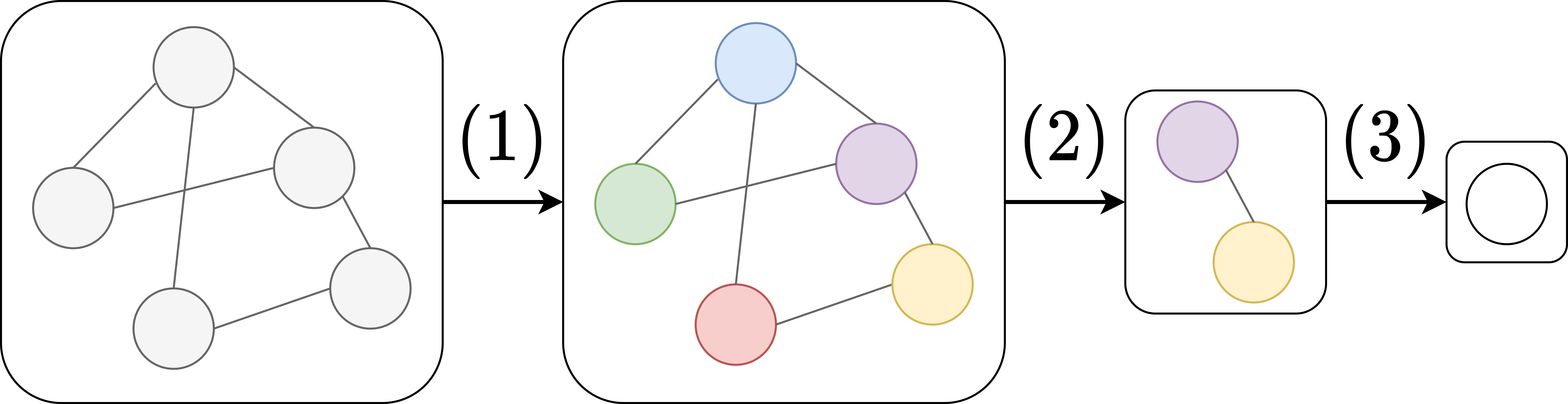

Message passing layers

Message passing layers are permutation-equivariant layers mapping a graph into an updated representation of the same graph. Formally, they can be expressed as message passing neural networks (MPNNs).

Let $G = (V, E)$ be a graph, where $V$ is the node set and $E$ is the edge set. Let $N_u$ be the neighbourhood of some node $u \in V$. Additionally, let $\mathbf{x}_u$ be the features of node $u \in V$, and $\mathbf{e}_{uv}$ be the features of edge $(u, v) \in E$. An MPNN layer can be expressed as follows:

$$\mathbf{h}_u = \phi\left(\mathbf{x}_u, \bigoplus_{v \in N_u} \psi(\mathbf{x}_u, \mathbf{x}_v, \mathbf{e}_{uv})\right)$$

where $\phi$ and $\psi$ are differentiable functions (e.g., artificial neural networks), and $\bigoplus$ is a permutation invariant aggregation operator that can accept an arbitrary number of inputs (e.g., element-wise sum, mean, or max). In particular, $\phi$ and $\psi$ are referred to as ''update'' and ''message'' functions, respectively. Intuitively, in an MPNN computational block, graph nodes ''update'' their representations by ''aggregating'' the ''messages'' received from their neighbours.
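The update, message and aggregation steps can be sketched in plain Python. This is a minimal illustration under stated assumptions, not a library implementation: the names `mpnn_layer`, `scale_by_edge` and `add_to_self` are hypothetical, the aggregation is an element-wise sum, and the message and update functions are fixed toy functions rather than trained neural networks.

```python
def vector_sum(vectors, dim):
    """Element-wise sum of a list of vectors; returns zeros if the list is empty."""
    total = [0.0] * dim
    for vec in vectors:
        total = [t + v for t, v in zip(total, vec)]
    return total


def mpnn_layer(x, neighbours, e, message, update, message_dim):
    """One message passing step over all nodes.

    x: node -> feature vector, neighbours: node -> list of nodes,
    e: (u, v) -> edge feature, message / update: the two layer functions.
    """
    h = {}
    for u in x:
        messages = [message(x[u], x[v], e[(u, v)]) for v in neighbours[u]]
        aggregated = vector_sum(messages, message_dim)  # permutation invariant
        h[u] = update(x[u], aggregated)
    return h


def scale_by_edge(x_u, x_v, e_uv):
    """Toy message function: neighbour features scaled by a scalar edge weight."""
    return [e_uv * value for value in x_v]


def add_to_self(x_u, aggregated):
    """Toy update function: add the aggregated messages to the node's own features."""
    return [a + b for a, b in zip(x_u, aggregated)]


x = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
neighbours = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}
e = {("a", "b"): 0.5, ("b", "a"): 0.5, ("b", "c"): 2.0, ("c", "b"): 2.0}

h = mpnn_layer(x, neighbours, e, scale_by_edge, add_to_self, message_dim=2)
print(h["b"])  # [2.5, 3.0]
```

Because the messages are summed, listing the neighbours of a node in a different order leaves its updated representation unchanged.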

The outputs of one or more MPNN layers are node representations for each node in the graph. Node representations can be employed for any downstream task, such as node/graph classification or edge prediction.

Graph nodes in an MPNN update their representations by aggregating information from their immediate neighbours. As such, stacking $n$ MPNN layers means that one node will be able to communicate with nodes that are at most $n$ "hops" away. In principle, to ensure that every node receives information from every other node, one would need to stack a number of MPNN layers equal to the graph diameter. However, stacking many MPNN layers may cause issues such as oversmoothing and oversquashing. Countermeasures such as skip connections (as in residual neural networks) and gated update rules can mitigate oversmoothing. Modifying the final layer to be fully adjacent, i.e., by considering the graph as a complete graph, can mitigate oversquashing in problems where long-range dependencies are required.
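The relation between the number of stacked layers and the number of hops can be checked with a short sketch. It tracks only which nodes can have influenced a given node after a number of neighbour-aggregation rounds and performs no learning. The helper names `receptive_field` and `layers_needed` are hypothetical, and `layers_needed` assumes a connected graph (it would not terminate otherwise).

```python
def receptive_field(neighbours, node, num_layers):
    """Set of nodes whose information can reach `node` after `num_layers` rounds."""
    reached = {node}
    for _ in range(num_layers):
        # One round extends the reach by one hop.
        reached = reached | {v for u in reached for v in neighbours[u]}
    return reached


def layers_needed(neighbours):
    """Smallest number of rounds after which every node has heard from every other node.

    Assumes the graph is connected; for such graphs this equals the graph diameter.
    """
    everyone = set(neighbours)
    n = 0
    while any(receptive_field(neighbours, u, n) != everyone for u in neighbours):
        n += 1
    return n


# A path of five nodes: 0 - 1 - 2 - 3 - 4.
path = {i: [j for j in (i - 1, i + 1) if 0 <= j < 5] for i in range(5)}
# A complete graph on the same five nodes.
complete = {i: [j for j in range(5) if j != i] for i in range(5)}

print(sorted(receptive_field(path, 0, 2)))  # [0, 1, 2]
print(layers_needed(path))                  # 4
print(layers_needed(complete))              # 1
```

On the complete graph a single round suffices, which is the intuition behind using a fully adjacent layer when long-range dependencies matter.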

Graph convolutional network

The graph convolutional network (GCN) was first introduced by Thomas Kipf and Max Welling in 2017. GCNs can be understood as a generalization of convolutional neural networks to graph-structured data.

The formal expression of a GCN layer reads as follows:

$$\mathbf{H} = \sigma\left(\tilde{\mathbf{D}}^{-\frac{1}{2}} \tilde{\mathbf{A}} \tilde{\mathbf{D}}^{-\frac{1}{2}} \mathbf{X} \mathbf{\Theta}\right)$$

where $\mathbf{H}$ is the matrix of node representations $\mathbf{h}_u$, $\mathbf{X}$ is the matrix of node features $\mathbf{x}_u$, $\sigma(\cdot)$ is an activation function (e.g., ReLU), $\tilde{\mathbf{A}}$ is the graph adjacency matrix with the addition of self-loops, $\tilde{\mathbf{D}}$ is the graph degree matrix with the addition of self-loops, and $\mathbf{\Theta}$ is a matrix of trainable parameters.

In particular, let $\mathbf{A}$ be the graph adjacency matrix: then, one can define $\tilde{\mathbf{A}} = \mathbf{A} + \mathbf{I}$ and $\tilde{\mathbf{D}}_{ii} = \sum_{j} \tilde{\mathbf{A}}_{ij}$, where $\mathbf{I}$ denotes the identity matrix. This normalization ensures that the eigenvalues of $\tilde{\mathbf{D}}^{-\frac{1}{2}} \tilde{\mathbf{A}} \tilde{\mathbf{D}}^{-\frac{1}{2}}$ are bounded in the range $[-1, 1]$.
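The GCN layer described in this section can be sketched with plain Python lists. This is a minimal illustration under stated assumptions rather than a reference implementation: the helper names `matmul` and `gcn_layer` are hypothetical, matrices are dense lists of lists, the activation is ReLU, and the parameter matrix is a fixed toy value instead of trained weights.

```python
import math


def matmul(a, b):
    """Dense matrix product of two lists of lists."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def gcn_layer(adjacency, features, weights):
    """One GCN layer: ReLU(D~^(-1/2) A~ D~^(-1/2) X Theta)."""
    n = len(adjacency)
    # Add self-loops: A~ = A + I.
    a_tilde = [
        [adjacency[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
        for i in range(n)
    ]
    # Degrees including self-loops: D~_ii = sum_j A~_ij.
    degree = [sum(row) for row in a_tilde]
    # Symmetric normalization: entry (i, j) is A~_ij / sqrt(D~_ii * D~_jj).
    a_hat = [
        [a_tilde[i][j] / math.sqrt(degree[i] * degree[j]) for j in range(n)]
        for i in range(n)
    ]
    pre_activation = matmul(matmul(a_hat, features), weights)
    return [[max(0.0, value) for value in row] for row in pre_activation]


# A path of three nodes with one input feature per node.
A = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
X = [[1.0], [2.0], [3.0]]
Theta = [[1.0, -1.0]]  # toy parameters mapping 1 input feature to 2 outputs

H = gcn_layer(A, X, Theta)
for row in H:
    print(row)
```

With these toy parameters the second output column is the negation of the first before the activation, so ReLU sets it to zero for every node.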
