Mixed Models
A mixed model, mixed-effects model or mixed error-component model is a statistical model containing both fixed effects and random effects. These models are useful in a wide variety of disciplines in the physical, biological and social sciences.

They are particularly useful in settings where repeated measurements are made on the same statistical units (see also longitudinal study), or where measurements are made on clusters of related statistical units. Mixed models are often preferred over traditional analysis of variance (ANOVA) regression models because they do not rely on the assumption of independent observations. They are also flexible in dealing with missing values and uneven spacing of repeated measurements. Mixed model analysis allows measurements to be explicitly modeled with a wider variety of correlation and variance–covariance structures, avoiding biased estimates.

This page will discuss mainly linear mixed-effects models rather than generalized linear mixed models or nonlinear mixed-effects models.

Qualitative Description

Linear mixed models (LMMs) are statistical models that incorporate fixed and random effects to accurately represent non-independent data structures. LMM is an alternative to analysis of variance. Standard ANOVA assumes the independence of observations within each group; however, this assumption may not hold in non-independent data, such as multilevel/hierarchical, longitudinal, or correlated datasets.

Non-independent datasets are ones in which the variability between outcomes is partly due to correlations within groups or between groups. Mixed models properly account for nested/hierarchical data structures, where observations are influenced by their nested associations. For example, when studying education methods involving multiple schools, there are multiple levels of variables to consider. The individual (lower) level comprises individual students or teachers within the school. The observations obtained from a student or teacher are nested within their school; for example, Student A is a unit within School A. The next higher level is the school, which contains multiple individual students and teachers and influences the observations obtained from them. For example, School A and School B are higher-level units, each with its own set of students (Student A and Student B, respectively). This represents a hierarchical data scheme, and linear mixed models are one solution for modeling such data.
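To make the school example concrete, the sketch below simulates scores for students nested in schools under a simple random-intercept model. The function names and all numeric settings are hypothetical. Because every student in a school shares that school's random draw, scores within a school are correlated. The one-way ANOVA estimator of the intraclass correlation (ICC) quantifies that correlation; it is a classical moment estimator used here only for illustration, not the likelihood-based estimate a fitted mixed model would report.

```python
import random
import statistics


def simulate_schools(n_schools, n_students, grand_mean, school_sd, student_sd, seed=0):
    """Simulate scores for students nested within schools (hypothetical data).

    Each school draws one random offset that all of its students share,
    which is what makes observations within a school non-independent.
    """
    rng = random.Random(seed)
    data = {}
    for s in range(n_schools):
        school_effect = rng.gauss(0.0, school_sd)
        data[f"school_{s}"] = [
            grand_mean + school_effect + rng.gauss(0.0, student_sd)
            for _ in range(n_students)
        ]
    return data


def anova_icc(data):
    """One-way ANOVA estimate of the intraclass correlation (balanced data)."""
    groups = list(data.values())
    k, n = len(groups), len(groups[0])
    grand = statistics.fmean(x for g in groups for x in g)
    means = [statistics.fmean(g) for g in groups]
    msb = n * sum((m - grand) ** 2 for m in means) / (k - 1)
    msw = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g) / (k * (n - 1))
    return (msb - msw) / (msb + (n - 1) * msw)


# School variance 1, student variance 3, so the true ICC is 1 / (1 + 3) = 0.25.
scores = simulate_schools(200, 20, grand_mean=50.0, school_sd=1.0, student_sd=3 ** 0.5)
icc_hat = anova_icc(scores)  # should land near 0.25
```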

LMMs let us estimate effects both between and within levels while correcting standard errors for the non-independence embedded in the data structure. In experimental fields such as social psychology, psycholinguistics, and cognitive psychology (and neuroscience), where studies often involve multiple grouping variables, failing to account for random effects can lead to inflated Type I error rates and unreliable conclusions. For instance, when analyzing data from experiments that involve both samples of participants and samples of stimuli (e.g., images or scenarios), ignoring variation in either of these grouping variables (e.g., by averaging over stimuli) can result in misleading conclusions. In such cases, researchers can instead treat both participant and stimulus as random effects with LMMs, and in doing so correctly account for the variation in their data across multiple grouping variables. Similarly, when analyzing data from comparative longitudinal surveys, failing to include random effects at all relevant levels (such as country and country-year) can significantly distort the results.
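The standard-error correction can be quantified in the simplest setting: balanced data with a single grouping factor and a random intercept. The sketch below compares the sampling variance of the grand mean computed as if all observations were independent with the variance that accounts for the shared group effect; their ratio is the Kish design effect, 1 + (n − 1)·ICC. The function names and the study sizes are hypothetical, and crossed designs (participants × stimuli) need additional variance terms that this sketch omits.

```python
def grand_mean_variances(n_groups, n_per_group, var_group, var_resid):
    """Sampling variance of the grand mean in balanced random-intercept data.

    Returns (naive, clustered): the first wrongly treats all observations as
    independent; the second accounts for the group effect shared within groups.
    """
    n_obs = n_groups * n_per_group
    naive = (var_group + var_resid) / n_obs
    clustered = (var_group + var_resid / n_per_group) / n_groups
    return naive, clustered


def design_effect(n_per_group, icc):
    """Kish design effect: factor by which clustering inflates the variance."""
    return 1.0 + (n_per_group - 1) * icc


# Hypothetical study: 30 participants, 20 trials each, ICC = 1 / (1 + 3) = 0.25.
naive, clustered = grand_mean_variances(30, 20, var_group=1.0, var_resid=3.0)
se_ratio = (clustered / naive) ** 0.5  # how much too small the naive standard error is
```

With these settings the naive standard error is too small by a factor of roughly 2.4, which is the mechanism behind the inflated Type I error rates described above.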


The Fixed Effect

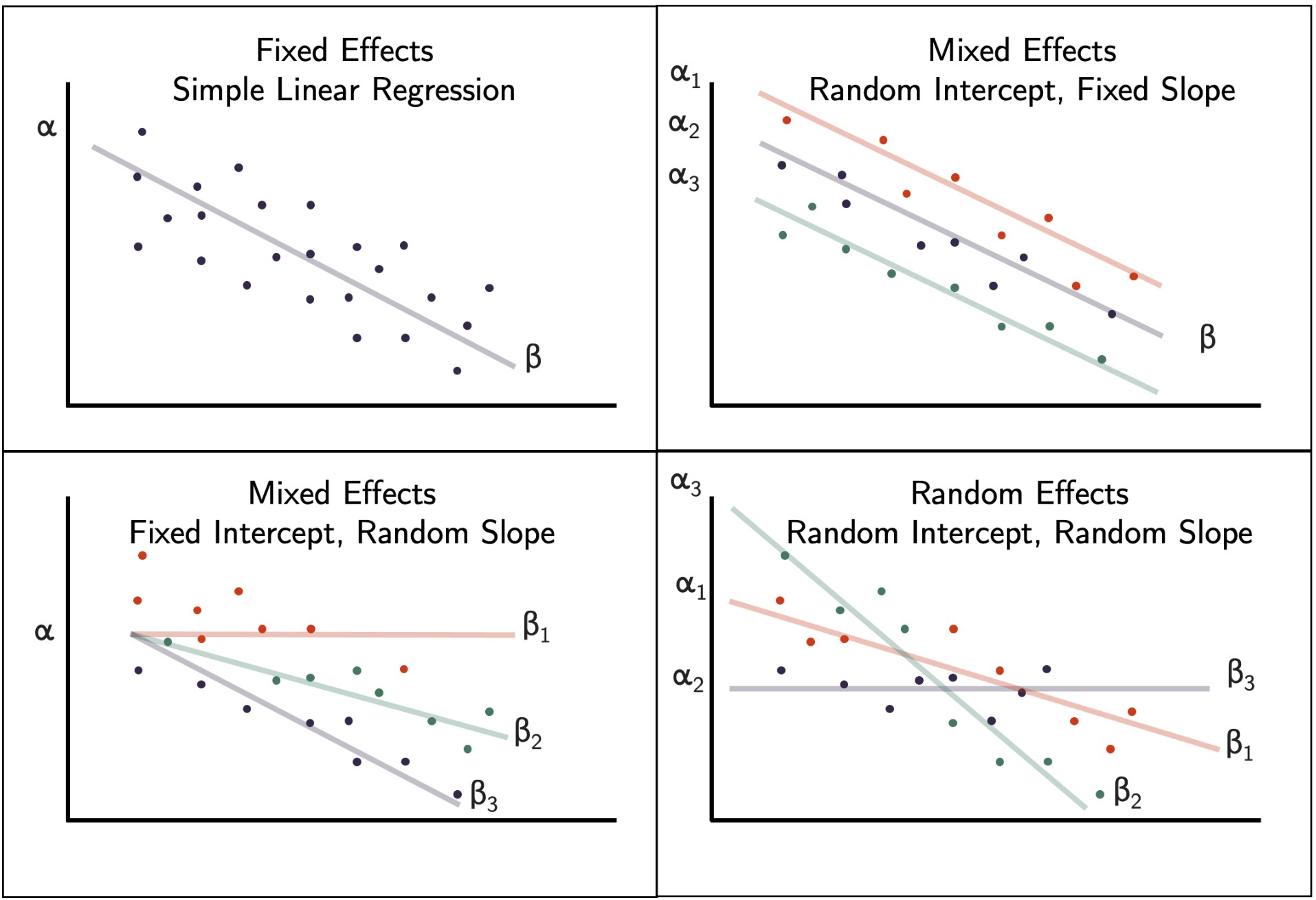

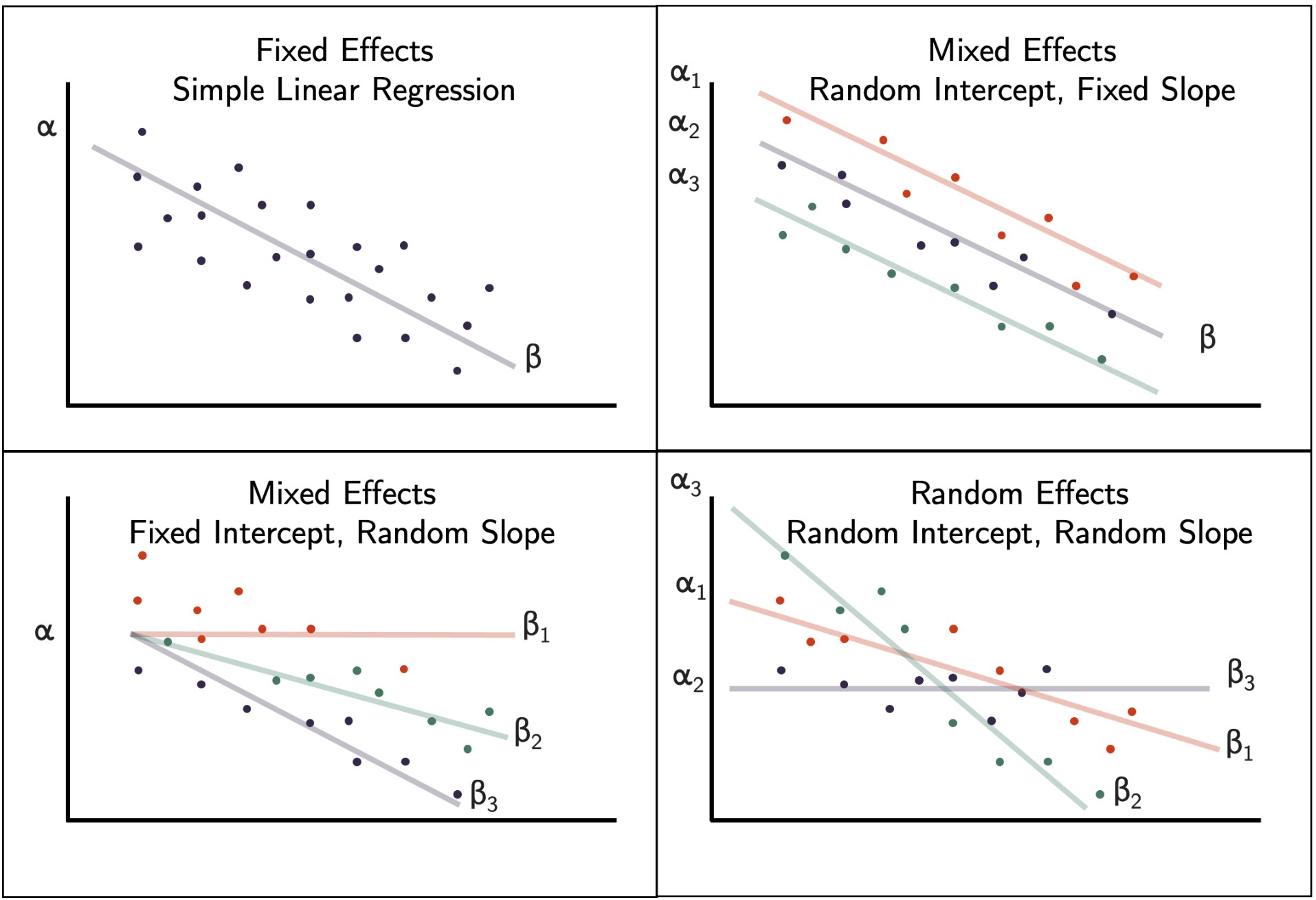

Fixed effects encapsulate the tendencies or trends that are consistent at the levels of primary interest. These effects are considered fixed because they are non-random and assumed to be constant for the population being studied. For example, when studying education, a fixed effect could represent overall school-level effects that are consistent across all schools. While the hierarchy of the data set is typically obvious, the specific fixed effects that affect the average responses for all subjects must be specified. Some fixed-effect coefficients are sufficient without corresponding random effects, whereas other fixed coefficients only represent an average over individual units whose own values are random. Which case applies may be determined by incorporating random intercepts and slopes.

In most situations, several related models are considered, and the one that best represents the data is adopted.
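The idea that a fixed coefficient can be "only an average where the individual units are random" can be illustrated with a simulation. The sketch below draws a random intercept and a random slope for each hypothetical unit around fixed values β0 and β1 (all numbers are arbitrary assumptions), then fits each unit separately by ordinary least squares. This two-stage approach is only an illustration; a mixed model estimates the fixed effects and the random-effect variances jointly and shrinks the unit-level estimates.

```python
import numpy as np


def simulate_unit_slopes(n_units=50, n_obs=8, beta0=10.0, beta1=2.0,
                         sd_intercept=1.5, sd_slope=0.5, sd_resid=1.0, seed=42):
    """Return per-unit OLS slopes for hypothetical units with random intercepts and slopes."""
    rng = np.random.default_rng(seed)
    x = np.arange(n_obs, dtype=float)
    slopes = np.empty(n_units)
    for j in range(n_units):
        b0 = beta0 + rng.normal(0.0, sd_intercept)  # random intercept for unit j
        b1 = beta1 + rng.normal(0.0, sd_slope)      # random slope for unit j
        y = b0 + b1 * x + rng.normal(0.0, sd_resid, size=n_obs)
        slopes[j] = np.polyfit(x, y, deg=1)[0]      # polyfit returns [slope, intercept]
    return slopes


slopes = simulate_unit_slopes()
average_slope = slopes.mean()      # close to the fixed slope beta1 = 2.0
slope_spread = slopes.std(ddof=1)  # units genuinely differ; a random slope models this spread
```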

The Random Effect

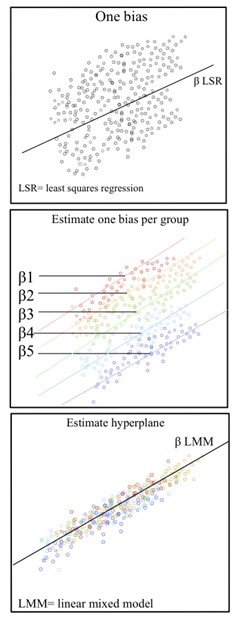

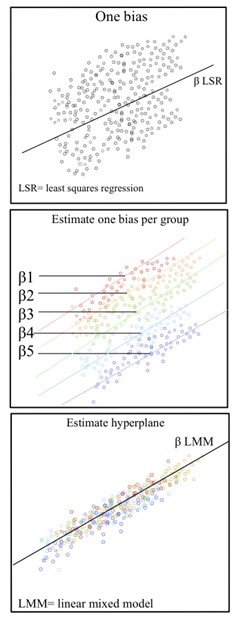

A key component of the mixed model is the incorporation of random effects alongside the fixed effects. Fixed effects are often fitted to represent the underlying model. In linear mixed models, the true regression of the population is linear, with coefficients β, and the fixed effects are fitted at the highest level. Random effects introduce statistical variability at different levels of the data hierarchy. They account for unmeasured sources of variance that affect certain groups in the data: for example, the differences between student 1 and student 2 in the same class, or the differences between class 1 and class 2 in the same school.
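The student/class/school example implies a specific correlation pattern. In a three-level random-intercept model with school, class and student variance components, two students in the same class share both the school and class effects, while two students in different classes of the same school share only the school effect. The sketch below computes those implied correlations and checks them against simulated data; the variance values, the two-classes-by-two-students layout, and all function names are hypothetical.

```python
import random
import statistics


def implied_correlations(var_school, var_class, var_student):
    """Correlation between two students implied by a three-level random-intercept model."""
    total = var_school + var_class + var_student
    same_class = (var_school + var_class) / total  # share school and class effects
    same_school_other_class = var_school / total   # share only the school effect
    return same_class, same_school_other_class


def simulate_three_level(n_schools, var_school, var_class, var_student, seed=1):
    """Return scores[s][c][i] for 2 classes per school and 2 students per class."""
    rng = random.Random(seed)
    scores = []
    for _ in range(n_schools):
        school = rng.gauss(0.0, var_school ** 0.5)
        classes = []
        for _ in range(2):
            cls = rng.gauss(0.0, var_class ** 0.5)
            classes.append([school + cls + rng.gauss(0.0, var_student ** 0.5) for _ in range(2)])
        scores.append(classes)
    return scores


def pearson(xs, ys):
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


data = simulate_three_level(2000, var_school=2.0, var_class=1.0, var_student=1.0)
# Student 1 vs student 2 in the same class, across schools.
emp_class = pearson([s[0][0] for s in data], [s[0][1] for s in data])
# Student 1 of class 1 vs student 1 of class 2 in the same school.
emp_school = pearson([s[0][0] for s in data], [s[1][0] for s in data])
```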

History and current status

Ronald Fisher introduced random effects models to study the correlations of trait values between relatives. In the 1950s, Charles Roy Henderson provided best linear unbiased estimates of fixed effects and best linear unbiased predictions of random effects. Subsequently, mixed modeling has become a major area of statistical research, including work on computation of maximum likelihood estimates, non-linear mixed effects models, missing data in mixed effects models, and Bayesian estimation of mixed effects models. Mixed models are applied in many disciplines where multiple correlated measurements are made on each unit of interest. They are prominently used in research involving human and animal subjects in fields ranging from genetics to marketing, and have also been used in baseball and industrial statistics.

Mixed linear model association analysis has helped reduce false-positive associations. Populations are deeply interconnected, and the relatedness structure of population dynamics is extremely difficult to model without the use of mixed models. Linear mixed models may not, however, be the only solution. LMMs assume a constant residual variance, an assumption that is sometimes violated when accounting for deeply associated continuous and binary traits.

Definition

In matrix notation a linear mixed model can be represented as

$$y = X\beta + Zu + \epsilon$$

where

* $y$ is a known vector of observations, with mean $E(y) = X\beta$;

* $\beta$ is an unknown vector of fixed effects;

* $u$ is an unknown vector of random effects, with mean $E(u) = 0$ and variance–covariance matrix $\operatorname{var}(u) = G$;

* $\epsilon$ is an unknown vector of random errors, with mean $E(\epsilon) = 0$ and variance $\operatorname{var}(\epsilon) = R$;

* $X$ is the known design matrix for the fixed effects, relating the observations $y$ to $\beta$;

* $Z$ is the known design matrix for the random effects, relating the observations $y$ to $u$.
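Taking expectations and variances of the model equation shows what the random effects do to the observations. Assuming $u$ and $\epsilon$ are uncorrelated, the random effects leave the mean at $X\beta$ but add the term $ZGZ'$ to the covariance of $y$; this added term is the source of the within-group correlation:

```latex
\begin{aligned}
E(y) &= X\beta + Z\,E(u) + E(\epsilon) = X\beta, \\
\operatorname{var}(y) &= Z\operatorname{var}(u)Z' + \operatorname{var}(\epsilon) = ZGZ' + R \equiv V, \\
\text{so under normality: } y &\sim N(X\beta,\; ZGZ' + R).
\end{aligned}
```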

For example, if each observation can belong to any zero or more of $k$ categories, then $Z$, which has one row per observation, can be chosen to have $k$ columns, where a value of $1$ for a matrix element of $Z$ indicates that an observation is known to belong to a category and a value of $0$ indicates that an observation is known to not belong to a category. The inferred value of $u$ for a category is then a category-specific intercept. If $Z$ has additional columns, where the non-zero values are instead the value of an independent variable for an observation, then the corresponding inferred value of $u$ is a category-specific slope for that independent variable. The prior distribution for the category intercepts and slopes is described by the covariance matrix $G$.
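The construction of $Z$ described above can be sketched in a few lines. This assumes each observation is described by a (possibly empty) set of category indices and a single independent variable $x$; the helper name `build_z` and the column layout ($k$ intercept columns followed by $k$ slope columns) are illustrative choices, not a fixed convention.

```python
import numpy as np


def build_z(categories, x, n_categories):
    """Random-effects design matrix with one intercept and one slope column per category.

    categories: one set of category indices per observation (may be empty).
    x: value of the independent variable for each observation.
    """
    z = np.zeros((len(categories), 2 * n_categories))
    for row, (cats, xi) in enumerate(zip(categories, x)):
        for k in cats:
            z[row, k] = 1.0                # indicator: observation belongs to category k
            z[row, n_categories + k] = xi  # x value feeds category k's slope
    return z


# Four hypothetical observations; the second belongs to two categories, the third to none.
Z = build_z([{0}, {0, 2}, set(), {1}], [1.5, 2.0, 3.0, -1.0], n_categories=3)
# u stacks the category intercepts (first 3 entries) and slopes (last 3 entries).
u = np.array([0.5, -0.2, 0.1, 0.3, 0.0, -0.4])
random_part = Z @ u  # each observation's summed category-specific intercepts and slopes
```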

Estimation

The joint density of $y$ and $u$ can be written as $f(y, u) = f(y \mid u)\, f(u)$. Assuming normality, $u \sim N(0, G)$, $\epsilon \sim N(0, R)$ and $\operatorname{Cov}(u, \epsilon) = 0$, and maximizing the joint density over $\beta$ and $u$, gives Henderson's "mixed model equations" (MME) for linear mixed models:

$$
\begin{pmatrix} X'R^{-1}X & X'R^{-1}Z \\ Z'R^{-1}X & Z'R^{-1}Z + G^{-1} \end{pmatrix}
\begin{pmatrix} \hat{\beta} \\ \hat{u} \end{pmatrix}
=
\begin{pmatrix} X'R^{-1}y \\ Z'R^{-1}y \end{pmatrix}
$$

where, for example, $X'$ is the matrix transpose of $X$ and $R^{-1}$ is the matrix inverse of $R$.

The solutions to the MME, $\hat{\beta}$ and $\hat{u}$, are best linear unbiased estimates and predictors for $\beta$ and $u$, respectively. This is a consequence of the Gauss–Markov theorem in its generalized form, which applies when the conditional variance of the outcome is not a scalar multiple of the identity matrix. When the conditional variance is known, the inverse-variance-weighted least squares estimate is the best linear unbiased estimate. However, the conditional variance is rarely, if ever, known, so it is desirable to estimate the variance components jointly with the weighted parameter estimates when solving the MME.
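The MME can be checked numerically. The sketch below assumes $G$ and $R$ are known (which, as noted above, is rarely true in practice), solves the MME directly, and compares the result with the equivalent marginal-model formulas: the generalized least squares estimate $\hat\beta = (X'V^{-1}X)^{-1}X'V^{-1}y$ and the BLUP $\hat u = GZ'V^{-1}(y - X\hat\beta)$ with $V = ZGZ' + R$. The data and function names are hypothetical, and dense inverses are used for clarity, not efficiency.

```python
import numpy as np


def solve_mme(X, Z, y, G, R):
    """Solve Henderson's mixed model equations for (beta_hat, u_hat), with G and R known."""
    R_inv = np.linalg.inv(R)
    lhs = np.block([
        [X.T @ R_inv @ X, X.T @ R_inv @ Z],
        [Z.T @ R_inv @ X, Z.T @ R_inv @ Z + np.linalg.inv(G)],
    ])
    rhs = np.concatenate([X.T @ R_inv @ y, Z.T @ R_inv @ y])
    solution = np.linalg.solve(lhs, rhs)
    p = X.shape[1]
    return solution[:p], solution[p:]


def gls_and_blup(X, Z, y, G, R):
    """Same quantities via the marginal model y ~ N(X beta, V), V = Z G Z' + R."""
    V_inv = np.linalg.inv(Z @ G @ Z.T + R)
    beta = np.linalg.solve(X.T @ V_inv @ X, X.T @ V_inv @ y)  # GLS estimate
    u = G @ Z.T @ V_inv @ (y - X @ beta)                       # BLUP of random effects
    return beta, u


# Hypothetical data: 4 groups of 5 observations, one random intercept per group.
rng = np.random.default_rng(7)
groups = np.repeat(np.arange(4), 5)
x = rng.normal(size=groups.size)
X = np.column_stack([np.ones_like(x), x])
Z = (groups[:, None] == np.arange(4)).astype(float)  # group indicator columns
G = 2.0 * np.eye(4)
R = np.eye(groups.size)
y = X @ np.array([1.0, 0.5]) + Z @ rng.normal(0.0, np.sqrt(2.0), 4) + rng.normal(size=groups.size)

beta_mme, u_mme = solve_mme(X, Z, y, G, R)
beta_gls, u_blup = gls_and_blup(X, Z, y, G, R)
```

The two routes agree up to floating-point error, which is one way to see why the MME solutions are the best linear unbiased estimates and predictors when the variances are known.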

Choice of random effects structure

One choice that analysts face with mixed models is which random effects (i.e., grouping variables, random intercepts, and random slopes) to include. One prominent recommendation in the context of confirmatory hypothesis testing is to adopt a "maximal" random effects structure, including all possible random effects justified by the experimental design, as a means to control Type I error rates.

Software

One method used to fit such mixed models is the expectation–maximization (EM) algorithm, in which the random effects are treated as unobserved (latent) data and the variance components are estimated by maximizing the expected joint likelihood. Currently, this is the method implemented in statistical software such as the statsmodels package for Python and SAS (PROC MIXED), and it is used as an initial step only in lme() from R's nlme package. The solution to the mixed model equations is a maximum likelihood estimate when the distribution of the errors is normal.
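A minimal EM sketch, assuming the simplest case: one grouping factor with a random intercept, $y_{ij} = \mu + u_i + \epsilon_{ij}$, $u_i \sim N(0, \sigma_u^2)$, $\epsilon_{ij} \sim N(0, \sigma_e^2)$. The random intercepts are the unobserved data: the E-step computes their posterior mean and variance under the current parameters, and the M-step updates $\mu$, $\sigma_u^2$ and $\sigma_e^2$ in closed form. This yields maximum likelihood (not REML) estimates. The function name, starting values and fixed iteration count are illustrative choices, not how statsmodels, SAS or nlme implement EM.

```python
import numpy as np


def em_random_intercept(y, groups, n_iter=500):
    """ML estimates of (mu, sigma2_u, sigma2_e) for y_ij = mu + u_i + e_ij via EM."""
    y = np.asarray(y, dtype=float)
    _, idx = np.unique(groups, return_inverse=True)
    k = idx.max() + 1
    n_i = np.bincount(idx, minlength=k).astype(float)
    mu = y.mean()
    sigma2_u = sigma2_e = y.var() / 2.0  # crude starting values
    for _ in range(n_iter):
        # E-step: posterior mean m_i and variance v_i of each random intercept u_i.
        shrink = sigma2_u / (n_i * sigma2_u + sigma2_e)
        m = shrink * np.bincount(idx, weights=y - mu, minlength=k)
        v = shrink * sigma2_e
        # M-step: closed-form maximizers of the expected complete-data log-likelihood.
        mu = np.mean(y - m[idx])
        sigma2_u = np.mean(m ** 2 + v)
        sigma2_e = np.mean((y - mu - m[idx]) ** 2 + v[idx])
    return mu, sigma2_u, sigma2_e


# Hypothetical balanced data: 30 groups of 10, true sigma2_u = 4, sigma2_e = 1.
rng = np.random.default_rng(0)
k, n = 30, 10
g = np.repeat(np.arange(k), n)
y = 5.0 + rng.normal(0.0, 2.0, k)[g] + rng.normal(0.0, 1.0, k * n)
mu_hat, s2u_hat, s2e_hat = em_random_intercept(y, g)
```

For balanced data the ML estimates also have a closed form (within-group mean square for $\sigma_e^2$, and a between-group correction for $\sigma_u^2$), which makes a convenient check on the iteration.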

There are several other methods to fit mixed models. These include using EM initially and then switching to Newton-Raphson (used by lme() in R's nlme package); using penalized least squares to obtain a profiled log-likelihood that depends only on the (low-dimensional) variance–covariance parameters of $u$, i.e., its covariance matrix $G$, and then applying modern direct optimization to that reduced objective function (used by lmer() in R's lme4 package and by the Julia package MixedModels.jl); and directly optimizing the likelihood (used by, e.g., R's glmmTMB). Notably, while the canonical form proposed by Henderson is useful for theory, many popular software packages use a different formulation for numerical computation in order to take advantage of sparse matrix methods (e.g. lme4 and MixedModels.jl).
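The profiled-likelihood idea can be sketched for a single random intercept, with $\theta = \sigma_u/\sigma_e$ as the only variance parameter (for a scalar random intercept this is the relative covariance parameter lme4 reports). For fixed $\theta$, $V_\theta = I + \theta^2 ZZ'$, and both $\hat\beta(\theta)$ and $\hat\sigma^2(\theta)$ have closed forms, so the ML deviance reduces to a one-dimensional function of $\theta$. This is a dense-matrix sketch under those assumptions: it does not use lme4's penalized least squares with sparse Cholesky factors, and a golden-section search (helper names are hypothetical) stands in for the optimizers real packages use. Golden-section search is adequate here because, for a balanced one-factor design, the profiled deviance has a single minimum in $\theta$.

```python
import numpy as np


def profiled_deviance(theta, X, Z, y):
    """-2 log-likelihood with beta and sigma^2 profiled out, as a function of theta only."""
    n = len(y)
    V = np.eye(n) + theta ** 2 * (Z @ Z.T)  # covariance of y up to the factor sigma^2
    V_inv = np.linalg.inv(V)
    beta = np.linalg.solve(X.T @ V_inv @ X, X.T @ V_inv @ y)  # GLS given theta
    r = y - X @ beta
    sigma2 = (r @ V_inv @ r) / n                               # ML residual variance given theta
    _, logdet = np.linalg.slogdet(V)
    return n * (1.0 + np.log(2.0 * np.pi * sigma2)) + logdet, beta, sigma2


def golden_section_min(f, lo, hi, tol=1e-8):
    """Minimize a unimodal scalar function on [lo, hi]."""
    inv_phi = (np.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    c, d = b - inv_phi * (b - a), a + inv_phi * (b - a)
    fc, fd = f(c), f(d)
    while b - a > tol:
        if fc < fd:  # minimum lies in [a, d]
            b, d, fd = d, c, fc
            c = b - inv_phi * (b - a)
            fc = f(c)
        else:        # minimum lies in [c, b]
            a, c, fc = c, d, fd
            d = a + inv_phi * (b - a)
            fd = f(d)
    return (a + b) / 2.0


# Hypothetical balanced data: 30 groups of 10, intercept-only fixed part.
rng = np.random.default_rng(1)
k, m = 30, 10
g = np.repeat(np.arange(k), m)
Z = (g[:, None] == np.arange(k)).astype(float)
X = np.ones((k * m, 1))
y = 5.0 + Z @ rng.normal(0.0, 2.0, k) + rng.normal(0.0, 1.0, k * m)

theta_hat = golden_section_min(lambda t: profiled_deviance(t, X, Z, y)[0], 0.0, 10.0)
_, beta_hat, sigma2_e = profiled_deviance(theta_hat, X, Z, y)
sigma2_u = theta_hat ** 2 * sigma2_e
```

For balanced designs the result can be checked against the closed-form ML variance-component estimates.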

In the context of Bayesian methods, the brms package provides a user-friendly interface for fitting mixed models in R using Stan, allowing for the incorporation of prior distributions and the estimation of posterior distributions. In Python, Bambi provides a similarly streamlined approach for fitting mixed effects models using PyMC.

See also

* Nonlinear mixed-effects model

* Fixed effects model

* Generalized linear mixed model

* Linear regression

* Mixed-design analysis of variance

* Multilevel model

* Random effects model

* Repeated measures design

* Empirical Bayes method
