History of statistics

The term 'statistic' was introduced by the Italian scholar Girolamo Ghilini in 1589 with reference to this science. The birth of statistics is often dated to 1662, when John Graunt, along with

The first statistical bodies were established in the early 19th century. The Royal Statistical Society was founded in 1834 and

In 1747, while serving as surgeon on HMS ''Salisbury'', James Lind carried out a controlled experiment to develop a cure for scurvy. In this study his subjects' cases "were as similar as I could have them", that is, he provided strict entry requirements to reduce extraneous variation. The men were paired, which provided blocking. From a modern perspective, the main thing that is missing is randomized allocation of subjects to treatments.

Lind is today often described as a one-factor-at-a-time experimenter. Similar one-factor-at-a-time (OFAT) experimentation was performed at the Rothamsted Research Station in the 1840s by Sir John Lawes to determine the optimal inorganic fertilizer for use on wheat.

A theory of statistical inference was developed by Charles S. Peirce in "Illustrations of the Logic of Science" (1877–1878) and "A Theory of Probable Inference" (1883), two publications that emphasized the importance of randomization-based inference in statistics. In another study, Peirce randomly assigned volunteers to a blinded, repeated-measures design to evaluate their ability to discriminate weights.

Peirce's experiment inspired other researchers in psychology and education, which developed a research tradition of randomized experiments in laboratories and specialized textbooks in the 1800s. Peirce also contributed the first English-language publication on an optimal design for regression models in 1876. A pioneering optimal design for polynomial regression was suggested by Gergonne in 1815. In 1918 Kirstine Smith published optimal designs for polynomials of degree six (and less).

The use of a sequence of experiments, where the design of each may depend on the results of previous experiments, including the possible decision to stop experimenting, was pioneered by Abraham Wald in the context of sequential tests of statistical hypotheses. Surveys are available of optimal sequential designs (Chernoff, H. (1972) ''Sequential Analysis and Optimal Design'', SIAM Monograph) and of adaptive designs. One specific type of sequential design is the "two-armed bandit", generalized to the multi-armed bandit, on which early work was done by Herbert Robbins in 1952.

The term "design of experiments" (DOE) derives from early statistical work performed by Sir Ronald Fisher. He was described by Anders Hald as "a genius who almost single-handedly created the foundations for modern statistical science." Fisher initiated the principles of

The term ''Bayesian'' refers to

("When did Bayesian Inference become 'Bayesian'?", ''Bayesian Analysis'', 1 (1), 1–40. See page 5.) After the 1920s, inverse probability was largely supplanted by a collection of methods that were developed by Ronald A. Fisher,

According to "Earliest Known Uses of Some of the Words of Mathematics (B)": "The term Bayesian entered circulation around 1950. R. A. Fisher used it in the notes he wrote to accompany the papers in his Contributions to Mathematical Statistics (1950). Fisher thought Bayes's argument was all but extinct for the only recent work to take it seriously was Harold Jeffreys's Theory of Probability (1939). In 1951 L. J. Savage, reviewing Wald's Statistical Decisions Functions, referred to 'modern, or unBayesian, statistical theory' ('The Theory of Statistical Decision,' ''Journal of the American Statistical Association'', 46, p. 58.). Soon after, however, Savage changed from being an unBayesian to being a Bayesian."

In the 20th century, the ideas of Laplace were further developed in two different directions, giving rise to ''objective'' and ''subjective'' currents in Bayesian practice. In the objectivist stream, the statistical analysis depends on only the model assumed and the data analysed. No subjective decisions need to be involved. In contrast, "subjectivist" statisticians deny the possibility of fully objective analysis for the general case.

In the further development of Laplace's ideas, subjective ideas predate objectivist positions. The idea that 'probability' should be interpreted as 'subjective degree of belief in a proposition' was proposed, for example, by John Maynard Keynes in the early 1920s. This idea was taken further by Bruno de Finetti in Italy (1930) and Frank Ramsey in Cambridge (''The Foundations of Mathematics'', 1931). The approach was devised to solve problems with the frequentist definition of probability but also with the earlier, objectivist approach of Laplace. The subjective Bayesian methods were further developed and popularized in the 1950s by L.J. Savage.

Objective Bayesian inference was further developed by Harold Jeffreys at the University of Cambridge. His book ''Theory of Probability'' first appeared in 1939 and played an important role in the revival of the Bayesian view of probability. In 1957, Edwin Jaynes promoted the concept of maximum entropy for constructing priors, which is an important principle in the formulation of objective methods, mainly for discrete problems. In 1965, Dennis Lindley's two-volume work "Introduction to Probability and Statistics from a Bayesian Viewpoint" brought Bayesian methods to a wide audience. In 1979, José-Miguel Bernardo introduced reference analysis, which offers a generally applicable framework for objective analysis. Other well-known proponents of Bayesian probability theory include I.J. Good, B.O. Koopman, Howard Raiffa, Robert Schlaifer and Alan Turing.

In the 1980s, there was a dramatic growth in research and applications of Bayesian methods, mostly attributed to the discovery of Markov chain Monte Carlo methods, which removed many of the computational problems, and an increasing interest in nonstandard, complex applications. Despite the growth of Bayesian research, most undergraduate teaching is still based on frequentist statistics. Nonetheless, Bayesian methods are widely accepted and used, for example in the field of machine learning (Bishop, C.M. (2007) ''Pattern Recognition and Machine Learning''. Springer).


Statistics, in the modern sense of the word, began evolving in the 18th century in response to the novel needs of industrializing sovereign states.

In early times, the meaning was restricted to information about states, particularly demographics such as population. This was later extended to include all collections of information of all types, and later still it was extended to include the analysis and interpretation of such data. In modern terms, "statistics" means both sets of collected information, as in national accounts and temperature records, and analytical work which requires statistical inference. Statistical activities are often associated with models expressed using probabilities, hence the connection with probability theory. The large requirements of data processing have made statistics a key application of computing. A number of statistical concepts have an important impact on a wide range of sciences. These include the design of experiments and approaches to statistical inference such as Bayesian inference, each of which can be considered to have its own sequence in the development of the ideas underlying modern statistics.

Introduction

By the 18th century, the term "statistics" designated the systematic collection of demographic and economic data by states. For at least two millennia, these data were mainly tabulations of human and material resources that might be taxed or put to military use. In the early 19th century, collection intensified, and the meaning of "statistics" broadened to include the discipline concerned with the collection, summary, and analysis of data. Today, data is collected and statistics are computed and widely distributed in government, business, most of the sciences and sports, and even for many pastimes. Electronic computers have expedited more elaborate statistical computation even as they have facilitated the collection and aggregation of data. A single data analyst may have available a set of data-files with millions of records, each with dozens or hundreds of separate measurements. These were collected over time from computer activity (for example, a stock exchange) or from computerized sensors, point-of-sale registers, and so on. Computers then produce simple, accurate summaries, and allow more tedious analyses, such as those that require inverting a large matrix or performing hundreds of steps of iteration, that would never be attempted by hand. Faster computing has allowed statisticians to develop "computer-intensive" methods which may look at all permutations, or use randomization to look at 10,000 permutations of a problem, to estimate answers that are not easy to quantify by theory alone.
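As a minimal sketch of such a "computer-intensive" method, the following Python function estimates a p-value by reshuffling pooled observations 10,000 times at random instead of enumerating every permutation. The function name `permutation_test`, the choice of the difference in group means as the test statistic, and the sample values are illustrative assumptions rather than anything taken from a historical source.

```python
import random


def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """Estimate a two-sided p-value for the difference in group means.

    The labels of the pooled observations are shuffled at random, and the
    function counts how often the shuffled difference in means is at least
    as large as the observed one.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n_a = len(group_a)
    observed = abs(sum(group_a) / n_a - sum(group_b) / len(group_b))

    pooled = list(group_a) + list(group_b)
    n_b = len(pooled) - n_a
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        mean_a = sum(pooled[:n_a]) / n_a
        mean_b = sum(pooled[n_a:]) / n_b
        if abs(mean_a - mean_b) >= observed:
            extreme += 1

    # Add-one correction: the observed arrangement counts as one permutation,
    # so the estimate can never be exactly zero.
    return (extreme + 1) / (n_permutations + 1)


# Hypothetical measurements for two groups of four.
p_value = permutation_test([12, 15, 11, 14], [18, 21, 19, 22])
print(p_value)
```

With these hypothetical values only 2 of the 70 possible splits into two groups of four are as extreme as the observed one, so the estimate should land near 2/70. Sampling permutations at random trades a small amount of Monte Carlo error for a run time that does not grow with the number of possible arrangements.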

The term "mathematical statistics" designates the mathematical theories of probability and statistical inference, which are used in statistical practice. The relation between statistics and probability theory developed rather late, however. In the 19th century, statistics increasingly used probability theory, whose initial results were found in the 17th and 18th centuries, particularly in the analysis of games of chance (gambling). By 1800, astronomy used probability models and statistical theories, particularly the method of least squares. Early probability theory and statistics were systematized in the 19th century, and statistical reasoning and probability models were used by social scientists to advance the new sciences of experimental psychology and sociology, and by physical scientists in thermodynamics and statistical mechanics. The development of statistical reasoning was closely associated with the development of inductive logic and the scientific method, which are concerns that move statisticians away from the narrower area of mathematical statistics. Much of the theoretical work was readily available by the time computers were available to exploit it. By the 1970s, Johnson and Kotz produced a four-volume ''Compendium on Statistical Distributions'' (1st ed., 1969–1972), which is still an invaluable resource.

Applied statistics can be regarded as not a field of mathematics but an autonomous mathematical science, like computer science and operations research. Unlike mathematics, statistics had its origins in public administration. Applications arose early in demography and economics; large areas of micro- and macro-economics today are "statistics" with an emphasis on time-series analyses. With its emphasis on learning from data and making best predictions, statistics also has been shaped by areas of academic research including psychological testing, medicine and epidemiology. The ideas of statistical testing have considerable overlap with decision science. With its concerns with searching and effectively presenting data, statistics has overlap with information science and computer science.

Etymology

The term ''statistics'' is ultimately derived from the Neo-Latin ''statisticum collegium'' ("council of state") and the Italian word ''statista'' ("statesman" or "politician"). The German ''Statistik'', first introduced by Gottfried Achenwall (1749), originally designated the analysis of data about the state, signifying the "science of state" (then called ''political arithmetic'' in English). It acquired the meaning of the collection and classification of data generally in the early 19th century. It was introduced into English in 1791 by Sir John Sinclair when he published the first of 21 volumes titled ''Statistical Account of Scotland''.

Origins in probability theory

Basic forms of statistics have been used since the beginning of civilization. Early empires often collated censuses of the population or recorded the trade in various commodities. The Han dynasty and the Roman Empire were some of the first states to extensively gather data on the size of the empire's population, geographical area and wealth.

The use of statistical methods dates back to at least the 5th century BCE. The historian Thucydides in his ''History of the Peloponnesian War'' describes how the Athenians calculated the height of the wall of Plataea by counting the number of bricks in an unplastered section of the wall sufficiently near them to be able to count them. The count was repeated several times by a number of soldiers. The most frequent value (in modern terminology, the mode) so determined was taken to be the most likely value of the number of bricks. Multiplying this value by the height of the bricks used in the wall allowed the Athenians to determine the height of the ladders necessary to scale the walls.
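The procedure described above amounts to taking the mode of repeated counts and multiplying it by a known unit height. A minimal sketch in Python follows; the counts, the brick height, and the function name `estimate_wall_height` are invented for illustration and do not come from Thucydides.

```python
from statistics import mode


def estimate_wall_height(layer_counts, brick_height):
    """Estimate a wall's height from repeated counts of its brick layers.

    The most frequent count is treated as the most likely true count and is
    then scaled by the height of a single brick.
    """
    most_likely_layers = mode(layer_counts)
    return most_likely_layers * brick_height


# Hypothetical counts reported by seven observers, and a hypothetical
# brick height in arbitrary units.
counts = [40, 41, 40, 39, 40, 42, 40]
print(estimate_wall_height(counts, 0.25))
```

Using the mode rather than the average means that a few miscounts do not shift the estimate at all, provided that most observers agree.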

The Trial of the Pyx is a test of the purity of the coinage of the Royal Mint which has been held on a regular basis since the 12th century. The Trial itself is based on statistical sampling methods. After minting a series of coins (originally from ten pounds of silver), a single coin was placed in the Pyx, a box in Westminster Abbey. After a given period (now once a year), the coins are removed and weighed. A sample of coins removed from the box is then tested for purity.

The ''Nuova Cronica'', a 14th-century history of Florence by the Florentine banker and official Giovanni Villani, includes much statistical information on population, ordinances, commerce and trade, education, and religious facilities and has been described as the first introduction of statistics as a positive element in history (Villani, Giovanni. Encyclopædia Britannica. Encyclopædia Britannica 2006 Ultimate Reference Suite DVD. Retrieved on 2008-03-04.), though neither the term nor the concept of statistics as a specific field yet existed.

The arithmetic mean, although a concept known to the Greeks, was not generalised to more than two values until the 16th century. The invention of the decimal system by Simon Stevin in 1585 seems likely to have facilitated these calculations. This method was first adopted in astronomy by Tycho Brahe, who was attempting to reduce the errors in his estimates of the locations of various celestial bodies.

The idea of the median originated in Edward Wright's book on navigation (''Certaine Errors in Navigation'') in 1599 in a section concerning the determination of location with a compass. Wright felt that this value was the most likely to be the correct value in a series of observations. The difference between the mean and the median was noticed in 1669 by Christiaan Huygens in the context of using Graunt's tables.

The term 'statistic' was introduced by the Italian scholar Girolamo Ghilini in 1589 with reference to this science. The birth of statistics is often dated to 1662, when John Graunt, along with William Petty, developed early human statistical and census methods that provided a framework for modern demography. He produced the first life table, giving probabilities of survival to each age. His book ''Natural and Political Observations Made upon the Bills of Mortality'' used analysis of the mortality rolls to make the first statistically based estimation of the population of London. He knew that there were around 13,000 funerals per year in London and that three people died per eleven families per year. He estimated from the parish records that the average family size was 8 and calculated that the population of London was about 384,000; this is the first known use of a ratio estimator. Laplace in 1802 estimated the population of France with a similar method.
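Graunt's arithmetic for London can be retraced in a few lines. The sketch below uses only the three figures quoted above; the step that rounds the number of families to the nearest thousand is an assumption, introduced here to show how a figure of about 384,000 can arise, and is not taken from Graunt's text.

```python
from fractions import Fraction

# Figures quoted in the text for Graunt's estimate.
funerals_per_year = 13_000
deaths_per_family = Fraction(3, 11)   # three deaths per eleven families per year
persons_per_family = 8

# Families implied by the burial count: deaths / (deaths per family).
families = funerals_per_year / deaths_per_family
population = families * persons_per_family

# Assumption: round the family count to the nearest thousand before multiplying.
rounded_families = 1000 * round(families / 1000)
rounded_population = rounded_families * persons_per_family

print(float(families), float(population))
print(rounded_families, rounded_population)
```

Without rounding, the three figures give a little over 381,000 people; with the family count rounded to 48,000 the product is 384,000.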

Although the original scope of statistics was limited to data useful for governance, the approach was extended to many fields of a scientific or commercial nature during the 19th century. The mathematical foundations for the subject heavily drew on the new probability theory, pioneered in the 16th century by Gerolamo Cardano, Pierre de Fermat and Blaise Pascal. Christiaan Huygens (1657) gave the earliest known scientific treatment of the subject. Jakob Bernoulli's ''Ars Conjectandi'' (posthumous, 1713) and Abraham de Moivre's ''The Doctrine of Chances'' (1718) treated the subject as a branch of mathematics. In his book Bernoulli introduced the idea of representing complete certainty as one and probability as a number between zero and one.

A key early application of statistics in the 18th century was to the human sex ratio at birth. John Arbuthnot studied this question in 1710.

Arbuthnot examined birth records in London for each of the 82 years from 1629 to 1710. In every year, the number of males born in London exceeded the number of females. Considering more male or more female births as equally likely, the probability of the observed outcome is 0.5^82, or about 1 in 4,836,000,000,000,000,000,000,000; in modern terms, the ''p''-value. This is vanishingly small, leading Arbuthnot to conclude that this was not due to chance, but to divine providence: "From whence it follows, that it is Art, not Chance, that governs." This and other work by Arbuthnot is credited as "the first use of significance tests", the first example of reasoning about statistical significance and moral certainty, and "... perhaps the first published report of a nonparametric test ...", specifically the sign test.
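Arbuthnot's figure can be checked with exact arithmetic. The following is a minimal sketch of the calculation described above, assuming each year independently has probability 1/2 of showing more male than female births; the variable names are illustrative.

```python
from fractions import Fraction

years = 82                             # 1629 to 1710 inclusive
p_value = Fraction(1, 2) ** years      # chance that all 82 years show a male excess

print(p_value.denominator)             # the "1 in N" figure
print(float(p_value))                  # the same probability as a decimal
```

The denominator is 2^82 = 4,835,703,278,458,516,698,824,704, which rounds to the figure quoted in the text.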

The formal study of the theory of errors may be traced back to Roger Cotes' ''Opera Miscellanea'' (posthumous, 1722), but a memoir prepared by Thomas Simpson in 1755 (printed 1756) first applied the theory to the discussion of errors of observation. The reprint (1757) of this memoir lays down the axioms that positive and negative errors are equally probable, and that there are certain assignable limits within which all errors may be supposed to fall; continuous errors are discussed and a probability curve is given. Simpson discussed several possible distributions of error. He first considered the uniform distribution and then the discrete symmetric triangular distribution followed by the continuous symmetric triangle distribution. Tobias Mayer, in his study of the libration of the moon (Nuremberg, 1750), invented the first formal method for estimating the unknown quantities by generalizing the averaging of observations under identical circumstances to the averaging of groups of similar equations.

In 1755 Roger Joseph Boscovich, drawing on his work on the shape of the earth, proposed in his book ''De Litteraria expeditione per pontificiam ditionem ad dimetiendos duos meridiani gradus a PP. Maire et Boscovich'' that the true value of a series of observations would be that which minimises the sum of absolute errors. In modern terminology this value is the median. The first example of what later became known as the normal curve was studied by Abraham de Moivre, who plotted this curve on November 12, 1733 (de Moivre, A. (1738) The doctrine of chances. Woodfall). De Moivre was studying the number of heads that occurred when a 'fair' coin was tossed.
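The link between Boscovich's criterion and the median can be illustrated numerically. The sketch below uses a hypothetical set of five observations (not historical data) and searches a grid of candidate values for the one with the smallest sum of absolute errors; the function name is illustrative.

```python
from statistics import median

def sum_abs_errors(candidate, observations):
    """Sum of absolute deviations of the observations from a candidate value."""
    return sum(abs(x - candidate) for x in observations)

# Hypothetical observations, including one large outlier.
observations = [2.1, 2.4, 2.5, 2.9, 7.0]

# Candidate "true values" on a grid from 0.00 to 10.00 in steps of 0.01.
candidates = [c / 100 for c in range(0, 1001)]
best = min(candidates, key=lambda c: sum_abs_errors(c, observations))

print(best, median(observations))
```

For an odd number of observations the minimiser is unique and coincides with the middle observation, so the grid search and `statistics.median` agree here.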

In 1763 Richard Price transmitted to the Royal Society Thomas Bayes' proof of a rule for using a binomial distribution to calculate a posterior probability on a prior event.

In 1765 Joseph Priestley invented the first timeline charts.

Johann Heinrich Lambert in his 1765 book ''Anlage zur Architectonic'' proposed the semicircle as a distribution of errors:

: ''f''(''x'') ∝ √(1 − ''x''²)

with -1 < ''x'' < 1.

Pierre-Simon Laplace (1774) made the first attempt to deduce a rule for the combination of observations from the principles of the theory of probabilities. He represented the law of probability of errors by a curve and deduced a formula for the mean of three observations.

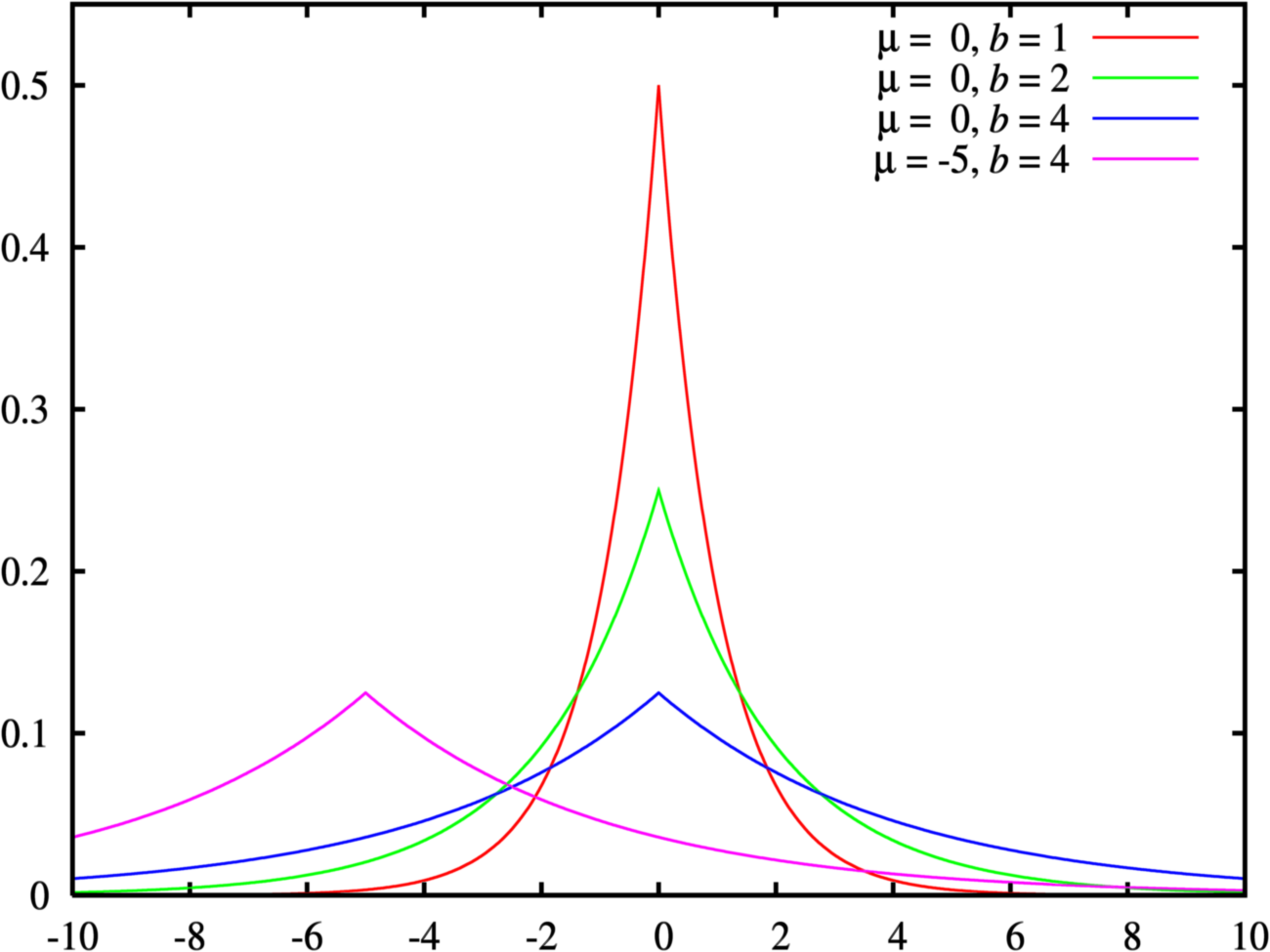

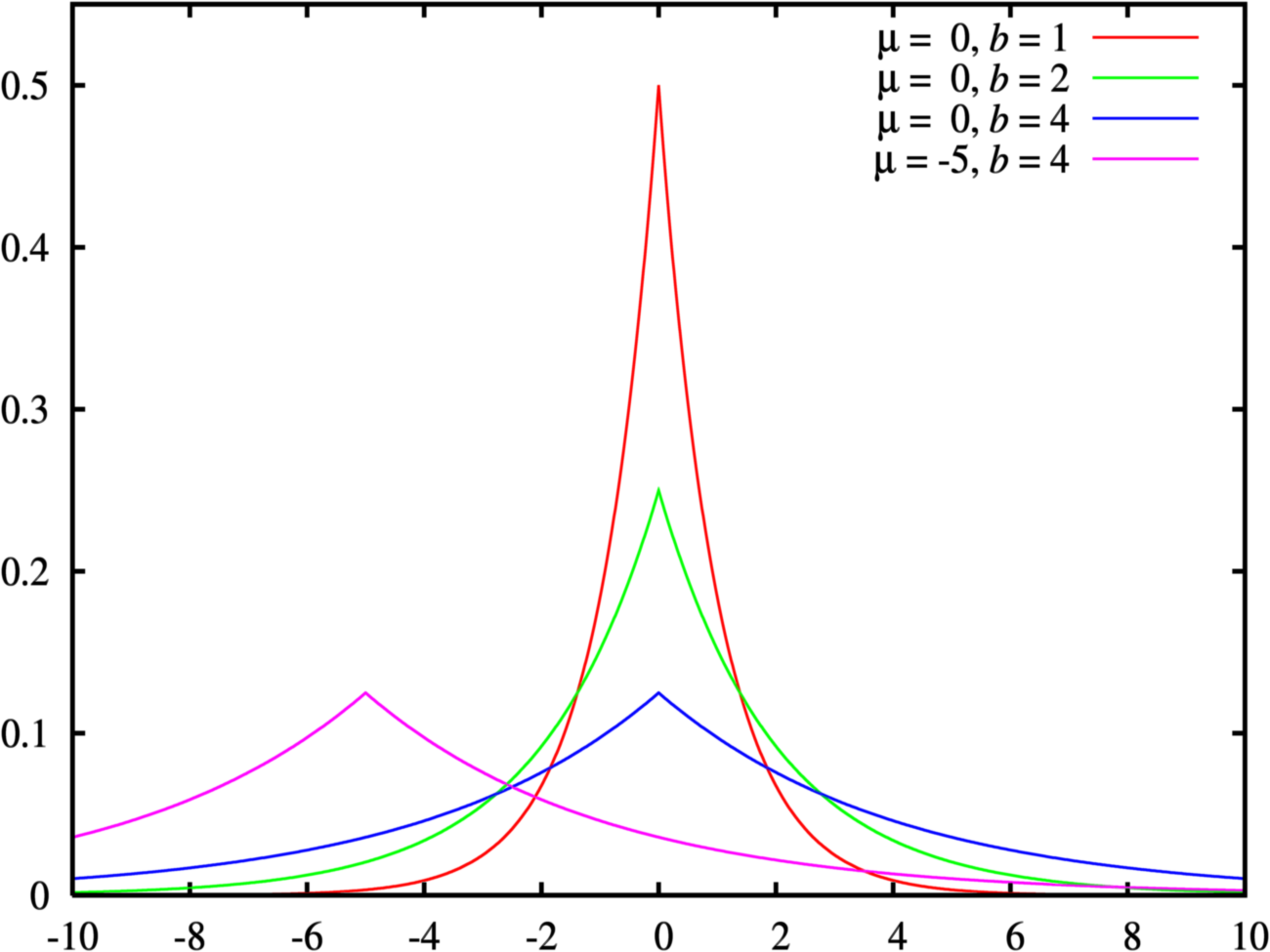

Laplace in 1774 noted that the frequency of an error could be expressed as an exponential function of its magnitude once its sign was disregarded (Wilson, Edwin Bidwell (1923) "First and second laws of error", ''Journal of the American Statistical Association'', 18 (143), 841-851). This distribution is now known as the Laplace distribution. Lagrange proposed a parabolic fractal distribution of errors in 1776.

Laplace in 1778 published his second law of errors, wherein he noted that the frequency of an error was proportional to the exponential of the negative of the square of its magnitude. This was subsequently rediscovered by Gauss (possibly in 1795) and is now best known as the normal distribution, which is of central importance in statistics (Havil J (2003) ''Gamma: Exploring Euler's Constant''. Princeton, NJ: Princeton University Press, p. 157). This distribution was first referred to as the ''normal'' distribution by C. S. Peirce in 1873, who was studying measurement errors when an object was dropped onto a wooden base (C. S. Peirce (1873) Theory of errors of observations. Report of the Superintendent US Coast Survey, Washington, Government Printing Office. Appendix no. 21: 200-224). He chose the term ''normal'' because of its frequent occurrence in naturally occurring variables.

Lagrange also suggested in 1781 two other distributions for errors – a raised cosine distribution and a logarithmic distribution.

Laplace gave (1781) a formula for the law of facility of error (a term due to Joseph Louis Lagrange). Daniel Bernoulli (1778) introduced the principle of the maximum product of the probabilities of a system of concurrent errors.

In 1786 William Playfair (1759–1823) introduced the idea of graphical representation into statistics. He invented the line chart, bar chart and histogram and incorporated them into his works on economics, the ''Commercial and Political Atlas''. This was followed in 1795 by his invention of the pie chart and circle chart which he used to display the evolution of England's imports and exports. These latter charts came to general attention when he published examples in his ''Statistical Breviary'' in 1801.

Laplace, in an investigation of the motions of Saturn and Jupiter in 1787, generalized Mayer's method by using different linear combinations of a single group of equations.

In 1791 Sir John Sinclair introduced the term 'statistics' into English in his Statistical Accounts of Scotland.

In 1802 Laplace estimated the population of France to be 28,328,612 (Cochran W.G. (1978) "Laplace's ratio estimators". pp 3-10. In David H.A., (ed). ''Contributions to Survey Sampling and Applied Statistics: papers in honor of H. O. Hartley''. Academic Press, New York). He calculated this figure using the number of births in the previous year and census data for three communities. The census data of these communities showed that they had 2,037,615 persons and that the number of births was 71,866. Assuming that these samples were representative of France, Laplace produced his estimate for the entire population.
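The ratio-estimator logic behind this calculation can be sketched as follows. The sample figures are those quoted above; the national birth count passed in the usage line is a hypothetical round number chosen only for illustration, since the text does not state the figure Laplace used, and the function name is likewise illustrative.

```python
def ratio_estimate(sample_population, sample_births, national_births):
    """Scale national births by the persons-per-birth ratio seen in the sample."""
    persons_per_birth = sample_population / sample_births
    return persons_per_birth * national_births

# Sample figures quoted in the text.
sample_population = 2_037_615
sample_births = 71_866
persons_per_birth = sample_population / sample_births
print(round(persons_per_birth, 3))

# Hypothetical national birth count, for illustration only.
illustrative_births = 1_000_000
print(round(ratio_estimate(sample_population, sample_births, illustrative_births)))
```

The sample implies roughly 28.35 persons per annual birth, so any national estimate of this kind is about 28.35 times the national number of births.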

The method of least squares, which was used to minimize errors in data measurement, was published independently by Adrien-Marie Legendre (1805), Robert Adrain (1808), and Carl Friedrich Gauss (1809). Gauss had used the method in his famous 1801 prediction of the location of the dwarf planet Ceres. The observations that Gauss based his calculations on were made by the Italian monk Piazzi.
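As an illustration of the method of least squares in its simplest modern form, the sketch below fits a straight line to a handful of points by minimising the sum of squared vertical deviations. The data are hypothetical and the closed-form expressions are the standard textbook ones; this is not a reconstruction of the calculations of Legendre, Adrain or Gauss.

```python
def least_squares_line(xs, ys):
    """Return (slope, intercept) minimising the sum of squared residuals."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of squares of x about its mean, and cross-products of x and y.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical noisy observations scattered around the line y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.1]
slope, intercept = least_squares_line(xs, ys)
print(slope, intercept)
```

For these points the fitted slope is about 1.99 and the intercept about 1.04, close to the line the data were scattered around.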

The method of least squares was preceded by the use of a median regression slope. This method minimizes the sum of the absolute deviations. Roger Joseph Boscovich invented a method of estimating this slope in 1760 and applied it to astronomy.

The term ''probable error'' – the median deviation from the mean – was introduced in 1815 by the German astronomer Friedrich Wilhelm Bessel. Antoine Augustin Cournot in 1843 was the first to use the term ''median'' for the value that divides a probability distribution into two equal halves.

Other contributors to the theory of errors were Ellis (1844), De Morgan (1864), Glaisher (1872), and Giovanni Schiaparelli (1875). Peters's (1856) formula for the "probable error" of a single observation was widely used and inspired early robust statistics (resistant to outliers: see Peirce's criterion).

In the 19th century authors on statistical theory included Laplace, S. Lacroix (1816), Littrow (1833), Dedekind (1860), Helmert (1872), Laurent (1873), Liagre, Didion, De Morgan and Boole.

Gustav Theodor Fechner used the median (''Centralwerth'') in sociological and psychological phenomena (Keynes, JM (1921) A treatise on probability. Pt II Ch XVII §5 (p 201)). It had earlier been used only in astronomy and related fields. Francis Galton used the English term ''median'' for the first time in 1881, having earlier used the terms ''middle-most value'' in 1869 and the ''medium'' in 1880 (Galton F (1881) Report of the Anthropometric Committee pp 245-260. Report of the 51st Meeting of the British Association for the Advancement of Science).

Adolphe Quetelet (1796–1874), another important founder of statistics, introduced the notion of the "average man" (''l'homme moyen'') as a means of understanding complex social phenomena such as crime rates, marriage rates, and suicide rates.

The first tests of the normal distribution were invented by the German statistician Wilhelm Lexis in the 1870s. The only data sets available to him that he was able to show were normally distributed were birth rates.

Development of modern statistics

Although the origins of statistical theory lie in the 18th-century advances in probability, the modern field of statistics only emerged in the late-19th and early-20th century in three stages. The first wave, at the turn of the century, was led by the work of Francis Galton and Karl Pearson, who transformed statistics into a rigorous mathematical discipline used for analysis, not just in science, but in industry and politics as well. The second wave of the 1910s and 20s was initiated by William Sealy Gosset, and reached its culmination in the insights of Ronald Fisher. This involved the development of better design of experiments models, hypothesis testing and techniques for use with small data samples. The final wave, which mainly saw the refinement and expansion of earlier developments, emerged from the collaborative work between Egon Pearson and Jerzy Neyman in the 1930s. Today, statistical methods are applied in all fields that involve decision making, for making accurate inferences from a collated body of data and for making decisions in the face of uncertainty based on statistical methodology.

The first statistical bodies were established in the early 19th century. The Royal Statistical Society was founded in 1834 and Florence Nightingale, its first female member, pioneered the application of statistical analysis to health problems for the furtherance of epidemiological understanding and public health practice. However, the methods then used would not be considered as modern statistics today.

The Oxford scholar Francis Ysidro Edgeworth's book, ''Metretike: or The Method of Measuring Probability and Utility'' (1887) dealt with probability as the basis of inductive reasoning, and his later works focused on the 'philosophy of chance'. His first paper on statistics (1883) explored the law of error (normal distribution), and his ''Methods of Statistics'' (1885) introduced an early version of the t distribution, the Edgeworth expansion, the Edgeworth series, the method of variate transformation and the asymptotic theory of maximum likelihood estimates.

The Norwegian Anders Nicolai Kiær introduced the concept of stratified sampling in 1895 (Bellhouse DR (1988) A brief history of random sampling methods. Handbook of statistics. Vol 6 pp 1-14 Elsevier). Arthur Lyon Bowley introduced new methods of data sampling in 1906 when working on social statistics. Although statistical surveys of social conditions had started with Charles Booth's "Life and Labour of the People in London" (1889–1903) and Seebohm Rowntree's "Poverty, A Study of Town Life" (1901), Bowley's key innovation consisted of the use of random sampling techniques. His efforts culminated in his ''New Survey of London Life and Labour''.

Francis Galton is credited as one of the principal founders of statistical theory. His contributions to the field included introducing the concepts of standard deviation, correlation, regression and the application of these methods to the study of the variety of human characteristics – height, weight, eyelash length among others. He found that many of these could be fitted to a normal curve distribution.

Galton submitted a paper to ''Nature'' in 1907 on the usefulness of the median. He examined the accuracy of 787 guesses of the weight of an ox at a country fair. The actual weight was 1198 pounds; the median guess was 1207. The guesses were markedly non-normally distributed (cf. Wisdom of the Crowd).

Galton's publication of ''Natural Inheritance'' in 1889 sparked the interest of a brilliant mathematician, Karl Pearson, then working at University College London, and he went on to found the discipline of mathematical statistics. He emphasised the statistical foundation of scientific laws and promoted its study, and his laboratory attracted students from around the world who were drawn by his new methods of analysis, including Udny Yule. His work grew to encompass the fields of biology, epidemiology, anthropometry, medicine and social history. In 1901, with Walter Weldon, founder of biometry, and Galton, he founded the journal ''Biometrika'' as the first journal of mathematical statistics and biometry.

His work, and that of Galton, underpins many of the 'classical' statistical methods which are in common use today, including the correlation coefficient, defined as a product-moment; the method of moments for the fitting of distributions to samples; Pearson's system of continuous curves that forms the basis of the now conventional continuous probability distributions; the chi distance, a precursor and special case of the Mahalanobis distance; and the p-value, defined as the probability measure of the complement of the ball with the hypothesized value as center point and chi distance as radius. He also introduced the term 'standard deviation'.

He also founded the statistical hypothesis testing theory, Pearson's chi-squared test and principal component analysis. In 1911 he founded the world's first university statistics department at University College London.
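As a rough illustration of Pearson's chi-squared test and its p-value, the sketch below computes the statistic for made-up counts in three categories and the probability of seeing a statistic at least that large under the null hypothesis. The counts, the function names and the uniform null hypothesis are assumptions chosen for illustration, and the closed-form tail probability used here is valid only for an even number of degrees of freedom.

```python
import math


def chi_squared_statistic(observed, expected):
    """Pearson's statistic: sum of (observed - expected)^2 / expected."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


def chi_squared_tail_even_df(x, df):
    """P(chi-squared with `df` degrees of freedom >= x), for even df only.

    For df = 2m the tail has the closed form
    exp(-x/2) * sum_{k=0}^{m-1} (x/2)^k / k!.
    """
    if df <= 0 or df % 2 != 0:
        raise ValueError("this closed form needs a positive, even df")
    half = x / 2.0
    return math.exp(-half) * sum(
        half ** k / math.factorial(k) for k in range(df // 2)
    )


# Hypothetical data: 100 observations spread over three categories,
# tested against the hypothesis that all categories are equally likely.
observed = [30, 50, 20]
total = sum(observed)
expected = [total / len(observed)] * len(observed)

statistic = chi_squared_statistic(observed, expected)
p_value = chi_squared_tail_even_df(statistic, df=len(observed) - 1)

print(f"chi-squared statistic: {statistic:.3f}")
print(f"p-value: {p_value:.6f}")
```

A small p-value here says that counts this far from the expected ones (in the chi distance sense) would be rare if the categories were really equally likely.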

The second wave of mathematical statistics was pioneered by Ronald Fisher, who wrote two textbooks, ''Statistical Methods for Research Workers'', published in 1925, and ''The Design of Experiments'' in 1935, that were to define the academic discipline in universities around the world. He also systematized previous results, putting them on a firm mathematical footing. His 1918 seminal paper ''The Correlation between Relatives on the Supposition of Mendelian Inheritance'' was the first to use the statistical term variance. In 1919, at Rothamsted Experimental Station, he started a major study of the extensive collections of data recorded over many years. This resulted in a series of reports under the general title ''Studies in Crop Variation''. In 1930 he published ''The Genetical Theory of Natural Selection'', where he applied statistics to evolution.

Over the next seven years, he pioneered the principles of the design of experiments (see below) and elaborated his studies of analysis of variance. He furthered his studies of the statistics of small samples. Perhaps even more important, he began his systematic approach to the analysis of real data as the springboard for the development of new statistical methods. He developed computational algorithms for analyzing data from his balanced experimental designs. In 1925, this work resulted in the publication of his first book, ''Statistical Methods for Research Workers''. This book went through many editions and translations in later years, and it became the standard reference work for scientists in many disciplines. In 1935, this book was followed by ''The Design of Experiments'', which was also widely used.

In addition to analysis of variance, Fisher named and promoted the method of maximum likelihood estimation. Fisher also originated the concepts of sufficiency, ancillary statistics, Fisher's linear discriminator and Fisher information. His article ''On a distribution yielding the error functions of several well known statistics'' (1924) presented Pearson's chi-squared test and William Sealy Gosset's t in the same framework as the Gaussian distribution, together with his own parameter in the analysis of variance, Fisher's z-distribution (more commonly used decades later in the form of the F distribution).

The 5% level of significance appears to have been introduced by Fisher in 1925 (Fisher RA (1925) Statistical methods for research workers, Edinburgh: Oliver & Boyd). Fisher stated that deviations exceeding twice the standard deviation are regarded as significant. Before this, deviations exceeding three times the probable error were considered significant. For a symmetrical distribution the probable error is half the interquartile range. For a normal distribution the probable error is approximately 2/3 the standard deviation, so three probable errors come to roughly two standard deviations. It appears that Fisher's 5% criterion was rooted in previous practice.
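The arithmetic behind this comparison can be checked with a short sketch, assuming a standard normal distribution. It uses Python's standard-library `statistics.NormalDist`; the variable and function names are illustrative only.

```python
from statistics import NormalDist

std_normal = NormalDist()  # mean 0, standard deviation 1

# Probable error of a normal distribution: half the interquartile range,
# which for a symmetric distribution equals the 75th percentile.
probable_error = std_normal.inv_cdf(0.75)  # about 0.6745, i.e. roughly 2/3

# The older rule: deviations beyond three probable errors are significant.
old_threshold = 3 * probable_error  # about 2.02 standard deviations


def two_sided_tail(z):
    """Probability that a standard normal value lies more than z from the mean."""
    return 2 * (1 - std_normal.cdf(z))


tail_old_rule = two_sided_tail(old_threshold)  # three probable errors
tail_two_sd = two_sided_tail(2.0)              # Fisher's "twice the standard deviation"

print(f"probable error:            {probable_error:.4f} sd")
print(f"three probable errors:     {old_threshold:.4f} sd")
print(f"tail beyond 3 prob. errors: {tail_old_rule:.4f}")
print(f"tail beyond 2 sd:           {tail_two_sd:.4f}")
```

Both tail probabilities fall between 4% and 5%, which is consistent with the view that the 5% criterion continued earlier practice.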

Other important contributions at this time included Charles Spearman's rank correlation coefficient, which was a useful extension of the Pearson correlation coefficient. William Sealy Gosset, the English statistician better known under his pseudonym of ''Student'', introduced Student's t-distribution, a continuous probability distribution useful in situations where the sample size is small and the population standard deviation is unknown.

Egon Pearson (Karl's son) and Jerzy Neyman introduced the concepts of "Type II" error, power of a test and confidence intervals. In 1934, Neyman showed that stratified random sampling was in general a better method of estimation than purposive (quota) sampling.

Design of experiments