Bayesian Approaches To Brain Function

Bayesian approaches to brain function investigate the capacity of the nervous system to operate in situations of uncertainty in a fashion that is close to the optimal prescribed by Bayesian statistics. The term is used in the behavioural sciences and neuroscience, and studies associated with it often strive to explain the brain's cognitive abilities based on statistical principles. It is frequently assumed that the nervous system maintains internal probabilistic models that are updated by neural processing of sensory information using methods approximating those of Bayesian probability.

This field of study has its historical roots in numerous disciplines, including machine learning, experimental psychology and Bayesian statistics. As early as the 1860s, with the work of Hermann Helmholtz in experimental psychology, the brain's ability to extract perceptual information from sensory data was modeled in terms of probabilistic estimation. The basic idea is that the nervous sy ...
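As a minimal sketch of the kind of update this hypothesis envisages, the Python below assumes, purely for illustration, a Gaussian prior belief about a stimulus and Gaussian sensory noise. Under those assumptions the Bayesian posterior stays Gaussian and can be updated in closed form. The names `Gaussian`, `bayes_update` and `noise_var` are hypothetical, and nothing here is claimed to describe how neurons actually implement the computation.

```python
from dataclasses import dataclass


@dataclass
class Gaussian:
    mean: float
    var: float


def bayes_update(prior: Gaussian, observation: float, noise_var: float) -> Gaussian:
    """Combine a Gaussian prior with one observation corrupted by Gaussian noise.

    Precisions (inverse variances) add, and the posterior mean is a
    precision-weighted average of the prior mean and the observation.
    """
    prior_precision = 1.0 / prior.var
    obs_precision = 1.0 / noise_var
    post_var = 1.0 / (prior_precision + obs_precision)
    post_mean = post_var * (prior_precision * prior.mean + obs_precision * observation)
    return Gaussian(post_mean, post_var)


# Hypothetical example: a vague prior refined by three noisy sensory samples.
belief = Gaussian(mean=0.0, var=4.0)
for x in [1.0, 1.5, 0.5]:
    belief = bayes_update(belief, x, noise_var=1.0)
    print(f"mean={belief.mean:.3f} var={belief.var:.3f}")
```

Each observation shrinks the posterior variance, and the mean moves toward the observations in proportion to their reliability relative to the prior.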

Bayesian Statistics

Bayesian statistics is a theory in the field of statistics based on the Bayesian interpretation of probability, where probability expresses a degree of belief in an event. The degree of belief may be based on prior knowledge about the event, such as the results of previous experiments, or on personal beliefs about the event. This differs from a number of other interpretations of probability, such as the frequentist interpretation, which views probability as the limit of the relative frequency of an event after many trials. More concretely, Bayesian methods codify prior knowledge in the form of a prior distribution. Bayesian statistical methods use Bayes' theorem to compute and update probabilities after obtaining new data. Bayes' theorem describes the conditional probability of an event based on data as well as prior information or beliefs about the event or conditions related to the event. For example, in Bayesian inference, Bayes' theorem can ...
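Bayes' theorem, P(H | D) = P(D | H) P(H) / P(D), can be illustrated with a small discrete example. The sketch below assumes two invented hypotheses about a coin ("fair", with P(heads) = 0.5, and "biased", with P(heads) = 0.8) and updates a uniform prior after each flip. The hypotheses, numbers and function names are illustrative only.

```python
Dist = dict[str, float]


def bayes_posterior(prior: Dist, likelihood: Dist) -> Dist:
    """Apply Bayes' theorem over a finite set of hypotheses."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}  # P(D|H) * P(H)
    evidence = sum(unnormalized.values())                        # P(D)
    return {h: u / evidence for h, u in unnormalized.items()}


# Hypothetical hypotheses: probability of heads under each one.
HEADS_PROB = {"fair": 0.5, "biased": 0.8}


def update_on_flip(belief: Dist, flip: str) -> Dist:
    """Update the belief after observing one flip ("H" or "T")."""
    likelihood = {h: p if flip == "H" else 1.0 - p for h, p in HEADS_PROB.items()}
    return bayes_posterior(belief, likelihood)


coin_belief = {"fair": 0.5, "biased": 0.5}  # uniform prior
for flip in "HHT":
    coin_belief = update_on_flip(coin_belief, flip)
print(coin_belief)
```

Updating flip by flip gives the same posterior as applying Bayes' theorem once to the whole sequence, because the flips are assumed independent given the hypothesis; yesterday's posterior simply becomes today's prior.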

Unsupervised Learning

Unsupervised learning is a framework in machine learning where, in contrast to supervised learning, algorithms learn patterns exclusively from unlabeled data. Other frameworks in the spectrum of supervision include weak or semi-supervision, where a small portion of the data is tagged, and self-supervision. Some researchers consider self-supervised learning a form of unsupervised learning. Conceptually, unsupervised learning divides into the aspects of data, training, algorithm, and downstream applications. Typically, the dataset is harvested cheaply "in the wild", such as a massive text corpus obtained by web crawling, with only minor filtering (such as Common Crawl). This compares favorably with supervised learning, where the dataset (such as ImageNet1000) is typically constructed manually, which is much more expensive. Some algorithms were designed specifically for unsupervised learning, such as clustering algorithms like k-means, dimensionality reduction techniques l ...
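k-means, mentioned above, is one of the simplest unsupervised algorithms. It receives unlabeled points and alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its assigned points (Lloyd's algorithm). The pure-Python sketch below assumes 2-D points and Euclidean distance; the function name `kmeans` and its parameters are illustrative, not any library's API.

```python
import math
import random

Point = tuple[float, float]


def kmeans(points: list[Point], k: int, iters: int = 100,
           seed: int = 0) -> tuple[list[Point], list[int]]:
    """Cluster unlabeled 2-D points into k groups with Lloyd's algorithm."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialise from k data points
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda j: math.dist(p, centroids[j]))
                  for p in points]
        # Update step: each centroid moves to the mean of its members.
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centroids.append((sum(x for x, _ in members) / len(members),
                                      sum(y for _, y in members) / len(members)))
            else:
                new_centroids.append(centroids[j])  # leave an empty cluster in place
        if new_centroids == centroids:  # converged: assignments no longer change
            break
        centroids = new_centroids
    return centroids, labels


points = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0),
          (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
centroids, labels = kmeans(points, k=2)
print(centroids, labels)
```

No labels are supplied at any point; the grouping emerges only from the geometry of the data, which is what distinguishes this from supervised classification.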

Karl Friston

Karl John Friston FRS FMedSci FRSB (born 12 July 1959) is a British neuroscientist and theoretician at University College London. He is an authority on brain imaging and theoretical neuroscience, especially the use of physics-inspired statistical methods to model neuroimaging data and other random dynamical systems. Friston is a key architect of the free energy principle and active inference. In imaging neuroscience he is best known for statistical parametric mapping and dynamic causal modelling. Friston also acts as a scientific advisor to numerous groups in industry. He is one of the most highly cited living scientists, and in 2016 he was ranked No. 1 by Semantic Scholar in its list of the top 10 most influential neuroscientists.

Karl Friston attended the Ellesmere Port Grammar School, later renamed Whitby Comprehensive, from 1970 to 1977. Friston studied natural sciences (physics and psychology) at the University of Cambridge in 1980, and completed his medical s ...

Kalman Filter

In statistics and control theory, Kalman filtering (also known as linear quadratic estimation) is an algorithm that uses a series of measurements observed over time, including statistical noise and other inaccuracies, to produce estimates of unknown variables that tend to be more accurate than those based on a single measurement alone, by estimating a joint probability distribution over the variables for each time step. The filter is constructed as a mean squared error minimiser, but an alternative derivation of the filter shows how it relates to maximum likelihood statistics. The filter is named after Rudolf E. Kálmán. Kalman filtering has numerous technological applications. A common application is the guidance, navigation, and control of vehicles, particularly aircraft, spacecraft and dynamically positioned ships. Kalman filtering is also widely applied in time series analysis tasks such as signal processing and econometrics. K ...
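A scalar sketch may make the predict/update cycle concrete. It assumes a one-dimensional linear model `x_t = a * x_{t-1} + w_t`, where the process noise `w_t` has variance `q`, and measurements `z_t = h * x_t + v_t`, where the measurement noise `v_t` has variance `r`. The function and parameter names are hypothetical; practical filters use the matrix form of the same equations.

```python
from dataclasses import dataclass


@dataclass
class KalmanState:
    x: float  # current state estimate
    p: float  # variance of that estimate


def kalman_step(state: KalmanState, z: float, q: float, r: float,
                a: float = 1.0, h: float = 1.0) -> KalmanState:
    """One predict/update cycle of a scalar Kalman filter."""
    # Predict: propagate the estimate and its variance through the model.
    x_pred = a * state.x
    p_pred = a * state.p * a + q
    # Update: correct the prediction using the measurement residual.
    s = h * p_pred * h + r  # innovation (residual) variance
    k = p_pred * h / s      # Kalman gain: how much to trust the measurement
    x_new = x_pred + k * (z - h * x_pred)
    p_new = (1.0 - k * h) * p_pred
    return KalmanState(x_new, p_new)


state = KalmanState(x=0.0, p=1000.0)  # vague initial belief
for z in [1.0, 2.0, 3.0]:
    state = kalman_step(state, z, q=0.0, r=1.0)
    print(f"estimate={state.x:.4f} variance={state.p:.4f}")
```

With `q = 0` and `a = h = 1`, as in this usage, the filter reduces to a precision-weighted average of the prior and the measurements, so the estimate approaches the sample mean (2.0) once the large initial variance is overwhelmed by data.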

Predictive Coding

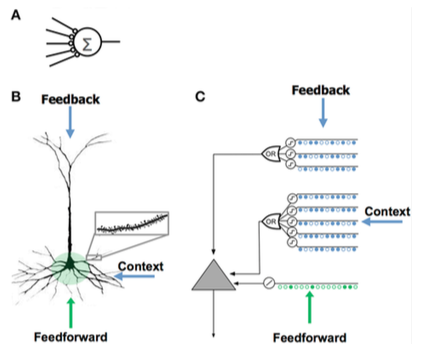

In neuroscience, predictive coding (also known as predictive processing) is a theory of brain function which postulates that the brain is constantly generating and updating a "mental model" of the environment. According to the theory, this mental model is used to predict input signals from the senses, which are then compared with the actual input signals from those senses. Predictive coding is a member of a wider set of theories that follow the Bayesian brain hypothesis.

Theoretical ancestors of predictive coding date back as early as 1860, with Helmholtz's concept of unconscious inference. Unconscious inference refers to the idea that the human brain fills in visual information to make sense of a scene. For example, if one object appears relatively smaller than another in the visual field, the brain uses that information as a likely cue of depth, so that the perceiver ultimately (and involuntarily) experiences depth. The understanding of perception as the interaction ...
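The predict, compare and update loop can be sketched for the simplest possible case. The toy below assumes a single hidden cause `mu` that predicts a scalar input through a linear mapping `w * mu`, with a Gaussian prior and Gaussian sensory noise; `mu` is adjusted by gradient descent on the precision-weighted squared prediction errors. This is an illustrative linear-Gaussian toy, not a specific published predictive coding model, and all names are hypothetical.

```python
def infer_cause(x: float, w: float = 1.0, prior_mean: float = 0.0,
                var_x: float = 1.0, var_p: float = 1.0,
                lr: float = 0.1, steps: int = 200) -> float:
    """Estimate a hidden cause mu by iteratively reducing prediction error.

    Minimises (x - w*mu)**2 / (2*var_x) + (mu - prior_mean)**2 / (2*var_p).
    """
    mu = prior_mean  # start from the prior expectation
    for _ in range(steps):
        prediction = w * mu                # top-down prediction of the input
        eps_x = (x - prediction) / var_x   # precision-weighted sensory prediction error
        eps_p = (mu - prior_mean) / var_p  # precision-weighted deviation from the prior
        mu += lr * (w * eps_x - eps_p)     # gradient step that reduces both errors
    return mu


print(infer_cause(2.0))
```

Under these assumptions the fixed point is the Bayesian posterior mean (w x / var_x + prior_mean / var_p) / (w² / var_x + 1 / var_p), which equals 1.0 for the example values. This is one way to see how error minimisation connects to the Bayesian brain hypothesis.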

Michael Shadlen

Michael Neil Shadlen (born August 19, 1959) is an American neuroscientist and neurologist whose research concerns the neural mechanisms of decision-making. He has been Professor of Neuroscience at Columbia University since 2012 and a Howard Hughes Medical Institute Investigator since 2000. He is a member of the Kavli Institute for Brain Science, a Principal Investigator at the Mortimer B. Zuckerman Mind Brain Behavior Institute, and an elected member of the National Academy of Medicine and the National Academy of Sciences. Shadlen is a jazz guitarist and is interested in the relation between jazz and neuroscience.

Shadlen earned a Bachelor of Arts degree in biology at Brown University in 1981. He completed his Ph.D. in neurobiology at the University of California, Berkeley in 1985 under the direction of Ralph D. Freeman. Shadlen completed his M.D. at Brown University's Alpert Medical School in 1988.

Shadlen completed his residency at Stanford University School of Medicine w ...

Markov Chain

In probability theory and statistics, a Markov chain or Markov process is a stochastic process describing a sequence of possible events in which the probability of each event depends only on the state attained in the previous event. Informally, this may be thought of as, "What happens next depends only on the state of affairs now." A countably infinite sequence, in which the chain moves state at discrete time steps, gives a discrete-time Markov chain (DTMC). A continuous-time process is called a continuous-time Markov chain (CTMC). Markov processes are named in honor of the Russian mathematician Andrey Markov. Markov chains have many applications as statistical models of real-world processes. They provide the basis for general stochastic simulation methods known as Markov chain Monte Carlo, which are used to draw samples from complex probability distributions and have found application in areas including Bayesian statistics, biology, chemistry, economics, fin ...
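A two-state chain makes the Markov property concrete: the next state is drawn using only the row of the transition matrix for the current state. The sketch assumes a hypothetical transition matrix `P` (each row sums to 1), simulates a path, and finds the stationary distribution by repeatedly applying `P` to a probability vector (power iteration). The function names are illustrative.

```python
import random

# Hypothetical transition matrix: P[i][j] = probability of moving from state i to j.
P = [[0.9, 0.1],
     [0.5, 0.5]]


def step(dist: list[float], P: list[list[float]]) -> list[float]:
    """Propagate a probability distribution over states by one time step."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]


def stationary(P: list[list[float]], iters: int = 200) -> list[float]:
    """Approximate the stationary distribution by power iteration."""
    dist = [1.0 / len(P)] * len(P)
    for _ in range(iters):
        dist = step(dist, P)
    return dist


def simulate(P: list[list[float]], start: int, n_steps: int, seed: int = 0) -> list[int]:
    """Sample a path; each move depends only on the current state."""
    rng = random.Random(seed)
    state, path = start, [start]
    for _ in range(n_steps):
        state = rng.choices(range(len(P)), weights=P[state])[0]
        path.append(state)
    return path


print(stationary(P))
print(simulate(P, start=0, n_steps=20))
```

For this `P` the stationary distribution solves π0 · 0.1 = π1 · 0.5, giving (5/6, 1/6). The power iteration converges quickly because the matrix's second eigenvalue is 0.4.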

Hierarchical Temporal Memory

Hierarchical temporal memory (HTM) is a biologically constrained machine intelligence technology developed by Numenta. Originally described in the 2004 book On Intelligence by Jeff Hawkins with Sandra Blakeslee, HTM is primarily used today for anomaly detection in streaming data. The technology is based on neuroscience and on the physiology and interaction of pyramidal neurons in the neocortex of the mammalian (in particular, human) brain. At the core of HTM are learning algorithms that can store, learn, infer, and recall high-order sequences. Unlike most other machine learning methods, HTM learns time-based patterns in unlabeled data continuously, in an unsupervised process. HTM is robust to noise and has high capacity (it can learn multiple patterns simultaneously). In computing, HTM is well suited to prediction, anomaly detection, classification and, ultimately, sensorimotor applications. HTM has been tested and implemented in software through example appli ...
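HTM's actual algorithms are not reproduced here. The sketch below is a deliberately simple stand-in that illustrates only two ideas from the paragraph above: continuous unsupervised learning of sequences, and anomaly scoring of streaming input. It counts which symbol followed each context of the previous `order` symbols, and it scores each new symbol by how unexpected it was before learning from it. The class and method names are hypothetical.

```python
from collections import Counter, defaultdict


class OnlineSequenceModel:
    """Toy order-k next-symbol predictor that never stops learning (not HTM)."""

    def __init__(self, order: int = 2):
        self.order = order
        self.counts: defaultdict[tuple, Counter] = defaultdict(Counter)  # context -> next-symbol counts
        self.history: list[str] = []

    def observe(self, symbol: str) -> float:
        """Return an anomaly score in [0, 1] for `symbol`, then learn from it."""
        context = tuple(self.history[-self.order:])
        seen = self.counts[context]
        total = sum(seen.values())
        # Score = 1 - estimated probability of this symbol in this context;
        # a context never seen before yields the maximum score.
        score = 1.0 if total == 0 else 1.0 - seen[symbol] / total
        seen[symbol] += 1  # online, unsupervised update
        self.history.append(symbol)
        return score


model = OnlineSequenceModel(order=2)
scores = [model.observe(s) for s in "ABCABCABCABD"]
print(scores)
```

Because the context is the previous two symbols rather than one, the model learns that C follows the pair A-B specifically. The final D receives a high score because it breaks the learned sequence.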

Jeff Hawkins

Jeffrey Hawkins is an American businessman, computer scientist, neuroscientist and engineer. He co-founded Palm Computing, where he co-created the PalmPilot and Treo, and Handspring. He subsequently turned to neuroscience, founding the Redwood Neuroscience Institute in 2002. In 2005 he co-founded Numenta, where he leads a team working to reverse-engineer the neocortex and enable machine intelligence technology based on brain theory. He is the co-author of On Intelligence (2004), which explains his memory-prediction framework theory of the brain, and the author of A Thousand Brains: A New Theory of Intelligence (2021).

Hawkins attended Cornell University, where he received a Bachelor of Science with a major in electrical engineering in 1979. His interest in pattern recognition for speech and text input to computers led him to enroll in the biophysics program at the University of California, Berkeley in 1986. While there he patented a "p ...

Michael S

Michael may refer to:

People:
* Michael (given name), a given name
* Michael (surname), including a list of people with the surname Michael

Given name:
* Michael (bishop elect), English 13th-century Bishop of Hereford elect
* Michael (Khoroshy) (1885–1977), cleric of the Ukrainian Orthodox Church of Canada
* Michael Donnellan (1915–1985), Irish-born London fashion designer, often referred to simply as "Michael"
* Michael (footballer, born 1982), Brazilian footballer
* Michael (footballer, born 1983), Brazilian footballer
* Michael (footballer, born 1993), Brazilian footballer
* Michael (footballer, born February 1996), Brazilian footballer
* Michael (footballer, born March 1996), Brazilian footballer
* Michael (footballer, born 1999), Brazilian football ...

Helmholtz Machine

The Helmholtz machine (named after Hermann von Helmholtz and his concept of Helmholtz free energy) is a type of artificial neural network that can account for the hidden structure of a set of data by being trained to create a generative model of the original set of data. The hope is that, by learning economical representations of the data, the underlying structure of the generative model will reasonably approximate the hidden structure of the data set. A Helmholtz machine contains two networks: a bottom-up "recognition" network that takes the data as input and produces a distribution over hidden variables, and a top-down "generative" network that generates values of the hidden variables and the data itself. When they were introduced, Helmholtz machines were one of a handful of learning architectures that used feedback as well as feedforward connections to ensure the quality of learned models. Helmholtz machines are usually trained using an unsupervised learning algorithm, such as the wake-sleep algorithm. T ...
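The wake-sleep algorithm can be sketched for a machine with one layer of binary hidden units. In the wake phase, the recognition network infers hidden states for real data, and the generative weights are nudged by a local delta rule to make that data more likely. In the sleep phase, the generative network "dreams" hidden states and data, and the recognition weights are nudged to recover the dreamed hidden states. The class and parameter names, the single hidden layer and the learning rate are assumptions made for brevity.

```python
import math
import random


def sigmoid(a: float) -> float:
    """Numerically stable logistic function."""
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    z = math.exp(a)
    return z / (1.0 + z)


def bernoulli(probs, rng):
    """Sample a binary vector with the given unit-wise probabilities."""
    return [1.0 if rng.random() < p else 0.0 for p in probs]


class HelmholtzMachine:
    """One hidden layer; separate recognition (bottom-up) and generative (top-down) weights."""

    def __init__(self, n_visible: int, n_hidden: int, seed: int = 0):
        self.rng = random.Random(seed)
        small = lambda: self.rng.gauss(0.0, 0.1)
        self.gen_bias_h = [0.0] * n_hidden  # generative prior over hidden units
        self.gen_w = [[small() for _ in range(n_hidden)] for _ in range(n_visible)]  # h -> x
        self.gen_bias_x = [0.0] * n_visible
        self.rec_w = [[small() for _ in range(n_visible)] for _ in range(n_hidden)]  # x -> h
        self.rec_bias_h = [0.0] * n_hidden

    def recognize(self, x):
        """Bottom-up probabilities q(h_j = 1 | x)."""
        return [sigmoid(b + sum(w * xi for w, xi in zip(row, x)))
                for row, b in zip(self.rec_w, self.rec_bias_h)]

    def generate_x(self, h):
        """Top-down probabilities p(x_i = 1 | h)."""
        return [sigmoid(b + sum(w * hj for w, hj in zip(row, h)))
                for row, b in zip(self.gen_w, self.gen_bias_x)]

    def wake_sleep_step(self, x, lr: float = 0.1):
        # Wake: infer h from real data, then train the generative net to reproduce it.
        h = bernoulli(self.recognize(x), self.rng)
        for j, hj in enumerate(h):
            self.gen_bias_h[j] += lr * (hj - sigmoid(self.gen_bias_h[j]))
        for i, (xi, p_i) in enumerate(zip(x, self.generate_x(h))):
            err = xi - p_i
            self.gen_bias_x[i] += lr * err
            for j, hj in enumerate(h):
                self.gen_w[i][j] += lr * err * hj
        # Sleep: dream (h, x) top-down, then train the recognition net to recover h.
        h_dream = bernoulli([sigmoid(b) for b in self.gen_bias_h], self.rng)
        x_dream = bernoulli(self.generate_x(h_dream), self.rng)
        for j, (hj, qj) in enumerate(zip(h_dream, self.recognize(x_dream))):
            err = hj - qj
            self.rec_bias_h[j] += lr * err
            for i, xi in enumerate(x_dream):
                self.rec_w[j][i] += lr * err * xi


data = [[1, 1, 0, 0], [0, 0, 1, 1]]  # hypothetical binary training patterns
hm = HelmholtzMachine(n_visible=4, n_hidden=2)
for _ in range(2000):
    for x in data:
        hm.wake_sleep_step(x)
print(hm.recognize([1, 1, 0, 0]), hm.recognize([0, 0, 1, 1]))
```

Both phases use only locally available quantities (a unit's target minus its predicted probability), which is part of why the wake-sleep algorithm was of interest as a biologically plausible learning rule.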

Peter Dayan

Peter Dayan is a British neuroscientist and computer scientist who is a director at the Max Planck Institute for Biological Cybernetics in Tübingen, Germany, alongside Ivan De Araujo. He is co-author of Theoretical Neuroscience, an influential textbook on computational neuroscience. He is known for applying Bayesian methods from machine learning and artificial intelligence to understand neural function, and is particularly recognized for relating neurotransmitter levels to prediction errors and Bayesian uncertainties. He has done pioneering work in reinforcement learning (RL), where he and his colleagues proposed that dopamine signals reward prediction error and where he helped develop the Q-learning algorithm, and he made contributions to unsupervised learning, including the wake-sleep algorithm for neural networks and the Helmholtz machine.

Dayan studied mathematics at the University of Cambridge and then continued for a PhD in artificial intelligence at the University of ...