Separation (statistics)

In statistics, separation is a phenomenon associated with models for dichotomous or categorical outcomes, including logistic and probit regression. Separation occurs if the predictor (or a linear combination of some subset of the predictors) is associated with only one outcome value when the predictor range is split at a certain value. For example, suppose the predictor ''X'' is continuous and the outcome ''y'' = 1 for all observed ''x'' > 2. If the outcome values are (seemingly) perfectly determined by the predictor (e.g., ''y'' = 0 when ''x'' ≤ 2), then the condition is called "complete separation". If instead there is some overlap (e.g., ''y'' = 0 when ''x'' < 2, but ''y'' has observed values of both 0 and 1 when ''x'' = 2), then "quasi-complete separation" occurs. A 2 × 2 table w ...
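
A minimal sketch of the single-predictor case, assuming one continuous predictor plus an intercept, 0/1 outcomes with both classes present, and a non-constant ''x''. The helper names `separation_type` and `logistic_log_likelihood` are hypothetical. The first compares the largest ''x'' with ''y'' = 0 against the smallest ''x'' with ''y'' = 1 (and the reverse direction). The second illustrates a well-known consequence of complete separation for logistic regression: the log-likelihood keeps rising toward 0 as the slope grows, so no finite maximum likelihood estimate exists.

```python
import math

def separation_type(x, y):
    """Classify separation for one predictor with an intercept.

    Returns "complete", "quasi-complete", or "none".
    Assumes y holds only 0/1 values, both classes occur, and x is not constant.
    """
    x0 = [xi for xi, yi in zip(x, y) if yi == 0]
    x1 = [xi for xi, yi in zip(x, y) if yi == 1]
    if not x0 or not x1:
        raise ValueError("both outcome classes must be present")
    # Check both directions: y = 1 above a cutoff, or y = 1 below it.
    gaps = [min(x1) - max(x0), min(x0) - max(x1)]
    if any(g > 0 for g in gaps):
        return "complete"        # a cutoff splits the classes with no overlap
    if any(g == 0 for g in gaps):
        return "quasi-complete"  # the classes overlap only at the cutoff value
    return "none"

def logistic_log_likelihood(b0, b1, x, y):
    """Log-likelihood of the model P(y = 1 | x) = 1 / (1 + exp(-(b0 + b1 * x)))."""
    total = 0.0
    for xi, yi in zip(x, y):
        z = b0 + b1 * xi
        # log(sigmoid(z)) = -log1p(exp(-z)); log(1 - sigmoid(z)) = -log1p(exp(z))
        total -= math.log1p(math.exp(-z)) if yi == 1 else math.log1p(math.exp(z))
    return total

x = [0, 1, 2, 3, 4]
y = [0, 0, 0, 1, 1]                                         # y = 1 only for x > 2
print(separation_type(x, y))                                # complete
print(separation_type([0, 1, 2, 2, 3, 4], [0, 0, 0, 1, 1, 1]))  # quasi-complete
for slope in (1, 10, 100):
    # Place the cutoff at x = 2.5, so the intercept is -2.5 * slope.
    print(slope, logistic_log_likelihood(-2.5 * slope, slope, x, y))
```

Each tenfold increase in the slope moves the log-likelihood closer to 0, which is why iterative fitting routines tend to report diverging coefficients on separated data.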

Statistics

Statistics (from German, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments. When census data (comprising every member of the target population) cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample ...

American Economic Review

The ''American Economic Review'' is a monthly peer-reviewed academic journal first published by the American Economic Association in 1911. The current editor-in-chief is Erzo FP Luttmer, a professor of economics at Dartmouth College. The journal is based in Pittsburgh. It is one of the "top five" journals in economics. In 2004, the ''American Economic Review'' began requiring "data and code sufficient to permit replication" of a paper's results, which is then posted on the journal's website. Exceptions are made for proprietary data. Until 2017, the May issue of the ''American Economic Review'', titled the ''Papers and Proceedings'' issue, featured the papers presented at the American Economic Association's annual meeting that January. After being selected for presentation, the papers in the ''Papers and Proceedings'' issue did not undergo a formal process of peer review. Starting in 2018, papers presented at the annual meetings have been published in a separate journal, ''AEA Pap ...

Mathematical Optimization

Mathematical optimization (alternatively spelled ''optimisation'') or mathematical programming is the selection of a best element, with regard to some criteria, from some set of available alternatives. It is generally divided into two subfields: discrete optimization and continuous optimization. Optimization problems arise in all quantitative disciplines from computer science and engineering to operations research and economics, and the development of solution methods has been of interest in mathematics for centuries. In the more general approach, an optimization problem consists of maximizing or minimizing a real function by systematically choosing input values from within an allowed set and computing the value of the function. The generalization of optimization theory and techniques to other formulations constitutes a large area of applied mathematics. ...
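
To make "systematically choosing input values from within an allowed set" concrete, here is a sketch of golden-section search, one simple method for continuous optimization in a single variable, chosen here for illustration. It assumes the function is unimodal on the interval [lo, hi], which plays the role of the allowed set; the function name and tolerance are illustrative choices. Maximization is handled by minimizing the negated function.

```python
import math

def golden_section_minimize(f, lo, hi, tol=1e-8):
    """Minimize a unimodal function f on [lo, hi] by golden-section search."""
    inv_phi = (math.sqrt(5) - 1) / 2  # about 0.618
    a, b = lo, hi
    c = b - inv_phi * (b - a)
    d = a + inv_phi * (b - a)
    fc, fd = f(c), f(d)
    while b - a > tol:
        if fc < fd:
            # The minimum lies in [a, d]; the old c becomes the new d.
            b, d, fd = d, c, fc
            c = b - inv_phi * (b - a)
            fc = f(c)
        else:
            # The minimum lies in [c, b]; the old d becomes the new c.
            a, c, fc = c, d, fd
            d = a + inv_phi * (b - a)
            fd = f(d)
    return (a + b) / 2

print(golden_section_minimize(lambda x: (x - 2) ** 2, 0.0, 5.0))     # close to 2.0
# Maximize x * (4 - x) on [0, 4] by minimizing its negative.
print(golden_section_minimize(lambda x: -(x * (4 - x)), 0.0, 4.0))  # close to 2.0
```

Reusing one interior point per iteration means each step costs a single new function evaluation, which is the main reason for the golden-ratio spacing.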

Integral

In mathematics, an integral is the continuous analog of a sum, which is used to calculate areas, volumes, and their generalizations. Integration, the process of computing an integral, is one of the two fundamental operations of calculus, the other being differentiation. Integration was initially used to solve problems in mathematics and physics, such as finding the area under a curve, or determining displacement from velocity. Usage of integration expanded to a wide variety of scientific fields thereafter. A definite integral computes the signed area of the region in the plane that is bounded by the graph of a given function between two points in the real line. Conventionally, areas above the horizontal axis of the plane are positive while areas below are n ...
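
A definite integral can be approximated numerically by a weighted sum of function values, which makes the "continuous analog of a sum" idea concrete. The sketch below uses the composite Simpson's rule, one standard quadrature method chosen here for illustration rather than taken from the text; `n` is the number of subintervals and is rounded up to an even number. Because the result is a signed area, the parts of sin x below the axis contribute negatively.

```python
import math

def simpson(f, a, b, n=1000):
    """Composite Simpson's rule for the integral of f from a to b with n subintervals."""
    if n % 2:  # Simpson's rule needs an even number of subintervals
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        weight = 4 if i % 2 else 2  # odd interior points get weight 4, even ones 2
        total += weight * f(a + i * h)
    return total * h / 3

print(simpson(math.sin, 0, math.pi))            # close to 2: area above the axis
print(simpson(math.sin, math.pi, 2 * math.pi))  # close to -2: area below counts as negative
print(simpson(math.sin, 0, 2 * math.pi))        # close to 0: the two parts cancel
```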

Bayesian Inference

Bayesian inference is a method of statistical inference in which Bayes' theorem is used to calculate a probability of a hypothesis, given prior evidence, and update it as more information becomes available. Fundamentally, Bayesian inference uses a prior distribution to estimate posterior probabilities. Bayesian inference is an important technique in statistics, and especially in mathematical statistics. Bayesian updating is particularly important in the dynamic analysis of a sequence of data. Bayesian inference has found application in a wide range of activities, including science, engineering, philosophy, medicine, sport, and law. In the philosophy of decision theory, Bayesian inference is closely related to subjective probability, often called "Bayesian probability". Bayesian inference derives the posterior probability as a consequence of two antecedents: a prior probability and a "likelihood function" derive ...
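
Bayes' theorem for a finite set of hypotheses reads P(H | E) = P(E | H) P(H) / P(E), where P(E) is the sum of P(E | H) P(H) over all hypotheses. A minimal sketch of sequential updating, in which each posterior becomes the prior for the next observation; the coin example and the helper name `bayes_update` are hypothetical, chosen for illustration.

```python
def bayes_update(prior, likelihood):
    """Return the posterior P(H | E) for each hypothesis H.

    prior:      dict mapping hypothesis -> P(H)
    likelihood: dict mapping hypothesis -> P(E | H) for the observed evidence E
    """
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    evidence = sum(unnormalized.values())  # P(E), the normalizing constant
    return {h: p / evidence for h, p in unnormalized.items()}

# Hypothetical example: is a coin fair (P(heads) = 0.5) or biased (P(heads) = 0.8)?
p_heads = {"fair": 0.5, "biased": 0.8}
belief = {"fair": 0.5, "biased": 0.5}  # prior before any flips
for flip in "HHT":
    likelihood = {h: p if flip == "H" else 1 - p for h, p in p_heads.items()}
    belief = bayes_update(belief, likelihood)  # the posterior becomes the next prior
    print(flip, belief)
```

Updating one flip at a time gives the same final posterior as a single update on all three flips, since the normalizing constants cancel.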

American Journal Of Epidemiology

The American Journal of Epidemiology (''AJE'') is a peer-reviewed journal for empirical research findings, opinion pieces, and methodological developments in the field of epidemiological research. The current editor-in-chief is Enrique Schisterman. Articles published in ''AJE'' are indexed by PubMed, Embase, and a number of other databases. The ''AJE'' offers open-access options for authors. It is published monthly, with articles published online ahead of print at the accepted manuscript and corrected proof stages. Entire issues have been dedicated to abstracts from academic meetings (Society for Epidemiologic Research, North American Congress of Epidemiology), the history of the Epidemic Intelligence Service of the Centers for Disease Control and Prevention (CDC), the life of George W. Comstock, and the celebration of notable anniversaries of schools of public health (University of California, Berkeley, School of Public Health; Tulane University School of Public Health and Tro ...

Continuity Correction

In mathematics, a continuity correction is an adjustment made when a discrete object is approximated using a continuous object. For example, in the binomial case: if a random variable ''X'' has a binomial distribution with parameters ''n'' and ''p'', i.e., ''X'' is distributed as the number of "successes" in ''n'' independent Bernoulli trials with probability ''p'' of success on each trial, then

:P(X\leq x) = P(X ...
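
The passage breaks off before stating the approximation, so what follows is a sketch of the commonly used form, given as background rather than quoted from the text: for integer ''x'', the normal approximation with continuity correction is P(X ≤ x) ≈ Φ((x + 0.5 - np) / √(np(1 - p))), where Φ is the standard normal CDF. The code compares it with the exact binomial CDF and with the uncorrected approximation; the function names and the example values n = 100, p = 0.5, x = 55 are illustrative assumptions.

```python
import math

def binomial_cdf(x, n, p):
    """Exact P(X <= x) for X ~ Binomial(n, p)."""
    return sum(math.comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(x + 1))

def normal_cdf(z):
    """Standard normal CDF via the error function."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))

def normal_approx_cdf(x, n, p, correction=True):
    """Normal approximation to P(X <= x); adds 0.5 to x when correction=True."""
    mu, sigma = n * p, math.sqrt(n * p * (1 - p))
    return normal_cdf((x + (0.5 if correction else 0.0) - mu) / sigma)

n, p, x = 100, 0.5, 55
exact = binomial_cdf(x, n, p)
with_cc = normal_approx_cdf(x, n, p)
without_cc = normal_approx_cdf(x, n, p, correction=False)
print(exact, with_cc, without_cc)
```

The half-unit shift treats the integer value ''x'' as the interval from x - 0.5 to x + 0.5, so the continuous curve covers the whole probability mass at ''x''.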

Regularization (mathematics)

In mathematics, statistics, finance, and computer science, particularly in machine learning and inverse problems, regularization is a process that changes the answer to a problem into a simpler one. It is often used in solving ill-posed problems or to prevent overfitting. Although regularization procedures can be divided in many ways, the following delineation is particularly helpful:
* Explicit regularization is regularization whenever one explicitly adds a term to the optimization problem. These terms could be priors, penalties, or constraints. Explicit regularization is commonly employed with ill-posed optimization problems. The regularization term, or penalty, imposes a cost on the optimization function to make the optimal solution unique.
* Implicit regularization is all other forms of regularization. This includes, for example, early stopping, using a robust loss function, and discarding outliers. Implicit regularizat ...
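
As an illustration of explicit regularization making an optimal solution unique, the sketch below uses an L2 penalty (ridge regression), one common penalty chosen here for illustration. With two identical predictor columns the unpenalized normal equations XᵀXw = Xᵀy have a singular matrix, so the least-squares solution is not unique. Adding λ‖w‖² to the objective replaces XᵀX with XᵀX + λI, which is invertible for any λ > 0. `ridge_fit` is a hypothetical helper written in plain Python to stay dependency-free.

```python
def ridge_fit(X, y, lam):
    """Solve (X^T X + lam * I) w = X^T y by Gaussian elimination (pure Python)."""
    p = len(X[0])
    # Build the penalized normal equations A w = b.
    A = [[sum(row[i] * row[j] for row in X) + (lam if i == j else 0.0)
          for j in range(p)] for i in range(p)]
    b = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(p)]
    # Forward elimination with partial pivoting.
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, p):
            factor = A[r][col] / A[col][col]
            for c in range(col, p):
                A[r][c] -= factor * A[col][c]
            b[r] -= factor * b[col]
    # Back substitution.
    w = [0.0] * p
    for i in reversed(range(p)):
        w[i] = (b[i] - sum(A[i][j] * w[j] for j in range(i + 1, p))) / A[i][i]
    return w

X = [[1, 1], [2, 2], [3, 3]]  # the second column duplicates the first
y = [2, 4, 6]
print(ridge_fit(X, y, lam=0.1))  # two equal weights, each slightly below 1
```

Without the penalty, any weights summing to 2 fit these data exactly; the penalty picks the unique pair with the smallest norm among near-perfect fits, splitting the weight evenly.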

Communications In Statistics

''Communications in Statistics'' is a peer-reviewed scientific journal that publishes papers related to statistics. It is published by Taylor & Francis in three series, ''Theory and Methods'', ''Simulation and Computation'', and ''Case Studies, Data Analysis and Applications''. ''Communications in Statistics – Theory and Methods'' started publishing in 1970 and publishes papers related to statistical theory and methods. It publishes 20 issues each year. Based on Web of Science, the five most cited papers in the journal are:
* Kulldorff M. A spatial scan statistic, 1997, 982 cites.
* Holland PW, Welsch RE. Robust regression using iteratively reweighted least-squares, 1977, 526 cites.
* Sugiura N. Further analysts of the data by Akaike's information criterion and the finite corrections, 1978, 490 cites.
* Hosmer DW, Lemeshow S. Goodness of fit tests for the multiple logistic regression model, 1980, 401 cites.
* Iman RL, Conover WJ. Small sample sensitivity analysis ...

Dichotomy

A dichotomy is a partition of a whole (or a set) into two parts (subsets). In other words, this pair of parts must be
* jointly exhaustive: everything must belong to one part or the other, and
* mutually exclusive: nothing can belong simultaneously to both parts.
If there is a concept A, and it is split into parts B and not-B, then the parts form a dichotomy: they are mutually exclusive, since no part of B is contained in not-B and vice versa, and they are jointly exhaustive, since they cover all of A, and together again give A. Such a partition is also frequently called a bipartition. The two parts thus formed are complements. In logic, the partitions are opposites if there exists a proposition such that it holds over one and not the other. Treating continuous variables or multicategorical variables as binary variables is called dichotomization. The discretization error inherent in dichoto ...
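
The two defining conditions translate directly into set operations. A minimal sketch with hypothetical helper names: `is_dichotomy` checks that two parts are disjoint and that their union is the whole, and `dichotomize` recodes a continuous variable as binary at an assumed cutoff (values equal to the cutoff go to the 0 group, an arbitrary choice).

```python
def dichotomize(values, threshold):
    """Map each value to 1 if it exceeds the threshold, else 0 (a binary recoding)."""
    return [1 if v > threshold else 0 for v in values]

def is_dichotomy(whole, part_b, part_not_b):
    """True if the two parts are mutually exclusive and jointly exhaustive of `whole`."""
    mutually_exclusive = part_b.isdisjoint(part_not_b)
    jointly_exhaustive = (part_b | part_not_b) == whole
    return mutually_exclusive and jointly_exhaustive

whole = set(range(1, 11))
evens = {v for v in whole if v % 2 == 0}
print(is_dichotomy(whole, evens, whole - evens))    # True: B and not-B
print(is_dichotomy(whole, evens, {1, 2, 3}))        # False: 2 is in both parts, 5 in neither
print(dichotomize([0.5, 2.0, 3.7], threshold=2.0))  # [0, 0, 1]
```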

Standard Error

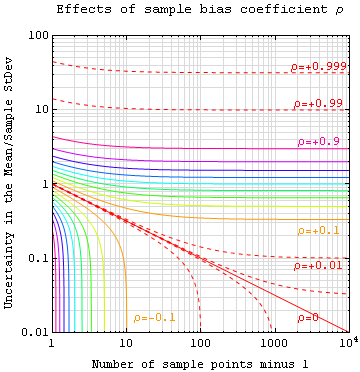

The standard error (SE) of a statistic (usually an estimator of a parameter, like the average or mean) is the standard deviation of its sampling distribution or an estimate of that standard deviation. In other words, it is the standard deviation of the values the statistic takes across samples, where each sample is a set of observations drawn from the same population. If the statistic is the sample mean, it is called the standard error of the mean (SEM). The standard error is a key ingredient in producing confidence intervals. The sampling distribution of a mean is generated by repeated sampling from the same population and recording the sample mean per sample. This forms a distribution of different means, and this distribution has its own mean and variance. Mathematically, for independent observations, the variance of the sampling distribution of the mean is equal to the variance of the population divided by the sample size. This is because as the sample size increases, sample means cluster more closely arou ...
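
For independent observations this gives SEM = σ/√n, estimated in practice as s/√n with ''s'' the sample standard deviation. The sketch below computes that estimate and then checks the variance relation by simulation; the normal population, its parameters, and the helper names are hypothetical choices for illustration.

```python
import math
import random
import statistics

def standard_error_of_mean(sample):
    """Estimate the SEM as s / sqrt(n), with s the sample standard deviation (n - 1 divisor)."""
    return statistics.stdev(sample) / math.sqrt(len(sample))

def variance_of_sample_means(pop_mean, pop_sd, n, reps, seed=0):
    """Draw `reps` samples of size n from a normal population; return the variance of their means."""
    rng = random.Random(seed)
    means = [statistics.fmean(rng.gauss(pop_mean, pop_sd) for _ in range(n))
             for _ in range(reps)]
    return statistics.pvariance(means)

print(standard_error_of_mean([2, 4, 4, 4, 5, 5, 7, 9]))
# Population variance 10**2 = 100 and n = 25, so the result should be near 100 / 25 = 4.
print(variance_of_sample_means(0.0, 10.0, n=25, reps=10000))
```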

Maximum Likelihood Estimation

In statistics, maximum likelihood estimation (MLE) is a method of estimating the parameters of an assumed probability distribution, given some observed data. This is achieved by maximizing a likelihood function so that, under the assumed statistical model, the observed data is most probable. The point in the parameter space that maximizes the likelihood function is called the maximum likelihood estimate. The logic of maximum likelihood is both intuitive and flexible, and as such the method has become a dominant means of statistical inference. If the likelihood function is differentiable, the derivative test for finding maxima can be applied. In some cases, the first-order conditions of the likelihood function can be solved analytically; for instance, the ordinary least squares estimator for a linear regression model maximizes the likelihood when ...
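
As a hypothetical example of the derivative test, take a Bernoulli model with ''k'' successes in ''n'' trials. The log-likelihood is ℓ(p) = k log p + (n - k) log(1 - p), and setting the score ℓ′(p) = k/p - (n - k)/(1 - p) to zero gives the analytic solution p̂ = k/n. The sketch below solves the same first-order condition numerically by bisection, which works because the score is strictly decreasing on (0, 1) when 0 < k < n, and checks that both routes agree. The data and function names are illustrative.

```python
import math

def bernoulli_log_likelihood(p, data):
    """l(p) = k log p + (n - k) log(1 - p) for 0/1 data."""
    k, n = sum(data), len(data)
    return k * math.log(p) + (n - k) * math.log(1 - p)

def bernoulli_mle_bisection(data, tol=1e-12):
    """Solve the score equation k/p - (n - k)/(1 - p) = 0 by bisection on (0, 1)."""
    k, n = sum(data), len(data)
    if k == 0 or k == n:
        raise ValueError("all outcomes equal: the likelihood peaks at the boundary p = 0 or 1")
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        score = k / mid - (n - k) / (1 - mid)
        if score > 0:
            lo = mid  # likelihood still increasing: the maximum lies to the right
        else:
            hi = mid
    return (lo + hi) / 2

data = [1, 0, 1, 1, 0, 1, 1, 0, 1, 1]  # k = 7 successes in n = 10 trials
p_numeric = bernoulli_mle_bisection(data)
print(p_numeric, sum(data) / len(data))  # numeric and analytic estimates agree
```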