
Relative Mean Difference

The mean absolute difference (univariate) is a measure of statistical dispersion equal to the average absolute difference of two independent values drawn from a probability distribution. A related statistic is the relative mean absolute difference, which is the mean absolute difference divided by the arithmetic mean, and equal to twice the Gini coefficient. The mean absolute difference is also known as the absolute mean difference (not to be confused with the absolute value of the mean signed difference) and the Gini mean difference (GMD). The mean absolute difference is sometimes denoted by Δ or as MD.

Definition: The mean absolute difference is defined as the "average" or "mean", formally the expected value, of the absolute difference of two random variables X and Y independently and identically distributed ...
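As a rough illustration of the definition, the sketch below treats a small sample as if it were the whole distribution and averages |x - y| over all ordered pairs, including pairs of a value with itself. The function names and the sample are assumptions made for this example; they do not come from the source.

```python
from itertools import product


def mean_absolute_difference(values):
    """Average |x - y| over all ordered pairs drawn with replacement."""
    n = len(values)
    total = sum(abs(x - y) for x, y in product(values, repeat=2))
    return total / (n * n)


def relative_mean_absolute_difference(values):
    """Mean absolute difference divided by the arithmetic mean."""
    mean = sum(values) / len(values)
    return mean_absolute_difference(values) / mean


sample = [1.0, 2.0, 3.0, 4.0]  # hypothetical data
md = mean_absolute_difference(sample)
rmd = relative_mean_absolute_difference(sample)
gini = rmd / 2  # the relative mean absolute difference is twice the Gini coefficient
print(md, rmd, gini)
```

Estimators that exclude the x = y pairs divide by n(n - 1) instead of n², so they give slightly larger values on small samples.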

Statistical Dispersion

In statistics, dispersion (also called variability, scatter, or spread) is the extent to which a distribution is stretched or squeezed. Common examples of measures of statistical dispersion are the variance, standard deviation, and interquartile range. For instance, when the variance of data in a set is large, the data is widely scattered. On the other hand, when the variance is small, the data in the set is clustered. Dispersion is contrasted with location or central tendency, and together they are the most used properties of distributions.

Measures: A measure of statistical dispersion is a nonnegative real number that is zero if all the data are the same and increases as the data become more diverse. Most measures of dispersion have the same units as the quantity being measured. In other words, if the measurements are in metres or seconds, so is the measure of dispersion. Examples of dispersion measures include:

* Standard deviation
* Interquartile range (IQR)
* Range
* ...
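The properties described above (zero for constant data, same units as the data) can be checked with a short sketch using Python's standard `statistics` module. The data values are made up for illustration.

```python
import statistics

data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]  # hypothetical measurements

population_sd = statistics.pstdev(data)       # population standard deviation
value_range = max(data) - min(data)           # range
q1, _, q3 = statistics.quantiles(data, n=4)   # quartile cut points
iqr = q3 - q1                                 # interquartile range

# Constant data has zero dispersion under each measure.
constant = [3.0] * 5
constant_sd = statistics.pstdev(constant)

# Rescaling the data (for example metres to centimetres) rescales the
# standard deviation by the same factor, since it shares the data's units.
scaled_sd = statistics.pstdev([100 * x for x in data])

print(population_sd, value_range, iqr, constant_sd, scaled_sd)
```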

Lorenz Curve

In economics, the Lorenz curve is a graphical representation of the distribution of income or of wealth. It was developed by Max O. Lorenz in 1905 for representing inequality of the wealth distribution. The curve is a graph showing the proportion of overall income or wealth held by the bottom x% of the people, although this is not rigorously true for a finite population (see below). It is often used to represent income distribution, where it shows, for the bottom x% of households, what percentage (y%) of the total income they have. The percentage of households is plotted on the x-axis, the percentage of income on the y-axis. It can also be used to show distribution of assets. In such use, many economists consider it to be a measure of social inequality. The concept is useful in describing inequality among the size of individuals in ecology and in studies of biodiversity, where the cumulative proportion of species is plotted against the cumulative proportion ...
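A minimal sketch of how the points of an empirical Lorenz curve could be computed from a list of incomes: sort the incomes, then pair the cumulative share of households with the cumulative share of income. The function name and the income figures are assumptions for illustration only.

```python
def lorenz_points(incomes):
    """Return (population share, income share) pairs, starting at (0, 0)."""
    ordered = sorted(incomes)
    total = sum(ordered)
    n = len(ordered)
    points = [(0.0, 0.0)]
    running = 0.0
    for i, income in enumerate(ordered, start=1):
        running += income
        points.append((i / n, running / total))
    return points


incomes = [40.0, 10.0, 30.0, 20.0]  # hypothetical household incomes
points = lorenz_points(incomes)
for population_share, income_share in points:
    print(f"bottom {population_share:.0%} hold {income_share:.0%} of income")
```

With perfectly equal incomes every point would lie on the diagonal y = x; the further the curve sags below the diagonal, the more unequal the distribution.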

Gamma Distribution

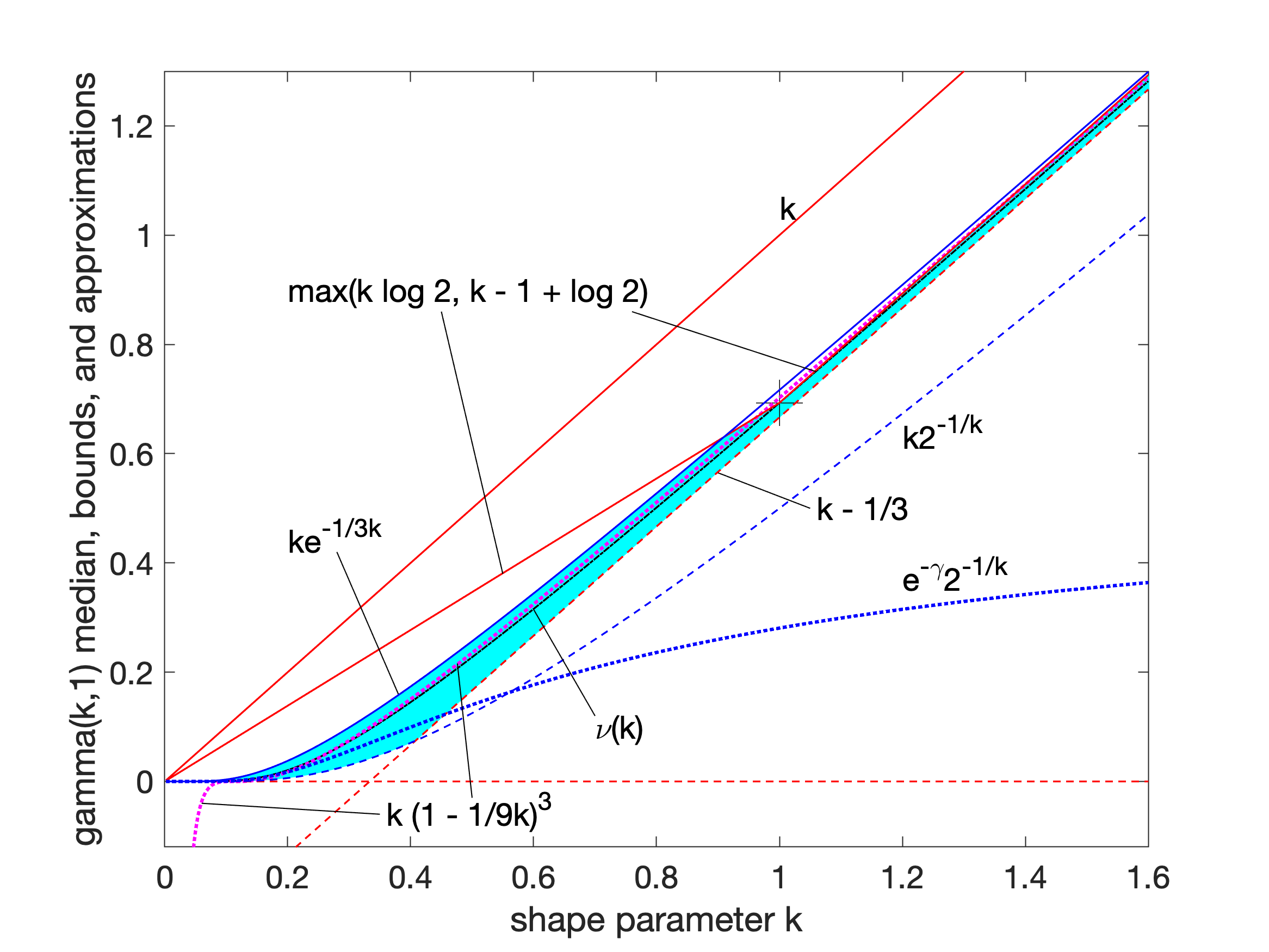

In probability theory and statistics, the gamma distribution is a two-parameter family of continuous probability distributions. The exponential distribution, Erlang distribution, and chi-square distribution are special cases of the gamma distribution. There are two equivalent parameterizations in common use:

1. With a shape parameter k and a scale parameter θ.
2. With a shape parameter α = k and an inverse scale parameter β = 1/θ, called a rate parameter.

In each of these forms, both parameters are positive real numbers. The gamma distribution is the maximum entropy probability distribution (both with respect to a uniform base measure and a 1/x base measure) for a random variable X for which E[X] = kθ = α/β is fixed and greater than zero, and E[ln X] = ψ(k) + ln θ = ψ(α) − ln β is fixed (ψ is the digamma function).

Definitions: The parameterization with k and θ appears to be more common ...
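The equivalence of the two parameterizations can be illustrated with a short sketch that computes the mean both ways and compares it with a simulated sample mean. The helper names and parameter values are assumptions for this example. Note that Python's `random.gammavariate(alpha, beta)` takes a shape and a scale, so its second argument corresponds to θ here, not to the rate.

```python
import random


def gamma_mean_shape_scale(k, theta):
    """Mean under the shape/scale parameterization: k * theta."""
    return k * theta


def gamma_mean_shape_rate(alpha, beta):
    """Mean under the shape/rate parameterization: alpha / beta."""
    return alpha / beta


k, theta = 3.0, 2.0             # hypothetical shape and scale
alpha, beta = k, 1.0 / theta    # the same distribution as shape and rate

rng = random.Random(0)          # fixed seed so the run is repeatable
draws = [rng.gammavariate(k, theta) for _ in range(100_000)]
sample_mean = sum(draws) / len(draws)

print(gamma_mean_shape_scale(k, theta), gamma_mean_shape_rate(alpha, beta), sample_mean)
```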

Pareto Distribution

The Pareto distribution, named after the Italian civil engineer, economist, and sociologist Vilfredo Pareto, is a power-law probability distribution used to describe social, quality control, scientific, geophysical, actuarial, and many other types of observable phenomena. It was originally applied to describing the distribution of wealth in a society, fitting the trend that a large portion of wealth is held by a small fraction of the population. The Pareto principle or "80-20 rule", stating that 80% of outcomes are due to 20% of causes, was named in honour of Pareto, but the concepts are distinct, and only Pareto distributions with shape value (α) of log_4 5 ≈ 1.16 precisely reflect it. Empirical observation has shown that this 80-20 distribution fits a wide range of cases, including natural phenomena and human activities.

Definitions: If X is a random variable with a Pareto (Type I) distribution, then the probability that X is ...
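The link between the shape value log_4 5 and the 80-20 rule can be checked numerically. The sketch below relies on a standard result that is not stated in the text above: for a Pareto Type I distribution with shape α > 1, the top fraction p of the population holds a share p^(1 − 1/α) of the total. The function name is an assumption for this example.

```python
import math


def top_share(p, alpha):
    """Share of the total held by the top fraction p (requires alpha > 1)."""
    if alpha <= 1:
        raise ValueError("the mean is infinite for alpha <= 1")
    return p ** (1 - 1 / alpha)


alpha_80_20 = math.log(5, 4)          # log base 4 of 5, about 1.16
share = top_share(0.2, alpha_80_20)   # share held by the top 20%
print(alpha_80_20, share)
```

Larger shape values give a thinner tail and a smaller top share; for example, `top_share(0.2, 2.0)` is about 0.447.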

Exponential Distribution

In probability theory and statistics, the exponential distribution is the probability distribution of the time between events in a Poisson point process, i.e., a process in which events occur continuously and independently at a constant average rate. It is a particular case of the gamma distribution. It is the continuous analogue of the geometric distribution, and it has the key property of being memoryless. In addition to being used for the analysis of Poisson point processes, it is found in various other contexts. The exponential distribution is not the same as the class of exponential families of distributions. The latter is a large class of probability distributions that includes the exponential distribution as one of its members, but also includes many other distributions, like the normal, binomial, gamma, and Poisson distributions.

Definitions: Probability density function: The probability density function (pdf) of an exponential distribution is f(x;\lambda) = ...
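The memoryless property can be illustrated with a small sketch. It assumes the standard density λe^(−λx) for x ≥ 0 (zero otherwise) and the matching survival function P(X > x) = e^(−λx). The function names and the numbers used are assumptions for this example.

```python
import math


def exponential_pdf(x, rate):
    """Density of the exponential distribution with the given rate."""
    return rate * math.exp(-rate * x) if x >= 0 else 0.0


def survival(x, rate):
    """P(X > x) for an exponential random variable."""
    return math.exp(-rate * x) if x >= 0 else 1.0


rate, s, t = 0.5, 2.0, 3.0  # hypothetical rate and waiting times

# Memorylessness: having already waited s, the chance of waiting a further t
# equals the chance of waiting t from the start.
conditional = survival(s + t, rate) / survival(s, rate)
unconditional = survival(t, rate)
print(conditional, unconditional)
```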

Normal Distribution

In statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is $f(x) = \frac{1}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2}$. The parameter $\mu$ is the mean or expectation of the distribution (and also its median and mode), while the parameter $\sigma$ is its standard deviation. The variance of the distribution is $\sigma^2$. A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate. Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known. Their importance is partly due to the central limit theorem. It states that, under some conditions, the average of many samples (observations) of a random variable with finite mean and variance is itself a random variable, whose distribution converges to a normal distribution ...
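As a quick check of the density of a normal distribution with mean μ and standard deviation σ, the sketch below evaluates the standard formula directly and compares it with `statistics.NormalDist` from the Python standard library. The function name and the parameter values are assumptions for this example.

```python
import math
from statistics import NormalDist


def normal_pdf(x, mu, sigma):
    """Normal density with mean mu and standard deviation sigma."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2 * math.pi))


mu, sigma = 0.5, 2.0  # hypothetical parameters
by_hand = normal_pdf(1.0, mu, sigma)
by_library = NormalDist(mu, sigma).pdf(1.0)
peak = normal_pdf(mu, mu, sigma)  # the density is highest at the mean
print(by_hand, by_library, peak)
```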

Uniform Distribution (continuous)

In probability theory and statistics, the continuous uniform distribution or rectangular distribution is a family of symmetric probability distributions. The distribution describes an experiment where there is an arbitrary outcome that lies between certain bounds. The bounds are defined by the parameters, a and b, which are the minimum and maximum values. The interval can either be closed (e.g. [a, b]) or open (e.g. (a, b)). Therefore, the distribution is often abbreviated U(a, b), where U stands for uniform distribution. The difference between the bounds defines the interval length; all intervals of the same length on the distribution's support are equally probable. It is the maximum entropy probability distribution for a random variable X under no constraint other than that it is contained in the distribution's support.

Definitions: Probability density function: The probability density function of the continuous uniform distribution is f(x) = ...

Bernoulli Distribution

In probability theory and statistics, the Bernoulli distribution, named after Swiss mathematician Jacob Bernoulli (James Victor Uspensky: Introduction to Mathematical Probability, McGraw-Hill, New York 1937, page 45), is the discrete probability distribution of a random variable which takes the value 1 with probability p and the value 0 with probability q = 1 − p. Less formally, it can be thought of as a model for the set of possible outcomes of any single experiment that asks a yes–no question. Such questions lead to outcomes that are boolean-valued: a single bit whose value is success/yes/true/one with probability p and failure/no/false/zero with probability q. It can be used to represent a (possibly biased) coin toss where 1 and 0 would represent "heads" and "tails", respectively, and p would be the probability of the coin landing on heads (or vice versa where 1 would represent tails and p would be the probability of tails). In particular, unfair coins ...

Estimator

In statistics, an estimator is a rule for calculating an estimate of a given quantity based on observed data: thus the rule (the estimator), the quantity of interest (the estimand) and its result (the estimate) are distinguished. For example, the sample mean is a commonly used estimator of the population mean. There are point and interval estimators. The point estimators yield single-valued results. This is in contrast to an interval estimator, where the result would be a range of plausible values. "Single value" does not necessarily mean "single number", but includes vector valued or function valued estimators. Estimation theory is concerned with the properties of estimators; that is, with defining properties that can be used to compare different estimators (different rules for creating estimates) for the same quantity, based on the same data. Such properties can be used to determine the best rules to use under given circumstances. However, in robust statistics, statistic ...

E-statistic

Energy distance is a statistical distance between probability distributions. If X and Y are independent random vectors in R^d with cumulative distribution functions (cdf) F and G respectively, then the energy distance between the distributions F and G is defined to be the square root of $D^2(F, G) = 2\operatorname{E}\|X - Y\| - \operatorname{E}\|X - X'\| - \operatorname{E}\|Y - Y'\| \geq 0$, where (X, X', Y, Y') are independent, the cdf of X and X' is F, the cdf of Y and Y' is G, $\operatorname{E}$ is the expected value, and $\|\cdot\|$ denotes the length of a vector. Energy distance satisfies all axioms of a metric; thus energy distance characterizes the equality of distributions: D(F, G) = 0 if and only if F = G. Energy distance for statistical applications was introduced in 1985 by Gábor J. Székely, who proved that for real-valued random variables $D^2(F, G)$ is exactly twice Harald Cramér's distance: $\int_{-\infty}^{\infty} (F(x) - G(x))^2 \, dx$. For a simple proof of this ...
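For real-valued data, the squared energy distance can be sketched by replacing each expectation with an average over all pairs of sample values, which amounts to treating each sample as its own empirical distribution. The function names and the sample values are assumptions for this example.

```python
from itertools import product


def mean_abs_diff(a, b):
    """Average |x - y| over all pairs with x from a and y from b."""
    return sum(abs(x - y) for x, y in product(a, b)) / (len(a) * len(b))


def energy_distance_squared(xs, ys):
    """2 E|X - Y| - E|X - X'| - E|Y - Y'| for two empirical distributions."""
    return (2 * mean_abs_diff(xs, ys)
            - mean_abs_diff(xs, xs)
            - mean_abs_diff(ys, ys))


xs = [0.0, 1.0]  # hypothetical sample from F
ys = [2.0, 3.0]  # hypothetical sample from G
d2 = energy_distance_squared(xs, ys)
same = energy_distance_squared(xs, xs)
print(d2, same)
```

For these two samples the integral of (F − G)² works out to 1.5, so the value 3.0 returned for `d2` agrees with the "twice Cramér's distance" relation for real-valued variables.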

Distance Standard Deviation

In statistics and in probability theory, distance correlation or distance covariance is a measure of dependence between two paired random vectors of arbitrary, not necessarily equal, dimension. The population distance correlation coefficient is zero if and only if the random vectors are independent. Thus, distance correlation measures both linear and nonlinear association between two random variables or random vectors. This is in contrast to Pearson's correlation, which can only detect linear association between two random variables. Distance correlation can be used to perform a statistical test of dependence with a permutation test. One first computes the distance correlation (involving the re-centering of Euclidean distance matrices) between two random vectors, and then compares this value to the distance correlations of many shuffles of the data.

Background: The classical measure of dependence, the Pearson correlation coefficient, is mainly sensitive to a linear relationship ...

Examples

Example may refer to:

* exempli gratia (e.g.), usually read out in English as "for example"
* .example, reserved as a domain name that may not be installed as a top-level domain of the Internet
  * example.com, example.net, example.org, example.edu, second-level domain names reserved for use in documentation as examples
* HMS Example (P165), an Archer-class patrol and training vessel of the Royal Navy

Arts:

* The Example, a 1634 play by James Shirley
* The Example (comics), a 2009 graphic novel by Tom Taylor and Colin Wilson
* Example (musician), the British dance musician Elliot John Gleave (born 1982)
* Example (album), a 1995 album by American rock band For Squirrels

See also:

* Exemplar (other), a prototype or model which others can use to understand a topic better
* Exemplum, medieval collections of short stories to be told in sermons
* Eixample: The Eixample is a district of Barcelona between the old city (Ciutat Vella) and ...