|

Neural Scaling Law

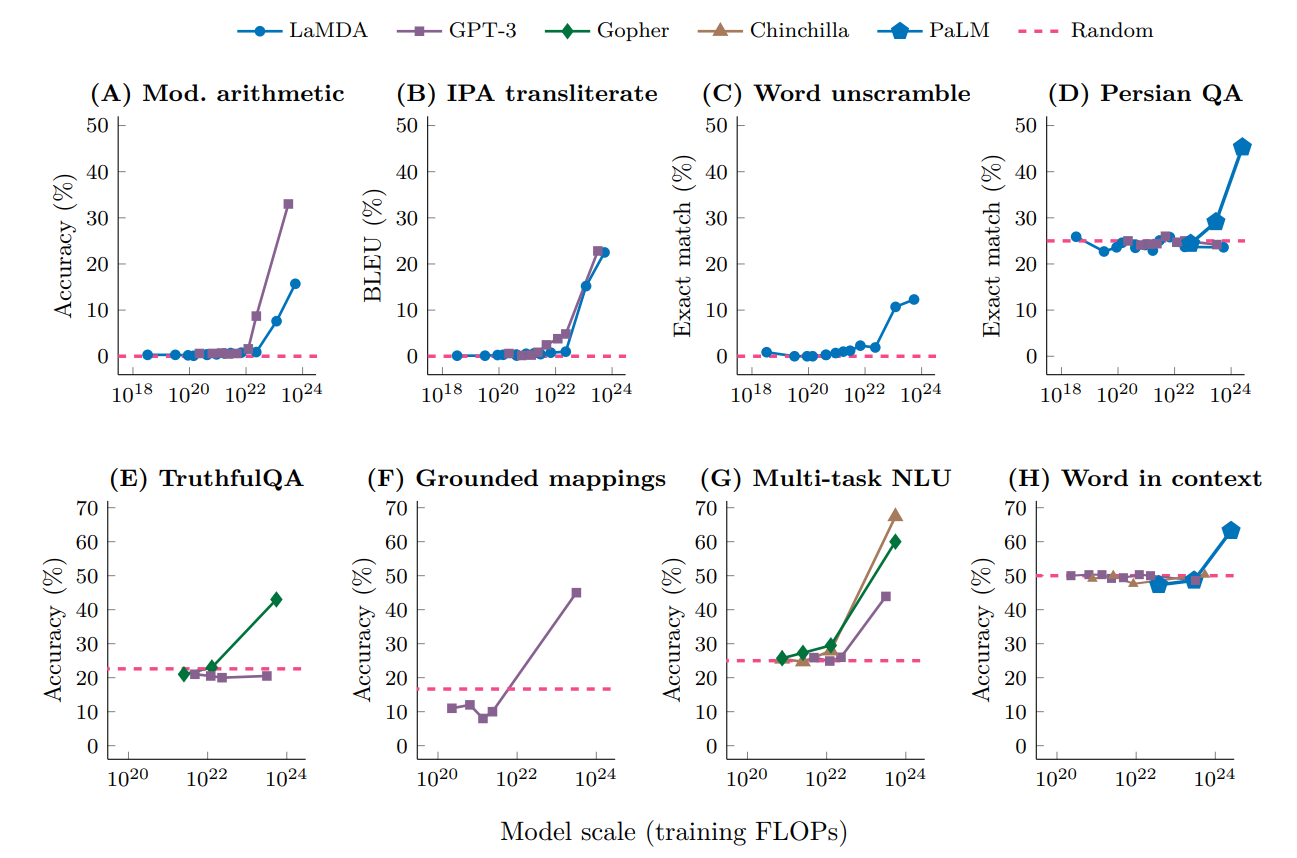

In machine learning, a neural scaling law is an empirical scaling law that describes how neural network performance changes as key factors are scaled up or down. These factors typically include the number of parameters, training dataset size, and training cost. Introduction In general, a neural model can be characterized by 4 parameters: size of the model, size of the training dataset, cost of training, error rate after training. Each of these four variables can be precisely defined into a real number, and they are empirically found to be related by simple statistical laws, called "scaling laws". These are usually written as N, D, C, L (number of parameters, dataset size, computing cost, loss). Size of the model In most cases, the size of the model is simply the number of parameters. However, one complication arises with the use of sparse models, such as mixture-of-expert models. In sparse models, during every inference, only a fraction of the parameters are used. In compariso ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Machine Learning

Machine learning (ML) is a field of inquiry devoted to understanding and building methods that 'learn', that is, methods that leverage data to improve performance on some set of tasks. It is seen as a part of artificial intelligence. Machine learning algorithms build a model based on sample data, known as training data, in order to make predictions or decisions without being explicitly programmed to do so. Machine learning algorithms are used in a wide variety of applications, such as in medicine, email filtering, speech recognition, agriculture, and computer vision, where it is difficult or unfeasible to develop conventional algorithms to perform the needed tasks.Hu, J.; Niu, H.; Carrasco, J.; Lennox, B.; Arvin, F.,Voronoi-Based Multi-Robot Autonomous Exploration in Unknown Environments via Deep Reinforcement Learning IEEE Transactions on Vehicular Technology, 2020. A subset of machine learning is closely related to computational statistics, which focuses on making pred ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Perplexity

In information theory, perplexity is a measurement of how well a probability distribution or probability model predicts a sample. It may be used to compare probability models. A low perplexity indicates the probability distribution is good at predicting the sample. Perplexity of a probability distribution The perplexity ''PP'' of a discrete probability distribution ''p'' is defined as :\mathit(p) := 2^=2^=\prod_x p(x)^ where ''H''(''p'') is the entropy (in bits) of the distribution and ''x'' ranges over events. (The base need not be 2: The perplexity is independent of the base, provided that the entropy and the exponentiation use the ''same'' base.) This measure is also known in some domains as the '' (order-1 true) diversity''. Perplexity of a random variable ''X'' may be defined as the perplexity of the distribution over its possible values ''x''. In the special case where ''p'' models a fair ''k''-sided die (a uniform distribution over ''k'' discrete events), its ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Transformer (machine Learning Model)

A transformer is a deep learning model that adopts the mechanism of self-attention, differentially weighting the significance of each part of the input data. It is used primarily in the fields of natural language processing (NLP) and computer vision (CV). Like recurrent neural networks (RNNs), transformers are designed to process sequential input data, such as natural language, with applications towards tasks such as translation and text summarization. However, unlike RNNs, transformers process the entire input all at once. The attention mechanism provides context for any position in the input sequence. For example, if the input data is a natural language sentence, the transformer does not have to process one word at a time. This allows for more parallelization than RNNs and therefore reduces training times. Transformers were introduced in 2017 by a team at Google Brain and are increasingly the model of choice for NLP problems, replacing RNN models such as long short-term me ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Mathematical Optimization

Mathematical optimization (alternatively spelled ''optimisation'') or mathematical programming is the selection of a best element, with regard to some criterion, from some set of available alternatives. It is generally divided into two subfields: discrete optimization and continuous optimization. Optimization problems of sorts arise in all quantitative disciplines from computer science and engineering to operations research and economics, and the development of solution methods has been of interest in mathematics for centuries. In the more general approach, an optimization problem consists of maxima and minima, maximizing or minimizing a Function of a real variable, real function by systematically choosing Argument of a function, input values from within an allowed set and computing the Value (mathematics), value of the function. The generalization of optimization theory and techniques to other formulations constitutes a large area of applied mathematics. More generally, op ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Backpropagation

In machine learning, backpropagation (backprop, BP) is a widely used algorithm for training feedforward artificial neural networks. Generalizations of backpropagation exist for other artificial neural networks (ANNs), and for functions generally. These classes of algorithms are all referred to generically as "backpropagation". In fitting a neural network, backpropagation computes the gradient of the loss function with respect to the weights of the network for a single input–output example, and does so efficiently, unlike a naive direct computation of the gradient with respect to each weight individually. This efficiency makes it feasible to use gradient methods for training multilayer networks, updating weights to minimize loss; gradient descent, or variants such as stochastic gradient descent, are commonly used. The backpropagation algorithm works by computing the gradient of the loss function with respect to each weight by the chain rule, computing the gradient one laye ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Nat (unit)

The natural unit of information (symbol: nat), sometimes also nit or nepit, is a unit of information, based on natural logarithms and powers of ''e'', rather than the powers of 2 and base 2 logarithms, which define the shannon. This unit is also known by its unit symbol, the nat. One nat is the information content of an event when the probability of that event occurring is 1/ ''e''. One nat is equal to shannons ≈ 1.44 Sh or, equivalently, hartleys ≈ 0.434 Hart. History Boulton and Wallace used the term ''nit'' in conjunction with minimum message length, which was subsequently changed by the minimum description length community to ''nat'' to avoid confusion with the nit used as a unit of luminance. Alan Turing used the ''natural ban''. Entropy Shannon entropy (information entropy), being the expected value of the information of an event, is a quantity of the same type and with the same units as information. The International System ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

FLOPS

In computing, floating point operations per second (FLOPS, flops or flop/s) is a measure of computer performance, useful in fields of scientific computations that require floating-point calculations. For such cases, it is a more accurate measure than measuring instructions per second. Floating-point arithmetic Floating-point arithmetic is needed for very large or very small real numbers, or computations that require a large dynamic range. Floating-point representation is similar to scientific notation, except everything is carried out in base two, rather than base ten. The encoding scheme stores the sign, the exponent (in base two for Cray and VAX, base two or ten for IEEE floating point formats, and base 16 for IBM Floating Point Architecture) and the significand (number after the radix point). While several similar formats are in use, the most common is ANSI/IEEE Std. 754-1985. This standard defines the format for 32-bit numbers called ''single precision'', as well as ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Learning Rate

In machine learning and statistics, the learning rate is a Hyperparameter (machine learning), tuning parameter in an Mathematical optimization, optimization algorithm that determines the step size at each iteration while moving toward a minimum of a loss function. Since it influences to what extent newly acquired information overrides old information, it metaphorically represents the speed at which a machine learning model "learns". In the adaptive control literature, the learning rate is commonly referred to as gain. In setting a learning rate, there is a trade-off between the rate of convergence and overshooting. While the descent direction is usually determined from the Gradient descent, gradient of the loss function, the learning rate determines how big a step is taken in that direction. A too high learning rate will make the learning jump over minima but a too low learning rate will either take too long to converge or get stuck in an undesirable local minimum. In order to ach ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Large Language Model

A large language model (LLM) is a language model consisting of a neural network with many parameters (typically billions of weights or more), trained on large quantities of unlabelled text using self-supervised learning. LLMs emerged around 2018 and perform well at a wide variety of tasks. This has shifted the focus of natural language processing research away from the previous paradigm of training specialized supervised models for specific tasks. Properties Though the term ''large language model'' has no formal definition, it often refers to deep learning models having a parameter count on the order of billions or more. LLMs are general purpose models which excel at a wide range of tasks, as opposed to being trained for one specific task (such as sentiment analysis, named entity recognition, or mathematical reasoning). The skill with which they accomplish tasks, and the range of tasks at which they are capable, seems to be a function of the amount of resources (data, parameter-si ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Chinchilla AI

Chinchilla is a family of large language models developed by the research team at DeepMind, presented in March 2022. It is named "chinchilla" because it is a further development over a previous model family named Gopher. Both model families were trained in order to investigate the scaling laws of large language models. It claimed to outperform GPT-3. It considerably simplifies downstream utilization because it requires much less computer power for inference and fine-tuning. Based on the training of previously employed language models, it has been determined that if one doubles the model size, one must also have twice the number of training tokens. This hypothesis has been used to train Chinchilla by DeepMind DeepMind Technologies is a British artificial intelligence subsidiary of Alphabet Inc. and research laboratory founded in 2010. DeepMind was List of mergers and acquisitions by Google, acquired by Google in 2014 and became a wholly owned subsid .... Similar to Gopher in ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

GPT-3

Generative Pre-trained Transformer 3 (GPT-3) is an autoregressive language model that uses deep learning to produce human-like text. Given an initial text as prompt, it will produce text that continues the prompt. The architecture is a standard transformer network (with a few engineering tweaks) with the unprecedented size of 2048-token-long context and 175 billion parameters (requiring 800 GB of storage). The training method is "generative pretraining", meaning that it is trained to predict what the next token is. The model demonstrated strong few-shot learning on many text-based tasks. It is the third-generation language prediction model in the GPT-n series (and the successor to GPT-2) created by OpenAI, a San Francisco-based artificial intelligence research laboratory. GPT-3, which was introduced in May 2020, and was in beta testing as of July 2020, is part of a trend in natural language processing (NLP) systems of pre-trained language representations. The quality of t ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Reinforcement Learning From Human Feedback

In machine learning, reinforcement learning from human feedback (RLHF) or reinforcement learning from human preferences is a technique that trains a "reward model" directly from human feedback and uses the model as a reward function to optimize an agent's policy using reinforcement learning (RL) through an optimization algorithm like Proximal Policy Optimization. The reward model is trained in advance to the policy being optimized to predict if a given output is good (high reward) or bad (low reward). RLHF can improve the robustness and exploration of RL agents, especially when the reward function is sparse or noisy. Human feedback is collected by asking humans to rank instances of the agent's behavior. These rankings can then be used to score outputs, for example with the Elo rating system. RLHF has been applied to various domains of natural language processing, such as conversational agents, text summarization, and natural language understanding. Ordinary reinforcement learnin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |