
NetOwl

NetOwl is a suite of multilingual text and identity analytics products that analyze big data in the form of text data – reports, web, social media, etc. – as well as structured entity data about people, organizations, places, and things. NetOwl utilizes artificial intelligence (AI)-based approaches, including natural language processing (NLP), machine learning (ML), and computational linguistics, to extract entities, relationships, and events; to perform sentiment analysis; to assign latitude/longitude to geographical references in text; to translate names written in foreign languages; and to perform name matching and identity resolution.


Knowledge Extraction

Knowledge extraction is the creation of knowledge from structured (relational databases, XML) and unstructured (text, documents, images) sources. The resulting knowledge needs to be in a machine-readable and machine-interpretable format and must represent knowledge in a manner that facilitates inferencing. Although it is methodically similar to information extraction (NLP) and ETL (data warehouse), the main criterion is that the extraction result goes beyond the creation of structured information or the transformation into a relational schema. It requires either the reuse of existing formal knowledge (reusing identifiers or ontologies) or the generation of a schema based on the source data. The RDB2RDF W3C group is currently standardizing a language for extraction of resource description frameworks (RDF) from relational databases. Another popular example of knowledge extraction is the transformation of Wikipedia into structured data and also the mapping to existing knowledg ...
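The idea of extracting RDF-style statements from a relational table can be sketched roughly as follows. This is only an illustration, not the language being standardized by the RDB2RDF group: the function name `rows_to_triples`, the `http://example.org/` base address, and the sample row are all assumptions made for the example.

```python
def rows_to_triples(table, rows, key, base="http://example.org/"):
    """Turn relational rows into (subject, predicate, object) triples.

    Hypothetical helper: each row becomes one subject identified by its
    key column, and every other column becomes one statement about it.
    """
    triples = []
    for row in rows:
        subject = f"{base}{table}/{row[key]}"
        for column, value in row.items():
            if column == key:
                continue  # the key is already encoded in the subject
            predicate = f"{base}{table}#{column}"
            triples.append((subject, predicate, value))
    return triples


# Made-up sample data standing in for a "person" table.
triples = rows_to_triples(
    "person",
    [{"id": 1, "name": "Ada", "city": "London"}],
    key="id",
)
for triple in triples:
    print(triple)
```

Reusing one stable identifier per row is what lets the extracted statements be linked to existing identifiers or ontologies later on.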

Semantic Relationship

Contemporary ontologies share many structural similarities, regardless of the ontology language in which they are expressed. Most ontologies describe individuals (instances), classes (concepts), attributes, and relations. Common components of ontologies include:
Individuals: instances or objects (the basic or "ground level" objects).
Classes: sets, collections, concepts, types of objects, or kinds of things.
Attributes: aspects, properties, features, characteristics, or parameters that objects (and classes) can have.
Relations: ways in which classes and individuals can be related to one another.
Function terms: complex structures formed from certain relations that can be used in place of an individual term in a statement.
Restrictions: formally stated descriptions of what must be true in order for some assertion to be accepted as input.
Rules: statements in the form of an if-then (antecedent-consequent) sentence that describe the logical inferences that can be ...
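A few of the components listed above (classes, individuals, attributes, and relations) can be modelled as plain data structures. The sketch below is a simplification for illustration only; the type names, the `worksFor` predicate, and the sample data are assumptions and do not correspond to any particular ontology language.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class OntologyClass:
    """A class (concept), e.g. the kind of thing an individual is."""
    name: str


@dataclass
class Individual:
    """An instance of a class, with attributes stored as key/value pairs."""
    name: str
    cls: OntologyClass
    attributes: dict = field(default_factory=dict)


@dataclass(frozen=True)
class Relation:
    """A named link between two individuals, referenced by name."""
    subject: str
    predicate: str
    obj: str


def related(relations, subject, predicate):
    """Return every object linked to `subject` by `predicate`."""
    return [r.obj for r in relations
            if r.subject == subject and r.predicate == predicate]


# Hypothetical sample data.
company = OntologyClass("Company")
person = OntologyClass("Person")
acme = Individual("Acme", company, {"founded": 1990})
alice = Individual("Alice", person)
relations = [Relation("Alice", "worksFor", "Acme")]

print(related(relations, "Alice", "worksFor"))
```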

Document Classification

Document classification or document categorization is a problem in library science, information science and computer science. The task is to assign a document to one or more classes or categories. This may be done "manually" (or "intellectually") or algorithmically. The intellectual classification of documents has mostly been the province of library science, while the algorithmic classification of documents is mainly in information science and computer science. The problems are overlapping, however, and there is therefore interdisciplinary research on document classification. The documents to be classified may be texts, images, music, etc. Each kind of document possesses its special classification problems. When not otherwise specified, text classification is implied. Documents may be classified according to their subjects or according to other attributes (such as document type, author, printing year etc.). In the rest of this article only subject classification is considered. T ...
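As one illustration of algorithmic subject classification, the sketch below trains a small multinomial naive Bayes classifier with add-one smoothing. Naive Bayes is chosen here only as a common textbook technique, not because the text above prescribes it; the function names, the whitespace tokenization, and the four training sentences are assumptions for the example.

```python
import math
from collections import Counter, defaultdict


def train(docs):
    """Count words per label from a list of (text, label) pairs."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    vocab = set()
    for text, label in docs:
        tokens = text.lower().split()
        word_counts[label].update(tokens)
        label_counts[label] += 1
        vocab.update(tokens)
    return word_counts, label_counts, vocab


def classify(text, model):
    """Return the label with the highest log-probability for `text`."""
    word_counts, label_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        # Log prior: share of training documents carrying this label.
        score = math.log(label_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for token in text.lower().split():
            # Add-one smoothing keeps unseen words from zeroing the score.
            count = word_counts[label][token] + 1
            score += math.log(count / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Made-up training data with two subject categories.
model = train([
    ("stocks fell as markets closed", "finance"),
    ("the bank raised interest rates", "finance"),
    ("the team won the final match", "sports"),
    ("the striker scored a late goal", "sports"),
])
label = classify("interest rates and markets", model)
print(label)
```

A real system would need far more training data and a proper tokenizer; the point is only that each document is assigned to the category whose word statistics it matches best.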

Unstructured Data

Unstructured data (or unstructured information) is information that either does not have a pre-defined data model or is not organized in a pre-defined manner. Unstructured information is typically text-heavy, but may contain data such as dates, numbers, and facts as well. This results in irregularities and ambiguities that make it difficult to understand using traditional programs as compared to data stored in fielded form in databases or annotated (semantically tagged) in documents. In 1998, Merrill Lynch said "unstructured data comprises the vast majority of data found in an organization, some estimates run as high as 80%." It is unclear what the source of this number is, but it is nonetheless accepted by some. Other sources have reported similar or higher percentages of unstructured data. IDC and Dell EMC project that data will grow to 40 zettabytes by 2020, resulting in a 50-fold growth from the beginning of 2010. More recently, IDC and Seagate predict that the global ...

Named Entity Recognition

Named-entity recognition (NER) (also known as (named) entity identification, entity chunking, and entity extraction) is a subtask of information extraction that seeks to locate and classify named entities mentioned in unstructured text into pre-defined categories such as person names, organizations, locations, medical codes, time expressions, quantities, monetary values, percentages, etc. Most research on NER/NEE systems has been structured as taking an unannotated block of text and producing an annotated block of text that highlights the names of entities. In a typical example, a person name consisting of one token, a two-token company name and a temporal expression are detected and classified. State-of-the-art NER systems for English produce near-human performance. For example, the best system entering MUC-7 scored an F-measure of 93.39%, while human annotators scored 97.60% and 96.95%. Notable NER platforms include: ...
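The step from an unannotated to an annotated block of text can be illustrated with a toy tagger. The sketch below is a deliberately naive lookup-and-regex approach, not how state-of-the-art NER systems work; the sample sentence, the `GAZETTEER` entries, the year pattern, and the bracketed output format are all assumptions made for the example.

```python
import re

# Hypothetical lookup table of known names and their categories.
GAZETTEER = {"Jim": "Person", "Acme Corp.": "Organization"}

# Four-digit years starting with 19 or 20 stand in for time expressions.
YEAR = re.compile(r"\b(?:19|20)\d{2}\b")


def annotate(text):
    """Wrap each recognized entity as [entity]Label, leaving other text as is."""
    spans = []
    for name, label in GAZETTEER.items():
        for match in re.finditer(re.escape(name), text):
            spans.append((match.start(), match.end(), label))
    for match in YEAR.finditer(text):
        spans.append((match.start(), match.end(), "Time"))
    spans.sort()  # process left to right; spans are assumed not to overlap

    pieces, pos = [], 0
    for start, end, label in spans:
        pieces.append(text[pos:start])
        pieces.append(f"[{text[start:end]}]{label}")
        pos = end
    pieces.append(text[pos:])
    return "".join(pieces)


annotated = annotate("Jim bought 300 shares of Acme Corp. in 2006.")
print(annotated)
# prints: [Jim]Person bought 300 shares of [Acme Corp.]Organization in [2006]Time.
```

Here a one-token person name, a two-token company name, and a temporal expression are tagged, while the quantity "300" is left alone because it matches neither the lookup table nor the year pattern.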

Computational Linguistics

Computational linguistics is an interdisciplinary field concerned with the computational modelling of natural language, as well as the study of appropriate computational approaches to linguistic questions. In general, computational linguistics draws upon linguistics, computer science, artificial intelligence, mathematics, logic, philosophy, cognitive science, cognitive psychology, psycholinguistics, anthropology and neuroscience, among others. Traditionally, computational linguistics emerged as an area of artificial intelligence performed by computer scientists who had specialized in the application of computers to the processing of a natural language. With the formation of the Association for Computational Linguistics (ACL) and the establishment of independent conference series, the field consolidated during the 1970s and 1980s. The Association for Computational Linguistics defines computational linguistics as: The term "comp ...

Text Mining

Text mining, also referred to as text data mining and similar to text analytics, is the process of deriving high-quality information from text. It involves "the discovery by computer of new, previously unknown information, by automatically extracting information from different written resources." Written resources may include websites, books, emails, reviews, and articles. High-quality information is typically obtained by devising patterns and trends by means such as statistical pattern learning. According to Hotho et al. (2005), we can distinguish between three different perspectives of text mining: information extraction, data mining, and a KDD (Knowledge Discovery in Databases) process. Text mining usually involves the process of structuring the input text (usually parsing, along with the addition of some derived linguistic features and the removal of others, and subsequent insertion into a database), deriving patterns within the structured data, and finally evaluation and int ...

Regulatory Compliance

In general, compliance means conforming to a rule, such as a specification, policy, standard or law. Compliance has traditionally been explained by reference to deterrence theory, according to which punishing a behavior will decrease violations both by the wrongdoer (specific deterrence) and by others (general deterrence). This view has been supported by economic theory, which has framed punishment in terms of costs and has explained compliance in terms of a cost-benefit equilibrium (Becker 1968). However, psychological research on motivation provides an alternative view: granting rewards (Deci, Koestner and Ryan, 1999) or imposing fines (Gneezy and Rustichini 2000) for a certain behavior is a form of extrinsic motivation that weakens intrinsic motivation and ultimately undermines compliance. Regulatory compliance describes the goal that organizations aspire to achieve in their efforts to ensure that they are aware of and take steps to comply with relevant laws, policies, a ...

Anti-money Laundering

Money laundering is the process of concealing the origin of money, obtained from illicit activities such as drug trafficking, corruption, embezzlement or gambling, by converting it into a legitimate source. It is a crime in many jurisdictions with varying definitions. It is usually a key operation of organized crime. In US law, money laundering is the practice of engaging in financial transactions to conceal the identity, source, or destination of illegally gained money. In UK law the common law definition is wider. The act is defined as "taking any action with property of any form which is either wholly or in part the proceeds of a crime that will disguise the fact that that property is the proceeds of a crime or obscure the beneficial ownership of said property". In the past, the term "money laundering" was applied only to financial transactions related to organized crime. Today its definition is often expanded by government and international regulators such as the US Office ...

HPCC

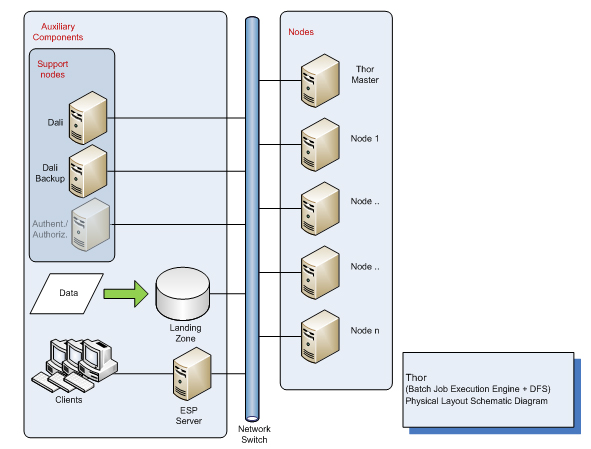

HPCC (High-Performance Computing Cluster), also known as DAS (Data Analytics Supercomputer), is an open source, data-intensive computing system platform developed by LexisNexis Risk Solutions. The HPCC platform incorporates a software architecture implemented on commodity computing clusters to provide high-performance, data-parallel processing for applications utilizing big data. The HPCC platform includes system configurations to support both parallel batch data processing (Thor) and high-performance online query applications using indexed data files (Roxie). The HPCC platform also includes a data-centric declarative programming language for parallel data processing called ECL. The public release of HPCC was announced in 2011, after ten years of in-house development (according to LexisNexis). It is an alternative to Hadoop and other big data platforms. The HPCC system architecture includes two distinct cluster processing environments, Thor and Roxie, ...