
L-BFGS

Limited-memory BFGS (L-BFGS or LM-BFGS) is an optimization algorithm in the family of quasi-Newton methods that approximates the Broyden–Fletcher–Goldfarb–Shanno algorithm (BFGS) using a limited amount of computer memory. It is a popular algorithm for parameter estimation in machine learning. The algorithm's target problem is to minimize $f(\mathbf{x})$ over unconstrained values of the real vector $\mathbf{x}$, where $f$ is a differentiable scalar function. Like the original BFGS, L-BFGS uses an estimate of the inverse Hessian matrix to steer its search through variable space, but where BFGS stores a dense $n \times n$ approximation to the inverse Hessian ($n$ being the number of variables in the problem), L-BFGS stores only a few vectors that represent the approximation implicitly. Because its memory requirement is therefore linear in $n$, the L-BFGS method is particularly well suited to optimization problems with many variables. Instead of the inverse Hessian $H_k$, L-B ...
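The idea of representing the inverse Hessian implicitly through a few stored vectors can be sketched with the standard two-loop recursion. This is a minimal illustration, not a full optimizer: the function name `two_loop_direction`, the plain-list storage of the pairs $s_i = x_{i+1} - x_i$ and $y_i = g_{i+1} - g_i$, and the choice of initial scaling are all assumptions made for the example.

```python
def dot(a, b):
    """Inner product of two equal-length lists."""
    return sum(x * y for x, y in zip(a, b))


def two_loop_direction(grad, s_list, y_list):
    """Return the search direction -H * grad without ever forming H.

    s_list and y_list hold the most recent step and gradient differences,
    oldest first. Memory use is linear in the number of variables.
    """
    q = list(grad)
    history = []
    # First loop: walk from the newest pair back to the oldest.
    for s, y in zip(reversed(s_list), reversed(y_list)):
        rho = 1.0 / dot(y, s)
        alpha = rho * dot(s, q)
        q = [qi - alpha * yi for qi, yi in zip(q, y)]
        history.append((rho, alpha))
    # Assumed initial matrix: a scaled identity built from the newest pair.
    if s_list:
        gamma = dot(s_list[-1], y_list[-1]) / dot(y_list[-1], y_list[-1])
    else:
        gamma = 1.0
    r = [gamma * qi for qi in q]
    # Second loop: walk from the oldest pair forward to the newest.
    for (s, y), (rho, alpha) in zip(zip(s_list, y_list), reversed(history)):
        beta = rho * dot(y, r)
        r = [ri + (alpha - beta) * si for ri, si in zip(r, s)]
    return [-ri for ri in r]
```

With an empty history the function simply returns the negative gradient, so the first iteration behaves like steepest descent.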

Compact Quasi-Newton Representation

The compact representation for quasi-Newton methods is a matrix decomposition, which is typically used in gradient-based optimization algorithms or for solving nonlinear systems. The decomposition uses a low-rank representation for the direct and/or inverse Hessian or the Jacobian of a nonlinear system. Because of this, the compact representation is often used for large problems and constrained optimization. Definition: The compact representation of a quasi-Newton matrix for the inverse Hessian $H_k$ or direct Hessian $B_k$ of a nonlinear objective function $f(x):\mathbb{R}^n \to \mathbb{R}$ expresses a sequence of recursive rank-1 or rank-2 matrix updates as one rank-$k$ or rank-$2k$ update of an initial matrix. Because it is derived from quasi-Newton updates, it uses differences of iterates and gradients $\nabla f(x_k) = g_k$ in its definition $\{s_{i-1} = x_i - x_{i-1},\ y_{i-1} = g_i - g_{i-1}\}_{i=1}^{k}$. In particular, for $r=k$ or $r=2k$ the rectangular ...

Broyden–Fletcher–Goldfarb–Shanno Algorithm

In numerical optimization, the Broyden–Fletcher–Goldfarb–Shanno (BFGS) algorithm is an iterative method for solving unconstrained nonlinear optimization problems. Like the related Davidon–Fletcher–Powell method, BFGS determines the descent direction by preconditioning the gradient with curvature information. It does so by gradually improving an approximation to the Hessian matrix of the loss function, obtained only from gradient evaluations (or approximate gradient evaluations) via a generalized secant method. Since the updates of the BFGS curvature matrix do not require matrix inversion, its computational complexity is only $\mathcal{O}(n^2)$, compared to $\mathcal{O}(n^3)$ in Newton's method. Also in common use is L-BFGS, which is a limited-memory version of BFGS that is particularly suited to problems with very large numbers of variables (e.g., >1000). The BFGS-B variant handles simple box constraints. The BFGS matrix also admits a compact representation, which makes it b ...
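To make the $\mathcal{O}(n^2)$ cost concrete, the following sketch applies the standard rank-two BFGS update directly to an inverse-Hessian approximation, using only matrix-vector and outer products. The function name `bfgs_inverse_update` and the nested-list matrix layout are assumptions for illustration, and the code assumes the current approximation `H` is symmetric.

```python
def bfgs_inverse_update(H, s, y):
    """Return the updated inverse-Hessian approximation.

    H is a symmetric n x n matrix (list of lists), s is the step
    x_new - x_old, and y is the gradient difference g_new - g_old.
    Every operation below costs at most O(n^2); no matrix is inverted.
    """
    n = len(s)
    sy = sum(si * yi for si, yi in zip(s, y))
    if sy <= 0.0:
        raise ValueError("curvature condition s^T y > 0 is violated")
    # Matrix-vector product H y: O(n^2).
    Hy = [sum(H[i][j] * y[j] for j in range(n)) for i in range(n)]
    yHy = sum(yi * hyi for yi, hyi in zip(y, Hy))
    coeff = (sy + yHy) / (sy * sy)
    # Rank-two correction built from outer products: O(n^2).
    return [
        [
            H[i][j] + coeff * s[i] * s[j] - (Hy[i] * s[j] + s[i] * Hy[j]) / sy
            for j in range(n)
        ]
        for i in range(n)
    ]
```

The updated matrix satisfies the secant condition, meaning that multiplying it by `y` reproduces `s`, which is a convenient sanity check when experimenting with the update.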

Quasi-Newton Method

In numerical analysis, a quasi-Newton method is an iterative numerical method used either to find zeroes or to find local maxima and minima of functions via an iterative recurrence formula much like the one for Newton's method, except using approximations of the derivatives of the functions in place of exact derivatives. Newton's method requires the Jacobian matrix of all partial derivatives of a multivariate function when used to search for zeros or the Hessian matrix when used for finding extrema. Quasi-Newton methods, on the other hand, can be used when the Jacobian matrices or Hessian matrices are unavailable or are impractical to compute at every iteration. Some iterative methods that reduce to Newton's method, such as sequential quadratic programming, may also be considered quasi-Newton methods. Search for zeros (root finding): Newton's method to find zeroes of a function $g$ of multiple variables is given by $x_{n+1} = x_n - [J_g(x_n)]^{-1} g(x_n)$, where $[J_g(x_n)]^{-1}$ is the left inverse ...
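In one dimension, the simplest instance of replacing the exact derivative with an approximation is the secant method, which estimates the slope from the last two iterates. The sketch below is illustrative only; the function name `secant_root`, the tolerance, and the iteration cap are assumptions chosen for the example.

```python
def secant_root(g, x0, x1, tol=1e-12, max_iter=50):
    """Find a zero of g using a secant slope in place of the exact derivative."""
    g0, g1 = g(x0), g(x1)
    for _ in range(max_iter):
        # Stop on convergence, or when the secant slope cannot be formed.
        if abs(g1) < tol or x1 == x0 or g1 == g0:
            break
        slope = (g1 - g0) / (x1 - x0)  # approximation of g'(x1)
        x0, g0 = x1, g1
        x1 = x1 - g1 / slope           # Newton-like step with the approximate slope
        g1 = g(x1)
    return x1


# Usage: approximate the positive zero of x^2 - 2.
root = secant_root(lambda x: x * x - 2.0, 1.0, 2.0)
print(root)
```

The update line has the same shape as the Newton iteration; only the source of the derivative information differs.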

Conditional Random Field

Conditional random fields (CRFs) are a class of statistical modeling methods often applied in pattern recognition and machine learning and used for structured prediction. Whereas a classifier predicts a label for a single sample without considering "neighbouring" samples, a CRF can take context into account. To do so, the predictions are modelled as a graphical model, which represents the presence of dependencies between the predictions. The kind of graph used depends on the application. For example, in natural language processing, "linear chain" CRFs are popular, for which each prediction is dependent only on its immediate neighbours. In image processing, the graph typically connects locations to nearby and/or similar locations to enforce that they receive similar predictions. Other examples where CRFs are used are: labeling or parsing of sequential data for natural language processing or biological sequences, part-of-speech tagging, shallow parsing, named entity recogn ...

Stochastic Gradient Descent

Stochastic gradient descent (often abbreviated SGD) is an iterative method for optimizing an objective function with suitable smoothness properties (e.g. differentiable or subdifferentiable). It can be regarded as a stochastic approximation of gradient descent optimization, since it replaces the actual gradient (calculated from the entire data set) by an estimate thereof (calculated from a randomly selected subset of the data). Especially in high-dimensional optimization problems this reduces the very high computational burden, achieving faster iterations in exchange for a lower convergence rate. The basic idea behind stochastic approximation can be traced back to the Robbins–Monro algorithm of the 1950s. Today, stochastic gradient descent has become an important optimization method in machine learning. Background: Both statistical estimation and ma ...
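The replacement of the full gradient by an estimate from a random subset can be sketched on a small least-squares problem. Everything specific here is an assumption for illustration: the function name `sgd_linear_fit`, the synthetic noise-free data, the fixed learning rate, and the batch size.

```python
import random


def sgd_linear_fit(xs, ys, lr=0.5, steps=2000, batch_size=5, seed=0):
    """Fit y ~ w * x + b by SGD on the loss 0.5 * (w * x + b - y)^2."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = range(len(xs))
    for _ in range(steps):
        # Estimate the gradient from a randomly selected subset of the data.
        batch = rng.sample(indices, batch_size)
        grad_w = sum((w * xs[i] + b - ys[i]) * xs[i] for i in batch) / batch_size
        grad_b = sum((w * xs[i] + b - ys[i]) for i in batch) / batch_size
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Synthetic data on the line y = 3x + 1 (assumed for the example).
xs = [i / 49 for i in range(50)]
ys = [3.0 * x + 1.0 for x in xs]
w, b = sgd_linear_fit(xs, ys)
print(w, b)
```

Each step touches only `batch_size` points rather than the whole data set, which is the trade described above: cheaper iterations at the cost of a noisier path to the minimizer.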

Multinomial Logistic Regression

In statistics, multinomial logistic regression is a classification method that generalizes logistic regression to multiclass problems, i.e. with more than two possible discrete outcomes. That is, it is a model that is used to predict the probabilities of the different possible outcomes of a categorically distributed dependent variable, given a set of independent variables (which may be real-valued, binary-valued, categorical-valued, etc.). Multinomial logistic regression is known by a variety of other names, including polytomous LR, multiclass LR, softmax regression, multinomial logit (mlogit), the maximum entropy (MaxEnt) classifier, and the conditional maximum entropy model. Background: Multinomial logistic regression is used when the dependent variable in question is nominal (equivalently categorical, meaning that it falls into any one of a set of categories that cannot be ordered in any meaningful way) and for which there are more than two categories. Some example ...
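How such a model turns independent variables into outcome probabilities can be sketched with the softmax form that gives "softmax regression" its name. This is a prediction-only sketch under assumed, hand-picked parameters; the names `softmax` and `predict_proba` and the example weights are illustrative and no fitting is performed.

```python
import math


def softmax(scores):
    """Map real-valued scores to probabilities that sum to 1."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_proba(weights, biases, x):
    """One linear score per class, followed by softmax."""
    scores = [
        sum(wi * xi for wi, xi in zip(w, x)) + b
        for w, b in zip(weights, biases)
    ]
    return softmax(scores)


# Three classes, two features (parameter values are made up for the example).
weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
biases = [0.0, 0.0, 0.0]
probs = predict_proba(weights, biases, [2.0, 0.5])
print(probs)
```

The class with the largest linear score receives the largest probability, and with all scores equal the model is indifferent between the classes.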

Optimization (mathematics)

Mathematical optimization (alternatively spelled optimisation) or mathematical programming is the selection of a best element, with regard to some criteria, from some set of available alternatives. It is generally divided into two subfields: discrete optimization and continuous optimization. Optimization problems arise in all quantitative disciplines from computer science and engineering to operations research and economics, and the development of solution methods has been of interest in mathematics for centuries. In the more general approach, an optimization problem consists of maximizing or minimizing a real function by systematically choosing input values from within an allowed set and computing the value of the function. The generalization of optimization theory and techniques to other formulations constitutes a large area of applied mathematics. Optimization problems: Opti ...

Sparse Matrix

In numerical analysis and scientific computing, a sparse matrix or sparse array is a matrix in which most of the elements are zero. There is no strict definition regarding the proportion of zero-value elements for a matrix to qualify as sparse, but a common criterion is that the number of non-zero elements is roughly equal to the number of rows or columns. By contrast, if most of the elements are non-zero, the matrix is considered dense. The number of zero-valued elements divided by the total number of elements (e.g., $m \times n$ for an $m \times n$ matrix) is sometimes referred to as the sparsity of the matrix. Conceptually, sparsity corresponds to systems with few pairwise interactions. For example, consider a line of balls connected by springs from one to the next: this is a sparse system, as only adjacent balls are coupled. By contrast, if the same line of balls were to have springs connecting each ball to all other balls, the system would correspond to a dense matrix. ...
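The ball-and-spring example and the sparsity ratio can be checked with a few lines of code. In this sketch the function names are hypothetical, and a value of 1 is used as a placeholder for any non-zero coupling (including each ball's own diagonal entry), which is an assumption made to keep the example small.

```python
def spring_coupling_matrix(n, all_pairs=False):
    """Build an n x n matrix marking which balls are coupled.

    With all_pairs=False only adjacent balls are coupled (a tridiagonal
    pattern); with all_pairs=True every ball is coupled to every other.
    """
    matrix = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j or all_pairs or abs(i - j) == 1:
                matrix[i][j] = 1
    return matrix


def sparsity(matrix):
    """Number of zero-valued elements divided by the total number of elements."""
    total = sum(len(row) for row in matrix)
    zeros = sum(1 for row in matrix for value in row if value == 0)
    return zeros / total


chain = spring_coupling_matrix(100)
dense = spring_coupling_matrix(100, all_pairs=True)
print(sparsity(chain), sparsity(dense))
```

For the chain, the number of non-zero entries grows linearly with the number of balls, while the fully connected system fills the whole matrix.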

Convex Function

In mathematics, a real-valued function is called convex if the line segment between any two distinct points on the graph of the function lies above or on the graph between the two points. Equivalently, a function is convex if its epigraph (the set of points on or above the graph of the function) is a convex set. In simple terms, a convex function graph is shaped like a cup $\cup$ (or a straight line like a linear function), while a concave function's graph is shaped like a cap $\cap$. A twice-differentiable function of a single variable is convex if and only if its second derivative is nonnegative on its entire domain. Well-known examples of convex functions of a single variable include a linear function $f(x) = cx$ (where $c$ is a real number), a quadratic function $cx^2$ ($c$ as a nonnegative real number) and an exponential function $ce^x$ ($c$ as a nonnegative real number). Convex functions pl ...
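The line-segment definition can be tested numerically on sampled points. The sketch below can only refute convexity, never prove it, since it checks finitely many points; the function name `looks_convex`, the sample count, and the tolerance are assumptions for the example.

```python
import math


def looks_convex(f, lo, hi, samples=50, tol=1e-12):
    """Check f(t*x + (1-t)*y) <= t*f(x) + (1-t)*f(y) on a grid in [lo, hi].

    Returns False as soon as the graph rises above a chord; returns True
    if no violation is found among the sampled points.
    """
    points = [lo + (hi - lo) * i / (samples - 1) for i in range(samples)]
    for x in points:
        for y in points:
            for t in (0.25, 0.5, 0.75):
                mid = t * x + (1 - t) * y
                if f(mid) > t * f(x) + (1 - t) * f(y) + tol:
                    return False
    return True


print(looks_convex(lambda x: x * x, -2.0, 2.0))   # quadratic with c = 1
print(looks_convex(math.exp, -2.0, 2.0))          # exponential with c = 1
print(looks_convex(lambda x: x ** 3, -2.0, 2.0))  # not convex on this interval
```

The cubic fails because on the negative half of the interval its graph lies above some chords, matching the second-derivative criterion (its second derivative is negative there).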

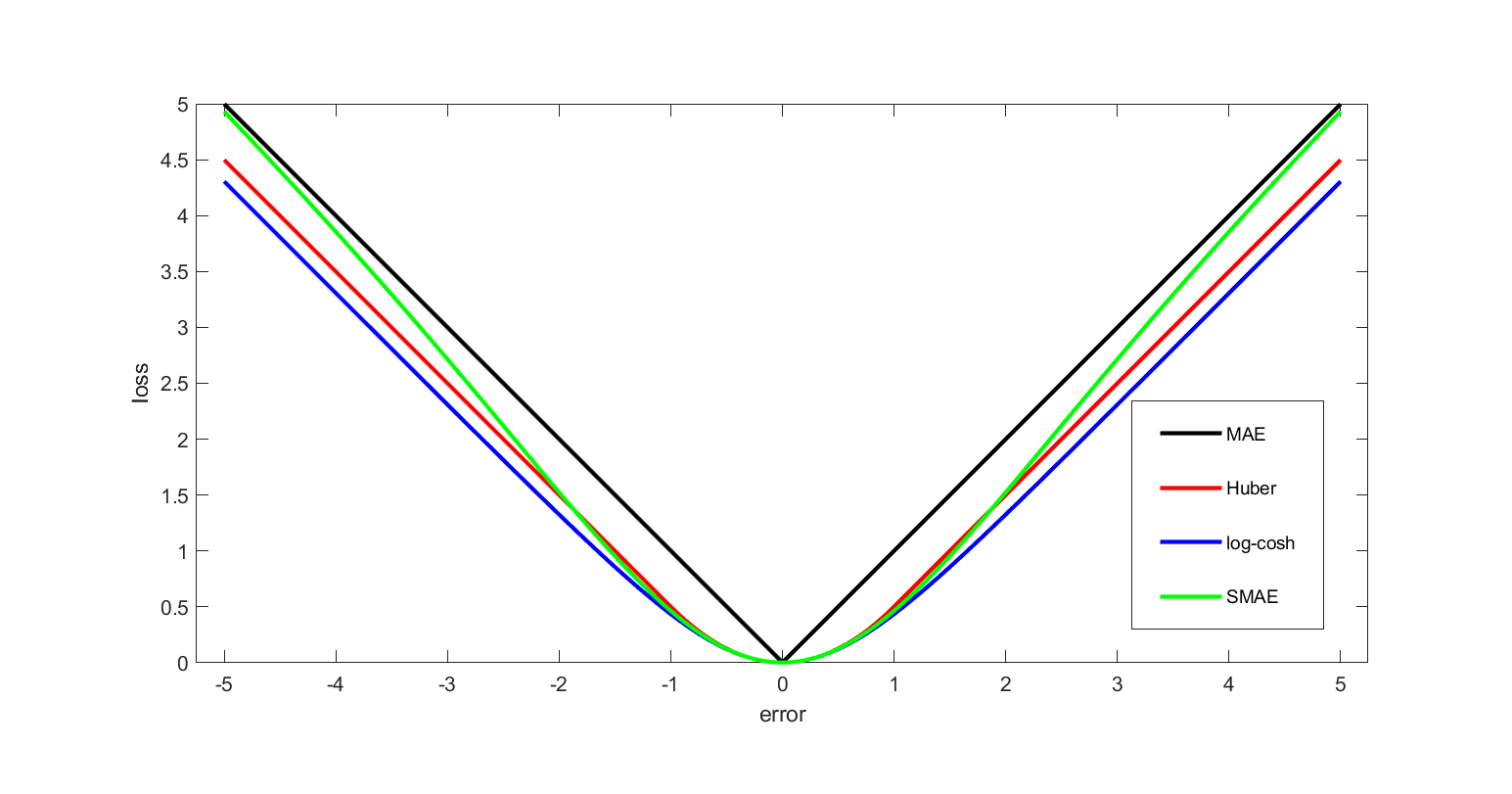

Loss Function

In mathematical optimization and decision theory, a loss function or cost function (sometimes also called an error function) is a function that maps an event or values of one or more variables onto a real number intuitively representing some "cost" associated with the event. An optimization problem seeks to minimize a loss function. An objective function is either a loss function or its opposite (in specific domains, variously called a reward function, a profit function, a utility function, a fitness function, etc.), in which case it is to be maximized. The loss function could include terms from several levels of the hierarchy. In statistics, typically a loss function is used for parameter estimation, and the event in question is some function of the difference between estimated and true values for an instance of data. The concept, as old as Laplace, was reintroduced in statistics by Abraham Wald in the middle of the 20th century. In the context of economi ...

Online Machine Learning

In computer science, online machine learning is a method of machine learning in which data becomes available in a sequential order and is used to update the best predictor for future data at each step, as opposed to batch learning techniques, which generate the best predictor by learning on the entire training data set at once. Online learning is a common technique used in areas of machine learning where it is computationally infeasible to train over the entire dataset, requiring out-of-core algorithms. It is also used in situations where it is necessary for the algorithm to dynamically adapt to new patterns in the data, or when the data itself is generated as a function of time, e.g., prediction of prices in the international financial markets. Online learning algorithms may be prone to catastrophic interference, a problem that can be addressed by incremental learning approaches. Introduction: In the setting of supervised learning, a function $f : X \to Y$ is to ...

Sign (mathematics)

In mathematics, the sign of a real number is its property of being either positive, negative, or 0. Depending on local conventions, zero may be considered as having its own unique sign, having no sign, or having both positive and negative sign. In some contexts, it makes sense to distinguish between a positive and a negative zero. In mathematics and physics, the phrase "change of sign" is associated with exchanging an object for its additive inverse (multiplication with −1, negation), an operation which is not restricted to real numbers. It applies among other objects to vectors, matrices, and complex numbers, which are not prescribed to be only either positive, negative, or zero. The word "sign" is also often used to indicate binary aspects of mathematical or scientific objects, such as odd and even (sign of a permutation), sense of orientation or rotation (cw/ccw), one-sided limits, and other concepts described below. Sign of a number: Numbers from various number ...
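Floating-point arithmetic is one context where the distinction between a positive and a negative zero shows up in practice. The sketch below contrasts a three-valued sign function with a sign-bit query; the function names `sign` and `sign_bit` are assumptions for the example, while `math.copysign` is part of the Python standard library.

```python
import math


def sign(x):
    """Three-valued sign: 1 for positive, -1 for negative, 0 for zero."""
    if x > 0:
        return 1
    if x < 0:
        return -1
    return 0


def sign_bit(x):
    """Return 1.0 or -1.0 according to the sign bit, so -0.0 maps to -1.0."""
    return math.copysign(1.0, x)


print(sign(-3.5), sign(0.0), sign(-0.0))
print(sign_bit(0.0), sign_bit(-0.0))
```

The two zeros compare equal, so `sign` treats them identically, whereas `sign_bit` tells them apart.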