Hurdle Model

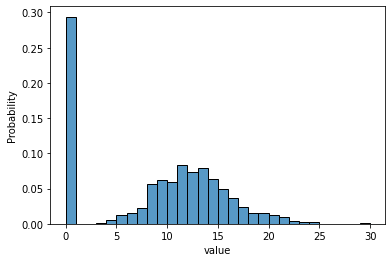

A hurdle model is a class of statistical models in which a random variable is modelled in two parts: the first gives the probability of attaining the value 0, and the second models the probability of the non-zero values. The use of hurdle models is often motivated by an excess of zeroes in the data that is not sufficiently accounted for by more standard statistical models. In a hurdle model, a random variable $x$ is modelled as

$$\Pr(x = 0) = \theta$$

$$\Pr(x \ne 0) = p_{x \ne 0}(x)$$

where $p_{x \ne 0}(x)$ is a truncated probability distribution function, truncated at 0. Hurdle models were introduced by John G. Cragg in 1971, where the non-zero values of $x$ were modelled using a normal model, and a probit model was used to model the zeros. The probit part of the model was said to model the presence of "hurdles" that must be overcome for the values of $x$ to attain non-zero values, hence the designation *hurdle model*. Hurdle models were later developed for count data, with Poisson, ge ...
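As a minimal sketch of the two-part structure described above, the following Python computes the probability mass function of a count hurdle model. The zero-truncated Poisson for the positive part, the explicit `(1 - theta)` weight that makes the probabilities sum to one, and the names `hurdle_poisson_pmf`, `theta` and `lam` are illustrative assumptions, not taken from the text.

```python
import math


def poisson_pmf(k: int, lam: float) -> float:
    """Ordinary Poisson probability Pr(K = k) with mean lam."""
    return math.exp(-lam) * lam**k / math.factorial(k)


def hurdle_poisson_pmf(k: int, theta: float, lam: float) -> float:
    """Pr(x = k) for a hurdle model (illustrative parameterisation).

    theta is the probability of a zero; positive counts follow a
    Poisson(lam) distribution truncated at 0 (an assumption here).
    """
    if k < 0:
        return 0.0
    if k == 0:
        return theta
    # Truncation at 0: renormalise by the Poisson mass on k >= 1.
    truncated = poisson_pmf(k, lam) / (1.0 - math.exp(-lam))
    # Weight by the probability of clearing the hurdle.
    return (1.0 - theta) * truncated


# The probabilities over the whole support should sum to (almost) one.
total = sum(hurdle_poisson_pmf(k, theta=0.4, lam=2.5) for k in range(60))
print(round(total, 9))
```

Because the zero probability is a free parameter in this sketch, it can be larger or smaller than the zero probability of the untruncated count distribution.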

Statistical Model

A statistical model is a mathematical model that embodies a set of statistical assumptions concerning the generation of sample data (and similar data from a larger population). A statistical model represents, often in considerably idealized form, the data-generating process. A statistical model is usually specified as a mathematical relationship between one or more random variables and other non-random variables. As such, a statistical model is "a formal representation of a theory" (Herman Adèr quoting Kenneth Bollen). All statistical hypothesis tests and all statistical estimators are derived via statistical models. More generally, statistical models are part of the foundation of statistical inference.

Informally, a statistical model can be thought of as a statistical assumption (or set of statistical assumptions) with a certain property: that the assumption allows us to calculate the probability of any event. As an example, consider a pair of ordinary six ...

Truncated Probability Distribution

In statistics, a truncated distribution is a conditional distribution that results from restricting the domain of some other probability distribution. Truncated distributions arise in practical statistics in cases where the ability to record, or even to know about, occurrences is limited to values which lie above or below a given threshold or within a specified range. For example, if the dates of birth of children in a school are examined, these would typically be subject to truncation relative to those of all children in the area given that the school accepts only children in a given age range on a specific date. There would be no information about how many children in the locality had dates of birth before or after the school's cutoff dates if only a direct approach to the school were used to obtain information. Where sampling is such as to retain knowledge of items that fall outside the required range, without recording the actual values, this is known as censoring, as opposed ...

Normal Distribution

In statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is

$$f(x) = \frac{1}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2}$$

The parameter $\mu$ is the mean or expectation of the distribution (and also its median and mode), while the parameter $\sigma$ is its standard deviation. The variance of the distribution is $\sigma^2$. A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate. Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known. Their importance is partly due to the central limit theorem. It states that, under some conditions, the average of many samples (observations) of a random variable with finite mean and variance is itself a random variable, whose distribution converges to a normal dist ...

Probit

In probability theory and statistics, the probit function is the quantile function associated with the standard normal distribution. It has applications in data analysis and machine learning, in particular exploratory statistical graphics and specialized regression modeling of binary response variables. Mathematically, the probit is the inverse of the cumulative distribution function of the standard normal distribution, which is denoted as $\Phi(z)$, so the probit is defined as

$$\operatorname{probit}(p) = \Phi^{-1}(p) \quad \text{for} \quad p \in (0,1).$$

Largely because of the central limit theorem, the standard normal distribution plays a fundamental role in probability theory and statistics. If we consider the familiar fact that the standard normal distribution places 95% of probability between −1.96 and 1.96, and is symmetric around zero, it follows that

$$\Phi(-1.96) = 0.025 = 1-\Phi(1.96).$$

The probit function gives the 'inverse' computation, generating a value of a standard normal ...
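The relationship between the probit and the standard normal distribution function can be checked numerically. The sketch below relies on `statistics.NormalDist` from the Python standard library; the wrapper name `probit` is an illustrative choice.

```python
from statistics import NormalDist

# Standard normal distribution: mean 0, standard deviation 1.
std_normal = NormalDist(mu=0.0, sigma=1.0)


def probit(p: float) -> float:
    """Inverse of the standard normal CDF, defined for 0 < p < 1."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")
    return std_normal.inv_cdf(p)


# Lower-tail probability at -1.96, close to 0.025.
lower_tail = std_normal.cdf(-1.96)

# The inverse computation: the 97.5% quantile, close to 1.96.
upper_quantile = probit(0.975)

print(round(lower_tail, 3), round(upper_quantile, 2))
```

Applying `probit` to the output of `std_normal.cdf` returns the original value up to floating-point error, which is what "inverse" means here.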

Poisson Distribution

In probability theory and statistics, the Poisson distribution is a discrete probability distribution that expresses the probability of a given number of events occurring in a fixed interval of time or space if these events occur with a known constant mean rate and independently of the time since the last event. It is named after French mathematician Siméon Denis Poisson. The Poisson distribution can also be used for the number of events in other specified interval types such as distance, area, or volume. For instance, a call center receives an average of 180 calls per hour, 24 hours a day. The calls are independent; receiving one does not change the probability of when the next one will arrive. The number of calls received during any minute has a Poisson probability distribution with mean 3: the most likely numbers are 2 and 3 but 1 and 4 are also likely and there is a small probability of it being as low as zero and a very small probability it could be 10. ...
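The call-center figures above can be reproduced with a few lines of Python. This is a minimal sketch using the standard Poisson probability mass function; the variable names are illustrative.

```python
import math


def poisson_pmf(k: int, lam: float) -> float:
    """Pr(K = k) for a Poisson distribution with mean lam."""
    return math.exp(-lam) * lam**k / math.factorial(k)


calls_per_hour = 180
mean_per_minute = calls_per_hour / 60  # 3 calls per minute on average

# Probability of seeing 0..10 calls in any given minute.
probs = {k: poisson_pmf(k, mean_per_minute) for k in range(11)}

for k, p in probs.items():
    print(f"{k:2d} calls: {p:.4f}")
```

With mean 3, the values 2 and 3 share the highest probability, while 10 calls in a minute has probability below 0.001.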

Geometric Distribution

In probability theory and statistics, the geometric distribution is either of two discrete probability distributions:

* The probability distribution of the number $X$ of Bernoulli trials needed to get one success, supported on the set $\{1, 2, 3, \ldots\}$;
* The probability distribution of the number $Y = X - 1$ of failures before the first success, supported on the set $\{0, 1, 2, \ldots\}$.

Which of these is called the geometric distribution is a matter of convention and convenience. These two different geometric distributions should not be confused with each other. Often, the name *shifted* geometric distribution is adopted for the former one (distribution of the number $X$); however, to avoid ambiguity, it is considered wise to indicate which is intended, by mentioning the support explicitly. The geometric distribution gives the probability that the first occurrence of success requires $k$ independent trials, each with success probability $p$. If the probability of suc ...
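The two conventions differ only by a shift of one in the support, which the following sketch makes explicit. The function names are illustrative, and the formulas are the standard ones for independent Bernoulli trials with success probability `p`.

```python
def geometric_trials_pmf(k: int, p: float) -> float:
    """Pr(X = k): first success occurs on trial k, support {1, 2, 3, ...}."""
    if k < 1:
        return 0.0
    return (1.0 - p) ** (k - 1) * p


def geometric_failures_pmf(k: int, p: float) -> float:
    """Pr(Y = k): k failures before the first success, support {0, 1, 2, ...}."""
    if k < 0:
        return 0.0
    return (1.0 - p) ** k * p


p = 0.25
# Y = X - 1, so Pr(Y = k) equals Pr(X = k + 1) for every k >= 0.
pairs = [(geometric_failures_pmf(k, p), geometric_trials_pmf(k + 1, p))
         for k in range(5)]
print(pairs)
```

Stating the support alongside the function, as the docstrings do, is one way to avoid the ambiguity mentioned above.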

Negative Binomial Distribution

In probability theory and statistics, the negative binomial distribution is a discrete probability distribution that models the number of failures in a sequence of independent and identically distributed Bernoulli trials before a specified (non-random) number of successes (denoted $r$) occurs. For example, we can define rolling a 6 on a die as a success, and rolling any other number as a failure, and ask how many failure rolls will occur before we see the third success ($r=3$). In such a case, the probability distribution of the number of failures that appear will be a negative binomial distribution. An alternative formulation is to model the number of total trials (instead of the number of failures). In fact, for a specified (non-ra ...
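The die example can be worked through numerically. The sketch below uses the standard probability mass function for the number of failures before the $r$-th success, $\Pr(K = k) = \binom{k + r - 1}{k} (1 - p)^k p^r$; the function and variable names are illustrative, and `math.comb` requires Python 3.8 or later.

```python
import math


def negative_binomial_pmf(k: int, r: int, p: float) -> float:
    """Pr(K = k): k failures occur before the r-th success."""
    if k < 0:
        return 0.0
    return math.comb(k + r - 1, k) * (1.0 - p) ** k * p**r


r = 3        # stop at the third six
p = 1 / 6    # probability of rolling a six

# Truncate the infinite support where the remaining mass is negligible.
support = range(400)
total = sum(negative_binomial_pmf(k, r, p) for k in support)
mean_failures = sum(k * negative_binomial_pmf(k, r, p) for k in support)

print(round(total, 6), round(mean_failures, 6))
```

The mean number of failures agrees with the closed form $r(1 - p)/p$, which is 15 for this example.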

Zero-inflated Model

In statistics, a zero-inflated model is a statistical model based on a zero-inflated probability distribution, i.e. a distribution that allows for frequent zero-valued observations.

One well-known zero-inflated model is Diane Lambert's zero-inflated Poisson model, which concerns a random event containing excess zero-count data in unit time. For example, the number of insurance claims within a population for a certain type of risk would be zero-inflated by those people who have not taken out insurance against the risk and thus are unable to claim. The zero-inflated Poisson (ZIP) model mixes two zero-generating processes. The first process generates zeros. The second process is governed by a Poisson distribution that generates counts, some of which may be zero. The mixture distribution is described as follows:

$$\Pr(Y = 0) = \pi + (1 - \pi) e^{-\lambda}$$

$$\Pr(Y = y_i) = (1 - \pi) \frac{\lambda^{y_i} e^{-\lambda}}{y_i!}, \qquad y_i = 1, 2, 3, \ldots$$

where the outcome variable $y_i$ has any non-negative in ...
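The mixture of the two zero-generating processes can be sketched directly from the ZIP probabilities. In the code below, `pi` is the weight of the always-zero process and `lam` is the Poisson mean; these parameter names and the example values are illustrative assumptions.

```python
import math


def poisson_pmf(k: int, lam: float) -> float:
    """Pr(K = k) for a Poisson distribution with mean lam."""
    return math.exp(-lam) * lam**k / math.factorial(k)


def zip_pmf(y: int, pi: float, lam: float) -> float:
    """Pr(Y = y) under a zero-inflated Poisson model."""
    if y < 0:
        return 0.0
    if y == 0:
        # Zeros come from both the structural-zero process and the Poisson.
        return pi + (1.0 - pi) * math.exp(-lam)
    # Positive counts can only come from the Poisson component.
    return (1.0 - pi) * poisson_pmf(y, lam)


pi, lam = 0.3, 2.0
total = sum(zip_pmf(y, pi, lam) for y in range(60))
excess_zero_mass = zip_pmf(0, pi, lam) - poisson_pmf(0, lam)

print(round(total, 9), excess_zero_mass > 0)
```

For any `pi` between 0 and 1 the zero probability exceeds that of the plain Poisson with the same mean, which is the sense in which the distribution is zero-inflated.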

Mixture Model

In statistics, a mixture model is a probabilistic model for representing the presence of subpopulations within an overall population, without requiring that an observed data set should identify the sub-population to which an individual observation belongs. Formally a mixture model corresponds to the mixture distribution that represents the probability distribution of observations in the overall population. However, while problems associated with "mixture distributions" relate to deriving the properties of the overall population from those of the sub-populations, "mixture models" are used to make statistical inferences about the properties of the sub-populations given only observations on the pooled population, without sub-population identity information. Mixture models should not be confused with models for compositional data, i.e., data whose components are constrained to sum to a constant value (1, 100%, etc.). However, compositional models can be thought of as mixture models ...

Truncated Normal Hurdle Model

In econometrics, the truncated normal hurdle model is a variant of the Tobit model and was first proposed by Cragg in 1971. A standard Tobit model is represented as $y = (x\beta + u)\,1[x\beta + u > 0]$, where $u \mid x \sim N(0, \sigma^2)$. This model construction implicitly imposes two first-order assumptions:

# Since $\partial P[y>0]/\partial x_j = \varphi(x\beta/\sigma)\,\beta_j/\sigma$ and $\partial \operatorname{E}[y \mid x, y>0]/\partial x_j = \beta_j\{1 - \theta(x\beta/\sigma)\}$, where $\theta(z) = \lambda(z)[z + \lambda(z)]$ lies between 0 and 1 and $\lambda(z) = \varphi(z)/\Phi(z)$ is the inverse Mills ratio, the partial effects of $x_j$ on the probability $P[y>0]$ and on the conditional expectation $\operatorname{E}[y \mid x, y>0]$ have the same sign.
# The relative effects of $x_h$ and $x_j$ on $P[y>0]$ and $\operatorname{E}[y \mid x, y>0]$ are identical, i.e.:

$$\frac{\partial P[y>0]/\partial x_h}{\partial P[y>0]/\partial x_j} = \frac{\partial \operatorname{E}[y \mid x, y>0]/\partial x_h}{\partial \operatorname{E}[y \mid x, y>0]/\partial x_j} = \frac{\beta_h}{\beta_j}$$

However, these two implicit assumptions are too strong and inconsistent with many contexts in economics. For instance, when we need to decide whether to invest and build a factory, the construction cost might be more influential than the product price; but o ...
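The restriction on relative effects in the Tobit model can be illustrated numerically for the probability $P[y>0] = \Phi(x\beta/\sigma)$. The following sketch compares the analytic partial effect $\varphi(x\beta/\sigma)\,\beta_j/\sigma$ with a finite-difference approximation and confirms that the ratio of two partial effects reduces to $\beta_h/\beta_j$. The covariate values, coefficients and function names are made-up illustrations, not estimates from any data.

```python
from statistics import NormalDist

std_normal = NormalDist()  # standard normal: cdf is Phi, pdf is phi


def linear_index(x, beta):
    """Compute the index x * beta as a dot product."""
    return sum(xi * bi for xi, bi in zip(x, beta))


def prob_positive(x, beta, sigma):
    """P[y > 0 | x] = Phi(x beta / sigma) in the standard Tobit model."""
    return std_normal.cdf(linear_index(x, beta) / sigma)


def partial_effect_prob(x, beta, sigma, j):
    """Analytic derivative of P[y > 0 | x] with respect to x_j."""
    return std_normal.pdf(linear_index(x, beta) / sigma) * beta[j] / sigma


def finite_difference(x, beta, sigma, j, h=1e-6):
    """Central-difference approximation to the same derivative."""
    up, down = list(x), list(x)
    up[j] += h
    down[j] -= h
    return (prob_positive(up, beta, sigma)
            - prob_positive(down, beta, sigma)) / (2 * h)


# Hypothetical values chosen only for illustration.
x = [1.0, 0.5, -0.2]
beta = [0.3, 1.2, -0.8]
sigma = 1.5

analytic = partial_effect_prob(x, beta, sigma, 1)
numeric = finite_difference(x, beta, sigma, 1)
ratio = analytic / partial_effect_prob(x, beta, sigma, 2)

print(analytic, numeric, ratio, beta[1] / beta[2])
```

The density factor $\varphi(x\beta/\sigma)/\sigma$ is common to every covariate and cancels in the ratio, which is why a single coefficient vector fixes the relative effects in this model.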