|

Granular Computing

Granular computing is an emerging computing paradigm of Data processing, information processing that concerns the processing of complex information entities called "information granulation, granules", which arise in the process of data abstraction and knowledge extraction, derivation of knowledge from information or data. Generally speaking, information granules are collections of entities that usually originate at the numeric level and are arranged together due to their Similarity measure, similarity, functional or physical adjacency, indistinguishability, coherency, or the like. At present, granular computing is more a ''theoretical perspective'' than a coherent set of methods or principles. As a theoretical perspective, it encourages an approach to data that recognizes and exploits the knowledge present in data at various levels of resolution or scales. In this sense, it encompasses all methods which provide flexibility and adaptability in the resolution at which knowledge o ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

Computing

Computing is any goal-oriented activity requiring, benefiting from, or creating computer, computing machinery. It includes the study and experimentation of algorithmic processes, and the development of both computer hardware, hardware and software. Computing has scientific, engineering, mathematical, technological, and social aspects. Major computing disciplines include computer engineering, computer science, cybersecurity, data science, information systems, information technology, and software engineering. The term ''computing'' is also synonymous with counting and calculation, calculating. In earlier times, it was used in reference to the action performed by Mechanical computer, mechanical computing machines, and before that, to Computer (occupation), human computers. History The history of computing is longer than the history of computing hardware and includes the history of methods intended for pen and paper (or for chalk and slate) with or without the aid of tables. ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

Value Granulation

Value or values may refer to: Ethics and social sciences * Value (ethics), concept which may be construed as treating actions themselves as abstract objects, associating value to them ** Axiology, interdisciplinary study of values, including ethical values * Social imaginary, set of morals, institutions, laws, and symbols common to a particular social group * Religious values, beliefs and practices which a religious adherent partakes in Economics * Value (economics), a measure of the benefit that may be gained from goods or service ** Theory of value (economics), the study of the concept of economic value ** Value (marketing), the difference between a customer's evaluation of benefits and costs ** Value investing, an investment paradigm * Values (heritage), the measure by which the cultural significance of heritage items is assessed * Present value, value of an expected income stream as of the date of valuation * Present value of benefits, discounted sum of a stream of be ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Measure Of Similarity

In statistics and related fields, a similarity measure or similarity function or similarity metric is a real-valued function that quantifies the similarity between two objects. Although no single definition of a similarity exists, usually such measures are in some sense the inverse of distance metrics: they take on large values for similar objects and either zero or a negative value for very dissimilar objects. Though, in more broad terms, a similarity function may also satisfy metric axioms. Cosine similarity is a commonly used similarity measure for real-valued vectors, used in (among other fields) information retrieval to score the similarity of documents in the vector space model. In machine learning, common kernel functions such as the RBF kernel can be viewed as similarity functions. Use of different similarity measure formulas Different types of similarity measures exist for various types of objects, depending on the objects being compared. For each type of object there ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

Data Clustering

Cluster analysis or clustering is the data analyzing technique in which task of grouping a set of objects in such a way that objects in the same group (called a cluster) are more similar (in some specific sense defined by the analyst) to each other than to those in other groups (clusters). It is a main task of exploratory data analysis, and a common technique for statistical data analysis, used in many fields, including pattern recognition, image analysis, information retrieval, bioinformatics, data compression, computer graphics and machine learning. Cluster analysis refers to a family of algorithms and tasks rather than one specific algorithm. It can be achieved by various algorithms that differ significantly in their understanding of what constitutes a cluster and how to efficiently find them. Popular notions of clusters include groups with small distances between cluster members, dense areas of the data space, intervals or particular statistical distributions. Clustering ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Independent Component Analysis

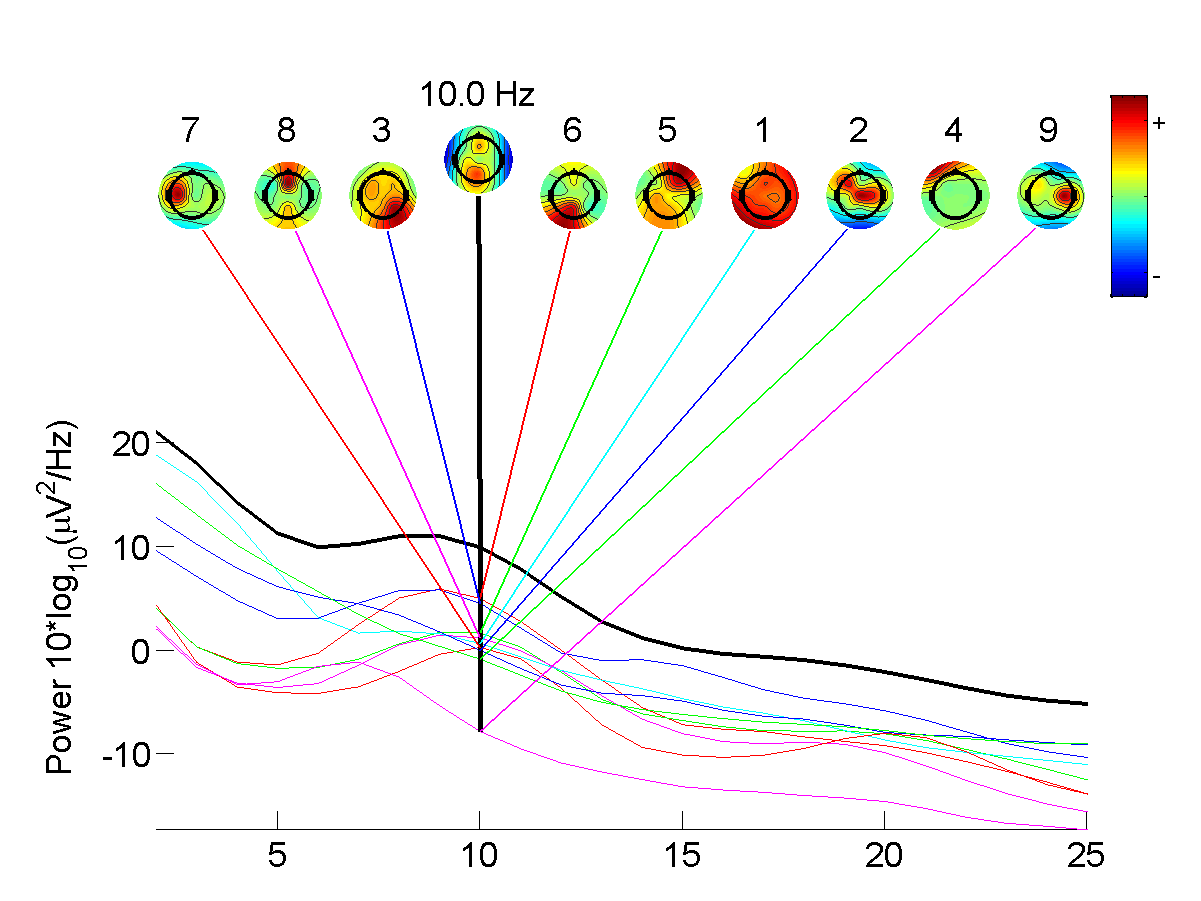

In signal processing, independent component analysis (ICA) is a computational method for separating a multivariate statistics, multivariate signal into additive subcomponents. This is done by assuming that at most one subcomponent is Gaussian and that the subcomponents are Statistical independence, statistically independent from each other. ICA was invented by Jeanny Hérault and Christian Jutten in 1985. ICA is a special case of blind source separation. A common example application of ICA is the "cocktail party problem" of listening in on one person's speech in a noisy room. Introduction Independent component analysis attempts to decompose a multivariate signal into independent non-Gaussian signals. As an example, sound is usually a signal that is composed of the numerical addition, at each time t, of signals from several sources. The question then is whether it is possible to separate these contributing sources from the observed total signal. When the statistical independence ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

Projection Pursuit

Projection pursuit (PP) is a type of statistical technique that involves finding the most "interesting" possible projections in multidimensional data. Often, projections that deviate more from a normal distribution are considered to be more interesting. As each projection is found, the data are reduced by removing the component along that projection, and the process is repeated to find new projections; this is the "pursuit" aspect that motivated the technique known as matching pursuit. The idea of projection pursuit is to locate the projection or projections from high-dimensional space to low-dimensional space that reveal the most details about the structure of the data set. Once an interesting set of projections has been found, existing structures (clusters, surfaces, etc.) can be extracted and analyzed separately. Projection pursuit has been widely used for blind source separation, so it is very important in independent component analysis. Projection pursuit seeks one projec ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Dimensionality Reduction

Dimensionality reduction, or dimension reduction, is the transformation of data from a high-dimensional space into a low-dimensional space so that the low-dimensional representation retains some meaningful properties of the original data, ideally close to its intrinsic dimension. Working in high-dimensional spaces can be undesirable for many reasons; raw data are often sparse as a consequence of the curse of dimensionality, and analyzing the data is usually computationally intractable. Dimensionality reduction is common in fields that deal with large numbers of observations and/or large numbers of variables, such as signal processing, speech recognition, neuroinformatics, and bioinformatics. Methods are commonly divided into linear and nonlinear approaches. Linear approaches can be further divided into feature selection and feature extraction. Dimensionality reduction can be used for noise reduction, data visualization, cluster analysis, or as an intermediate step to facilitat ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

Structural Equation Modeling

Structural equation modeling (SEM) is a diverse set of methods used by scientists for both observational and experimental research. SEM is used mostly in the social and behavioral science fields, but it is also used in epidemiology, business, and other fields. A common definition of SEM is, "...a class of methodologies that seeks to represent hypotheses about the means, variances, and covariances of observed data in terms of a smaller number of 'structural' parameters defined by a hypothesized underlying conceptual or theoretical model,". SEM involves a model representing how various aspects of some phenomenon are thought to causally connect to one another. Structural equation models often contain postulated causal connections among some latent variables (variables thought to exist but which can't be directly observed). Additional causal connections link those latent variables to observed variables whose values appear in a data set. The causal connections are represented using ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Factor Analysis

Factor analysis is a statistical method used to describe variability among observed, correlated variables in terms of a potentially lower number of unobserved variables called factors. For example, it is possible that variations in six observed variables mainly reflect the variations in two unobserved (underlying) variables. Factor analysis searches for such joint variations in response to unobserved latent variables. The observed variables are modelled as linear combinations of the potential factors plus "error" terms, hence factor analysis can be thought of as a special case of errors-in-variables models. Simply put, the factor loading of a variable quantifies the extent to which the variable is related to a given factor. A common rationale behind factor analytic methods is that the information gained about the interdependencies between observed variables can be used later to reduce the set of variables in a dataset. Factor analysis is commonly used in psychometrics, pers ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Multidimensional Scaling

Multidimensional scaling (MDS) is a means of visualizing the level of similarity of individual cases of a data set. MDS is used to translate distances between each pair of n objects in a set into a configuration of n points mapped into an abstract Cartesian space. More technically, MDS refers to a set of related ordination techniques used in information visualization, in particular to display the information contained in a distance matrix. It is a form of non-linear dimensionality reduction. Given a distance matrix with the distances between each pair of objects in a set, and a chosen number of dimensions, ''N'', an MDS algorithm places each object into ''N''-dimensional space (a lower-dimensional representation) such that the between-object distances are preserved as well as possible. For ''N'' = 1, 2, and 3, the resulting points can be visualized on a scatter plot. Core theoretical contributions to MDS were made by James O. Ramsay of McGill University, who is also ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Principal Component Analysis

Principal component analysis (PCA) is a linear dimensionality reduction technique with applications in exploratory data analysis, visualization and data preprocessing. The data is linearly transformed onto a new coordinate system such that the directions (principal components) capturing the largest variation in the data can be easily identified. The principal components of a collection of points in a real coordinate space are a sequence of p unit vectors, where the i-th vector is the direction of a line that best fits the data while being orthogonal to the first i-1 vectors. Here, a best-fitting line is defined as one that minimizes the average squared perpendicular distance from the points to the line. These directions (i.e., principal components) constitute an orthonormal basis in which different individual dimensions of the data are linearly uncorrelated. Many studies use the first two principal components in order to plot the data in two dimensions and to visually identi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |