
GLOH

GLOH (Gradient Location and Orientation Histogram) is a robust image descriptor that can be used in computer vision tasks. It is a SIFT-like descriptor that considers more spatial regions for the histograms. An intermediate vector is computed from 17 location bins and 16 orientation bins, for a total of 17 × 16 = 272 dimensions. Principal components analysis (PCA) is then used to reduce the vector size to 128, the same size as the SIFT descriptor vector.

See also
* Scale-invariant feature transform (SIFT)
* Speeded up robust features (SURF)
* Local energy-based shape histogram (LESH)
* Feature detection (computer vision)

References
* Krystian Mikolajczyk and Cordelia Schmid, "A performance evaluation of local descriptors", IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 27, no. 10, pp. 1615-1630, 2005.
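The 17 × 16 layout can be made concrete with a small sketch. It assumes the log-polar grid usually attributed to the descriptor: one undivided central bin plus two rings of 8 angular sectors each, with radii of 6, 11 and 15 pixels. The radii, the function names, and the hard (non-interpolated) bin assignment are illustrative assumptions, not details given in the text above, and the PCA step is left out.

```python
import math

N_ORIENTATION_BINS = 16
N_ANGULAR_SECTORS = 8
RADII = (6.0, 11.0, 15.0)  # assumed ring boundaries in pixels
N_LOCATION_BINS = 1 + 2 * N_ANGULAR_SECTORS  # 17


def location_bin(dx, dy):
    """Map an offset from the keypoint to one of 17 log-polar location bins."""
    r = math.hypot(dx, dy)
    if r > RADII[2]:
        return None  # outside the descriptor support
    if r <= RADII[0]:
        return 0  # central bin is not split into sectors
    ring = 0 if r <= RADII[1] else 1
    theta = math.atan2(dy, dx) % (2 * math.pi)
    sector = min(int(theta / (2 * math.pi) * N_ANGULAR_SECTORS),
                 N_ANGULAR_SECTORS - 1)
    return 1 + ring * N_ANGULAR_SECTORS + sector


def orientation_bin(angle):
    """Quantize a gradient orientation (radians) into 16 bins."""
    a = angle % (2 * math.pi)
    return min(int(a / (2 * math.pi) * N_ORIENTATION_BINS),
               N_ORIENTATION_BINS - 1)


def gloh_histogram(samples):
    """Accumulate (dx, dy, magnitude, orientation) samples into 272 bins."""
    hist = [0.0] * (N_LOCATION_BINS * N_ORIENTATION_BINS)
    for dx, dy, magnitude, orientation in samples:
        loc = location_bin(dx, dy)
        if loc is None:
            continue
        hist[loc * N_ORIENTATION_BINS + orientation_bin(orientation)] += magnitude
    return hist
```

A real implementation would typically interpolate between neighbouring bins and normalize the vector before applying PCA; this sketch only shows where the 272 dimensions come from.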

Scale-invariant Feature Transform

The scale-invariant feature transform (SIFT) is a computer vision algorithm to detect, describe, and match local features in images, invented by David Lowe in 1999. Applications include object recognition, robotic mapping and navigation, image stitching, 3D modeling, gesture recognition, video tracking, individual identification of wildlife and match moving. SIFT keypoints of objects are first extracted from a set of reference images and stored in a database. An object is recognized in a new image by individually comparing each feature from the new image to this database and finding candidate matching features based on the Euclidean distance of their feature vectors. From the full set of matches, subsets of keypoints that agree on the object and its location, scale, and orientation in the new image are identified to filter out good matches. The determination of consistent clusters is performed rapidly by using an efficient hash table implementation of the generalised Hou ...
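The matching step described above, finding the closest database feature by Euclidean distance, can be sketched as a brute-force search. The function name and the toy two-dimensional vectors are hypothetical; real SIFT descriptors have 128 dimensions, and practical systems use approximate nearest-neighbour search rather than a linear scan.

```python
import math


def nearest_match(query, database):
    """Return (index, distance) of the database vector closest to `query`.

    Brute-force search by Euclidean distance; returns (-1, inf) if the
    database is empty.
    """
    best_index, best_distance = -1, math.inf
    for index, reference in enumerate(database):
        distance = math.dist(query, reference)
        if distance < best_distance:
            best_index, best_distance = index, distance
    return best_index, best_distance


# Toy example with 2-D "descriptors"
database = [[0.0, 0.0], [3.0, 4.0], [10.0, 10.0]]
index, distance = nearest_match([3.0, 5.0], database)
```

This only produces candidate matches; the clustering and verification stages mentioned above are what discard the false ones.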

Speeded Up Robust Features

In computer vision, speeded up robust features (SURF) is a patented local feature detector and descriptor. It can be used for tasks such as object recognition, image registration, classification, or 3D reconstruction. It is partly inspired by the scale-invariant feature transform (SIFT) descriptor. The standard version of SURF is several times faster than SIFT and is claimed by its authors to be more robust against different image transformations than SIFT. To detect interest points, SURF uses an integer approximation of the determinant of Hessian blob detector, which can be computed with 3 integer operations using a precomputed integral image. Its feature descriptor is based on the sum of the Haar wavelet response around the point of interest. These can also be computed with the aid of the integral image. SURF descriptors have been used to locate and recognize objects, people or faces, to reconstruct 3D scenes, to track objects and to extract points of interest. SURF wa ...
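The "3 integer operations" refer to the way an integral image (summed-area table) turns the sum over any axis-aligned rectangle into four lookups combined with three additions or subtractions. The following is a minimal sketch of that idea only, not of the SURF detector itself; the function names and the half-open rectangle convention are choices made for this example.

```python
def integral_image(image):
    """Build a summed-area table with one extra row and column of zeros.

    table[y][x] holds the sum of image[0:y][0:x].
    """
    height, width = len(image), len(image[0])
    table = [[0] * (width + 1) for _ in range(height + 1)]
    for y in range(height):
        row_sum = 0
        for x in range(width):
            row_sum += image[y][x]
            table[y + 1][x + 1] = table[y][x + 1] + row_sum
    return table


def box_sum(table, top, left, bottom, right):
    """Sum of image[top:bottom][left:right] using 4 lookups and 3 operations."""
    return (table[bottom][right] - table[top][right]
            - table[bottom][left] + table[top][left])


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
table = integral_image(image)
lower_right = box_sum(table, 1, 1, 3, 3)  # 5 + 6 + 8 + 9
```

The cost of `box_sum` does not depend on the rectangle size, which is why box filters of any scale can be evaluated in constant time.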

Local Energy-based Shape Histogram

Local energy-based shape histogram (LESH) is a proposed image descriptor in computer vision. It can be used to get a description of the underlying shape. The LESH feature descriptor is built on the local energy model of feature perception; see e.g. phase congruency for more details. It encodes the underlying shape by accumulating the local energy of the underlying signal along several filter orientations. Several local histograms from different parts of the image or patch are generated and concatenated into a 128-dimensional compact spatial histogram. It is designed to be scale invariant. The LESH features can be used in applications such as shape-based image retrieval, medical image processing, object detection, and pose estimation.

See also
* Feature detection (computer vision)
* Scale-invariant feature transform
* Speeded up robust features
* GLOH (Gradient Location and Orientation Histogram)

References
* Code: Sarfraz, S., Hellwich, O.: "Head Pose Estimation in Fa ...

Image Descriptor

In computer vision, visual descriptors or image descriptors are descriptions of the visual features of the contents of images and videos, or the algorithms or applications that produce such descriptions. They describe elementary characteristics such as shape, color, texture or motion, among others.

Introduction

As a result of new communication technologies and the widespread use of the Internet, the amount of audio-visual information available in digital format is increasing considerably. It has therefore been necessary to design systems that describe the content of several types of multimedia information so that it can be searched and classified. Audio-visual descriptors are responsible for describing this content. They capture the objects and events found in a video, image or audio recording and allow quick and efficient searches of the audio-visual content. This system can be compared to the search engines for t ...

Computer Vision

Computer vision is an interdisciplinary scientific field that deals with how computers can gain high-level understanding from digital images or videos. From the perspective of engineering, it seeks to understand and automate tasks that the human visual system can do. Computer vision tasks include methods for acquiring, processing, analyzing and understanding digital images, and extraction of high-dimensional data from the real world in order to produce numerical or symbolic information, e.g. in the form of decisions. Understanding in this context means the transformation of visual images (the input of the retina) into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory. The scien ...

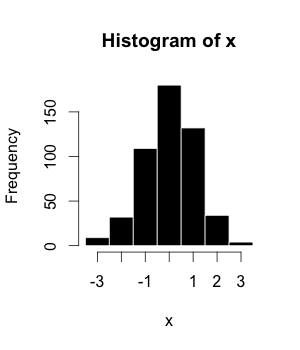

Histograms

A histogram is an approximate representation of the distribution of numerical data. The term was first introduced by Karl Pearson. To construct a histogram, the first step is to "bin" (or "bucket") the range of values, that is, divide the entire range of values into a series of intervals, and then count how many values fall into each interval. The bins are usually specified as consecutive, non-overlapping intervals of a variable. The bins (intervals) must be adjacent and are often (but not required to be) of equal size. If the bins are of equal size, a bar is drawn over the bin with height proportional to the frequency, the number of cases in each bin. A histogram may also be normalized to display "relative" frequencies showing the proportion of cases that fall into each of several categories, with the sum of the heights equaling 1. However, bins need not be of equal width; in that case, the erected rectangle is defined to have its area proportional to the frequenc ...
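The binning and normalization steps can be sketched for the equal-width case as follows. The function names are illustrative, and the convention of placing a value equal to the upper bound in the last bin is an assumption made for this example.

```python
def histogram(values, n_bins, lower, upper):
    """Count values falling into `n_bins` equal-width bins over [lower, upper].

    Values outside the range are ignored; a value equal to `upper` is
    placed in the last bin.
    """
    width = (upper - lower) / n_bins
    counts = [0] * n_bins
    for value in values:
        if lower <= value <= upper:
            index = min(int((value - lower) / width), n_bins - 1)
            counts[index] += 1
    return counts


def normalize(counts):
    """Convert counts to relative frequencies that sum to 1."""
    total = sum(counts)
    if total == 0:
        return [0.0] * len(counts)
    return [count / total for count in counts]


counts = histogram([1, 2, 2, 3, 9, 10], n_bins=5, lower=0, upper=10)
relative = normalize(counts)
```

With unequal bin widths, the bar height would instead be the count divided by the bin width, so that the area rather than the height reflects the frequency.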

Principal Components Analysis

Principal component analysis (PCA) is a popular technique for analyzing large datasets containing a high number of dimensions or features per observation, increasing the interpretability of data while preserving the maximum amount of information, and enabling the visualization of multidimensional data. Formally, PCA is a statistical technique for reducing the dimensionality of a dataset. This is accomplished by linearly transforming the data into a new coordinate system where (most of) the variation in the data can be described with fewer dimensions than the initial data. Many studies use the first two principal components in order to plot the data in two dimensions and to visually identify clusters of closely related data points. Principal component analysis has applications in many fields such as population genetics, microbiome studies, and atmospheric science. The principal components of a collection of points in a real coordinate space are a sequence of p unit vectors, where t ...
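As a rough illustration of the linear transformation described above, the sketch below estimates only the first principal component by power iteration on the sample covariance matrix and then projects a point onto it. Power iteration is swapped in here for brevity; library implementations usually rely on an eigendecomposition or SVD. The function names and the all-ones starting vector are assumptions of this example, and the starting vector would fail if it happened to be orthogonal to the dominant direction.

```python
import math


def first_principal_component(points, iterations=200):
    """Return (mean, unit direction) of the first principal component."""
    n, d = len(points), len(points[0])
    mean = [sum(p[j] for p in points) / n for j in range(d)]
    centered = [[p[j] - mean[j] for j in range(d)] for p in points]
    # Sample covariance matrix (d x d)
    cov = [[sum(row[i] * row[j] for row in centered) / (n - 1)
            for j in range(d)] for i in range(d)]
    direction = [1.0] * d  # assumed starting vector
    for _ in range(iterations):
        product = [sum(cov[i][j] * direction[j] for j in range(d))
                   for i in range(d)]
        norm = math.sqrt(sum(x * x for x in product))
        if norm == 0:
            break  # degenerate data with no variance along this vector
        direction = [x / norm for x in product]
    return mean, direction


def project(point, mean, direction):
    """Coordinate of `point` along the principal direction."""
    return sum((point[j] - mean[j]) * direction[j] for j in range(len(point)))


# Points lying exactly on the line y = 2x
points = [(0.0, 0.0), (1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
mean, direction = first_principal_component(points)
coordinate = project((3.0, 6.0), mean, direction)
```

For these collinear points a single coordinate along the recovered direction describes the data completely, which is the sense in which PCA reduces dimensionality.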

Feature Detection (Computer Vision)

In computer vision and image processing, a feature is a piece of information about the content of an image, typically about whether a certain region of the image has certain properties. Features may be specific structures in the image such as points, edges or objects. Features may also be the result of a general neighborhood operation or feature detection applied to the image. Other examples of features are related to motion in image sequences, or to shapes defined in terms of curves or boundaries between different image regions. More broadly, a feature is any piece of information that is relevant for solving the computational task related to a certain application. This is the same sense as feature in machine learning and pattern recognition generally, though image processing has a very sophisticated collection of features. The feature concept is very general and the choice of features in a particular computer vision system may be highly dependent on the specific problem at ...
