
Fluid Queue

In queueing theory, a discipline within the mathematical theory of probability, a fluid queue (fluid model, fluid flow model or stochastic fluid model) is a mathematical model used to describe the fluid level in a reservoir subject to randomly determined periods of filling and emptying. The term dam theory was used in earlier literature for these models. The model has been used to approximate discrete models, to model the spread of wildfires, in ruin theory, and to model high-speed data networks. The model applies the leaky bucket algorithm to a stochastic source. The model was first introduced by Pat Moran in 1954, where a discrete-time model was considered. Fluid queues allow arrivals to be continuous rather than discrete, as they are in models like the M/M/1 and M/G/1 queues. Fluid queues have been used to model the performance of a network switch, a router, the IEEE 802.11 protocol, Asynchronous Transfer Mode (the intended technology for B-ISDN), peer-to-peer file sharing, optical burst ...
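The filling and emptying behaviour described above can be illustrated with a minimal simulation sketch. It assumes a two-state (off/on) source that switches at exponential rates, with the level changing linearly in each state and never dropping below zero. The function name `simulate_fluid_queue`, the switching rates, and the net fill rates are all hypothetical values chosen for illustration; they do not come from the article.

```python
import random


def simulate_fluid_queue(switch_rates, net_rates, horizon, seed=0):
    """Simulate the level of a two-state fluid queue up to time `horizon`.

    switch_rates[s]: rate at which the source leaves state s (assumed exponential).
    net_rates[s]:    net rate of change of the level while in state s
                     (negative = emptying, positive = filling).
    Returns a list of (time, level, state) tuples recorded at each switch.
    """
    rng = random.Random(seed)
    t, level, state = 0.0, 0.0, 0
    path = [(t, level, state)]
    while t < horizon:
        # The sojourn in the current state ends at `end` (capped at the horizon).
        end = min(t + rng.expovariate(switch_rates[state]), horizon)
        # The level moves linearly and is held at zero once the reservoir is empty.
        level = max(0.0, level + net_rates[state] * (end - t))
        t = end
        if t < horizon:
            state = 1 - state  # toggle between off (0) and on (1)
        path.append((t, level, state))
    return path


# Hypothetical parameters: state 0 drains at rate 1, state 1 fills at net rate 3.
path = simulate_fluid_queue(switch_rates=(1.0, 2.0), net_rates=(-1.0, 3.0), horizon=50.0)
print(path[-1])
```

The sketch records the level only at switching instants; between them the level is a straight line (or flat at zero), so the recorded points are enough to reconstruct the whole path.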

Queueing Theory

Queueing theory is the mathematical study of waiting lines, or queues. A queueing model is constructed so that queue lengths and waiting time can be predicted. Queueing theory is generally considered a branch of operations research because the results are often used when making business decisions about the resources needed to provide a service. Queueing theory has its origins in research by Agner Krarup Erlang, who created models to describe the system of incoming calls at the Copenhagen Telephone Exchange Company. These ideas were seminal to the field of teletraffic engineering and have since seen applications in telecommunications, traffic engineering, computing, project management, and particularly industrial engineering, where they are applied in the design of factories, shops, offices, and hospitals. Spelling The spelling "queueing" over "queuing" is typically encountered in the academic research field. In fact, one of the flagship journals of the field is '' Queue ...

Peer-to-peer File Sharing

Peer-to-peer file sharing is the distribution and sharing of digital media using peer-to-peer (P2P) networking technology. P2P file sharing allows users to access media files such as books, music, movies, and games using a P2P software program that searches for other connected computers on a P2P network to locate the desired content. The nodes (peers) of such networks are end-user computers and distribution servers (not required). In its early days, file sharing was done predominantly by client-server transfers from web pages, FTP and IRC before Napster popularised a Windows application that allowed users to both upload and download with a freemium-style service. Record companies and artists called for its shutdown and FBI raids followed. Napster had been incredibly popular at its peak, spawning a grass-roots movement that followed from the mixtape scene of the 1980s, and it left a significant gap in music availability for its followers. After much discussion on forums and in chat rooms ...

Quadratically Convergent

In mathematical analysis, particularly numerical analysis, the rate of convergence and order of convergence of a sequence that converges to a limit are any of several characterizations of how quickly that sequence approaches its limit. These are broadly divided into rates and orders of convergence that describe how quickly a sequence further approaches its limit once it is already close to it, called asymptotic rates and orders of convergence, and those that describe how quickly sequences approach their limits from starting points that are not necessarily close to their limits, called non-asymptotic rates and orders of convergence. Asymptotic behavior is particularly useful for deciding when to stop a sequence of numerical computations, for instance once a target precision has been reached with an iterative root-finding algorithm, but pre-asymptotic behavior is often crucial for determining whether to begin a sequence of computations at all, since it may be impossible or imprac ...

Decreasing Failure Rate

In mathematics, a monotonic function (or monotone function) is a function between ordered sets that preserves or reverses the given order. This concept first arose in calculus, and was later generalized to the more abstract setting of order theory. In calculus and analysis In calculus, a function f defined on a subset of the real numbers with real values is called ''monotonic'' if it is either entirely non-decreasing, or entirely non-increasing. That is, as per Fig. 1, a function that increases monotonically does not exclusively have to increase, it simply must not decrease. A function is termed ''monotonically increasing'' (also ''increasing'' or ''non-decreasing'') if for all x and y such that x ≤ y one has f(x) ≤ f(y), so f preserves the order (see Figure 1). Likewise, a function is called ''monotonically decreasing'' (also ''decreasing'' or ''non-increasing'') if, whenever x ≤ y, then f(x) ≥ f(y), so it ...

Expected Value

In probability theory, the expected value (also called expectation, expectancy, expectation operator, mathematical expectation, mean, expectation value, or first moment) is a generalization of the weighted average. Informally, the expected value is the arithmetic mean of the possible values a random variable can take, weighted by the probability of those outcomes. Since it is obtained through arithmetic, the expected value sometimes may not even be included in the sample data set; it is not the value you would expect to get in reality. The expected value of a random variable with a finite number of outcomes is a weighted average of all possible outcomes. In the case of a continuum of possible outcomes, the expectation is defined by integration. In the axiomatic foundation for probability provided by measure theory, the expectation is given by Lebesgue integration. The expected value of a random variable X is often denoted by E(X), E[X], or EX, with a ...
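For the finite case, the weighted average described above can be written out directly. The following is a minimal sketch; the function name `expected_value` and the fair-die example are illustrative assumptions, not taken from the article. Exact fractions are used so the result is not affected by floating-point rounding.

```python
from fractions import Fraction


def expected_value(outcomes):
    """Return the weighted average of (value, probability) pairs.

    `outcomes` describes a random variable with finitely many outcomes.
    """
    if sum(p for _, p in outcomes) != 1:
        raise ValueError("probabilities must sum to 1")
    return sum(value * p for value, p in outcomes)


# A fair six-sided die: each face has probability 1/6.
die = [(face, Fraction(1, 6)) for face in range(1, 7)]
print(expected_value(die))  # 7/2
```

The result, 7/2, is not a face of the die, which illustrates the remark that the expected value need not be a value the random variable can actually take.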

Laplace–Stieltjes Transform

The Laplace–Stieltjes transform, named for Pierre-Simon Laplace and Thomas Joannes Stieltjes, is an integral transform similar to the Laplace transform. For real-valued functions, it is the Laplace transform of a Stieltjes measure; however, it is often defined for functions with values in a Banach space. It is useful in a number of areas of mathematics, including functional analysis, and certain areas of theoretical and applied probability. Real-valued functions The Laplace–Stieltjes transform of a real-valued function ''g'' is given by a Lebesgue–Stieltjes integral of the form :\int e^{-sx}\,dg(x) for ''s'' a complex number. As with the usual Laplace transform, one gets a slightly different transform depending on the domain of integration, and for the integral to be defined, one also needs to require that ''g'' be of bounded variation on the region of integration. The most common are: * The bilateral (or two-sided) Laplace–Stieltjes transform is given by \{\mathcal{L}^*g\}(s) = \int_{-\infty}^{\infty} e^{-sx}\,dg( ...
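As a worked illustration of the integral above, consider the distribution function of an exponential random variable. The choice of this example, the symbol \lambda for its rate, and the restriction of the integral to [0, \infty) are assumptions made here for illustration.

```latex
% Assumed example: g(x) = 1 - e^{-\lambda x} for x >= 0, with rate \lambda > 0,
% so that dg(x) = \lambda e^{-\lambda x}\,dx on [0, \infty).
\int_0^{\infty} e^{-sx}\,dg(x)
  = \int_0^{\infty} e^{-sx}\,\lambda e^{-\lambda x}\,dx
  = \lambda \int_0^{\infty} e^{-(s+\lambda)x}\,dx
  = \frac{\lambda}{\lambda + s},
  \qquad \operatorname{Re}(s) > -\lambda .
```

Setting s = 0 gives 1, the total mass of the distribution, which is a quick sanity check on the result.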

Schur Decomposition

In the mathematical discipline of linear algebra, the Schur decomposition or Schur triangulation, named after Issai Schur, is a matrix decomposition. It allows one to write an arbitrary complex square matrix as unitarily similar to an upper triangular matrix whose diagonal elements are the eigenvalues of the original matrix. Statement The complex Schur decomposition reads as follows: if ''A'' is an ''n'' × ''n'' square matrix with complex entries, then ''A'' can be expressed as A = Q U Q^{-1} for some unitary matrix ''Q'' (so that the inverse ''Q''<sup>−1</sup> is also the conjugate transpose ''Q''* of ''Q''), and some upper triangular matrix ''U''. This is called a Schur form of ''A''. Since ''U'' is similar to ''A'', it has the same spectrum, and since it is triangular, its eigenvalues are the diagonal entries of ''U''. The Schur decomposition implies that there exists a nested sequence of ''A''-invariant subspaces, and that there exists an ...

Matrix-analytic Method

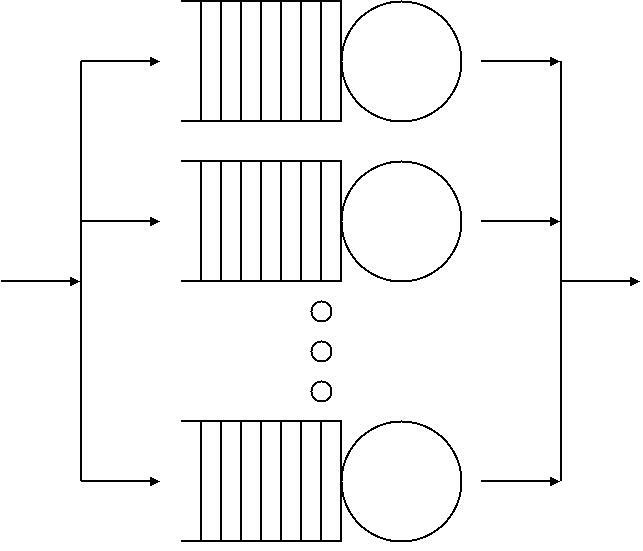

In probability theory, the matrix analytic method is a technique to compute the stationary probability distribution of a Markov chain which has a repeating structure (after some point) and a state space which grows unboundedly in no more than one dimension. Such models are often described as M/G/1 type Markov chains because they can describe transitions in an M/G/1 queue. The method is a more complicated version of the matrix geometric method and is the classical solution method for M/G/1 chains. Method description An M/G/1-type stochastic matrix is one of the form ::P = \begin{bmatrix} B_0 & B_1 & B_2 & B_3 & \cdots \\ A_0 & A_1 & A_2 & A_3 & \cdots \\ & A_0 & A_1 & A_2 & \cdots \\ & & A_0 & A_1 & \cdots \\ \vdots & \vdots & \vdots & \vdots & \ddots \end{bmatrix} where ''B''<sub>''i''</sub> and ''A''<sub>''i''</sub> are ''k'' × ''k'' matrices. (Note that unmarked matrix entries represent zeroes.) Such a matrix describes the embedded Markov chain in ...

Phase-type Distribution

A phase-type distribution is a probability distribution constructed by a convolution or mixture of exponential distributions. It results from a system of one or more inter-related Poisson processes occurring in sequence, or phases. The sequence in which each of the phases occurs may itself be a stochastic process. The distribution can be represented by a random variable describing the time until absorption of a Markov process with one absorbing state. Each of the states of the Markov process represents one of the phases. It has a discrete-time equivalent, the discrete phase-type distribution. The set of phase-type distributions is dense in the field of all positive-valued distributions, that is, it can be used to approximate any positive-valued distribution. Definition Consider a continuous-time Markov process with ''m'' + 1 states, where ''m'' ≥ 1, such that the states 1,...,''m'' are transient states and state 0 is an absorbi ...
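The "time until absorption" description above suggests a direct way to draw samples. The sketch below is one possible approach, not the article's method: it assumes the distribution is given by an initial probability vector `alpha` over the transient states and a sub-generator matrix `S` of transition rates among them, with absorption taking up whatever exit rate remains. The names `sample_phase_type`, `alpha`, and `S`, and the two-phase example with rate 3, are hypothetical.

```python
import random


def sample_phase_type(alpha, S, rng):
    """Draw one sample: the time until absorption of the Markov process.

    alpha: initial probabilities over the m transient states (assumed to sum to 1).
    S:     m x m sub-generator; S[i][j] (i != j) is the rate from i to j and
           -S[i][i] is the total rate of leaving state i.
    """
    m = len(alpha)
    state = rng.choices(range(m), weights=alpha)[0]
    elapsed = 0.0
    while True:
        exit_rate = -S[state][state]
        elapsed += rng.expovariate(exit_rate)  # exponential time spent in this phase
        to_transient = [S[state][j] if j != state else 0.0 for j in range(m)]
        to_absorbing = max(0.0, exit_rate - sum(to_transient))
        nxt = rng.choices(range(m + 1), weights=to_transient + [to_absorbing])[0]
        if nxt == m:  # index m stands for the absorbing state in this sketch
            return elapsed
        state = nxt


# Hypothetical example: two phases in sequence, each left at rate 3 (mean 2/3).
rng = random.Random(0)
alpha = [1.0, 0.0]
S = [[-3.0, 3.0], [0.0, -3.0]]
samples = [sample_phase_type(alpha, S, rng) for _ in range(20000)]
print(sum(samples) / len(samples))
```

With these example parameters the sample is a sum of two independent exponential phases, so the printed average should be close to 2/3.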

Markov Reward Model

In probability theory, a Markov reward model or Markov reward process is a stochastic process which extends either a Markov chain or continuous-time Markov chain by adding a reward rate to each state. An additional variable records the reward accumulated up to the current time. Features of interest in the model include expected reward at a given time and expected time to accumulate a given reward. The model appears in Ronald A. Howard's book. The models are often studied in the context of Markov decision processes where a decision strategy can impact the rewards received. The Markov Reward Model Checker tool can be used to numerically compute transient and stationary properties of Markov reward models. Continuous-time Markov chain The accumulated reward at a time ''t'' can be computed numerically over the time domain or by evaluating the linear hyperbolic system of equations which describe the accumulated reward using transform methods or finite difference methods. See also * Ma ...

Piecewise Deterministic Markov Process

In probability theory, a piecewise-deterministic Markov process (PDMP) is a process whose behaviour is governed by random jumps at points in time, but whose evolution is deterministically governed by an ordinary differential equation between those times. The class of models is "wide enough to include as special cases virtually all the non-diffusion models of applied probability." The process is defined by three quantities: the flow, the jump rate, and the transition measure. The model was first introduced in a paper by Mark H. A. Davis in 1984. Examples Piecewise linear models such as Markov chains, continuous-time Markov chains, the M/G/1 queue, the GI/G/1 queue and the fluid queue can be encapsulated as PDMPs with simple differential equations. Applications PDMPs have been shown useful in ruin theory, queueing theory, for modelling biochemical processes such as DNA replication in eukaryotes and subtilin production by the organism B. subtilis, and for modelling earthquak ...

Continuous Time Markov Chain

A continuous-time Markov chain (CTMC) is a continuous stochastic process in which, for each state, the process will change state according to an exponential random variable and then move to a different state as specified by the probabilities of a stochastic matrix. An equivalent formulation describes the process as changing state according to the least value of a set of exponential random variables, one for each possible state it can move to, with the parameters determined by the current state. An example of a CTMC with three states \{0,1,2\} is as follows: the process makes a transition after the amount of time specified by the holding time, an exponential random variable E_i, where ''i'' is its current state. Each random variable is independent and such that E_0 \sim \text{Exp}(6), E_1 \sim \text{Exp}(12) and E_2 \sim \text{Exp}(18). When a transition is to be made, the process moves according to the jump chain, a discrete-time Markov chain with stochastic matrix ...
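The holding-time and jump-chain description above can be sketched as a short simulation. The parameters 6, 12 and 18 come from the example and are assumed here to be rate parameters. The jump matrix `JUMP` is a hypothetical placeholder, since the example's matrix is not reproduced in this excerpt, and the function name `simulate_ctmc` is likewise an assumption.

```python
import random

# Holding-time parameters from the example, assumed to be rates of Exp(.).
RATES = [6.0, 12.0, 18.0]

# Hypothetical jump chain (NOT the matrix from the example): each row is a
# probability distribution over next states, with zero probability of staying put.
JUMP = [
    [0.0, 0.5, 0.5],
    [0.5, 0.0, 0.5],
    [0.5, 0.5, 0.0],
]


def simulate_ctmc(rates, jump, start, n_jumps, seed=0):
    """Return a list of (time, state) pairs for the first `n_jumps` transitions."""
    rng = random.Random(seed)
    t, state = 0.0, start
    path = [(t, state)]
    for _ in range(n_jumps):
        t += rng.expovariate(rates[state])  # holding time in the current state
        state = rng.choices(range(len(rates)), weights=jump[state])[0]  # jump chain step
        path.append((t, state))
    return path


for time, state in simulate_ctmc(RATES, JUMP, start=0, n_jumps=5):
    print(f"t={time:.4f} state={state}")
```

Separating the holding times from the jump chain mirrors the first formulation in the text; the equivalent "least of several exponentials" formulation would instead draw one exponential per possible next state and take the minimum.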