|

Deep Belief Networks

In machine learning, a deep belief network (DBN) is a generative graphical model, or alternatively a class of deep neural network, composed of multiple layers of latent variables ("hidden units"), with connections between the layers but not between units within each layer. When trained on a set of examples without supervision, a DBN can learn to probabilistically reconstruct its inputs. The layers then act as feature detectors. After this learning step, a DBN can be further trained with supervision to perform classification. DBNs can be viewed as a composition of simple, unsupervised networks such as restricted Boltzmann machines (RBMs) or autoencoders, where each sub-network's hidden layer serves as the visible layer for the next. An RBM is an undirected, generative energy-based model with a "visible" input layer and a hidden layer and connections between but not within layers. This composition leads to a fast, layer-by-layer unsupervised training procedure, where contrasti ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Greedy Algorithm

A greedy algorithm is any algorithm that follows the problem-solving heuristic of making the locally optimal choice at each stage. In many problems, a greedy strategy does not produce an optimal solution, but a greedy heuristic can yield locally optimal solutions that approximate a globally optimal solution in a reasonable amount of time. For example, a greedy strategy for the travelling salesman problem (which is of high computational complexity) is the following heuristic: "At each step of the journey, visit the nearest unvisited city." This heuristic does not intend to find the best solution, but it terminates in a reasonable number of steps; finding an optimal solution to such a complex problem typically requires unreasonably many steps. In mathematical optimization, greedy algorithms optimally solve combinatorial problems having the properties of matroids and give constant-factor approximations to optimization problems with the submodular structure. Specifics Greedy algori ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Deep Learning

Deep learning is a subset of machine learning that focuses on utilizing multilayered neural networks to perform tasks such as classification, regression, and representation learning. The field takes inspiration from biological neuroscience and is centered around stacking artificial neurons into layers and "training" them to process data. The adjective "deep" refers to the use of multiple layers (ranging from three to several hundred or thousands) in the network. Methods used can be either supervised, semi-supervised or unsupervised. Some common deep learning network architectures include fully connected networks, deep belief networks, recurrent neural networks, convolutional neural networks, generative adversarial networks, transformers, and neural radiance fields. These architectures have been applied to fields including computer vision, speech recognition, natural language processing, machine translation, bioinformatics, drug design, medical image analysis, c ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Convolutional Deep Belief Network

In computer science, a convolutional deep belief network (CDBN) is a type of deep artificial neural network composed of multiple layers of convolutional restricted Boltzmann machines stacked together. Alternatively, it is a hierarchical generative model for deep learning, which is highly effective in image processing and object recognition, though it has been used in other domains too. The salient features of the model include the fact that it scales well to high-dimensional images and is translation-invariant. CDBNs use the technique of probabilistic max-pooling to reduce the dimensions in higher layers in the network. Training of the network involves a pre-training stage accomplished in a greedy layer-wise manner, similar to other deep belief networks. Depending on whether the network is to be used for discrimination or generative tasks, it is then "fine tuned" or trained with either back-propagation In machine learning, backpropagation is a gradient computation method com ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Bayesian Network

A Bayesian network (also known as a Bayes network, Bayes net, belief network, or decision network) is a probabilistic graphical model that represents a set of variables and their conditional dependencies via a directed acyclic graph (DAG). While it is one of several forms of causal notation, causal networks are special cases of Bayesian networks. Bayesian networks are ideal for taking an event that occurred and predicting the likelihood that any one of several possible known causes was the contributing factor. For example, a Bayesian network could represent the probabilistic relationships between diseases and symptoms. Given symptoms, the network can be used to compute the probabilities of the presence of various diseases. Efficient algorithms can perform inference and learning in Bayesian networks. Bayesian networks that model sequences of variables (''e.g.'' speech signals or protein sequences) are called dynamic Bayesian networks. Generalizations of Bayesian networks tha ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Restricted Boltzmann Machine

A restricted Boltzmann machine (RBM) (also called a restricted Sherrington–Kirkpatrick model with external field or restricted stochastic Ising–Lenz–Little model) is a generative stochastic artificial neural network that can learn a probability distribution over its set of inputs. RBMs were initially proposed under the name Harmonium by Paul Smolensky in 1986, and rose to prominence after Geoffrey Hinton and collaborators used fast learning algorithms for them in the mid-2000s. RBMs have found applications in dimensionality reduction, classification, collaborative filtering, feature learning, topic modelling,Ruslan Salakhutdinov and Geoffrey Hinton (2010)Replicated softmax: an undirected topic model. '' Neural Information Processing Systems'' 23. immunology, and even manybody quantum mechanics. They can be trained in either supervised or unsupervised ways, depending on the task. As their name implies, RBMs are a variant of Boltzmann machines, with the restrictio ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Sigmoid Function

A sigmoid function is any mathematical function whose graph of a function, graph has a characteristic S-shaped or sigmoid curve. A common example of a sigmoid function is the logistic function, which is defined by the formula :\sigma(x) = \frac = \frac = 1 - \sigma(-x). Other sigmoid functions are given in the #Examples, Examples section. In some fields, most notably in the context of artificial neural networks, the term "sigmoid function" is used as a synonym for "logistic function". Special cases of the sigmoid function include the Gompertz curve (used in modeling systems that saturate at large values of ''x'') and the ogee curve (used in the spillway of some dams). Sigmoid functions have domain of all real numbers, with return (response) value commonly monotonically increasing but could be decreasing. Sigmoid functions most often show a return value (''y'' axis) in the range 0 to 1. Another commonly used range is from −1 to 1. A wide variety of sigmoid functions ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Gibbs Sampling

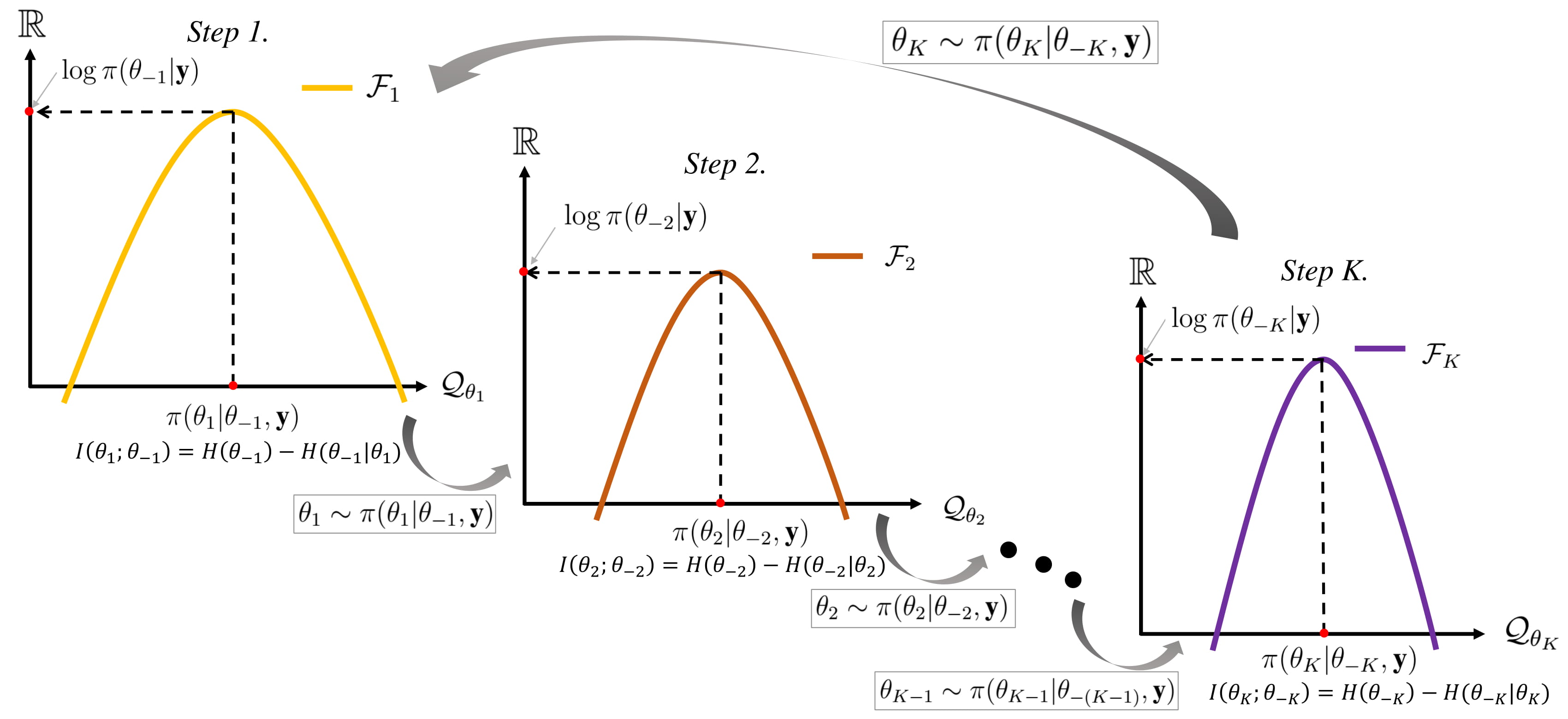

In statistics, Gibbs sampling or a Gibbs sampler is a Markov chain Monte Carlo (MCMC) algorithm for sampling from a specified multivariate distribution, multivariate probability distribution when direct sampling from the joint distribution is difficult, but sampling from the Conditional probability distribution, conditional distribution is more practical. This sequence can be used to approximate the joint distribution (e.g., to generate a histogram of the distribution); to approximate the marginal distribution of one of the variables, or some subset of the variables (for example, the unknown parameters or latent variables); or to compute an integral (such as the expected value of one of the variables). Typically, some of the variables correspond to observations whose values are known, and hence do not need to be sampled. Gibbs sampling is commonly used as a means of statistical inference, especially Bayesian inference. It is a randomized algorithm (i.e. an algorithm that makes ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Partition Function (mathematics)

The partition function or configuration integral, as used in probability theory, information theory and dynamical systems, is a generalization of the definition of a partition function in statistical mechanics. It is a special case of a normalizing constant in probability theory, for the Boltzmann distribution. The partition function occurs in many problems of probability theory because, in situations where there is a natural symmetry, its associated probability measure, the Gibbs measure, has the Markov property. This means that the partition function occurs not only in physical systems with translation symmetry, but also in such varied settings as neural networks (the Hopfield network), and applications such as genomics, corpus linguistics and artificial intelligence, which employ Markov networks, and Markov logic networks. The Gibbs measure is also the unique measure that has the property of maximizing the entropy for a fixed expectation value of the energy; this under ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Gradient Descent

Gradient descent is a method for unconstrained mathematical optimization. It is a first-order iterative algorithm for minimizing a differentiable multivariate function. The idea is to take repeated steps in the opposite direction of the gradient (or approximate gradient) of the function at the current point, because this is the direction of steepest descent. Conversely, stepping in the direction of the gradient will lead to a trajectory that maximizes that function; the procedure is then known as ''gradient ascent''. It is particularly useful in machine learning for minimizing the cost or loss function. Gradient descent should not be confused with local search algorithms, although both are iterative methods for optimization. Gradient descent is generally attributed to Augustin-Louis Cauchy, who first suggested it in 1847. Jacques Hadamard independently proposed a similar method in 1907. Its convergence properties for non-linear optimization problems were first studied by Has ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Maximum Likelihood

In statistics, maximum likelihood estimation (MLE) is a method of estimating the parameters of an assumed probability distribution, given some observed data. This is achieved by maximizing a likelihood function so that, under the assumed statistical model, the observed data is most probable. The point in the parameter space that maximizes the likelihood function is called the maximum likelihood estimate. The logic of maximum likelihood is both intuitive and flexible, and as such the method has become a dominant means of statistical inference. If the likelihood function is differentiable, the derivative test for finding maxima can be applied. In some cases, the first-order conditions of the likelihood function can be solved analytically; for instance, the ordinary least squares estimator for a linear regression model maximizes the likelihood when the random errors are assumed to have normal distributions with the same variance. From the perspective of Bayesian inference, ML ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Product Of Experts

Product of experts (PoE) is a machine learning technique. It models a probability distribution by combining the output from several simpler distributions. It was proposed by Geoffrey Hinton in 1999, along with an algorithm for training the parameters of such a system. The core idea is to combine several probability distributions ("experts") by multiplying their density functions—making the PoE classification similar to an "and" operation. This allows each expert to make decisions on the basis of a few dimensions without having to cover the full dimensionality of a problem: P(y, \)=\frac\prod_^M f_j(y, \) where f_j are unnormalized expert densities and Z=\int\mboxy \prod_^M f_j(y, \) is a normalization constant (see partition function (statistical mechanics)). This is related to (but quite different from) a mixture model, where several probability distributions p_j(y, \) are combined via an "or" operation, which is a weighted sum of their density functions: P(y, \)=\sum_^M \a ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |