
Autoregressive Moving-average Model

In the statistical analysis of time series, autoregressive–moving-average (ARMA) models are a way to describe a (weakly) stationary stochastic process using autoregression (AR) and a moving average (MA), each with a polynomial. They are a tool for understanding a series and predicting future values. AR involves regressing the variable on its own lagged (i.e., past) values. MA involves modeling the error as a linear combination of error terms occurring contemporaneously and at various times in the past. The model is usually denoted ARMA(p, q), where p is the order of AR and q is the order of MA. The general ARMA model was described in the 1951 thesis of Peter Whittle, "Hypothesis testing in time series analysis", and it was popularized in the 1970 book by George E. P. Box and Gwilym Jenkins. ARMA models can be estimated by using the ...
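As a rough illustration of the AR and MA parts described above, the following sketch simulates an ARMA(p, q) series. It assumes the common form X_t = phi_1 X_{t-1} + ... + phi_p X_{t-p} + e_t + theta_1 e_{t-1} + ... + theta_q e_{t-q} with Gaussian errors. The function name `simulate_arma`, its parameters, and the zero start-up values are illustrative choices, not taken from the text.

```python
import random

def simulate_arma(phi, theta, n, sigma=1.0, seed=0):
    """Simulate n values of an ARMA(p, q) process (hypothetical helper).

    phi   -- AR coefficients [phi_1, ..., phi_p]
    theta -- MA coefficients [theta_1, ..., theta_q]
    Values before the start of the series are treated as zero.
    """
    rng = random.Random(seed)
    p, q = len(phi), len(theta)
    x, e = [], []
    for t in range(n):
        eps = rng.gauss(0.0, sigma)  # current error term
        # AR part: regression on the series' own past values
        ar = sum(phi[i] * x[t - 1 - i] for i in range(p) if t - 1 - i >= 0)
        # MA part: linear combination of past error terms
        ma = sum(theta[j] * e[t - 1 - j] for j in range(q) if t - 1 - j >= 0)
        x.append(ar + eps + ma)
        e.append(eps)
    return x

series = simulate_arma(phi=[0.5], theta=[0.3], n=200)
```

Choosing |phi_1| < 1 in the ARMA(1, 1) case keeps the simulated process stationary, which matches the stationarity assumption in the description.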

Statistics

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments. When census data (comprising every member of the target population) cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample ...

Augmented Dickey–Fuller Test

In statistics, an augmented Dickey–Fuller test (ADF) tests the null hypothesis that a unit root is present in a time series sample. The alternative hypothesis depends on which version of the test is used, but is usually stationarity or trend-stationarity. It is an augmented version of the Dickey–Fuller test for a larger and more complicated set of time series models. The augmented Dickey–Fuller (ADF) statistic, used in the test, is a negative number. The more negative it is, the stronger the rejection of the hypothesis that there is a unit root at some level of confidence.

Testing procedure: The procedure for the ADF test is the same as for the Dickey–Fuller test but it is applied to the model

\Delta y_t = \alpha + \beta t + \gamma y_{t-1} + \delta_1 \Delta y_{t-1} + \cdots + \delta_{p-1} \Delta y_{t-p+1} + \varepsilon_t,

where \alpha is a constant, \beta the coefficient on a time trend and p the lag order of the autoregressive process. Imposing the constraints \alpha = 0 and \beta = 0 ...
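To make the test statistic concrete, here is a minimal sketch of the simplest special case only: the plain Dickey–Fuller regression \Delta y_t = \gamma y_{t-1} + \varepsilon_t, with no constant, no trend, and no lagged differences. It is not the full augmented test. The function name `dickey_fuller_stat` is hypothetical, and the resulting statistic must be compared against Dickey–Fuller critical values rather than the usual t-table.

```python
import math

def dickey_fuller_stat(y):
    """t-statistic of gamma in dy_t = gamma * y_{t-1} + e_t (simplified case)."""
    lagged = y[:-1]                                   # y_{t-1}
    dy = [b - a for a, b in zip(y[:-1], y[1:])]       # first differences
    sxx = sum(v * v for v in lagged)
    # OLS estimate of gamma for a regression without intercept
    gamma = sum(v * d for v, d in zip(lagged, dy)) / sxx
    resid = [d - gamma * v for v, d in zip(lagged, dy)]
    dof = len(dy) - 1                                 # one estimated parameter
    s2 = sum(r * r for r in resid) / dof              # residual variance
    return gamma / math.sqrt(s2 / sxx)                # gamma / standard error

stat = dickey_fuller_stat([1, 0, -1, 0, 1, 0, -1, 0])
```

A strongly negative value points away from a unit root, in line with the description of the statistic above.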

R (programming Language)

R is a programming language for statistical computing and data visualization. It has been widely adopted in the fields of data mining, bioinformatics, data analysis, and data science. The core R language is extended by a large number of software packages, which contain reusable code, documentation, and sample data. Some of the most popular R packages are in the tidyverse collection, which enhances functionality for visualizing, transforming, and modelling data, and improves the ease of programming (according to the authors and users). R is free and open-source software distributed under the GNU General Public License. The language is implemented primarily in C, Fortran, and R itself. Precompiled executables are available for the major operating systems (including Linux, MacOS, and Microsoft Windows). Its core is an interpreted language with a na ...

Least Squares

The method of least squares is a mathematical optimization technique that aims to determine the best fit function by minimizing the sum of the squares of the differences between the observed values and the predicted values of the model. The method is widely used in areas such as regression analysis, curve fitting and data modeling. The least squares method can be categorized into linear and nonlinear forms, depending on the relationship between the model parameters and the observed data. The method was first proposed by Adrien-Marie Legendre in 1805 and further developed by Carl Friedrich Gauss.

History (Founding): The method of least squares grew out of the fields of astronomy and geodesy, as scientists and mathematicians sought to provide solutions to the challenges of navigating the Earth's oceans during the Age of Discovery. The accurate description of the behavior of celestial bodies was the key to enabling ships to sail in open seas, where sailors could no longer rely on la ...
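For the linear case mentioned above, the minimizer of the sum of squared differences has a closed form. The sketch below fits a straight line y = slope * x + intercept. The function name `fit_line` and the sample data are made up for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sums of squares and cross-products around the means
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

slope, intercept = fit_line([0, 1, 2, 3], [1, 3, 5, 7])
```

The sample points lie exactly on y = 2x + 1, so the fitted line reproduces them with zero residual.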

Bayesian Information Criterion

In statistics, the Bayesian information criterion (BIC) or Schwarz information criterion (also SIC, SBC, SBIC) is a criterion for model selection among a finite set of models; models with lower BIC are generally preferred. It is based, in part, on the likelihood function and it is closely related to the Akaike information criterion (AIC). When fitting models, it is possible to increase the maximum likelihood by adding parameters, but doing so may result in overfitting. Both BIC and AIC attempt to resolve this problem by introducing a penalty term for the number of parameters in the model; the penalty term is larger in BIC than in AIC for sample sizes greater than 7. The BIC was developed by Gideon E. Schwarz and published in a 1978 paper, as a large-sample approximation to the Bayes factor.

Definition: The BIC is formally defined as

\mathrm{BIC} = k\ln(n) - 2\ln(\widehat L),

where
* \hat L = the maximized value of the likelihood function of the model M, i.e. \hat L=p(x\mid\wid ...
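The definition above translates directly into code. In this sketch, `bic` is a hypothetical helper that takes the maximized log-likelihood \ln(\widehat L), the number of parameters k, and the sample size n. The last lines check the remark about sample sizes greater than 7, using the fact that AIC charges a penalty of 2 per parameter while BIC charges \ln(n).

```python
import math

def bic(log_likelihood, k, n):
    """BIC = k * ln(n) - 2 * ln(L_hat), with log_likelihood = ln(L_hat)."""
    return k * math.log(n) - 2.0 * log_likelihood

# Per-parameter penalty: ln(n) for BIC versus 2 for AIC.
bic_penalty_n7 = math.log(7)   # below 2, so BIC penalizes less at n = 7
bic_penalty_n8 = math.log(8)   # above 2, so BIC penalizes more at n = 8
```

The crossover sits at n = e^2, roughly 7.39, which is why the BIC penalty exceeds the AIC penalty from n = 8 onward.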

Akaike Information Criterion

The Akaike information criterion (AIC) is an estimator of prediction error and thereby relative quality of statistical models for a given set of data. Given a collection of models for the data, AIC estimates the quality of each model, relative to each of the other models. Thus, AIC provides a means for model selection. AIC is founded on information theory. When a statistical model is used to represent the process that generated the data, the representation will almost never be exact; so some information will be lost by using the model to represent the process. AIC estimates the relative amount of information lost by a given model: the less information a model loses, the higher the quality of that model. In estimating the amount of information lost by a model, AIC deals with the trade-off between the goodness of fit of the model and the simplicity of the model. In other words, AIC deals with both the risk of overfitting and the risk of underfitting. The Akaike information crite ...
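The trade-off between fit and simplicity can be seen in a small worked comparison. The excerpt is cut off before the formula, so this sketch assumes the standard definition AIC = 2k - 2 ln(L_hat). The two candidate models and their log-likelihoods are invented numbers for illustration.

```python
def aic(log_likelihood, k):
    """AIC = 2k - 2 * ln(L_hat) (standard definition, assumed here)."""
    return 2 * k - 2.0 * log_likelihood

# Hypothetical candidates: (maximized log-likelihood, number of parameters)
candidates = {
    "small": (-120.0, 2),   # slightly worse fit, few parameters
    "large": (-118.5, 5),   # slightly better fit, more parameters
}
scores = {name: aic(ll, k) for name, (ll, k) in candidates.items()}
best = min(scores, key=scores.get)   # lower AIC is preferred
```

Here the larger model's gain in likelihood does not cover the cost of its three extra parameters, so the smaller model is selected.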

Autocorrelation Function

Autocorrelation, sometimes known as serial correlation in the discrete time case, measures the correlation of a signal with a delayed copy of itself. Essentially, it quantifies the similarity between observations of a random variable at different points in time. The analysis of autocorrelation is a mathematical tool for identifying repeating patterns or hidden periodicities within a signal obscured by noise. Autocorrelation is widely used in signal processing, time domain and time series analysis to understand the behavior of data over time. Different fields of study define autocorrelation differently, and not all of these definitions are equivalent. In some fields, the term is used interchangeably with autocovariance. Various time series models incorporate autocorrelation, such as unit root processes, trend-stationary processes, autoregressive processes, and moving average processes.

Autocorrelation of stochastic processes: In statistics, the autocorrelation of a real or ...
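Since definitions differ between fields, the sketch below fixes one common choice from time series analysis: the sample autocorrelation at lag k is the lag-k sum of products of mean-centered values divided by the lag-0 sum. The function name `sample_acf` is illustrative.

```python
def sample_acf(x, max_lag):
    """Sample autocorrelations r_0, ..., r_max_lag of a sequence x."""
    n = len(x)
    mean = sum(x) / n
    dev = [v - mean for v in x]          # mean-centered series
    c0 = sum(d * d for d in dev)         # lag-0 sum of squares
    return [
        sum(dev[t] * dev[t + k] for t in range(n - k)) / c0
        for k in range(max_lag + 1)
    ]

acf = sample_acf([1, 2, 3, 4, 5], max_lag=2)
```

By construction the lag-0 value is always 1, and the remaining values measure how similar the series is to a copy of itself delayed by k steps.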

Partial Autocorrelation Function

In time series analysis, the partial autocorrelation function (PACF) gives the partial correlation of a stationary time series with its own lagged values, after regressing out the values of the time series at all shorter lags. It contrasts with the autocorrelation function, which does not control for other lags. This function plays an important role in data analysis aimed at identifying the extent of the lag in an autoregressive (AR) model. The use of this function was introduced as part of the Box–Jenkins approach to time series modelling, whereby plotting the partial autocorrelation function one could determine the appropriate lags p in an AR(p) model or in an extended ARIMA(p,d,q) model.

Definition: Given a time series z_t, the partial autocorrelation of lag k, denoted \phi_{k,k}, is the autocorrelation between z_t and z_{t+k} with the linear dependence of z_t on z_{t+1} through z_{t+k-1} removed. Equivalently, it is the ...
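One standard way to obtain the partial autocorrelations \phi_{k,k} from a list of autocorrelations is the Durbin–Levinson recursion, which the excerpt does not mention. The sketch below is a minimal version of that recursion; the function name `pacf_from_acf` and the AR(1) example are illustrative assumptions.

```python
def pacf_from_acf(rho):
    """Durbin-Levinson recursion.

    rho -- autocorrelations [rho_0, rho_1, ..., rho_K] with rho_0 == 1
    Returns [phi_11, phi_22, ..., phi_KK].
    """
    K = len(rho) - 1
    pacf = []
    prev = []  # prev[j] holds phi_{k-1, j+1}
    for k in range(1, K + 1):
        num = rho[k] - sum(prev[j] * rho[k - 1 - j] for j in range(k - 1))
        den = 1.0 - sum(prev[j] * rho[j + 1] for j in range(k - 1))
        phi_kk = num / den
        # Update the lower-order coefficients, then append phi_kk
        prev = [prev[j] - phi_kk * prev[k - 2 - j] for j in range(k - 1)] + [phi_kk]
        pacf.append(phi_kk)
    return pacf

# Theoretical ACF of an AR(1) process with coefficient 0.5: rho_k = 0.5 ** k
pacf = pacf_from_acf([0.5 ** k for k in range(4)])
```

For an AR(1) process the partial autocorrelation is nonzero only at lag 1, which is the cut-off pattern the Box–Jenkins approach uses to choose p.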

Variance

In probability theory and statistics, variance is the expected value of the squared deviation from the mean of a random variable. The standard deviation (SD) is obtained as the square root of the variance. Variance is a measure of dispersion, meaning it is a measure of how far a set of numbers is spread out from their average value. It is the second central moment of a distribution, and the covariance of the random variable with itself, and it is often represented by \sigma^2, s^2, \operatorname{Var}(X), V(X), or \mathbb{V}(X). An advantage of variance as a measure of dispersion is that it is more amenable to algebraic manipulation than other measures of dispersion such as the expected absolute deviation; for example, the variance of a sum of uncorrelated random variables is equal to the sum of their variances. A disadvantage of the variance for practical applications is that, unlike the standard deviation, its units differ from the random variable, which is why the standard devi ...
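The additivity property can be checked on a tiny example. The sketch below takes two independent (hence uncorrelated) variables, X uniform on {0, 1} and Y uniform on {0, 2}, enumerates the four equally likely outcomes of X + Y, and compares variances using the standard library's `statistics` module. The specific values are chosen only for illustration.

```python
from itertools import product
from statistics import pstdev, pvariance

xs = [0, 1]   # equally likely values of X
ys = [0, 2]   # equally likely values of Y, independent of X

# All equally likely outcomes of X + Y under independence
sums = [x + y for x, y in product(xs, ys)]

var_x = pvariance(xs)       # population variance of X
var_y = pvariance(ys)       # population variance of Y
var_sum = pvariance(sums)   # population variance of X + Y
sd_sum = pstdev(sums)       # standard deviation: square root of the variance
```

The variances add, but the standard deviations do not, which is one reason variance is the more convenient quantity for algebra.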

Spectral Density

In signal processing, the power spectrum S_{xx}(f) of a continuous time signal x(t) describes the distribution of power into frequency components f composing that signal. According to Fourier analysis, any physical signal can be decomposed into a number of discrete frequencies, or a spectrum of frequencies over a continuous range. The statistical average of any sort of signal (including noise), as analyzed in terms of its frequency content, is called its spectrum. When the energy of the signal is concentrated around a finite time interval, especially if its total energy is finite, one may compute the energy spectral density. More commonly used is the power spectral density (PSD, or simply power spectrum), which applies to signals existing over all time, or over a time period large enough (especially in relation to the duration of a measurement) that it could as well have been over an infinite time interval. The PSD then refers to the spectral energy distribution that would be ...
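For sampled data, a simple estimate of the power spectrum is the periodogram: the squared magnitude of the discrete Fourier transform divided by the number of samples. The description above concerns continuous-time signals, so this discrete version is an assumption made for illustration, as are the function name `periodogram` and the test signal.

```python
import cmath
import math

def periodogram(x):
    """Periodogram |DFT_k|^2 / n for frequency bins k = 0, ..., n // 2."""
    n = len(x)
    power = []
    for k in range(n // 2 + 1):
        # Direct (O(n^2)) evaluation of the k-th DFT coefficient
        coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        power.append(abs(coeff) ** 2 / n)
    return power

# A pure cosine completing 4 cycles over 32 samples
n_samples = 32
signal = [math.cos(2 * math.pi * 4 * t / n_samples) for t in range(n_samples)]
power = periodogram(signal)
peak = max(range(len(power)), key=power.__getitem__)
```

All of the signal's power lands in the bin that matches its frequency, which is the discrete counterpart of decomposing a signal into its frequency components.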

Gwilym M

Gwilym is a Welsh given name and surname, related to William, Guillaume, and others in a number of other languages.

Given name:
* Gwilym ab Ieuan Hen (1440–1480), Welsh language poet
* Gwilym Davies (minister) CBE (1879–1955), Welsh Baptist minister
* Gwilym Edwards (1881–1963), Welsh Presbyterian minister
* Gwilym Ellis Lane Owen (1922–1982), Welsh philosopher
* Gwil Owen (born 1960), American singer/songwriter
* Gwilym Gibbons (born 1971), British arts leader
* Gwilym Gwent (1834–1891), Welsh-born composer in the United States
* Gwilym Jenkins (1933–1982), British statistician and systems engineer
* Gwilym Jones (born 1947), British Conservative politician
* Gwilym Kessey (1919–1986), Australian cricketer
* Gwilym Lee (born 1983), British actor
* Gwilym Lloyd George, 1st Viscount Tenby (1894–1967), politician and UK cabinet minister
* Gwilym Thomas Mainwaring (1941–2019), Welsh rugby player
* Gwilym Owen Williams (1913–1990), Anglican Archbishop of Wales from 19 ...

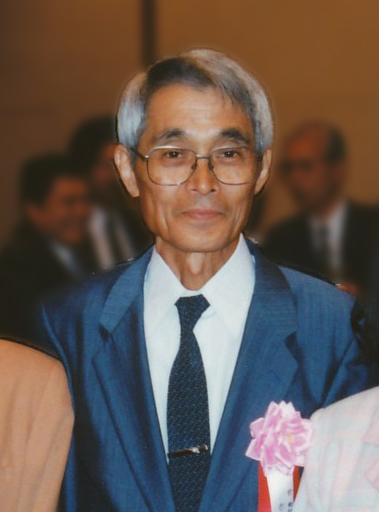

George Box

George Edward Pelham Box (18 October 1919 – 28 March 2013) was a British statistician who worked in the areas of quality control, time-series analysis, design of experiments, and Bayesian inference. He has been called "one of the great statistical minds of the 20th century". He is famous for the quote "All models are wrong but some are useful".

Education and early life: He was born in Gravesend, Kent, England. Upon entering university he began to study chemistry, but was called up for service before finishing. During World War II, he performed experiments for the British Army exposing small animals to poison gas. To analyze the results of his experiments, he taught himself statistics from available texts. After the war, he enrolled at University College London and obtained a bachelor's degree in mathematics and statistics. He received a PhD from the University of London in 1953, under the supervision of Egon Pearson and H. O. Hartley.

Career and ...