
Adversarial Information Retrieval

Adversarial information retrieval (adversarial IR) is a topic in information retrieval concerned with strategies for working with a data source, some portion of which has been manipulated maliciously. Tasks can include gathering, indexing, filtering, retrieving, and ranking information from such a data source. Adversarial IR includes the study of methods to detect, isolate, and defeat such manipulation. On the Web, the predominant form of such manipulation is search engine spamming (also known as spamdexing), which involves employing various techniques to disrupt the activity of web search engines, usually for financial gain. Examples of spamdexing are link-bombing, comment or referrer spam, spam blogs (splogs), and malicious tagging. Reverse engineering of ranking algorithms, click fraud, and web content filtering may also be considered forms of adversarial data manipulation.

Topics

Topics related to Web spam (spamdexing):

* Link spam
* Keyword spamming
* Cloaking
* Mali ...


Information Retrieval

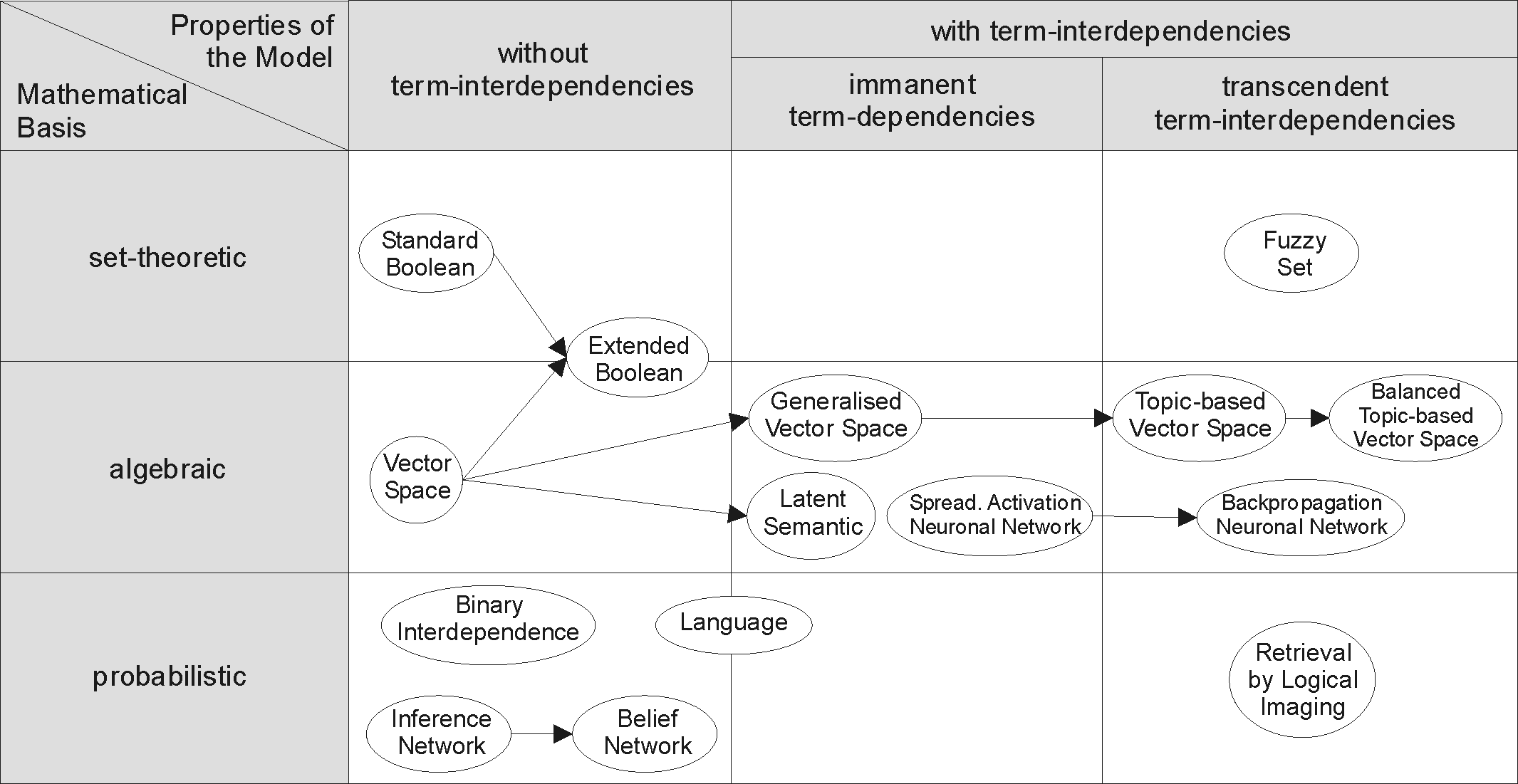

Information retrieval (IR) in computing and information science is the task of identifying and retrieving information system resources that are relevant to an information need. The information need can be specified in the form of a search query. In the case of document retrieval, queries can be based on full-text or other content-based indexing. Information retrieval is the science of searching for information in a document, searching for documents themselves, and also searching for the metadata that describes data, and for databases of texts, images or sounds. Automated information retrieval systems are used to reduce what has been called information overload. An IR system is a software system that provides access to books, journals and other documents; it also stores and manages those documents. Web search engines are the most visible IR applications.

Overview

An information retrieval process begins when a user enters a query into the sys ...
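The full-text indexing mentioned in this entry can be illustrated with a toy inverted index. This is only a minimal sketch, not how any particular IR system works: the sample documents, the `tokenize`, `build_index`, and `search` names, and the choice of a plain boolean AND over query terms are all assumptions made for illustration.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs):
    """Map each term to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index


def search(index, query):
    """Return ids of documents containing every query term (boolean AND)."""
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


# Hypothetical mini-collection, used only for this example.
docs = {
    1: "Web search engines are the most visible IR applications.",
    2: "An IR system provides access to books, journals and other documents.",
    3: "Search queries express an information need.",
}

index = build_index(docs)
matches = sorted(search(index, "search engines"))
print(matches)
```

Real systems add ranking, stemming, and much larger index structures; the sketch only shows the lookup step that turns a query into a candidate set of documents.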


Click Fraud

Click fraud is a type of ad fraud that occurs on the Internet in pay per click (PPC) online advertising. In this type of advertising, the owners of websites that post the ads are paid based on how many site visitors click on the ads. Fraud occurs when a person, automated script, computer program or an auto clicker imitates a legitimate user of a web browser, clicking on such an ad without having an actual interest in the target of the ad's link in order to increase revenue. Click fraud is the subject of some controversy and increasing litigation due to the advertising networks being a key beneficiary of the fraud. Media entrepreneur and journalist John Battelle describes click fraud as the intentionally malicious, "decidedly black hat" practice of publishers gaming paid search advertising by employing robots or low-wage workers to click on ads on their sites repeatedly, thereby generating money to be paid by the advertiser to the publisher and to any agent the advertiser may b ...


Artificial Intelligence Content Detection

Artificial intelligence detection software aims to determine whether some content (text, image, video or audio) was generated using artificial intelligence (AI). However, this software is often unreliable.

Accuracy issues

Many AI detection tools have been shown to be unreliable when detecting AI-generated text. In a 2023 study conducted by Weber-Wulff et al., researchers evaluated 14 detection tools including Turnitin and GPTZero and found that "all scored below 80% of accuracy and only 5 over 70%." They also found that these tools tend to have a bias for classifying texts more as human than as AI, and that accuracy of these tools worsens upon paraphrasing.

False positives

In AI content detection, a false positive is when human-written work is incorrectly flagged as AI-written. Many AI detection platforms claim to have a minimal level of false positives, with Turnitin claiming a less t ...
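To make the terms in this entry concrete, the sketch below computes accuracy and the false positive rate for a detector. It is an illustration only: the labels and predictions are invented sample data, not results from the study cited above, and `detector_metrics` is a hypothetical helper name.

```python
def detector_metrics(labels, predictions):
    """Return (accuracy, false_positive_rate) for an AI-text detector.

    Both arguments are equal-length sequences of "human" or "ai".
    A false positive is a human-written item flagged as "ai".
    """
    pairs = list(zip(labels, predictions))
    correct = sum(1 for truth, guess in pairs if truth == guess)
    human_total = sum(1 for truth, _ in pairs if truth == "human")
    false_positives = sum(
        1 for truth, guess in pairs if truth == "human" and guess == "ai"
    )
    accuracy = correct / len(pairs)
    fpr = false_positives / human_total if human_total else 0.0
    return accuracy, fpr


# Invented sample data: four human-written and four AI-generated texts.
labels = ["human", "human", "human", "human", "ai", "ai", "ai", "ai"]
predictions = ["human", "human", "human", "ai", "ai", "ai", "human", "human"]

accuracy, fpr = detector_metrics(labels, predictions)
print(f"accuracy={accuracy:.3f} false_positive_rate={fpr:.3f}")
```

In this made-up sample the detector misses two of the four AI texts but flags only one human text, which mirrors the kind of bias toward the "human" label described in the entry.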


Text Retrieval Conference

The Text REtrieval Conference (TREC) is an ongoing series of workshops focusing on a list of different information retrieval (IR) research areas, or tracks. It is co-sponsored by the National Institute of Standards and Technology (NIST) and the Intelligence Advanced Research Projects Activity (part of the office of the Director of National Intelligence), and began in 1992 as part of the TIPSTER Text program. Its purpose is to support and encourage research within the information retrieval community by providing the infrastructure necessary for large-scale evaluation of text retrieval methodologies and to increase the speed of lab-to-product transfer of technology. TREC's evaluation protocols have improved many search technologies. A 2010 study estimated that "without TREC, U.S. Internet users would have spent up to 3.15 billion additional hours using web search engines between 1999 and 2009." Hal Varian, the Chief Economist at Google, wrote that "The TREC data revital ...


Alta Vista

AltaVista was a web search engine established in 1995. It became one of the most-used early search engines, but lost ground to Google and was purchased by Yahoo! in 2003, which retained the brand, but based all AltaVista searches on its own search engine. On July 8, 2013, the service was shut down by Yahoo!, and since then the domain has redirected to Yahoo!'s own search site.

Etymology

The word "AltaVista" is formed from the words for "high view" or "upper view" in Spanish (alta + vista); thus, it colloquially translates to "overview".

Origins

AltaVista was created by researchers at Digital Equipment Corporation's Network Systems Laboratory and Western Research Laboratory who were trying to provide services to make finding files on the public network easier. Paul Flaherty came up with the original idea, along with Louis Monier and Michael Burrows, who wrote the Web crawler and indexer, respectively. The name "AltaVista" was chosen in relation to the surroundings of th ...


Andrei Broder

Andrei Zary Broder (born April 12, 1953) is a distinguished scientist at Google. Previously, he was a research fellow and vice president of computational advertising for Yahoo!, and before that, the vice president of research for AltaVista. He has also worked for IBM Research as a distinguished engineer and was CTO of IBM's Institute for Search and Text Analysis.

Education and career

Broder was born in Bucharest, Romania, in 1953. His parents were medical doctors, his father a noted oncological surgeon. They emigrated to Israel in 1973, when Broder was in the second year of college in Romania, in the Electronics department at the Politehnica University of Bucharest. He was accepted at Technion – Israel Institute of Technology, in the EE Department. Broder graduated from Technion in 1977, with a B.Sc. summa cum laude. He was then admitted to the PhD program at Stanford, where he initially planned to work in the systems area. His first adviser was John L. Hennessy. After receiv ...


Sockpuppetry

A sock puppet, sock puppet account, or simply sock is a false online identity used for deceptive purposes. The term originally referred to a hand puppet made from a sock. Sock puppets include online identities created to praise, defend, or support a person or organization, to manipulate public opinion, or to circumvent restrictions such as viewing a social media account that a user is blocked from. Sock puppets are unwelcome in many online communities and forums.

History

The practice of writing pseudonymous self-reviews began before the Internet. Writers Walt Whitman and Anthony Burgess wrote pseudonymous reviews of their own books (Amy Harmon, "Amazon Glitch Unmasks War Of Reviewers", The New York Times, February 14, 2004), as did Benjamin Franklin. The Oxford English Dictionary defines the term without reference to the internet, as "a person whose actions are controlled by another; a minion" with a 2000 citation from U.S. News & World Report. Wikipedia has h ...


Astroturfing

Astroturfing is the deceptive practice of hiding the sponsors of an orchestrated message or organization (e.g., political, economic, advertising, religious, or public relations) to make it appear as though it originates from, and is supported by, unsolicited grassroots participants. It is a practice intended to give the statements or organizations credibility by withholding information about the source's financial backers. The implication behind the use of the term is that instead of a "true" or "natural" grassroots effort behind the activity in question, there is a "fake" or "artificial" appearance of support. It is increasingly recognized as a problem in social media, e-commerce, and politics. Astroturfing can influence public opinion by flooding platforms like political blogs, news sites, and review websites with manipulated content. Some groups accused of astroturfing argue that they are legitimately helping citizen activists to make their voices heard. ...


Social Networks

A social network is a social structure consisting of a set of social actors (such as individuals or organizations), networks of dyadic ties, and other social interactions between actors. The social network perspective provides a set of methods for analyzing the structure of whole social entities along with a variety of theories explaining the patterns observed in these structures. The study of these structures uses social network analysis to identify local and global patterns, locate influential entities, and examine dynamics of networks. For instance, social network analysis has been used in studying the spread of misinformation on social media platforms or analyzing the influence of key figures in social networks. Social networks and their analysis constitute an inherently interdisciplinary academic field that emerged from social psychology, sociology, statistics, and graph theory. Georg Simmel authored early structural theories in sociology emphasizing the dynamics of tria ...


Troll (Internet)

In slang, a troll is a person who posts deliberately offensive or provocative messages online (such as in social media, a newsgroup, a forum, a chat room, or an online video game) or who performs similar behaviors in real life. The methods and motivations of trolls can range from benign to sadistic. These messages can be inflammatory, insincere, digressive, extraneous, or off-topic, and may have the intent of provoking others into displaying emotional responses, or manipulating others' perceptions, thus acting as a bully or a provocateur. The behavior is typically for the troll's amusement, or to achieve a specific result such as disrupting a rival's online activities or purposefully causing confusion or harm to other people. Trolling behaviors involve tactical aggression to incite emotional responses, which can adversely affect the ta ...


Web Crawling

A web crawler, sometimes called a spider or spiderbot and often shortened to crawler, is an Internet bot that systematically browses the World Wide Web and that is typically operated by search engines for the purpose of Web indexing (web spidering). Web search engines and some other websites use Web crawling or spidering software to update their web content or indices of other sites' web content. Web crawlers copy pages for processing by a search engine, which indexes the downloaded pages so that users can search more efficiently. Crawlers consume resources on visited systems and often visit sites unprompted. Issues of schedule, load, and "politeness" come into play when large collections of pages are accessed. Mechanisms exist for public sites not wishing to be crawled to make this known to the crawling agent. For example, including a robots.txt file can request bots to index only parts of a website, or nothing at all. The number of Internet pages is extremely large; even t ...
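The robots.txt mechanism described in this entry can be checked from a crawler with Python's standard `urllib.robotparser` module. The sketch below is a minimal illustration: the robots.txt content, the `ExampleBot` user agent, and the `example.com` URLs are all made up, and the rules are parsed from a string rather than fetched over the network so the example stays self-contained.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt asking all bots to stay out of /private/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
# parse() accepts the file's lines, so no HTTP request is needed here.
parser.parse(ROBOTS_TXT.splitlines())


def allowed(url, agent="ExampleBot"):
    """Return True if the parsed rules let the given agent fetch the URL."""
    return parser.can_fetch(agent, url)


print(allowed("https://example.com/index.html"))
print(allowed("https://example.com/private/data.html"))
```

A polite crawler would run a check like this before every request; note that robots.txt is a request to crawlers, not an enforcement mechanism.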


Ad Filtering

Ad blocking (or ad filtering) is a software capability for blocking or altering online advertising in a web browser, an application or a network. This may be done using browser extensions, browsers with built-in blocking, or other methods.

History

The first ad blocker was Internet Fast Forward, a plugin for the Netscape Navigator browser, developed by PrivNet and released in 1996. The AdBlock extension for Firefox was developed in 2002, with Adblock Plus being released in 2006. uBlock Origin, originally called "uBlock", was first released in 2014.

Technologies and native countermeasures

Online advertising exists in a variety of forms, including web banners, pictures, animations, embedded audio and video, text, or pop-up windows, and can even employ audio and video autoplay. Many browsers offer some ways to remove or alter advertisements: either by targeting technologies that are used to deliver ads (such as embedded content delivered through browser plug-ins or via HTML ...
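One simple way to picture ad filtering is a blocklist of hostnames checked against each outgoing request. The sketch below assumes that approach purely for illustration; the entry does not say which technique any specific blocker uses, and the `BLOCKED_DOMAINS` entries and the `should_block` helper are hypothetical.

```python
from urllib.parse import urlparse

# Hypothetical blocklist; real filter lists are far larger and support
# richer rule syntax than plain domain names.
BLOCKED_DOMAINS = {"ads.example", "tracker.example"}


def should_block(url):
    """Return True if the URL's host is a blocked domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return any(
        host == domain or host.endswith("." + domain)
        for domain in BLOCKED_DOMAINS
    )


for url in (
    "https://cdn.ads.example/banner.js",
    "https://news.example/story.html",
):
    print(url, "->", "blocked" if should_block(url) else "allowed")
```

Matching on the dot-prefixed suffix keeps a host such as `notads.example` from being blocked just because its name ends with the same characters as a listed domain.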