
Orthonormality

In linear algebra, two vectors in an inner product space are orthonormal if they are orthogonal unit vectors. A unit vector is a vector of length 1, also called a normalized vector. Orthogonal means that the vectors are perpendicular to each other. A set of vectors forms an orthonormal set if all vectors in the set are mutually orthogonal and all have unit length. An orthonormal set that forms a basis is called an orthonormal basis. Intuitive overview: the construction of orthogonality of vectors is motivated by a desire to extend the intuitive notion of perpendicular vectors to higher-dimensional spaces. In the Cartesian plane, two vectors are said to be perpendicular if the angle between them is 90° (i.e. if they form a right angle). This definition can be formalized in Cartesian space by defining the dot produc ...
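The definition above can be checked numerically. The following is a minimal sketch, assuming real vectors and the standard dot product as the inner product; the helper names `dot` and `is_orthonormal` and the tolerance `tol` are illustrative choices, not part of the source text.

```python
import math

def dot(u, v):
    """Standard dot product of two equal-length real vectors."""
    return sum(a * b for a, b in zip(u, v))

def is_orthonormal(vectors, tol=1e-9):
    """True if every vector has unit length and distinct vectors are orthogonal.

    Equivalent to checking dot(v_i, v_j) == 1 when i == j and 0 otherwise,
    up to the floating-point tolerance `tol` (an assumed value).
    """
    for i, u in enumerate(vectors):
        for j, v in enumerate(vectors):
            expected = 1.0 if i == j else 0.0
            if abs(dot(u, v) - expected) > tol:
                return False
    return True

s = 1 / math.sqrt(2)
rotated = [(s, s), (-s, s)]          # standard basis of the plane rotated by 45 degrees
not_unit = [(1.0, 0.0), (0.0, 2.0)]  # orthogonal, but the second vector has length 2
```

Here `is_orthonormal(rotated)` holds, while `is_orthonormal(not_unit)` fails because orthogonality alone is not enough.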

Polar Coordinates

In mathematics, the polar coordinate system specifies a given point in a plane by using a distance and an angle as its two coordinates. These are the point's distance from a reference point called the pole, and the point's direction from the pole relative to the direction of the polar axis, a ray drawn from the pole. The distance from the pole is called the radial coordinate, radial distance or simply radius, and the angle is called the angular coordinate, polar angle, or azimuth. The pole is analogous to the origin in a Cartesian coordinate system. Polar coordinates are most appropriate in any context where the phenomenon being considered is inherently tied to direction and length from a center point in a plane, such as spirals. Planar physical systems with bodies moving around a central point, or phenomena originating from a central point, are often simpler and more in ...
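As an illustration of how the radial and angular coordinates relate to Cartesian ones, here is a small sketch. It assumes the pole sits at the Cartesian origin, the polar axis lies along the positive x-axis, and angles are measured counterclockwise in radians; these conventions and the function names are assumptions, not taken from the text above.

```python
import math

def polar_to_cartesian(r, theta):
    """Convert radius r and polar angle theta (radians) to (x, y)."""
    return (r * math.cos(theta), r * math.sin(theta))

def cartesian_to_polar(x, y):
    """Convert (x, y) to (r, theta), with theta in (-pi, pi]."""
    # atan2 picks the correct quadrant, unlike atan(y / x).
    return (math.hypot(x, y), math.atan2(y, x))
```

For example, a point at radius 2 and angle π/2 lies on the positive y-axis, at approximately (0, 2).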

Linear Algebra

Linear algebra is the branch of mathematics concerning linear equations such as $a_1x_1+\cdots +a_nx_n=b$, linear maps such as $(x_1, \ldots, x_n) \mapsto a_1x_1+\cdots +a_nx_n$, and their representations in vector spaces and through matrices. Linear algebra is central to almost all areas of mathematics. For instance, linear algebra is fundamental in modern presentations of geometry, including for defining basic objects such as lines, planes and rotations. Also, functional analysis, a branch of mathematical analysis, may be viewed as the application of linear algebra to function spaces. Linear algebra is also used in most sciences and fields of engineering because it allows modeling many natural phenomena, and computing efficiently with such models. For nonlinear systems, which cannot be modeled with linear algebra, it is often used for dealing with first-order a ...

Cotangent

In mathematics, the trigonometric functions (also called circular functions, angle functions or goniometric functions) are real functions which relate an angle of a right-angled triangle to ratios of two side lengths. They are widely used in all sciences that are related to geometry, such as navigation, solid mechanics, celestial mechanics, geodesy, and many others. They are among the simplest periodic functions, and as such are also widely used for studying periodic phenomena through Fourier analysis. The trigonometric functions most widely used in modern mathematics are the sine, the cosine, and the tangent functions. Their reciprocals are respectively the cosecant, the secant, and the cotangent functions, which are less used. Each of these six trigonometric functions has a corresponding inverse function, and an analog among the hyperbolic functions. The oldest definitions of trigonometric functions, related to right-angle triangles, define them only for acute angle ...
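The reciprocal relationships mentioned above can be written out directly. This is a minimal sketch using Python's standard `math` module; the function names are illustrative, and the functions are undefined (division by zero) wherever the underlying sine, cosine, or tangent is exactly zero.

```python
import math

def cosecant(x):
    """Reciprocal of the sine."""
    return 1 / math.sin(x)

def secant(x):
    """Reciprocal of the cosine."""
    return 1 / math.cos(x)

def cotangent(x):
    """Reciprocal of the tangent."""
    return 1 / math.tan(x)
```

For instance, `cotangent(math.pi / 4)` is 1 up to floating-point rounding, since the tangent of 45° is 1.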

Standard Basis

In mathematics, the standard basis (also called natural basis or canonical basis) of a coordinate vector space (such as $\mathbb{R}^n$ or $\mathbb{C}^n$) is the set of vectors, each of whose components are all zero, except one that equals 1. For example, in the case of the Euclidean plane $\mathbb{R}^2$ formed by the pairs of real numbers, the standard basis is formed by the vectors $\mathbf{e}_x = (1,0),\quad \mathbf{e}_y = (0,1)$. Similarly, the standard basis for the three-dimensional space $\mathbb{R}^3$ is formed by vectors $\mathbf{e}_x = (1,0,0),\quad \mathbf{e}_y = (0,1,0),\quad \mathbf{e}_z=(0,0,1)$. Here the vector $\mathbf{e}_x$ points in the x direction, the vector $\mathbf{e}_y$ points in the y direction, and the vector $\mathbf{e}_z$ points in the z direction. There are several common notations for standard-basis vectors. These vectors are sometimes written with a hat to emphasize their status as unit vectors (standard unit vectors). These vectors are a basis in the sense that any othe ...
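The construction above can be sketched in a few lines. The helpers `standard_basis` and `combine` are hypothetical names introduced for illustration; `combine` shows the well-known fact that a vector's components are exactly its coefficients with respect to the standard basis.

```python
def standard_basis(n):
    """Return the n standard basis vectors of an n-dimensional coordinate space."""
    # The i-th vector has a 1 in position i and 0 everywhere else.
    return [tuple(1 if i == j else 0 for j in range(n)) for i in range(n)]

def combine(coeffs, basis):
    """Linear combination sum(c_i * b_i) of basis vectors with the given coefficients."""
    n = len(basis[0])
    return tuple(sum(c * b[k] for c, b in zip(coeffs, basis)) for k in range(n))
```

With these definitions, `standard_basis(2)` gives `[(1, 0), (0, 1)]`, matching the vectors listed for the Euclidean plane.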

Spectral Theorem

In linear algebra and functional analysis, a spectral theorem is a result about when a linear operator or matrix can be diagonalized (that is, represented as a diagonal matrix in some basis). This is extremely useful because computations involving a diagonalizable matrix can often be reduced to much simpler computations involving the corresponding diagonal matrix. The concept of diagonalization is relatively straightforward for operators on finite-dimensional vector spaces but requires some modification for operators on infinite-dimensional spaces. In general, the spectral theorem identifies a class of linear operators that can be modeled by multiplication operators, which are as simple as one can hope to find. In more abstract language, the spectral theorem is a statement about commutative C*-algebras. See also spectral theory for a historical perspective. Examples of operators to which the spectral theorem applies are self-adjoint operators or more generally normal operator ...

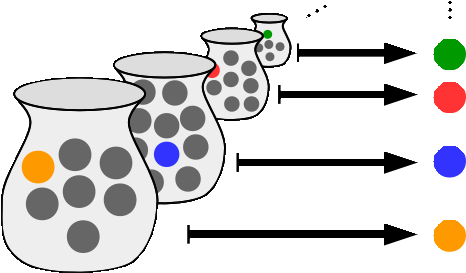

Axiom Of Choice

In mathematics, the axiom of choice, abbreviated AC or AoC, is an axiom of set theory. Informally put, the axiom of choice says that given any collection of non-empty sets, it is possible to construct a new set by choosing one element from each set, even if the collection is infinite. Formally, it states that for every indexed family $(S_i)_{i \in I}$ of nonempty sets ($S_i$ as a nonempty set indexed with $i$), there exists an indexed set $(x_i)_{i \in I}$ such that $x_i \in S_i$ for every $i \in I$. The axiom of choice was formulated in 1904 by Ernst Zermelo in order to formalize his proof of the well-ordering theorem. The axiom of choice is equivalent to the statement that every partition has a transversal. In many cases, a set created by choosing elements can be made without invoking the axiom of choice, particularly if the number of sets from which to choose the elements is finite, or if a canonical rule on how to choose the elements is available: some distinguishing property that happens to ...
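The remark about canonical rules can be made concrete for a finite family of sets of numbers. In the sketch below, "take the smallest element" serves as the canonical rule; the function name `choice_function` and the dictionary representation of the indexed family are assumptions made for illustration.

```python
def choice_function(family):
    """Pick one element from each nonempty set of an indexed family.

    `family` maps each index i to a finite nonempty set S_i of comparable
    elements. The canonical rule used here is "take the minimum", so no
    appeal to the axiom of choice is needed.
    """
    chosen = {}
    for index, members in family.items():
        if not members:
            raise ValueError(f"set at index {index!r} is empty")
        chosen[index] = min(members)
    return chosen
```

Each chosen element belongs to its set, which is the property $x_i \in S_i$ from the formal statement.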

Constructive Proof

In mathematics, a constructive proof is a method of proof that demonstrates the existence of a mathematical object by creating or providing a method for creating the object. This is in contrast to a non-constructive proof (also known as an existence proof or pure existence theorem), which proves the existence of a particular kind of object without providing an example. For avoiding confusion with the stronger concept that follows, such a constructive proof is sometimes called an effective proof. A constructive proof may also refer to the stronger concept of a proof that is valid in constructive mathematics. Constructivism is a mathematical philosophy that rejects all proof methods that involve the existence of objects that are not explicitly built. This excludes, in particular, the use of the law of the excluded middle, the axiom of infinity, and the axiom of choice. Constructivism also induces a different mean ...

Linearly Independent

In the theory of vector spaces, a set of vectors is said to be linearly independent if there exists no nontrivial linear combination of the vectors that equals the zero vector. If such a linear combination exists, then the vectors are said to be linearly dependent. These concepts are central to the definition of dimension. A vector space can be of finite dimension or infinite dimension depending on the maximum number of linearly independent vectors. The definition of linear dependence and the ability to determine whether a subset of vectors in a vector space is linearly dependent are central to determining the dimension of a vector space. Definition: a sequence of vectors $\mathbf{v}_1, \mathbf{v}_2, \dots, \mathbf{v}_k$ from a vector space is said to be linearly dependent if there exist scalars $a_1, a_2, \dots, a_k$, not all zero, such that $a_1\mathbf{v}_1 + a_2\mathbf{v}_2 + \cdots + a_k\mathbf{v}_k = \mathbf{0}$, where $\mathbf{0}$ denotes the zero vector. This implies that at least one of the scalars is nonzero, say $a_1\ne$ ...
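One common way to test the definition above for finitely many coordinate vectors is to compute the rank of the matrix whose rows are those vectors: the vectors are linearly independent exactly when the rank equals their number. The sketch below uses Gaussian elimination over exact fractions; the function names are illustrative and this is one possible approach, not a method prescribed by the text.

```python
from fractions import Fraction

def rank(vectors):
    """Rank of the matrix whose rows are the given vectors (Gaussian elimination)."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    n_cols = len(rows[0]) if rows else 0
    pivots = 0  # number of pivot rows found so far
    for col in range(n_cols):
        # Look for a row at or below the current pivot position with a nonzero entry.
        pivot = next((r for r in range(pivots, len(rows)) if rows[r][col] != 0), None)
        if pivot is None:
            continue
        rows[pivots], rows[pivot] = rows[pivot], rows[pivots]
        # Eliminate this column from all rows below the pivot row.
        for r in range(pivots + 1, len(rows)):
            factor = rows[r][col] / rows[pivots][col]
            rows[r] = [a - factor * b for a, b in zip(rows[r], rows[pivots])]
        pivots += 1
    return pivots

def is_linearly_independent(vectors):
    """True if no nontrivial linear combination of the vectors is the zero vector."""
    return rank(vectors) == len(vectors)
```

For example, (1, 2) and (2, 4) are dependent because 2·(1, 2) − (2, 4) is the zero vector, and the rank test reports this.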

Linear Map

In mathematics, and more specifically in linear algebra, a linear map (also called a linear mapping, linear transformation, vector space homomorphism, or in some contexts linear function) is a mapping $V \to W$ between two vector spaces that preserves the operations of vector addition and scalar multiplication. The same names and the same definition are also used for the more general case of modules over a ring; see Module homomorphism. If a linear map is a bijection then it is called a linear isomorphism. In the case where $V = W$, a linear map is called a linear endomorphism. Sometimes the term linear operator refers to this case, but the term "linear operator" can have different meanings for different conventions: for example, it can be used to emphasize that $V$ and $W$ are real vector spaces (not necessarily with $V = W$), or it can be used to emphasize that $V$ is a function space, which is a common convention in functional analysis. Sometimes the term linear function has the same meaning as linear m ...
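The two preservation properties can be checked on a concrete example. The sketch below assumes a map from the plane to three-dimensional space given by multiplication with a fixed matrix `A`; the matrix, the sample vectors, and the helper names are all illustrative choices.

```python
def apply(matrix, v):
    """Apply the map v -> matrix * v, with the matrix given as a list of rows."""
    return tuple(sum(a * x for a, x in zip(row, v)) for row in matrix)

def add(u, v):
    """Componentwise vector addition."""
    return tuple(a + b for a, b in zip(u, v))

def scale(c, v):
    """Multiplication of a vector by a scalar."""
    return tuple(c * a for a in v)

A = [[2, 0], [1, 3], [0, -1]]   # a map from 2-dimensional to 3-dimensional space
u, v, c = (1, 2), (-3, 4), 5

# Additivity: A(u + v) equals A(u) + A(v).
additive = apply(A, add(u, v)) == add(apply(A, u), apply(A, v))
# Homogeneity: A(c * u) equals c * A(u).
homogeneous = apply(A, scale(c, u)) == scale(c, apply(A, u))
```

Both `additive` and `homogeneous` evaluate to `True` here; checking sample vectors illustrates the definition but does not by itself prove linearity.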

Diagonalizable Matrix

In linear algebra, a square matrix $A$ is called diagonalizable or non-defective if it is similar to a diagonal matrix. That is, if there exists an invertible matrix $P$ and a diagonal matrix $D$ such that $P^{-1}AP = D$. This is equivalent to $A = PDP^{-1}$. (Such $D$ are not unique.) This property exists for any linear map: for a finite-dimensional vector space $V$, a linear map $T:V\to V$ is called diagonalizable if there exists an ordered basis of $V$ consisting of eigenvectors of $T$. These definitions are equivalent: if $T$ has a matrix representation $A = PDP^{-1}$ as above, then the column vectors of $P$ form a basis consisting of eigenvectors of $T$, and the diagonal entries of $D$ are the corresponding eigenvalues of $T$; with respect to this eigenvector basis, $T$ is represented by $D$. Diagonalization is the process of finding the above $P$ and $D$ and makes many subsequent computations easier. One can raise a diag ...
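As a worked illustration of the factorization $A = PDP^{-1}$, the sketch below uses a 2×2 matrix whose eigenvalues (5 and 2) and eigenvectors ((1, 1) and (1, −2)) were computed by hand for this example. It also uses the standard identity $A^k = PD^kP^{-1}$ to compute powers. The matrix, the helper names, and the restriction to 2×2 matrices are assumptions made for brevity.

```python
from fractions import Fraction

def matmul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def inverse_2x2(M):
    """Exact inverse of an invertible 2x2 matrix."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("matrix is not invertible")
    return [[d / det, -b / det], [-c / det, a / det]]

def power_via_diagonalization(P, eigenvalues, k):
    """Compute P * D^k * P^{-1}, where D is diagonal with the given eigenvalues."""
    # Raising a diagonal matrix to a power only requires powers of its entries.
    D_k = [[eigenvalues[0] ** k, 0], [0, eigenvalues[1] ** k]]
    return matmul(matmul(P, D_k), inverse_2x2(P))

A = [[4, 1], [2, 3]]
P = [[1, 1], [1, -2]]   # columns are eigenvectors of A
eigenvalues = [5, 2]    # diagonal entries of D, in the same order as the columns of P
```

With `k = 1` the function reproduces `A` itself, and with `k = 3` it agrees with multiplying `A` by itself three times.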