Inverse-variance Weighting

In statistics, inverse-variance weighting is a method of aggregating two or more random variables to minimize the variance of the weighted average. Each random variable is weighted in inverse proportion to its variance (i.e., in proportion to its precision). Given a sequence of independent observations y_i with variances \sigma_i^2, the inverse-variance weighted average is given by \hat{y} = \frac{\sum_i y_i / \sigma_i^2}{\sum_i 1 / \sigma_i^2}. The inverse-variance weighted average has the least variance among all weighted averages, which can be calculated as Var(\hat{y}) = \frac{1}{\sum_i 1 / \sigma_i^2}. This variance can be used to parametrize a confidence interval. If the variances of the measurements are all equal, then the inverse-variance weighted average becomes the simple average. Inverse-variance weighting is typically used in statistical meta-analysis or sensor fusion to combine the results from independent measurements. Context: Suppose an experimenter wishes to measure the value of a quantity, say the acceleration due to gravity of Earth, whose true ...
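
A minimal Python sketch of the weighting described above may help: each observation gets weight 1 / variance, and the variance of the combined estimate is the reciprocal of the summed weights. The function name and the sample gravity measurements are illustrative assumptions, not taken from the article.

```python
def inverse_variance_weighted_average(values, variances):
    """Return (weighted mean, variance of the weighted mean).

    Assumes the observations are independent and every variance is positive.
    """
    if not values or len(values) != len(variances):
        raise ValueError("values and variances must be non-empty and equal in length")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")
    weights = [1.0 / v for v in variances]          # precision of each observation
    total_weight = sum(weights)
    mean = sum(w * y for w, y in zip(weights, values)) / total_weight
    return mean, 1.0 / total_weight


# Hypothetical measurements of g (m/s^2) with their variances.
measurements = [9.79, 9.82, 9.80]
variances = [0.04, 0.01, 0.02]
mean, var = inverse_variance_weighted_average(measurements, variances)
```

With equal variances the same function reduces to the simple average, which matches the special case noted in the text.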

Statistics

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments. When census data (comprising every member of the target population) cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample ...

Projectile Motion

In physics, projectile motion describes the motion of an object that is launched into the air and moves under the influence of gravity alone, with air resistance neglected. In this idealized model, the object follows a parabolic path determined by its initial velocity and the constant acceleration due to gravity. The motion can be decomposed into horizontal and vertical components: the horizontal motion occurs at a constant velocity, while the vertical motion experiences uniform acceleration. This framework, which lies at the heart of classical mechanics, is fundamental to a wide range of applications, from engineering and ballistics to sports science and natural phenomena. Galileo Galilei showed that the trajectory of a given projectile is parabolic, but the path may also be straight in the special case when the object is thrown directly upward or downward. The study of such motions is called ballistics, and such a trajectory is described as ballistic. The only force of m ...
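
The decomposition into a constant-velocity horizontal component and a uniformly accelerated vertical component can be sketched in Python as follows. This assumes a launch from ground level, no air resistance, and g = 9.81 m/s^2; the function names and launch values are illustrative.

```python
import math


def projectile_position(v0, angle_deg, t, g=9.81):
    """Position (x, y) at time t for launch speed v0 and launch angle in degrees."""
    theta = math.radians(angle_deg)
    x = v0 * math.cos(theta) * t                    # constant horizontal velocity
    y = v0 * math.sin(theta) * t - 0.5 * g * t * t  # uniform vertical acceleration
    return x, y


def time_of_flight(v0, angle_deg, g=9.81):
    """Time at which the projectile returns to its launch height."""
    return 2.0 * v0 * math.sin(math.radians(angle_deg)) / g


# Hypothetical launch: 10 m/s at 45 degrees.
flight_time = time_of_flight(10.0, 45.0)
landing_x, landing_y = projectile_position(10.0, 45.0, flight_time)
```

At the computed flight time the height returns to zero and the horizontal distance equals the familiar range v0^2 sin(2θ) / g.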

Portfolio Theory

Modern portfolio theory (MPT), or mean-variance analysis, is a mathematical framework for assembling a portfolio of assets such that the expected return is maximized for a given level of risk. It is a formalization and extension of diversification in investing, the idea that owning different kinds of financial assets is less risky than owning only one type. Its key insight is that an asset's risk and return should not be assessed by itself, but by how it contributes to a portfolio's overall risk and return. The variance of return (or its transformation, the standard deviation) is used as a measure of risk, because it is tractable when assets are combined into portfolios. Often, the historical variance and covariance of returns are used as a proxy for the forward-looking versions of these quantities, but other, more sophisticated methods are available. Economist Harry Markowitz introduced MPT in a 1952 paper, for which he was later awarded a Nobel Memorial ...

Weighted Least Squares

Weighted least squares (WLS), also known as weighted linear regression, is a generalization of ordinary least squares and linear regression in which knowledge of the unequal variance of observations (heteroscedasticity) is incorporated into the regression. WLS is also a specialization of generalized least squares, when all the off-diagonal entries of the covariance matrix of the errors are zero. Formulation: The fit of a model to a data point is measured by its residual, r_i, defined as the difference between a measured value of the dependent variable, y_i, and the value predicted by the model, f(x_i, \boldsymbol\beta): r_i(\boldsymbol\beta) = y_i - f(x_i, \boldsymbol\beta). If the errors are uncorrelated and have equal variance, then the function S(\boldsymbol\beta) = \sum_i r_i(\boldsymbol\beta)^2 is minimised at \hat{\boldsymbol\beta}, such that \frac{\partial S}{\partial \beta_j}(\hat{\boldsymbol\beta}) = 0. The Gauss–Markov theorem shows that, when this is so, \hat{\boldsymbol\beta} is a best linear unbiased es ...
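
As an illustration of how unequal variances enter the fit, here is a small Python sketch of weighted least squares for the straight-line model f(x, β) = β0 + β1 x. It assumes uncorrelated errors and uses weights 1 / variance, a common choice; the function name and data are hypothetical.

```python
def weighted_line_fit(xs, ys, variances):
    """Fit y = intercept + slope * x by minimising sum_i w_i * r_i**2 with w_i = 1 / variance_i."""
    if not (len(xs) == len(ys) == len(variances)) or len(xs) < 2:
        raise ValueError("need at least two points and equal-length inputs")
    ws = [1.0 / v for v in variances]
    sw = sum(ws)
    sx = sum(w * x for w, x in zip(ws, xs))
    sy = sum(w * y for w, y in zip(ws, ys))
    sxx = sum(w * x * x for w, x in zip(ws, xs))
    sxy = sum(w * x * y for w, x, y in zip(ws, xs, ys))
    delta = sw * sxx - sx * sx                      # determinant of the normal equations
    if delta == 0:
        raise ValueError("x values must not all be identical")
    slope = (sw * sxy - sx * sy) / delta
    intercept = (sxx * sy - sx * sxy) / delta
    return intercept, slope


# Hypothetical data lying exactly on y = 1 + 2x, with unequal variances.
intercept, slope = weighted_line_fit([0.0, 1.0, 2.0, 3.0],
                                     [1.0, 3.0, 5.0, 7.0],
                                     [0.1, 0.4, 0.2, 0.9])
```

Setting every variance to the same value makes the weights cancel, and the result coincides with ordinary least squares.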

Generalized Variance

The generalized variance is a scalar value which generalizes variance for multivariate random variables. It was introduced by Samuel S. Wilks. The generalized variance is defined as the determinant of the covariance matrix, \det(\Sigma). It can be shown to be related to the multidimensional scatter of points around their mean. Minimizing the generalized variance gives the Kalman filter gain (Eviatar Bach, "Proof that the Kalman gain minimizes the generalized variance", https://arxiv.org/abs/2103.07275).
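
The definition can be made concrete with a short Python sketch that estimates a covariance matrix from samples and takes its determinant. The unbiased (n - 1) estimator and the helper names are assumptions made for illustration.

```python
def covariance_matrix(samples):
    """Unbiased sample covariance matrix of a list of equal-length observations."""
    n = len(samples)
    d = len(samples[0])
    means = [sum(row[j] for row in samples) / n for j in range(d)]
    return [[sum((row[i] - means[i]) * (row[j] - means[j]) for row in samples) / (n - 1)
             for j in range(d)]
            for i in range(d)]


def determinant(matrix):
    """Determinant by Gaussian elimination with partial pivoting."""
    a = [row[:] for row in matrix]
    n = len(a)
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if a[pivot][col] == 0:
            return 0.0                               # singular matrix
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det                               # a row swap flips the sign
        det *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return det


def generalized_variance(samples):
    return determinant(covariance_matrix(samples))


# Hypothetical 2-D samples: the corners of a square (uncorrelated coordinates).
gv_square = generalized_variance([(0, 0), (2, 0), (0, 2), (2, 2)])
# Perfectly correlated coordinates: the scatter collapses onto a line.
gv_line = generalized_variance([(1, 2), (2, 4), (3, 6)])
```

The second example shows why the quantity measures multidimensional scatter: when the points lie on a line the covariance matrix is singular and the generalized variance is zero.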

Kalman Filter

In statistics and control theory, Kalman filtering (also known as linear quadratic estimation) is an algorithm that uses a series of measurements observed over time, including statistical noise and other inaccuracies, to produce estimates of unknown variables that tend to be more accurate than those based on a single measurement, by estimating a joint probability distribution over the variables for each time-step. The filter is constructed as a mean squared error minimiser, but an alternative derivation of the filter is also provided showing how the filter relates to maximum likelihood statistics. The filter is named after Rudolf E. Kálmán. Kalman filtering has numerous technological applications. A common application is for guidance, navigation, and control of vehicles, particularly aircraft, spacecraft and ships positioned dynamically. Furthermore, Kalman filtering is much applied in time series analysis tasks such as signal processing and econometrics. K ...

Bayesian Inference

Bayesian inference is a method of statistical inference in which Bayes' theorem is used to calculate a probability of a hypothesis, given prior evidence, and update it as more information becomes available. Fundamentally, Bayesian inference uses a prior distribution to estimate posterior probabilities. Bayesian inference is an important technique in statistics, and especially in mathematical statistics. Bayesian updating is particularly important in the dynamic analysis of a sequence of data. Bayesian inference has found application in a wide range of activities, including science, engineering, philosophy, medicine, sport, and law. In the philosophy of decision theory, Bayesian inference is closely related to subjective probability, often called "Bayesian probability". Introduction to Bayes' rule. Formal explanation: Bayesian inference derives the posterior probability as a consequence of two antecedents: a prior probability and a "likelihood function" derive ...
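
A single Bayesian update for a binary hypothesis can be sketched in Python as below. The posterior is the prior times the likelihood, normalised by the total probability of the evidence; the function name and the numbers in the example are hypothetical.

```python
def bayes_posterior(prior, likelihood_if_true, likelihood_if_false):
    """P(H | E) from P(H), P(E | H) and P(E | not H) via Bayes' theorem."""
    evidence = prior * likelihood_if_true + (1.0 - prior) * likelihood_if_false
    if evidence == 0:
        raise ValueError("the evidence has zero probability under both hypotheses")
    return prior * likelihood_if_true / evidence


# Hypothetical numbers: 1% prior, 90% true-positive rate, 5% false-positive rate.
posterior = bayes_posterior(0.01, 0.90, 0.05)

# Updating again on a second, independent positive result uses the
# previous posterior as the new prior.
posterior_after_two = bayes_posterior(posterior, 0.90, 0.05)
```

Feeding each posterior back in as the next prior is one way to picture the sequential updating the text refers to.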

Normal Distribution

In probability theory and statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} e^{-\frac{(x-\mu)^2}{2\sigma^2}}. The parameter \mu is the mean or expectation of the distribution (and also its median and mode), while the parameter \sigma^2 is the variance. The standard deviation of the distribution is \sigma (sigma). A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate. Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known. Their importance is partly due to the central limit theorem. It states that, under some conditions, the average of many samples (observations) of a random variable with finite mean and variance is itself a random variable, whose distribution c ...
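
The density of a normal distribution with mean μ and standard deviation σ, f(x) = exp(-(x - μ)^2 / (2σ^2)) / sqrt(2πσ^2), can be evaluated directly in Python. The function name and default parameters below are illustrative choices.

```python
import math


def normal_pdf(x, mu=0.0, sigma=1.0):
    """Probability density of a normal distribution with mean mu and standard deviation sigma."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


# Density at the mean of the standard normal distribution.
peak = normal_pdf(0.0)

# Crude Riemann-sum check that the density integrates to roughly 1 over [-8, 8].
step = 0.001
area = sum(normal_pdf(-8.0 + k * step) * step for k in range(16001))
```

The peak value equals 1 / sqrt(2π), and the density is symmetric about the mean.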

Second Partial Derivative Test

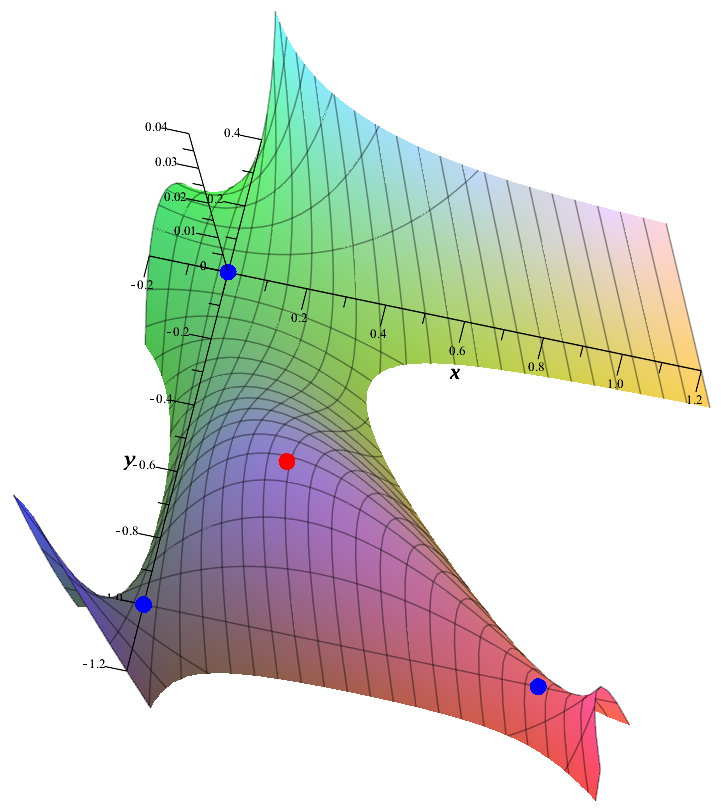

In mathematics, the second partial derivative test is a method in multivariable calculus used to determine if a critical point of a function is a local minimum, maximum or saddle point. Functions of two variables: Suppose that f(x, y) is a differentiable real function of two variables whose second partial derivatives exist and are continuous. The Hessian matrix H of f is the 2 × 2 matrix of partial derivatives of f: H(x,y) = \begin{pmatrix} f_{xx}(x,y) & f_{xy}(x,y) \\ f_{yx}(x,y) & f_{yy}(x,y) \end{pmatrix}. Define D(x,y) to be the determinant D(x,y) = \det(H(x,y)) = f_{xx}(x,y) f_{yy}(x,y) - \left( f_{xy}(x,y) \right)^2 of H. Finally, suppose that (a, b) is a critical point of f, that is, that f_x(a,b) = f_y(a,b) = 0. Then the second partial derivative test asserts the following: (1) If D(a,b) > 0 and f_{xx}(a,b) > 0 then (a,b) is a local minimum of f. (2) If D(a,b) > 0 and f_{xx}(a,b) < 0 then (a,b) is a local maximum of f. (3) If D(a,b) < 0 then (a,b) is a saddle point of f. (4) If D(a,b) = 0 then the point (a,b) could be any of a minimum, maximum, or saddle point (that is, the test is inconclusive). S ...
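
The four cases of the test translate directly into a small Python classifier. It takes the second partial derivatives already evaluated at a critical point and inspects the Hessian determinant D = f_xx f_yy - f_xy^2; the function name and return strings are illustrative.

```python
def classify_critical_point(fxx, fyy, fxy):
    """Classify a critical point of f(x, y) from its second partial derivatives there."""
    d = fxx * fyy - fxy * fxy                        # determinant of the Hessian
    if d > 0 and fxx > 0:
        return "local minimum"
    if d > 0 and fxx < 0:
        return "local maximum"
    if d < 0:
        return "saddle point"
    return "inconclusive"                            # d == 0


# Second derivatives at the origin for a few simple functions.
bowl = classify_critical_point(2.0, 2.0, 0.0)        # f = x^2 + y^2
dome = classify_critical_point(-2.0, -2.0, 0.0)      # f = -x^2 - y^2
saddle = classify_critical_point(2.0, -2.0, 0.0)     # f = x^2 - y^2
flat = classify_critical_point(0.0, 0.0, 0.0)        # f = x^4 + y^4
```

The last example has a genuine minimum at the origin even though the test reports "inconclusive", which illustrates why the D = 0 case needs other methods.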

Lagrange Multiplier

In mathematical optimization, the method of Lagrange multipliers is a strategy for finding the local maxima and minima of a function subject to equation constraints (i.e., subject to the condition that one or more equations have to be satisfied exactly by the chosen values of the variables). It is named after the mathematician Joseph-Louis Lagrange. Summary and rationale: The basic idea is to convert a constrained problem into a form such that the derivative test of an unconstrained problem can still be applied. The relationship between the gradient of the function and gradients of the constraints rather naturally leads to a reformulation of the original problem, known as the Lagrangian function or Lagrangian. In the general case, the Lagrangian is defined as \mathcal{L}(x, \lambda) \equiv f(x) + \langle \lambda, g(x)\rangle for functions f, g; the notation \langle \cdot, \cdot \rangle denotes an inner prod ...

Gradient

In vector calculus, the gradient of a scalar-valued differentiable function f of several variables is the vector field (or vector-valued function) \nabla f whose value at a point p gives the direction and the rate of fastest increase. The gradient transforms like a vector under change of basis of the space of variables of f. If the gradient of a function is non-zero at a point p, the direction of the gradient is the direction in which the function increases most quickly from p, and the magnitude of the gradient is the rate of increase in that direction, the greatest absolute directional derivative. Further, a point where the gradient is the zero vector is known as a stationary point. The gradient thus plays a fundamental role in optimization theory, where it is used to minimize a function by gradient descent. In coordinate-free terms, the gradient of a function f(\mathbf{r}) may be defined by: df = \nabla f \cdot d\mathbf{r} where df is the total infinitesimal change in f for a ...
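
The relation between a small change in f and the gradient can be checked numerically. The Python sketch below approximates the gradient with central differences, which is one of several possible schemes; the function names, step size, and test function are assumptions for illustration.

```python
def numerical_gradient(f, point, h=1e-6):
    """Approximate the gradient of f at point using central differences."""
    grad = []
    for i in range(len(point)):
        forward = list(point)
        backward = list(point)
        forward[i] += h
        backward[i] -= h
        grad.append((f(forward) - f(backward)) / (2.0 * h))
    return grad


def example_function(p):
    """Hypothetical test function f(x, y) = x^2 + 3y, whose gradient is (2x, 3)."""
    x, y = p
    return x * x + 3.0 * y


point = [1.0, 2.0]
grad = numerical_gradient(example_function, point)

# First-order check: the change in f for a small step dr is close to grad . dr.
dr = [1e-4, -2e-4]
shifted = [point[0] + dr[0], point[1] + dr[1]]
actual_change = example_function(shifted) - example_function(point)
predicted_change = sum(g * d for g, d in zip(grad, dr))
```

For this function the gradient at (1, 2) is (2, 3), and the predicted change agrees with the actual change up to a second-order term in the step size.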