
Inception (Deep Learning Architecture)

Inception is a family of convolutional neural networks (CNNs) for computer vision, introduced by researchers at Google in 2014 as GoogLeNet (later renamed Inception v1). The series was historically important as an early CNN that separates the stem (data ingest), body (data processing), and head (prediction), an architectural design that persists in all modern CNNs.

Version history (Inception v1): In 2014, a team at Google developed the GoogLeNet architecture, an instance of which won the ImageNet Large Scale Visual Recognition Challenge 2014 (ILSVRC14). The name came from the LeNet of 1998, since both LeNet and GoogLeNet are CNNs. They also called it "Inception" after a "we need to go deeper" internet meme, a phrase from the film ''Inception'' (2010). Because more versions were released later, the original Inception architecture was renamed again as "Inception v1". The models and the code were released under Apache ...

Google AI

Google AI is a division of Google dedicated to artificial intelligence. It was announced at Google I/O 2017 by CEO Sundar Pichai. The division has expanded its reach with research facilities in various parts of the world, such as Zurich, Paris, Israel, and Beijing. In 2023, Google AI was part of the reorganization initiative that elevated its head, Jeff Dean, to the position of chief scientist at Google. This reorganization involved the merging of Google Brain and DeepMind, a UK-based company that Google acquired in 2014 and that had operated separately from the company's core research. In March 2019, Google announced the creation of an Advanced Technology External Advisory Council (ATEAC) comprising eight members: Alessandro Acquisti, Bubacarr Bah, De Kai, Dyan Gibbens, Joanna Bryson, Kay Coles James, Luciano Floridi and William Joseph Burns. Following objections from a large number of Google staff to the appointment of Kay Coles James, the Council was abandoned within one ...

Inception Dimension-reduced Module

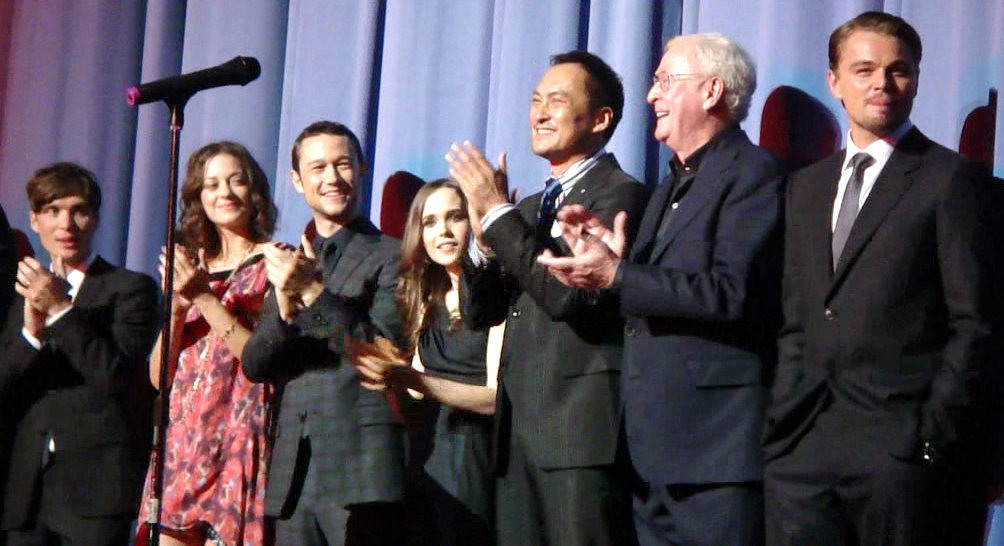

''Inception'' is a 2010 science fiction action heist film written and directed by Christopher Nolan, who also produced it with his wife, Emma Thomas. The film stars Leonardo DiCaprio as a professional thief who steals information by infiltrating the subconscious of his targets. He is offered a chance to have his criminal history erased as payment for implanting another person's idea into a target's subconscious. The ensemble cast includes Ken Watanabe, Joseph Gordon-Levitt, Marion Cotillard, Elliot Page, Tom Hardy, Cillian Murphy, Tom Berenger, Dileep Rao, and Michael Caine. After the 2002 completion of ''Insomnia'', Nolan presented to Warner Bros. a written 80-page treatment for a horror film envisioning "dream stealers," based on lucid dreaming. Deciding he needed more experience before tackling a production of this magnitude and complexity, Nolan shelved the project and instead worked on 2005's ''Batman Begins'', 2006's ''The Prestige'', and 2008's ''The Dark ...

Artificial Neural Networks

In machine learning, a neural network (also artificial neural network or neural net, abbreviated ANN or NN) is a computational model inspired by the structure and functions of biological neural networks. A neural network consists of connected units or nodes called ''artificial neurons'', which loosely model the neurons in the brain. Artificial neuron models that mimic biological neurons more closely have also been recently investigated and shown to significantly improve performance. The neurons are connected by ''edges'', which model the synapses in the brain. Each artificial neuron receives signals from connected neurons, then processes them and sends a signal to other connected neurons. The "signal" is a real number, and the output of each neuron is computed by applying a non-linear function, called the ''activation function'', to the sum of its inputs. The strength of the signal at each connection is determined by a ''weight'', which is adjusted during the learning process. Typically, neur ...
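The computation performed by a single artificial neuron, as described above, can be sketched in a few lines. This is only an illustration: the function name `neuron_output`, the optional `bias` term, and the choice of `tanh` as the activation function are assumptions, not details taken from the entry.

```python
import math


def neuron_output(inputs, weights, bias=0.0, activation=math.tanh):
    """Apply a non-linear activation to the weighted sum of the inputs.

    `bias` and the default `tanh` activation are illustrative assumptions.
    """
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(weighted_sum)


# Three incoming signals (real numbers) and one weight per connection.
signals = [0.5, -1.0, 2.0]
weights = [0.8, 0.2, -0.5]
print(neuron_output(signals, weights))
```

Learning would adjust `weights` (and `bias`) so that the outputs move toward the desired targets; that step is not shown here.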

Depthwise Separable Convolution

In artificial neural networks, a convolutional layer is a type of network layer that applies a convolution operation to the input. Convolutional layers are some of the primary building blocks of convolutional neural networks (CNNs), a class of neural network most commonly applied to images, video, audio, and other data that have the property of uniform translational symmetry. The convolution operation in a convolutional layer involves sliding a small window (called a kernel or filter) across the input data and computing the dot product between the values in the kernel and the input at each position. This process creates a feature map that represents detected features in the input.

Concepts (Kernel): Kernels, also known as filters, are small matrices of weights that are learned during the training process. Each kernel is responsible for detecting a specific feature in the input data. The size of the kernel is a hyperparameter that affects the network's behavior.

Convolution ...
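The sliding-window operation described above can be sketched for a single-channel 2D input as follows. The function name `conv2d_valid`, the absence of padding, and the stride of 1 are assumptions made for brevity; as in most deep learning libraries, the kernel is not flipped, so this is strictly a cross-correlation.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` and take a dot product at each position.

    Assumes a single channel, stride 1, and no padding ("valid" mode).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        feature_map.append(row)
    return feature_map


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[1, 0],
          [0, -1]]  # responds to differences along the main diagonal
print(conv2d_valid(image, kernel))  # [[-4.0, -4.0], [-4.0, -4.0]]
```

In a trained network the kernel values would be learned rather than written by hand.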

Regularization (Machine Learning)

In mathematics, statistics, finance, and computer science, particularly in machine learning and inverse problems, regularization is a process that replaces the answer to a problem with a simpler one. It is often used in solving ill-posed problems or to prevent overfitting. Although regularization procedures can be divided in many ways, the following delineation is particularly helpful:

* Explicit regularization is any regularization in which a term is explicitly added to the optimization problem. These terms could be priors, penalties, or constraints. Explicit regularization is commonly employed with ill-posed optimization problems. The regularization term, or penalty, imposes a cost on the optimization function to make the optimal solution unique.
* Implicit regularization is all other forms of regularization. This includes, for example, early stopping, using a robust loss function, and discarding outliers. Implicit regularization is essentially ubiquitous in modern machine learnin ...
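As a hedged illustration of explicit regularization, the sketch below adds a penalty term to a mean-squared-error loss for a linear model. The squared-norm (L2) penalty, the names `mse` and `l2_regularized_loss`, and the strength parameter `lam` are assumptions chosen for the example; the entry itself does not prescribe a particular penalty.

```python
def mse(weights, xs, ys):
    """Mean squared error of a linear model with the given weights."""
    preds = [sum(w * x for w, x in zip(weights, row)) for row in xs]
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)


def l2_regularized_loss(weights, xs, ys, lam=0.1):
    """Data loss plus an explicit penalty on the size of the weights."""
    penalty = lam * sum(w * w for w in weights)
    return mse(weights, xs, ys) + penalty


xs = [[1.0, 0.0], [0.0, 1.0]]
ys = [1.0, 2.0]
weights = [1.0, 2.0]  # fits the data exactly, so the data loss is zero
print(mse(weights, xs, ys))                  # 0.0
print(l2_regularized_loss(weights, xs, ys))  # 0.5, all of it from the penalty
```

Larger values of `lam` push the optimizer toward smaller weights at the expense of fitting the training data less closely.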

Pooling Layer

In neural networks, a pooling layer is a kind of network layer that downsamples and aggregates information that is dispersed among many vectors into fewer vectors. It has several uses. It removes redundant information, reducing the amount of computation and memory required, makes the model more robust to small variations in the input, and increases the receptive field of neurons in later layers in the network.

Convolutional neural network pooling: Pooling is most commonly used in convolutional neural networks (CNNs). Below is a description of pooling in 2-dimensional CNNs. The generalization to n dimensions is immediate. As notation, we consider a tensor $x \in \mathbb{R}^{H \times W \times C}$, where $H$ is height, $W$ is width, and $C$ is the number of channels. A pooling layer outputs a tensor $y \in \mathbb{R}^{H' \times W' \times C'}$. We define two variables $f$ and $s$, called "filter size" (aka "kernel size") and "stride". Sometimes, it is necessary to use a different filter size and stride for horizontal and vertical directions. In such cases, we ...
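A minimal sketch of pooling with filter size `f` and stride `s` is given below for one channel. The choice of max pooling, the function name `max_pool2d`, and the output-size rule `(H - f) // s + 1` (no padding) are assumptions for the example; a multi-channel tensor would be handled by applying the same function to each channel.

```python
def max_pool2d(x, f=2, s=2):
    """Max-pool a single-channel H x W grid with filter size f and stride s.

    Assumes no padding, so windows that would run past the edge are dropped.
    """
    h, w = len(x), len(x[0])
    out_h = (h - f) // s + 1
    out_w = (w - f) // s + 1
    return [
        [
            max(x[i * s + di][j * s + dj] for di in range(f) for dj in range(f))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


x = [[1, 2, 3, 4],
     [5, 6, 7, 8],
     [9, 10, 11, 12],
     [13, 14, 15, 16]]
print(max_pool2d(x, f=2, s=2))  # [[6, 8], [14, 16]]
```

With `f=2` and `s=2` the 4 x 4 input is reduced to 2 x 2, which is the downsampling effect described above.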

Local Response Normalization

In machine learning, normalization is a statistical technique with various applications. There are two main forms of normalization, namely ''data normalization'' and ''activation normalization''. Data normalization (or feature scaling) includes methods that rescale input data so that the features have the same range, mean, variance, or other statistical properties. For instance, a popular choice of feature scaling method is min-max normalization, where each feature is transformed to have the same range (typically $[0, 1]$ or $[-1, 1]$). This solves the problem of different features having vastly different scales, for example if one feature is measured in kilometers and another in nanometers. Activation normalization, on the other hand, is specific to deep learning, and includes methods that rescale the activation of hidden neurons inside neural networks. Normalization is often used to:

* increase the speed of training convergence,
* reduce sensitivity to variations and featu ...
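Min-max normalization, mentioned above as a common feature scaling method, can be sketched as follows. The function name `min_max_normalize`, the `lo`/`hi` parameters, and the handling of a constant feature (mapped to `lo`) are assumptions made for this example.

```python
def min_max_normalize(values, lo=0.0, hi=1.0):
    """Linearly rescale one feature so its values span [lo, hi]."""
    v_min, v_max = min(values), max(values)
    if v_max == v_min:
        # Assumption: a constant feature carries no range, so map it to lo.
        return [lo for _ in values]
    scale = (hi - lo) / (v_max - v_min)
    return [lo + (v - v_min) * scale for v in values]


distances_km = [2.0, 4.0, 6.0]
print(min_max_normalize(distances_km))             # [0.0, 0.5, 1.0]
print(min_max_normalize(distances_km, -1.0, 1.0))  # [-1.0, 0.0, 1.0]
```

Applying the same function to each feature separately puts features measured in very different units onto a common scale.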

Batch Normalization

Batch normalization (also known as batch norm) is a normalization technique used to make training of artificial neural networks faster and more stable by adjusting the inputs to each layer: re-centering them around zero and re-scaling them to a standard size. It was introduced by Sergey Ioffe and Christian Szegedy in 2015. Experts still debate why batch normalization works so well. It was initially thought to tackle ''internal covariate shift'', a problem where parameter initialization and changes in the distribution of the inputs of each layer affect the learning rate of the network. However, newer research suggests it doesn’t fix this shift but instead smooths the objective function (a mathematical guide the network follows to improve), enhancing performance. In very deep networks, batch normalization can initially cause a severe gradient explosion, where updates to the network grow uncontrollably large, but this is managed with shortcuts called skip connections in resi ...
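The re-centering and re-scaling step can be sketched as a training-time forward pass over a small batch. This is a simplified illustration: the function name `batch_norm` is hypothetical, the scale `gamma`, shift `beta`, and stabilizer `eps` are parameters not mentioned in the entry, and the running statistics used at inference time are omitted.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize each feature (column) across the batch (rows).

    gamma, beta, and eps are illustrative assumptions; in practice gamma
    and beta are learned per feature.
    """
    n = len(batch)
    n_features = len(batch[0])
    out = [[0.0] * n_features for _ in range(n)]
    for j in range(n_features):
        column = [row[j] for row in batch]
        mean = sum(column) / n
        var = sum((v - mean) ** 2 for v in column) / n
        inv_std = 1.0 / math.sqrt(var + eps)
        for i in range(n):
            out[i][j] = gamma * (batch[i][j] - mean) * inv_std + beta
    return out


batch = [[1.0, 10.0],
         [3.0, 30.0]]
for row in batch_norm(batch):
    print(row)  # each column now has mean close to 0 and variance close to 1
```

Although the two input features differ in scale by a factor of ten, both columns come out with nearly identical normalized values.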

Residual Neural Network

A residual neural network (also referred to as a residual network or ResNet) is a deep learning architecture in which the layers learn residual functions with reference to the layer inputs. It was developed in 2015 for image recognition, and won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) of that year. As a point of terminology, "residual connection" refers to the specific architectural motif of $x \mapsto f(x) + x$, where $f$ is an arbitrary neural network module. The motif had been used previously (see §History for details). However, the publication of ResNet made it widely popular for feedforward networks, appearing in neural networks that are seemingly unrelated to ResNet. The residual connection stabilizes the training and convergence of deep neural networks with hundreds of layers, and is a common motif in deep neural networks, such as transformer models (e.g., BERT (language ...
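The residual connection motif, in which a module's output is added back to its input, can be sketched as a small wrapper. The names `residual` and `scale_half` are hypothetical, and plain Python lists stand in for tensors; the only requirement is that the wrapped module returns an output of the same length as its input.

```python
def residual(f):
    """Wrap a module f so that the block computes f(x) + x."""
    def block(x):
        fx = f(x)
        if len(fx) != len(x):
            raise ValueError("f must preserve the input size")
        return [a + b for a, b in zip(fx, x)]
    return block


def scale_half(x):
    """Stand-in for an arbitrary neural network module."""
    return [0.5 * v for v in x]


block = residual(scale_half)
print(block([2.0, -4.0]))  # [3.0, -6.0]

# If the wrapped module outputs zeros, the block reduces to the identity.
identity_like = residual(lambda x: [0.0 for _ in x])
print(identity_like([2.0, -4.0]))  # [2.0, -4.0]
```

The second example shows why the module is said to learn a residual: it only has to model the difference from the identity mapping.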

Vanishing Gradient Problem

In machine learning, the vanishing gradient problem is the problem of greatly diverging gradient magnitudes between earlier and later layers encountered when training neural networks with backpropagation. In such methods, each neural network weight is updated in proportion to the partial derivative of the loss function with respect to that weight. As the number of forward propagation steps in a network increases, for instance due to greater network depth, the gradients of earlier weights are calculated with increasingly many multiplications. These multiplications shrink the gradient magnitude. Consequently, the gradients of earlier weights will be exponentially smaller than the gradients of later weights. This difference in gradient magnitude might introduce instability in the training process, slow it, or halt it entirely. For instance, consider the hyperbolic tangent activation function. The gradients of this function are in the range $(0, 1]$. The product of repeated multiplication with such gradients decreases exponent ...
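The shrinking effect of repeated multiplication can be illustrated numerically with the hyperbolic tangent. The sketch below multiplies only the activation derivatives along a chain of layers; ignoring the weight factors and fixing every pre-activation at 1.0 are simplifying assumptions, and the function names are hypothetical.

```python
import math


def tanh_grad(z):
    """Derivative of tanh at z, which equals 1 - tanh(z)^2."""
    return 1.0 - math.tanh(z) ** 2


def chained_gradient(pre_activations):
    """Product of tanh derivatives along a chain of layers.

    Assumption: weight factors are ignored to isolate the activation effect.
    """
    product = 1.0
    for z in pre_activations:
        product *= tanh_grad(z)
    return product


for depth in (1, 5, 10, 20):
    print(depth, chained_gradient([1.0] * depth))
```

Each additional layer multiplies the gradient by a factor of about 0.42 in this setup, so the value reaching the earliest layers falls off exponentially with depth.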

Convolutional Neural Network

A convolutional neural network (CNN) is a type of feedforward neural network that learns features via filter (or kernel) optimization. This type of deep learning network has been applied to process and make predictions from many different types of data including text, images and audio. Convolution-based networks are the de facto standard in deep learning-based approaches to computer vision and image processing, and have only recently been replaced, in some cases, by newer deep learning architectures such as the transformer. Vanishing gradients and exploding gradients, seen during backpropagation in earlier neural networks, are prevented by the regularization that comes from using shared weights over fewer connections. For example, for ''each'' neuron in the fully connected layer, 10,000 weights would be required for processing an image sized 100 × 100 pixels. However, applying cascaded ''convolution'' (or cross-correlation) kernels, only 25 weights for each convolutio ...
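The weight counts quoted above can be checked with simple arithmetic. In this sketch the figure of 25 weights is assumed to correspond to a 5 × 5 kernel on a single-channel image, which the truncated text does not state explicitly; bias terms are left out, and the function names are hypothetical.

```python
def dense_weights_per_neuron(height, width, channels=1):
    """Weights needed by one fully connected neuron that sees every pixel."""
    return height * width * channels


def conv_kernel_weights(kernel_h, kernel_w, in_channels=1):
    """Weights in one convolution kernel, shared across all positions."""
    return kernel_h * kernel_w * in_channels


print(dense_weights_per_neuron(100, 100))  # 10000
print(conv_kernel_weights(5, 5))           # 25 (assumes a 5 x 5 kernel)
```

Because the kernel's weights are reused at every position in the image, the count does not grow with the image size, unlike the fully connected case.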