
Generative Phonology

Generative grammar, or generativism, is a linguistic theory that regards linguistics as the study of a hypothesised innate grammatical structure. It is a biological or biologistic modification of earlier structuralist theories of linguistics, deriving ultimately from glossematics. Generative grammar considers grammar a system of rules that generates exactly those combinations of words that form grammatical sentences in a given language. These explicit rules may apply repeatedly, so they can generate an unbounded number of sentences, each of which can be as long as one wants. The difference from structural and functional models is that, in generative grammar, the object is base-generated within the verb phrase. This purportedly cognitive structure is thought of as part of a universal grammar, a syntactic structure thought to have been caused by a genetic mutation in humans. Generativists have created numerous theories to make the NP VP (NP) analysis work in natural language ...
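The idea of a finite set of explicit rules that, applied repeatedly, generates an unbounded set of sentences can be sketched in Python. The grammar below is a hypothetical toy (the nonterminals and the word list are assumptions, not taken from any particular generative analysis). The recursive rule VP → thinks that S is what lets sentences grow without bound, so generation is cut off at a depth limit.

```python
import itertools

# Hypothetical toy grammar: each nonterminal maps to its alternative right-hand sides.
# The rule VP -> "thinks" "that" S re-introduces S, so the language is infinite.
GRAMMAR = {
    "S": [["NP", "VP"]],
    "NP": [["John"], ["Mary"]],
    "VP": [["runs"], ["sees", "NP"], ["thinks", "that", "S"]],
}

def expand(symbol, depth):
    """Yield every word sequence derivable from `symbol` using at most `depth` rule applications."""
    if symbol not in GRAMMAR:      # terminal symbol: a word
        yield [symbol]
        return
    if depth == 0:                 # out of budget: this branch derives nothing
        return
    for rhs in GRAMMAR[symbol]:
        # Expand each right-hand-side symbol, then combine every choice of expansions.
        parts = [list(expand(s, depth - 1)) for s in rhs]
        for combo in itertools.product(*parts):
            yield [word for part in combo for word in part]

def generate(max_depth):
    """Return, sorted, all sentences derivable from S within `max_depth`."""
    return sorted(" ".join(words) for words in expand("S", max_depth))
```

Raising `max_depth` admits deeper embeddings: *John thinks that Mary runs* is generated at depth 5 but not at depth 3, while the rule set itself stays finite.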

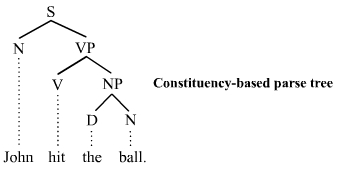

Parse Tree 1

Parsing, syntax analysis, or syntactic analysis is the process of analyzing a string of symbols, either in natural language, computer languages or data structures, conforming to the rules of a formal grammar. The term *parsing* comes from Latin *pars* (*orationis*), meaning part (of speech). The term has slightly different meanings in different branches of linguistics and computer science. Traditional sentence parsing is often performed as a method of understanding the exact meaning of a sentence or word, sometimes with the aid of devices such as sentence diagrams. It usually emphasizes the importance of grammatical divisions such as subject and predicate. Within computational linguistics the term is used to refer to the formal analysis by a computer of a sentence or other string of words into its constituents, resulting in a parse tree showing their syntactic relation to each other, which may also contain semantic and other information (p-values). Some parsing algorithms ...
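A minimal sketch of parsing in the computational sense: a backtracking recursive-descent parser that checks a word string against a toy context-free grammar and returns a parse tree as nested tuples. The grammar and function names are hypothetical; recursive descent is only one parsing strategy and, as written, it only works for grammars without left recursion.

```python
# Hypothetical toy grammar (no left recursion): nonterminal -> alternatives.
GRAMMAR = {
    "S": [["NP", "VP"]],
    "NP": [["Det", "N"], ["John"]],
    "VP": [["V", "NP"], ["V"]],
    "Det": [["a"], ["the"]],
    "N": [["car"], ["dog"]],
    "V": [["drives"], ["runs"]],
}

def parse(symbol, words, i):
    """Yield (tree, next_index) for every way `symbol` can cover words starting at i."""
    if symbol not in GRAMMAR:                  # terminal: must match the next word
        if i < len(words) and words[i] == symbol:
            yield symbol, i + 1
        return
    for rhs in GRAMMAR[symbol]:                # try each alternative, backtracking on failure
        yield from _parse_seq(symbol, rhs, words, i, [])

def _parse_seq(label, rhs, words, i, children):
    """Match the symbols of `rhs` left to right, collecting child subtrees."""
    if not rhs:
        yield (label, *children), i
        return
    for child, j in parse(rhs[0], words, i):
        yield from _parse_seq(label, rhs[1:], words, j, children + [child])

def parse_sentence(text):
    """Return the first parse tree covering the whole string, or None."""
    words = text.split()
    for tree, end in parse("S", words, 0):
        if end == len(words):                  # reject parses that leave words over
            return tree
    return None
```

For example, `parse_sentence("John drives a car")` returns `("S", ("NP", "John"), ("VP", ("V", "drives"), ("NP", ("Det", "a"), ("N", "car"))))`, making the subject/predicate division explicit.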

Subject (grammar)

The subject in a simple English sentence such as *John runs*, *John is a teacher*, or *John drives a car* is the person or thing about whom the statement is made, in this case *John*. Traditionally the subject is the word or phrase which controls the verb in the clause, that is to say with which the verb agrees (*John is* but *John and Mary are*). If there is no verb, as in *John what an idiot!*, or if the verb has a different subject, as in *John I can't stand him!*, then *John* is not considered to be the grammatical subject, but can be described as the *topic* of the sentence. While these definitions apply to simple English sentences, defining the subject is more difficult in more complex sentences and in languages other than English. For example, in the sentence *It is difficult to learn French*, the subject seems to be the word *it*, and yet arguably the real subject (the thing that is difficult) is *to learn French*. A sentence such as *It was ...*

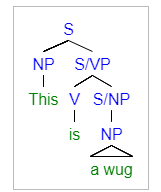

Tree-adjoining Grammar

Tree-adjoining grammar (TAG) is a grammar formalism defined by Aravind Joshi. Tree-adjoining grammars are somewhat similar to context-free grammars, but the elementary unit of rewriting is the tree rather than the symbol. Whereas context-free grammars have rules for rewriting symbols as strings of other symbols, tree-adjoining grammars have rules for rewriting the nodes of trees as other trees (see tree (graph theory) and tree (data structure)). TAG originated in investigations by Joshi and his students into the family of adjunction grammars (AG), the "string grammar" of Zellig Harris. AGs handle exocentric properties of language in a natural and effective way, but do not have a good characterization of endocentric constructions; the converse is true of rewrite grammars, or phrase-structure grammars (PSG). In 1969, Joshi introduced a family of grammars that exploits this complementarity by mixing the two types of rules. A few very simple rewrite rules suffice to gen ...
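Rewriting a tree node as another tree (adjunction) can be sketched as follows, assuming trees are encoded as hypothetical `(label, children)` pairs and that an auxiliary tree marks its foot node with its root label plus `*`. For simplicity the sketch adjoins at the first matching node in pre-order instead of at an explicitly chosen node address, and it ignores adjoining constraints.

```python
# Trees are (label, [children]); a word is a leaf (word, []).
# An auxiliary tree has root label X and one foot node labelled "X*".

def adjoin(tree, aux, target):
    """Adjoin `aux` at the first node (pre-order) labelled `target`.

    The subtree rooted there is cut out, `aux` takes its place, and the
    excised subtree is re-attached at the foot node of `aux`.
    Returns (new_tree, done)."""
    label, children = tree
    if label == target:
        return _plug_foot(aux, target + "*", tree), True
    new_children, done = [], False
    for child in children:
        if not done:                         # adjoin only once
            child, done = adjoin(child, aux, target)
        new_children.append(child)
    return (label, new_children), done

def _plug_foot(aux, foot, subtree):
    """Copy `aux`, replacing its foot node with `subtree`."""
    label, children = aux
    if label == foot:
        return subtree
    return (label, [_plug_foot(c, foot, subtree) for c in children])

def leaves(tree):
    """Read the word string off a tree."""
    label, children = tree
    return [label] if not children else [w for c in children for w in leaves(c)]
```

Adjoining the auxiliary tree `("VP", [("VP*", []), ("Adv", [("quickly", [])])])` at the VP node of an initial tree for *John runs* yields a tree whose leaves read *John runs quickly*; the original VP subtree survives intact beneath the foot node.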

Categorial Grammar

Categorial grammar is a family of formalisms in natural language syntax that share the central assumption that syntactic constituents combine as functions and arguments. Categorial grammar posits a close relationship between syntax and semantic composition, since it typically treats syntactic categories as corresponding to semantic types. Categorial grammars were developed in the 1930s by Kazimierz Ajdukiewicz and in the 1950s by Yehoshua Bar-Hillel and Joachim Lambek. Categorial grammar saw a surge of interest in the 1970s following the work of Richard Montague, whose Montague grammar assumed a similar view of syntax. It continues to be a major paradigm, particularly within formal semantics. A categorial grammar consists of two parts: a lexicon, which assigns a set of types (also called categories) to each basic symbol, and some type inference rules, which determine how the type of a string of symbols follows from the types of the constituent symbols. It has the advantage that the ...
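The two parts named above, a lexicon and type inference rules, can be sketched with forward and backward function application over a small hypothetical lexicon. The sketch assumes the result-first slash notation (X/Y seeks a Y on its right, X\Y seeks a Y on its left; authors differ on how the backward slash is written) and uses a CKY-style chart to check whether a word string reduces to S.

```python
# Atomic types are strings; a functor type is a tuple (result, slash, argument).
# Result-first convention (an assumption):
#   (X, "/", Y)  + Y on its right -> X   (forward application)
#   Y + (X, "\\", Y) on its right -> X   (backward application)
NP, S, N = "NP", "S", "N"
IV = (S, "\\", NP)                 # intransitive verb: S\NP
LEXICON = {                        # hypothetical lexicon: word -> set of types
    "John": {NP},
    "Mary": {NP},
    "runs": {IV},
    "sees": {(IV, "/", NP)},       # transitive verb: (S\NP)/NP
    "the": {(NP, "/", N)},
    "dog": {N},
}

def combine(left, right):
    """Return every type derivable from two adjacent types by application."""
    out = set()
    if isinstance(left, tuple) and left[1] == "/" and left[2] == right:
        out.add(left[0])           # X/Y  Y  =>  X
    if isinstance(right, tuple) and right[1] == "\\" and right[2] == left:
        out.add(right[0])          # Y  X\Y  =>  X
    return out

def derives(words, goal=S):
    """CKY-style chart: chart[(i, j)] holds every type assigned to words[i:j]."""
    n = len(words)
    chart = {(i, i + 1): set(LEXICON.get(w, set())) for i, w in enumerate(words)}
    for width in range(2, n + 1):
        for i in range(n - width + 1):
            j = i + width
            cell = set()
            for k in range(i + 1, j):          # every split point
                for a in chart[(i, k)]:
                    for b in chart[(k, j)]:
                        cell |= combine(a, b)
            chart[(i, j)] = cell
    return n > 0 and goal in chart[(0, n)]
```

Because each type is a function awaiting its argument, the derivation of *John sees the dog* mirrors a semantic composition: *the dog* yields NP, *sees* applied to it yields S\NP, and that applied to *John* yields S.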

Head-driven Phrase Structure Grammar

Head-driven phrase structure grammar (HPSG) is a highly lexicalized, constraint-based grammar developed by Carl Pollard and Ivan Sag. It is a type of phrase structure grammar, as opposed to a dependency grammar, and it is the immediate successor to generalized phrase structure grammar. HPSG draws from other fields such as computer science (data type theory and knowledge representation) and uses Ferdinand de Saussure's notion of the sign. It uses a uniform formalism and is organized in a modular way, which makes it attractive for natural language processing. An HPSG grammar includes principles, grammar rules, and lexicon entries, the last of which are normally not considered to belong to a grammar. The formalism is based on lexicalism: the lexicon is more than just a list of entries; it is itself richly structured. Individual entries are marked with types, and types form a hierarchy. Early versions of the grammar were very lexicalized, with few grammatical rules (schemata). Mo ...
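Two ideas from this passage, typed entries arranged in a hierarchy and constraint-based combination, can be sketched with feature-structure unification. This is a simplification under stated assumptions: the type hierarchy below is hypothetical and tree-shaped (so two types are compatible only if one subsumes the other), feature structures are plain dicts with a `TYPE` entry, and structure sharing (reentrancy), which real HPSG relies on, is omitted.

```python
# Hypothetical type hierarchy, encoded child -> parent (tree-shaped).
PARENT = {
    "sign": None,
    "word": "sign",
    "phrase": "sign",
    "verb-lxm": "word",
    "noun-lxm": "word",
}

def ancestors(t):
    """Yield t and every supertype of t up to the root."""
    while t is not None:
        yield t
        t = PARENT[t]

def unify_types(a, b):
    """In a tree-shaped hierarchy two types unify iff one subsumes the other;
    return the more specific type, or None if they are incompatible."""
    if a in ancestors(b):
        return b
    if b in ancestors(a):
        return a
    return None

def unify(f, g):
    """Unify two feature structures (dicts with a 'TYPE' key) or atomic values (str).
    Return the combined structure, or None on a clash. No structure sharing."""
    if isinstance(f, str) or isinstance(g, str):
        return f if f == g else None
    t = unify_types(f["TYPE"], g["TYPE"])
    if t is None:
        return None
    result = {"TYPE": t}
    for feat in (f.keys() | g.keys()) - {"TYPE"}:
        if feat in f and feat in g:            # shared feature: values must unify
            value = unify(f[feat], g[feat])
            if value is None:
                return None
            result[feat] = value
        else:                                  # feature present on one side only
            result[feat] = f[feat] if feat in f else g[feat]
    return result
```

Unifying a lexical entry of type `verb-lxm` with a constraint stated on the more general type `word` yields a `verb-lxm` structure carrying both sets of features, while conflicting values (or incompatible types such as `verb-lxm` and `noun-lxm`) make unification fail.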

Generalised Phrase Structure Grammar

Generalized phrase structure grammar (GPSG) is a framework for describing the syntax and semantics of natural languages. It is a type of constraint-based phrase structure grammar. Constraint-based grammars work by defining certain syntactic processes as ungrammatical for a given language and treating everything not thus excluded as grammatical in that language. Phrase structure grammars base their framework on constituency relationships, seeing the words in a sentence as ranked, with some words dominating the others. For example, in the sentence "The dog runs", "runs" is seen as dominating "dog" since it is the main focus of the sentence. This view stands in contrast to dependency grammars, which base their assumed structure on the relationship between a single word in a sentence (the sentence head) and its dependents. GPSG was initially developed in the late 1970s by Gerald Gazdar. Other contributors include Ewan Klein, Ivan Sag, and Geoffrey Pullum. T ...
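The constraint-based view described here (exclude what is ungrammatical, accept whatever survives) can be sketched as a filter over candidate word orders. The two constraints are hypothetical stand-ins written for the example *The dog runs*; they are not GPSG's actual rule format.

```python
from itertools import permutations

# Hypothetical constraints: each returns True when a candidate order is ruled OUT.
def determiner_after_noun(order):
    return order.index("the") > order.index("dog")

def verb_before_subject(order):
    return order.index("runs") < order.index("dog")

CONSTRAINTS = [determiner_after_noun, verb_before_subject]

def grammatical_orders(words):
    """Return, sorted, every ordering of `words` that no constraint excludes."""
    return sorted(
        " ".join(order)
        for order in permutations(words)
        if not any(rule(order) for rule in CONSTRAINTS)
    )
```

Of the six possible orders of *the*, *dog* and *runs*, only *the dog runs* survives both constraints; nothing in the sketch enumerates grammatical sentences directly.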

Relational Grammar

In linguistics, relational grammar (RG) is a syntactic theory which argues that primitive grammatical relations provide the ideal means to state syntactic rules in universal terms. Relational grammar began as an alternative to transformational grammar. In relational grammar, constituents that serve as the arguments to predicates are numbered in what is called the grammatical relations (GR) hierarchy. This numbering corresponds loosely to the notions of subject, direct object and indirect object: subject → 1, direct object → 2 and indirect object → 3. Other constituents (such as oblique, genitive, and object of comparative) are called *nonterms* (N). The predicate is marked P. According to Geoffrey K. Pullum (1977), the GR hierarchy directly corresponds to the accessibility hierarchy. Other features of the theory include strata and chômage (se ...
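Strata and chômage can be illustrated with the standard relational-grammar treatment of the passive as a 2→1 advancement, in which the initial 1 is displaced and becomes a chômeur. The dictionary encoding, the function name and the example clause are hypothetical; only the relation labels (1, 2, 3, P, and Cho for chômeur) follow the numbering scheme described above.

```python
# A stratum maps each nominal (and the predicate) to its grammatical relation:
# "P" predicate, "1" subject, "2" direct object, "3" indirect object, "Cho" chômeur.

def passivize(stratum):
    """Build the next stratum from `stratum` by a 2->1 advancement."""
    if "2" not in stratum.values():
        raise ValueError("a 2->1 advancement needs a direct object (2)")
    nxt = {}
    for nominal, rel in stratum.items():
        if rel == "2":
            nxt[nominal] = "1"      # the advancee becomes the subject
        elif rel == "1":
            nxt[nominal] = "Cho"    # the displaced subject becomes a chômeur
        else:
            nxt[nominal] = rel      # P, 3 and nonterms carry over unchanged
    return nxt

# "The letter was sent to Mary by John": initial and final strata.
initial = {"sent": "P", "John": "1", "the letter": "2", "Mary": "3"}
strata = [initial, passivize(initial)]
```

Keeping both strata in a list, rather than overwriting the first, reflects the idea that a clause has several levels of grammatical relations: *John* is 1 in the initial stratum and a chômeur in the final one.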

Minimalist Program

In linguistics, the minimalist program is a major line of inquiry that has been developing within generative grammar since the early 1990s, starting with a 1993 paper by Noam Chomsky. Following Imre Lakatos's distinction, Chomsky presents minimalism as a program, understood as a mode of inquiry that provides a conceptual framework to guide the development of linguistic theory. As such, it is characterized by a broad and diverse range of research directions. For Chomsky, there are two basic minimalist questions, "What is language?" and "Why does it have the properties it has?", but the answers to these two questions can be framed in any theory (Boeckx, Cedric, *Linguistic Minimalism: Origins, Concepts, Methods and Aims*, pp. 84 and 115). Minimalism is an approach developed with the goal of understanding the nature of language. It models a speaker's knowledge of language as a computational system with one basic operation, namely ...
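Minimalist work commonly formalizes its basic combinatory operation, Merge, as binary set formation: Merge(α, β) = {α, β}. The sketch below assumes that formulation and uses Python frozensets so that merged objects are unordered and can nest; re-merging a term already inside an object (often called internal Merge) models displacement. The function names and example words are hypothetical.

```python
def merge(a, b):
    """Merge(a, b) = {a, b}: an unordered binary set (frozenset, so it can nest)."""
    return frozenset([a, b])

def contains(so, x):
    """True if x is a term of syntactic object `so` (so itself or nested inside it)."""
    if so == x:
        return True
    return isinstance(so, frozenset) and any(contains(m, x) for m in so)

def internal_merge(so, x):
    """Re-merge a term x of `so` with `so` itself; the lower copy stays in place."""
    if not contains(so, x):
        raise ValueError("internal Merge needs a term of the object")
    return merge(x, so)
```

Applying `merge` repeatedly (e.g. `merge("will", merge("John", merge("eat", "what")))`) builds hierarchical structure with no linear order, and `internal_merge(merge("C", tp), "what")` then places a second occurrence of *what* at the top while the original remains inside the verb phrase.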

Government and Binding Theory

A government is the system or group of people governing an organized community, generally a state. In the case of its broad associative definition, government normally consists of legislature, executive, and judiciary. Government is a means by which organizational policies are enforced, as well as a mechanism for determining policy. In many countries, the government has a kind of constitution, a statement of its governing principles and philosophy. While all types of organizations have governance, the term *government* is often used more specifically to refer to the approximately 200 independent national governments and subsidiary organizations. The major types of political systems in the modern era are democracies, monarchies, and authoritarian and totalitarian regimes. Historically prevalent forms of government include monarchy, aristocracy, timocracy, oligarchy, democracy, theocracy, and tyranny. These forms are not always mutually exclusive, and mixed gover ...

Principles and Parameters

Principles and parameters is a framework within generative linguistics in which the syntax of a natural language is described in accordance with general *principles* (i.e. abstract rules or grammars) and specific *parameters* (i.e. markers, switches) that for particular languages are either turned *on* or *off*. For example, the position of heads in phrases is determined by a parameter: whether a language is *head-initial* or *head-final* is regarded as a parameter which is either on or off for particular languages (e.g. English is *head-initial*, whereas Japanese is *head-final*). Principles and parameters was largely formulated by the linguists Noam Chomsky and Howard Lasnik. Many linguists have worked within this framework, and for a period of time it was considered the dominant form of mainstream generative linguistics. Principles and parameters as a grammar framework is also known as government and binding theory. That is, the two terms *principles and paramet ...*
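The head-direction parameter can be sketched as a single boolean switch applied uniformly to head-complement pairs. The `(head, complement)` encoding is a hypothetical simplification; with the switch on, *go to Tokyo* comes out in English order, and with it off the same structure is linearized as *Tokyo to go*, which matches the order of Japanese *Tōkyō ni iku*.

```python
# A phrase is (head, complement); the complement is a word, another phrase, or None.

def linearize(phrase, head_initial):
    """Flatten a head-complement structure into a word list according to the
    head-direction parameter: head before (True) or after (False) its complement."""
    if isinstance(phrase, str):
        return [phrase]
    head, comp = phrase
    comp_words = [] if comp is None else linearize(comp, head_initial)
    return [head] + comp_words if head_initial else comp_words + [head]
```

Because the same setting applies at every level, flipping one parameter reverses the order in the verb phrase and the adpositional phrase at once, which is the kind of cross-language generalization the framework is designed to capture.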

Transformational Grammar

In linguistics, transformational grammar (TG) or transformational-generative grammar (TGG) is part of the theory of generative grammar, especially of natural languages. It considers grammar to be a system of rules that generate exactly those combinations of words that form grammatical sentences in a given language and involves the use of defined operations (called transformations) to produce new sentences from existing ones. The method is commonly associated with the American linguist Noam Chomsky. Generative algebra was first introduced to general linguistics by the structural linguist Louis Hjelmslev, although the method was described before him by Albert Sechehaye in 1908. Chomsky adopted the concept of transformations from his teacher Zellig Harris, who followed the American descriptivist separation of semantics from syntax. Hjelmslev's structuralist conception, which includes semantics and pragmatics, is incorporated into functional grammar. Transformational analysis ...
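A transformation in this sense maps one structure onto another. As a hedged illustration, subject-auxiliary inversion can be written as a function that turns a declarative clause tree into a yes/no question by fronting the main-clause auxiliary. Because it operates on the tree rather than on the word string, it moves the correct *is* in *the man who is tall is happy*. The tuple encoding and the labels S, Q, NP, RC, Aux and AP are assumptions made for the example.

```python
# A node is either a word (str) or a tuple (label, *children).

def words(node):
    """Read the words off a tree, left to right."""
    if isinstance(node, str):
        return [node]
    return [w for child in node[1:] for w in words(child)]

def subject_aux_inversion(clause):
    """Map a declarative ('S', subject, aux, predicate) to ('Q', aux, subject, predicate)."""
    label, subject, aux, predicate = clause
    if label != "S":
        raise ValueError("expects a declarative clause ('S', subject, aux, predicate)")
    return ("Q", aux, subject, predicate)
```

Applied to `("S", ("NP", "the", "man", ("RC", "who", "is", "tall")), ("Aux", "is"), ("AP", "happy"))`, the function produces a question whose words read *is the man who is tall happy*; the *is* inside the relative clause is untouched because it is not the clause's Aux node.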
