
Cowles Commission For Research In Economics

The Cowles Foundation for Research in Economics is an economic research institute at Yale University. It was created as the Cowles Commission for Research in Economics at Colorado Springs in 1932 by businessman and economist Alfred Cowles. In 1939, the Cowles Commission moved to the University of Chicago under Theodore O. Yntema. Jacob Marschak directed it from 1943 until 1948, when Tjalling C. Koopmans assumed leadership. During the 1950s, increasing opposition to the Cowles Commission from the University of Chicago's department of economics impelled Koopmans to persuade the Cowles family to move the commission to Yale University in 1955, where it became the Cowles Foundation. As its motto, "Theory and Measurement", implies, the Cowles Commission focuses on linking economic theory to mathematics and statistics. Its advances in economics involved the creation and integration of general equilibrium theory and econometrics. The thrust of the Cowles approach was a specific, pro ...

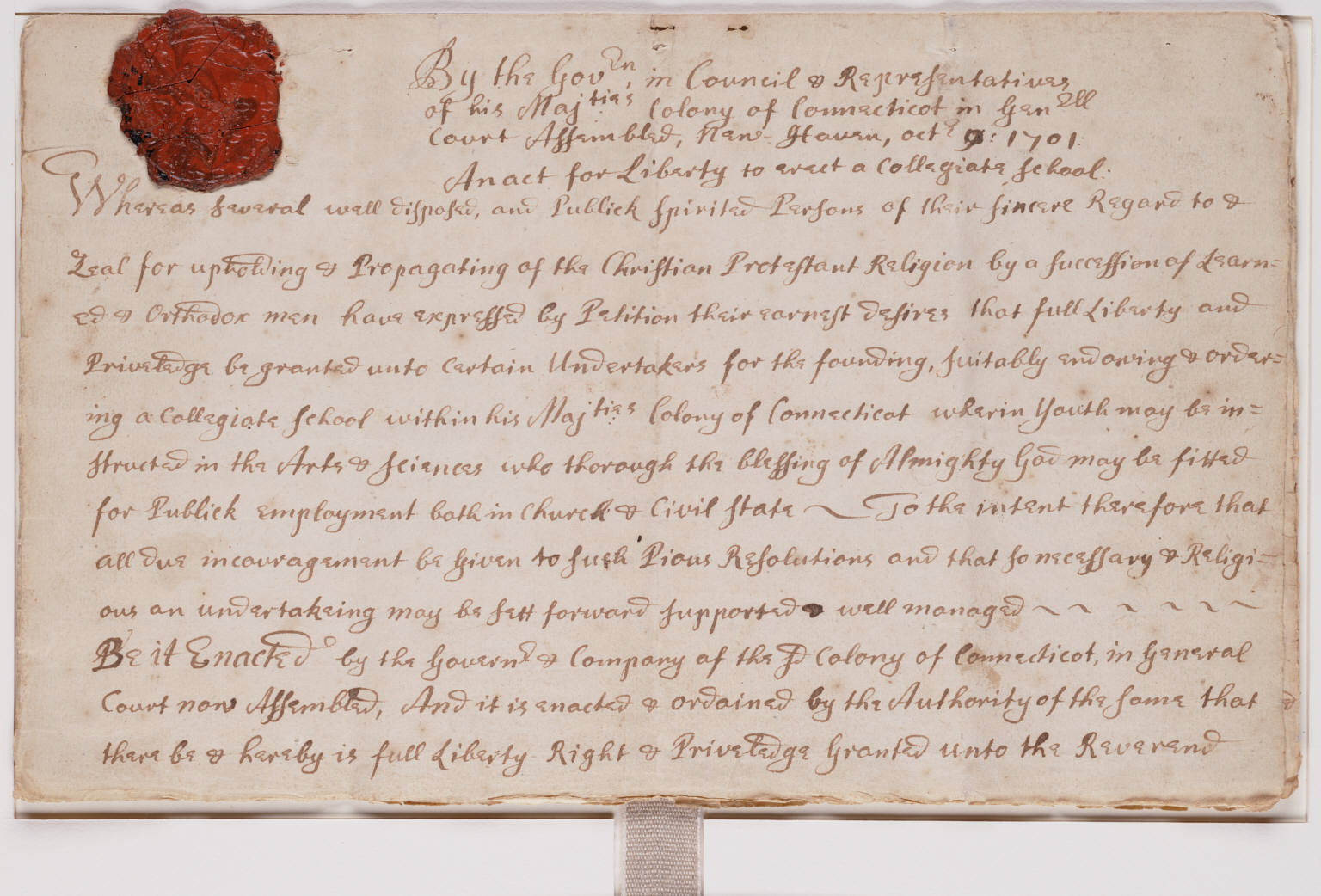

Yale University

Yale University is a private research university in New Haven, Connecticut. Established in 1701 as the Collegiate School, it is the third-oldest institution of higher education in the United States and among the most prestigious in the world. It is a member of the Ivy League. Chartered by the Connecticut Colony, the Collegiate School was established by clergy to educate Congregational ministers before moving to New Haven in 1716. Originally restricted to theology and sacred languages, the curriculum began to incorporate humanities and sciences by the time of the American Revolution. In the 19th century, the college expanded into graduate and professional instruction, awarding the first Doctor of Philosophy (PhD) in the United States in 1861 and organizing as a university in 1887. Yale's faculty and student populations grew after 1890 with rapid expansion of the physical campus and sc ...

Ordinary Least Squares

In statistics, ordinary least squares (OLS) is a type of linear least squares method for choosing the unknown parameters in a linear regression model (with fixed level-one effects of a linear function of a set of explanatory variables) by the principle of least squares: minimizing the sum of the squares of the differences between the observed values of the dependent variable in the input dataset and the values produced by the linear function of the independent variables. Geometrically, this is the sum of the squared distances, measured parallel to the axis of the dependent variable, between each data point in the set and the corresponding point on the regression surface; the smaller the differences, the better the model fits the data. The resulting estimator can be expressed by a simple formula, especially in the case of simple linear regression, in which there is a single regressor on the right side of the regression equation. The OLS estimator is c ...
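
For the simple-regression case, the least-squares formula fits in a few lines of plain Python. This is a minimal sketch, assuming a single regressor plus an intercept; the function names `simple_ols` and `sum_squared_residuals` are hypothetical, not a library API. The slope is the co-variation of x and y divided by the variation of x, and the intercept makes the line pass through the point of means.

```python
def simple_ols(xs, ys):
    """Return (intercept, slope) of the line minimizing the sum of squared vertical residuals."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences with at least two points")
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    # Slope: co-variation of x and y divided by the variation of x.
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are equal, so the slope is not identified")
    slope = sxy / sxx
    # The fitted line always passes through the point of means (x_bar, y_bar).
    intercept = y_bar - slope * x_bar
    return intercept, slope


def sum_squared_residuals(xs, ys, intercept, slope):
    """The quantity OLS minimizes: squared distances measured parallel to the y axis."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))


if __name__ == "__main__":
    print(simple_ols([1, 2, 3, 4], [3, 5, 7, 9]))  # (1.0, 2.0): the points lie exactly on y = 1 + 2x
```

Any other choice of intercept or slope gives a larger `sum_squared_residuals` than the pair returned by `simple_ols`, which is exactly the least-squares criterion.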

Covariance Matrix

In probability theory and statistics, a covariance matrix (also known as auto-covariance matrix, dispersion matrix, variance matrix, or variance–covariance matrix) is a square matrix giving the covariance between each pair of elements of a given random vector. Any covariance matrix is symmetric and positive semi-definite, and its main diagonal contains variances (i.e., the covariance of each element with itself). Intuitively, the covariance matrix generalizes the notion of variance to multiple dimensions. As an example, the variation in a collection of random points in two-dimensional space cannot be characterized fully by a single number, nor would the variances in the $x$ and $y$ directions contain all of the necessary information; a $2 \times 2$ matrix would be necessary to fully characterize the two-dimensional variation. The covariance matrix of a random vector $\mathbf{X}$ is typically denoted by $\operatorname{K}_{\mathbf{X}\mathbf{X}}$ or $\Sigma$. Definition: throughout this article, boldfaced unsub ...
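
The sketch below estimates a covariance matrix from sample data, which makes the symmetry and the variances-on-the-diagonal properties easy to see. It assumes the common n - 1 (unbiased) sample convention, whereas the text describes the population matrix of a random vector; `covariance_matrix` is a hypothetical helper name, and the four points are made up.

```python
def covariance_matrix(rows):
    """Sample covariance matrix (n - 1 denominator) of observations given as rows."""
    n = len(rows)
    if n < 2:
        raise ValueError("need at least two observations")
    k = len(rows[0])
    # Mean of each coordinate (column).
    means = [sum(row[j] for row in rows) / n for j in range(k)]
    cov = [[0.0] * k for _ in range(k)]
    for i in range(k):
        for j in range(i, k):
            s = sum((row[i] - means[i]) * (row[j] - means[j]) for row in rows)
            # Fill both (i, j) and (j, i): a covariance matrix is symmetric.
            cov[i][j] = cov[j][i] = s / (n - 1)
    return cov


# Four hypothetical points in the plane: one number cannot summarize their spread,
# but the 2 x 2 matrix holds both variances and the x-y covariance.
points = [(1.0, 2.0), (2.0, 1.0), (3.0, 5.0), (4.0, 4.0)]
sigma = covariance_matrix(points)
print(sigma)
```

For these points the diagonal holds the x variance 5/3 and the y variance 10/3, the off-diagonal entries both equal 5/3, and the determinant is positive, consistent with positive semi-definiteness.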

Nonsingular

In linear algebra, an $n$-by-$n$ square matrix $\mathbf{A}$ is called invertible (also nonsingular or nondegenerate) if there exists an $n$-by-$n$ square matrix $\mathbf{B}$ such that $\mathbf{AB} = \mathbf{BA} = \mathbf{I}_n$, where $\mathbf{I}_n$ denotes the $n$-by-$n$ identity matrix and the multiplication used is ordinary matrix multiplication. If this is the case, then the matrix $\mathbf{B}$ is uniquely determined by $\mathbf{A}$ and is called the (multiplicative) inverse of $\mathbf{A}$, denoted by $\mathbf{A}^{-1}$. Matrix inversion is the process of finding the matrix $\mathbf{B}$ that satisfies the prior equation for a given invertible matrix $\mathbf{A}$. A square matrix that is not invertible is called singular or degenerate. A square matrix is singular if and only if its determinant is zero. Singular matrices are rare in the sense that if a square matrix's entries are randomly selected from any finite region on the number line or complex plane, the probability that the matrix is singular is 0; that is, it will "almost never" be singular. Non-square matrices ($m$-by-$n$ matrices for which $m \neq n$) do not h ...
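
To make the definition concrete, the following sketch computes the inverse by Gauss-Jordan elimination on the augmented matrix $[\mathbf{A} \mid \mathbf{I}_n]$ and reports a singular matrix when no nonzero pivot can be found. It uses exact `fractions.Fraction` arithmetic so that "zero pivot" is unambiguous; practical code would normally call a floating-point linear-algebra routine with a tolerance instead. The names `invert` and `matmul` are illustrative, not a library API.

```python
from fractions import Fraction


def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with exact rational arithmetic.

    Raises ValueError if the matrix is not square or is singular."""
    n = len(matrix)
    if any(len(row) != n for row in matrix):
        raise ValueError("only square matrices can be invertible")
    # Build the augmented matrix [A | I].
    aug = [[Fraction(v) for v in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        # Look for a nonzero pivot in this column at or below the diagonal.
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular (determinant is zero)")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]  # scale the pivot row so the pivot becomes 1
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    # The left half is now I, so the right half is A^{-1}.
    return [row[n:] for row in aug]


def matmul(a, b):
    """Ordinary matrix product of two list-of-lists matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


A = [[2, 1], [1, 1]]
B = invert(A)
print(matmul(A, B), matmul(B, A))  # both products equal the 2-by-2 identity
```

Calling `invert([[1, 2], [2, 4]])` raises `ValueError`, since that matrix has determinant zero.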

Covariance

In probability theory and statistics, covariance is a measure of the joint variability of two random variables. If the greater values of one variable mainly correspond to the greater values of the other variable, and the same holds for the lesser values (that is, the variables tend to show similar behavior), the covariance is positive. In the opposite case, when the greater values of one variable mainly correspond to the lesser values of the other (that is, the variables tend to show opposite behavior), the covariance is negative. The sign of the covariance therefore shows the tendency in the linear relationship between the variables. The magnitude of the covariance is not easy to interpret because it is not normalized and hence depends on the magnitudes of the variables. The normalized version of the covariance, the correlation coefficient, however, shows by its magnitude the strength of the linear relation. A distinction must be made between (1) the covariance of two ra ...
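
A small numerical sketch of the sign and scale properties described here: reversing one variable flips the sign of the covariance, rescaling it by 1000 multiplies the covariance by 1000, and the correlation coefficient is unaffected by that rescaling. The data values and helper names are made up for illustration, and the n - 1 sample denominator is an assumption.

```python
import math


def covariance(xs, ys):
    """Sample covariance (n - 1 denominator) of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences with at least two values")
    mx = sum(xs) / n
    my = sum(ys) / n
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (n - 1)


def correlation(xs, ys):
    """Pearson correlation: the covariance normalized by both standard deviations."""
    return covariance(xs, ys) / math.sqrt(covariance(xs, xs) * covariance(ys, ys))


xs = [1.0, 2.0, 3.0, 4.0]
ys = [1.5, 3.9, 6.2, 7.8]
scaled = [1000 * y for y in ys]

print(covariance(xs, ys) > 0)        # similar behavior: positive covariance
print(covariance(xs, ys[::-1]) < 0)  # opposite behavior: negative covariance
print(covariance(xs, ys), covariance(xs, scaled))    # magnitude depends on units
print(correlation(xs, ys), correlation(xs, scaled))  # essentially identical
```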

Normal Distribution

In statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is $f(x) = \frac{1}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2}$. The parameter $\mu$ is the mean or expectation of the distribution (and also its median and mode), while the parameter $\sigma$ is its standard deviation. The variance of the distribution is $\sigma^2$. A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate. Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known. Their importance is partly due to the central limit theorem. It states that, under some conditions, the average of many samples (observations) of a random variable with finite mean and variance is itself a random variable whose distribution converges to a normal d ...
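
The density formula can be checked numerically. The sketch below codes $f(x)$ directly and compares it with `statistics.NormalDist.pdf` from the Python standard library (available since Python 3.8); `normal_pdf` itself is a hypothetical helper.

```python
import math
from statistics import NormalDist


def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of the normal distribution with mean mu and standard deviation sigma."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    z = (x - mu) / sigma  # distance from the mean in units of standard deviations
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


if __name__ == "__main__":
    # Compare the hand-written formula with the standard library at a few points.
    reference = NormalDist(mu=1.0, sigma=2.0)
    for x in (-2.0, 0.0, 1.0, 1.5):
        print(x, normal_pdf(x, mu=1.0, sigma=2.0), reference.pdf(x))
```

The density peaks at $x = \mu$, in line with $\mu$ being the mode, and it integrates to 1 over the real line.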

Structural Equation

Structural equation modeling (SEM) is a label for a diverse set of methods used by scientists in both experimental and observational research across the sciences, business, and other fields. It is used most in the social and behavioral sciences. A definition of SEM is difficult without reference to highly technical language, but a good starting place is the name itself. SEM involves the construction of a model to represent how various aspects of an observable or theoretical phenomenon are thought to be causally structurally related to one another. The "structural" aspect of the model implies theoretical associations between variables that represent the phenomenon under investigation. The postulated causal structuring is often depicted with arrows representing causal connections between variables (as in Figures 1 and 2), but these causal connections can be equivalently represented as equations. The causal structures imply that specific patterns of connections should appe ...
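
As a minimal illustration of how arrows translate into equations, and how a causal structure implies a pattern in the data, the sketch below simulates a hypothetical chain X → M → Y. Assuming independent standard normal error terms, tracing the path implies Cov(X, Y) = a·b·Var(X), and the simulated sample covariance should land close to that value. This is not an SEM estimator (fitting a real model would use dedicated SEM software); the coefficients 0.8 and 0.5 and all function names are arbitrary choices for the example.

```python
import random


def simulate_chain(a, b, n, seed=0):
    """Draw n observations from a hypothetical path model X -> M -> Y.

    Structural equations (one per variable that receives an arrow):
        M = a * X + e_M
        Y = b * M + e_Y
    with X, e_M and e_Y independent standard normal variables.
    """
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        x = rng.gauss(0.0, 1.0)
        m = a * x + rng.gauss(0.0, 1.0)
        y = b * m + rng.gauss(0.0, 1.0)
        xs.append(x)
        ys.append(y)
    return xs, ys


def implied_cov_xy(a, b, var_x=1.0):
    """Model-implied Cov(X, Y): product of the path coefficients along X -> M -> Y."""
    return a * b * var_x


def sample_cov(xs, ys):
    """Sample covariance with an n - 1 denominator."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (n - 1)


xs, ys = simulate_chain(a=0.8, b=0.5, n=100_000, seed=42)
print(implied_cov_xy(0.8, 0.5), sample_cov(xs, ys))  # the sample value should be near 0.4
```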

Explanatory Variable

Dependent and independent variables are variables in mathematical modeling, statistical modeling and experimental sciences. Dependent variables receive this name because, in an experiment, their values are studied under the supposition or demand that they depend, by some law or rule (e.g., by a mathematical function), on the values of other variables. Independent variables, in turn, are not seen as depending on any other variable in the scope of the experiment in question. In this sense, some common independent variables are time, space, density, mass, fluid flow rate, and previous values of some observed value of interest (e.g. human population size) to predict future values (the dependent variable). Of the two, it is always the dependent variable whose variation is being studied, by altering inputs, also known as regressors in a statistical context. In an experiment, any variable that can be attributed a value without attributing a value to any other variable is called an i ...

Reduced Form

In statistics, and particularly in econometrics, the reduced form of a system of equations is the result of solving the system for the endogenous variables. This gives the latter as functions of the exogenous variables, if any. In econometrics, the equations of a structural form model are estimated in their theoretically given form, while an alternative approach to estimation is to first solve the theoretical equations for the endogenous variables to obtain reduced form equations, and then to estimate the reduced form equations. Let $Y$ be the vector of the variables to be explained (endogenous variables) by a statistical model and $X$ be the vector of explanatory (exogenous) variables. In addition, let $\varepsilon$ be a vector of error terms. Then the general expression of a structural form is $f(Y, X, \varepsilon) = 0$, where $f$ is a function, possibly from vectors to vectors in the case of a multiple-equation model. The reduced form of this model is given by Y = g(X, ...
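
A worked example may help. The sketch below takes a hypothetical linear market model with demand $Q = a_0 - a_1 P + a_2 I + u_d$ and supply $Q = b_0 + b_1 P + u_s$, where price $P$ and quantity $Q$ are endogenous and income $I$ is exogenous. Equating the two equations and solving gives the reduced form $P = \frac{a_0 - b_0 + a_2 I + u_d - u_s}{a_1 + b_1}$, and $Q$ follows from the supply equation. The class name, parameter names, and numbers are illustrative assumptions, not taken from the text.

```python
from dataclasses import dataclass


@dataclass
class Market:
    """Hypothetical linear structural model of one market.

    Demand: Q = a0 - a1 * P + a2 * I + u_d
    Supply: Q = b0 + b1 * P + u_s
    Endogenous: price P and quantity Q. Exogenous: income I.
    """
    a0: float
    a1: float
    a2: float
    b0: float
    b1: float

    def reduced_form(self, income, u_d=0.0, u_s=0.0):
        """Solve the structural equations for (P, Q) given the exogenous income and shocks."""
        denom = self.a1 + self.b1
        if denom == 0:
            raise ValueError("a1 + b1 must be nonzero for a unique solution")
        # Demand = supply, solved for P.
        price = (self.a0 - self.b0 + self.a2 * income + u_d - u_s) / denom
        quantity = self.b0 + self.b1 * price + u_s
        return price, quantity

    def reduced_form_coefficients(self):
        """(intercept, coefficient on I) of the reduced-form equations for P and for Q."""
        denom = self.a1 + self.b1
        pi_p = ((self.a0 - self.b0) / denom, self.a2 / denom)
        pi_q = (self.b0 + self.b1 * pi_p[0], self.b1 * pi_p[1])
        return pi_p, pi_q


m = Market(a0=100.0, a1=2.0, a2=0.5, b0=10.0, b1=1.0)
print(m.reduced_form(income=60.0))  # (40.0, 50.0)
```

Each endogenous variable is now an explicit function of the exogenous income (plus the error terms), which is what makes the reduced-form equations directly estimable by regression on $I$.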

Endogenous Variable

In an economic model, an exogenous variable is one whose measure is determined outside the model and is imposed on the model, and an exogenous change is a change in an exogenous variable (N. Gregory Mankiw, Macroeconomics, third edition, 1997; Hal R. Varian, Microeconomic Analysis, third edition, 1992; Alpha C. Chiang, Fundamental Methods of Mathematical Economics, third edition, 1984). In contrast, an endogenous variable is a variable whose measure is determined by the model. An endogenous change is a change in an endogenous variable in response to an exogenous change that is imposed upon the model. The term endogeneity in econometrics has a related but distinct meaning: an endogenous random variable is correlated with the error term in the econometric model, while an exogenous variable is not. For example, in the LM model of interest rate determination, the supply of and demand for money determine the interest rate contingent on the level of the money supply, so the m ...
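
The econometric sense of endogeneity can be illustrated with a small simulation under an assumed data-generating process $y = 1 + 2x + u$. When $x$ is built to share the error term $u$ (here $x = z + u$, so $\operatorname{Cov}(x, u) = 1$ and $\operatorname{Var}(x) = 2$), the OLS slope settles near $2 + 1/2 = 2.5$ instead of the true value 2; when $x$ is independent of $u$, it settles near 2. The function names, seed, and sample size are hypothetical choices for the sketch.

```python
import random


def ols_slope(xs, ys):
    """OLS slope of y on x (regression with an intercept)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


def simulate(endogenous, n=100_000, beta=2.0, seed=1):
    """Hypothetical data: y = 1 + beta * x + u.

    If endogenous is True, x = z + u shares the error term u;
    otherwise x = z is independent of u (an exogenous regressor).
    """
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        z = rng.gauss(0.0, 1.0)
        u = rng.gauss(0.0, 1.0)
        x = z + u if endogenous else z
        xs.append(x)
        ys.append(1.0 + beta * x + u)
    return xs, ys


slope_exogenous = ols_slope(*simulate(endogenous=False))
slope_endogenous = ols_slope(*simulate(endogenous=True))
print(slope_exogenous)   # near the true beta = 2.0
print(slope_endogenous)  # near 2 + Cov(x, u) / Var(x) = 2.5, not 2.0
```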

Maximum Likelihood

In statistics, maximum likelihood estimation (MLE) is a method of estimating the parameters of an assumed probability distribution, given some observed data. This is achieved by maximizing a likelihood function so that, under the assumed statistical model, the observed data is most probable. The point in the parameter space that maximizes the likelihood function is called the maximum likelihood estimate. The logic of maximum likelihood is both intuitive and flexible, and as such the method has become a dominant means of statistical inference. If the likelihood function is differentiable, the derivative test for finding maxima can be applied. In some cases, the first-order conditions of the likelihood function can be solved analytically; for instance, the ordinary least squares estimator for a linear regression model maximizes the likelihood when all observed outcomes are assumed to have normal distributions with the same variance. From the perspective of Bayesian infere ...
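
For an i.i.d. normal model the first-order conditions can be solved analytically, giving the sample mean and the average squared deviation (divided by $n$, not $n - 1$). The sketch below, with hypothetical helper names and made-up data, computes those estimates and the log-likelihood, so one can check that moving either parameter away from the estimate lowers the likelihood.

```python
import math


def normal_log_likelihood(data, mu, sigma2):
    """Log-likelihood of i.i.d. data under a normal model with mean mu and variance sigma2."""
    n = len(data)
    ss = sum((x - mu) ** 2 for x in data)
    return -0.5 * n * math.log(2.0 * math.pi * sigma2) - ss / (2.0 * sigma2)


def normal_mle(data):
    """Closed-form maximizers of the normal log-likelihood (from the first-order conditions)."""
    n = len(data)
    mu_hat = sum(data) / n
    sigma2_hat = sum((x - mu_hat) ** 2 for x in data) / n  # divides by n, not n - 1
    return mu_hat, sigma2_hat


data = [2.0, 3.0, 5.0, 6.0]
mu_hat, s2_hat = normal_mle(data)
print(mu_hat, s2_hat)  # 4.0 2.5
print(normal_log_likelihood(data, mu_hat, s2_hat) > normal_log_likelihood(data, 4.2, 2.5))  # True
```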