
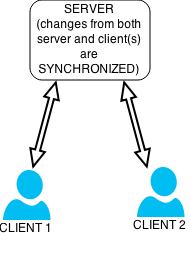

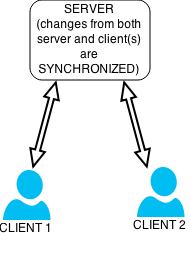

Data synchronization is the process of establishing consistency between source and target data stores, and the continuous harmonization of the data over time. It is fundamental to a wide variety of applications, including file synchronization and mobile device synchronization.

Data synchronization can also be useful in encryption for synchronizing public key servers.

Data synchronization is needed to update and keep multiple copies of a set of data coherent with one another or to maintain data integrity (Figure 3). For example, database replication is used to keep multiple copies of data synchronized with database servers that store data in different locations.

Examples

Examples include:

* File synchronization, such as syncing a hand-held MP3 player to a desktop computer;

* Cluster file systems, which are file systems that maintain data or indexes in a coherent fashion across a whole computing cluster;

* Cache coherency, maintaining multiple copies of data in sync across multiple caches;

* RAID, where data is written in a redundant fashion across multiple disks, so that the loss of any one disk does not lead to a loss of data;

* Database replication, where copies of data on a database are kept in sync, despite possible large geographical separation;

* Journaling, a technique used by many modern file systems to make sure that file metadata are updated on a disk in a coherent, consistent manner.

Challenges

Some of the challenges which users may face in data synchronization are:

* data formats complexity;

* real-timeliness;

* data security;

* data quality;

* performance.

Data formats complexity

Data formats tend to grow more complex with time as the organization grows and evolves. This results not only in building simple interfaces between the two applications (source and target), but also in a need to transform the data while passing them to the target application. ETL (extraction, transformation, loading) tools can be helpful at this stage for managing data format complexities.

Real-timeliness

In real-time systems, customers want to see the current status of their order in an e-shop, the current status of a parcel delivery (real-time parcel tracking), the current balance on their account, and so on. This shows the need for a system that is itself updated in real time, which also enables a smooth manufacturing process, e.g., ordering material when the enterprise is running out of stock, or synchronizing customer orders with the manufacturing process. There are many real-life examples where real-time processing gives a competitive advantage.

Data security

There are no fixed rules and policies to enforce data security; they vary depending on the system in use. Even though security is maintained correctly in the source system which captures the data, the security and information access privileges must be enforced on the target systems as well to prevent any potential misuse of the information. This is a serious issue, particularly when handling secret, confidential, and personal information. Because of this sensitivity and confidentiality, the data transfer and all in-between information must be encrypted.

Data quality

Data quality is another serious constraint. For better management and to maintain good quality of data, the common practice is to store the data at one location and share it with different people and different systems and/or applications from different locations. This helps in preventing inconsistencies in the data.

Performance

There are five different phases involved in the data synchronization process:

* data extraction from the source (or master, or main) system;

* data transfer;

* data transformation;

* data load to the target system;

* data update.

Each of these steps is critical. In case of large amounts of data, the synchronization process needs to be carefully planned and executed to avoid any negative impact on performance.
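The five phases listed above can be sketched as a small pipeline. This is a minimal illustration under stated assumptions, not a reference design: the `Record` type, the in-memory dictionaries standing in for the source and target systems, the lower-casing transformation, and the split between "load" (insert missing keys) and "update" (overwrite changed values) are all hypothetical choices made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    # Hypothetical record shape used only for this sketch.
    key: str
    value: str


def extract(source: dict[str, str]) -> list[Record]:
    """Phase 1: read every record out of the source (master) system."""
    return [Record(key, value) for key, value in sorted(source.items())]


def transfer(records: list[Record]) -> list[Record]:
    """Phase 2: stand-in for the network hop; here it only copies the batch."""
    return list(records)


def transform(records: list[Record]) -> list[Record]:
    """Phase 3: convert to the target format (assumed: trimmed, lower-case)."""
    return [Record(r.key, r.value.strip().lower()) for r in records]


def load(records: list[Record], target: dict[str, str]) -> int:
    """Phase 4: insert records whose key the target does not have yet."""
    inserted = 0
    for r in records:
        if r.key not in target:
            target[r.key] = r.value
            inserted += 1
    return inserted


def update(records: list[Record], target: dict[str, str]) -> int:
    """Phase 5: overwrite target values that differ from the source."""
    updated = 0
    for r in records:
        if target.get(r.key) != r.value:
            target[r.key] = r.value
            updated += 1
    return updated


def synchronize(source: dict[str, str], target: dict[str, str]) -> tuple[int, int]:
    """Run all five phases; return (inserted, updated) counts."""
    batch = transform(transfer(extract(source)))
    return load(batch, target), update(batch, target)
```

Keeping each phase as its own function makes it easier to measure or batch the phases separately, which is where the planning for large data volumes usually happens.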

File-based solutions

There are tools available for file synchronization, version control (CVS, Subversion, etc.), distributed filesystems (Coda, etc.), and mirroring (rsync, etc.), in that all these attempt to keep sets of files synchronized. However, only version control and file synchronization tools can deal with modifications to more than one copy of the files.

* File synchronization is commonly used for home backups on external hard drives or updating for transport on USB flash drives. The automatic process skips files that are already identical, so it can save considerable time relative to a manual copy and is less error-prone.

* Version control tools are intended to deal with situations where more than one user attempts to simultaneously modify the same file, while file synchronizers are optimized for situations where only one copy of the file will be edited at a time. For this reason, although version control tools can be used for file synchronization, dedicated programs require less overhead.

* Distributed filesystems may also be seen as ensuring multiple versions of a file are synchronized. This normally requires that the devices storing the files are always connected, but some distributed file systems like Coda allow disconnected operation followed by reconciliation. The merging facilities of a distributed file system are typically more limited than those of a version control system because most file systems do not keep a version graph.

* Mirror (computing): A mirror is an exact copy of a data set. On the Internet, a mirror site is an exact copy of another Internet site. Mirror sites are most commonly used to provide multiple sources of the same information, and are of particular value as a way of providing reliable access to large downloads.
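The point made above about file synchronization skipping files that are already identical can be illustrated with a short one-way synchronizer. This is a sketch only: the function names are hypothetical, identity is decided by comparing SHA-256 digests of the file contents (real tools often compare sizes and modification times first), and deletions on the source side are not propagated.

```python
import hashlib
import shutil
from pathlib import Path


def file_digest(path: Path) -> str:
    """Return the SHA-256 hex digest of a file's contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def one_way_sync(src: Path, dst: Path) -> list[str]:
    """Copy files from src to dst, skipping files that are already identical.

    Returns the relative paths (POSIX style) of the files that were copied.
    """
    copied = []
    for src_file in sorted(p for p in src.rglob("*") if p.is_file()):
        rel = src_file.relative_to(src)
        dst_file = dst / rel
        # Skip the copy when the target already holds the same bytes.
        if dst_file.is_file() and file_digest(dst_file) == file_digest(src_file):
            continue
        dst_file.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src_file, dst_file)  # copy2 also preserves timestamps
        copied.append(rel.as_posix())
    return copied
```

Running `one_way_sync` a second time on unchanged directories copies nothing, which is the time saving relative to a manual copy.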

Theoretical models

Several theoretical models of data synchronization exist in the research literature, and the problem is also related to the problem of Slepian–Wolf coding in information theory. The models are classified based on how they consider the data to be synchronized.

Unordered data

The problem of synchronizing unordered data (also known as the set reconciliation problem) is modeled as an attempt to compute the symmetric difference between two remote sets, S_A and S_B, of b-bit numbers.

Some solutions to this problem are typified by:

* Wholesale transfer: In this case all data is transferred to one host for a local comparison.

* Timestamp synchronization: In this case all changes to the data are marked with timestamps. Synchronization proceeds by transferring all data with a timestamp later than the previous synchronization.

* Mathematical synchronization: In this case data are treated as mathematical objects and synchronization corresponds to a mathematical process.
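The first two approaches above can be sketched in a few lines. This is an illustrative sketch only: the function names are hypothetical, the "remote" sets are ordinary in-memory Python sets, and the timestamped store is assumed to map each key to a `(timestamp, value)` pair.

```python
def wholesale_difference(local: set[int], remote: set[int]) -> set[int]:
    """Wholesale transfer: with both sets on one host, compare them locally.

    The result is the symmetric difference: elements in exactly one set.
    """
    return local ^ remote


def changes_since(
    store: dict[str, tuple[float, str]], last_sync: float
) -> dict[str, tuple[float, str]]:
    """Timestamp synchronization: select entries changed after last_sync."""
    return {key: entry for key, entry in store.items() if entry[0] > last_sync}


def apply_changes(
    target: dict[str, tuple[float, str]],
    changes: dict[str, tuple[float, str]],
) -> None:
    """Apply transferred entries, keeping whichever copy is newer."""
    for key, (timestamp, value) in changes.items():
        if key not in target or target[key][0] < timestamp:
            target[key] = (timestamp, value)
```

Wholesale transfer costs bandwidth proportional to the whole data set, while timestamp synchronization transfers only what changed since the last run but relies on the timestamps being trustworthy.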

Ordered data

In this case, two remote strings, σ_A and σ_B, need to be reconciled. Typically, it is assumed that these strings differ by up to a fixed number of edits (i.e. character insertions, deletions, or modifications). Then data synchronization is the process of reducing the edit distance between σ_A and σ_B, up to the ideal distance of zero. This is applied in all filesystem-based synchronizations (where the data is ordered). Many practical applications of this are discussed or referenced above.
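To make the notion of edit distance concrete, the following sketch computes the Levenshtein distance (insertions, deletions, and substitutions, each with cost 1) with the standard dynamic-programming recurrence. The function name is hypothetical, and both strings are assumed to be available locally, which sidesteps the actual difficulty of the remote setting.

```python
def edit_distance(a: str, b: str) -> int:
    """Return the minimum number of single-character edits turning a into b."""
    # previous[j] holds the distance between the processed prefix of a and b[:j].
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]  # turning a[:i] into the empty string takes i deletions
        for j, char_b in enumerate(b, start=1):
            current.append(
                min(
                    previous[j] + 1,  # delete char_a
                    current[j - 1] + 1,  # insert char_b
                    previous[j - 1] + (char_a != char_b),  # substitute or match
                )
            )
        previous = current
    return previous[-1]
```

Two copies are fully synchronized exactly when this function returns zero for them.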

It is sometimes possible to transform the problem to one of unordered data through a process known as shingling (splitting the strings into ''shingles'').
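A minimal sketch of shingling follows, assuming fixed-length character shingles; the function name and the default length of 3 are illustrative choices, not taken from the literature. Once each string is reduced to a set of shingles, the set reconciliation techniques for unordered data apply.

```python
def shingles(text: str, k: int = 3) -> set[str]:
    """Split text into the set of its overlapping length-k substrings."""
    if k <= 0:
        raise ValueError("k must be positive")
    if len(text) < k:
        # A string shorter than k is treated as a single shingle.
        return {text} if text else set()
    return {text[i : i + k] for i in range(len(text) - k + 1)}
```

Note that a plain set discards both the order and the multiplicity of the shingles, so rebuilding the original string from them needs extra information that this sketch does not keep.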

Error handling

In fault-tolerant systems, distributed databases must be able to cope with the loss or corruption of (part of) their data. The first step is usually replication, which involves making multiple copies of the data and keeping them all up to date as changes are made. However, it is then necessary to decide which copy to rely on when loss or corruption of an instance occurs. The simplest approach is to have a single master instance that is the sole source of truth. Changes to it are replicated to other instances, and one of those instances becomes the new master when the old master fails. Paxos and Raft are more complex protocols that exist to solve problems with transient effects during failover, such as two instances thinking they are the master at the same time.
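The single-master approach can be sketched as follows. This is a deliberately naive, single-process illustration with hypothetical class names: failure is simulated by an `alive` flag, and failover simply promotes the first live instance. It does nothing about the split-brain situation that protocols such as Paxos and Raft are designed to handle.

```python
class Instance:
    """One copy of the data; `alive` simulates whether the node is reachable."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.data: dict[str, int] = {}
        self.alive = True


class Cluster:
    """Single-master replication with a naive failover rule."""

    def __init__(self, names: list[str]) -> None:
        self.instances = [Instance(name) for name in names]
        self.master = self.instances[0]

    def fail_over(self) -> None:
        """Promote the first live instance to master."""
        for instance in self.instances:
            if instance.alive:
                self.master = instance
                return
        raise RuntimeError("no live instance left")

    def write(self, key: str, value: int) -> None:
        """Write to the master, then replicate to every live replica."""
        if not self.master.alive:
            self.fail_over()
        self.master.data[key] = value
        for instance in self.instances:
            if instance is not self.master and instance.alive:
                instance.data[key] = value
```

Because every write reaches all live replicas before returning, whichever replica is promoted already holds the full data set; a real system would also have to bring a recovered node back up to date.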

Secret sharing is useful if failures of whole nodes are very common. This moves synchronization from an explicit recovery process to being part of each read, where a read of some data requires retrieving encoded data from several different nodes. If corrupt or out-of-date data may be present on some nodes, this approach may also benefit from the use of an error correction code.
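As an illustration of a read that needs encoded data from several nodes, the sketch below uses Shamir's threshold scheme, one well-known form of secret sharing; the text does not say which scheme is meant, so this choice is an assumption. The function names and the choice of prime field are also illustrative. Any `k` of the `n` shares recover the value, so up to `n - k` nodes may be unavailable.

```python
import random

# Field modulus: the Mersenne prime 2**61 - 1 (secrets must be smaller than it).
PRIME = 2**61 - 1


def split_secret(secret: int, n: int, k: int) -> list[tuple[int, int]]:
    """Encode `secret` as n shares such that any k of them recover it."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret out of range")
    if not 1 <= k <= n:
        raise ValueError("need 1 <= k <= n")
    rng = random.SystemRandom()
    # Random polynomial of degree k - 1 whose constant term is the secret.
    coefficients = [secret] + [rng.randrange(PRIME) for _ in range(k - 1)]

    def evaluate(x: int) -> int:
        result = 0
        for coefficient in reversed(coefficients):  # Horner's rule
            result = (result * x + coefficient) % PRIME
        return result

    return [(x, evaluate(x)) for x in range(1, n + 1)]


def recover_secret(shares: list[tuple[int, int]]) -> int:
    """Lagrange-interpolate the polynomial at x = 0 from at least k shares."""
    secret = 0
    for i, (x_i, y_i) in enumerate(shares):
        numerator, denominator = 1, 1
        for j, (x_j, _) in enumerate(shares):
            if i != j:
                numerator = (numerator * -x_j) % PRIME
                denominator = (denominator * (x_i - x_j)) % PRIME
        # pow(..., -1, PRIME) is the modular inverse (Python 3.8+).
        term = y_i * numerator * pow(denominator, -1, PRIME)
        secret = (secret + term) % PRIME
    return secret
```

This sketch assumes the shares that are read back are correct; detecting or repairing corrupt or stale shares is where an error correction code would come in.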

DHTs and blockchains try to solve the problem of synchronization between many nodes (hundreds to billions).

See also

* SyncML, a standard mainly for calendar, contact and email synchronization

* Synchronization (computer science)
