Software-defined Network on:

[Wikipedia]

[Google]

[Amazon]

Software-defined networking (SDN) is an approach to

The following list defines and explains the SDN architectural components:

; SDN application

: SDN applications are programs that communicate their network requirements and desired network behavior to the SDN controller via a northbound interface (NBI). In addition, they may consume an abstracted view of the network for their internal decision-making purposes. An SDN Application consists of SDN application logic and one or more NBI drivers. SDN applications may themselves expose another layer of abstracted network control, thus offering one or more higher-level NBIs through respective NBI agents.

; SDN controller

: The SDN controller is a logically centralized entity in charge of (i) translating the requirements from the SDN application layer down to the SDN datapaths and (ii) providing the SDN applications with an abstract view of the network (which may include statistics and events). An SDN controller consists of one or more NBI agents, the SDN control logic, and the control to data-plane interface (CDPI) driver. The controller's definition as a logically centralized entity neither prescribes nor precludes implementation architectures such as the federation of multiple controllers, the hierarchical connection of controllers, communication interfaces between controllers, nor virtualization or slicing of network resources.

; SDN datapath

: The SDN datapath is a logical network device that exposes visibility and uncontested control over its advertised forwarding and data processing capabilities. The logical representation may encompass all or a subset of the capabilities. An SDN datapath consists of a CDPI agent and a set of one or more traffic forwarding engines and zero or more traffic processing functions. These engines and functions may include simple forwarding between the datapath's external interfaces or internal traffic processing or termination functions. One or more SDN datapaths may be contained in a single (physical) network element—an integrated physical combination of communications resources, managed as a unit. An SDN datapath may also be defined across multiple physical network elements. This logical definition neither prescribes nor precludes implementation details such as the logical to physical mapping, management of shared physical resources, virtualization or slicing of the SDN datapath, interoperability with non-SDN networking, nor the data processing functionality, which can include OSI layer 4-7 functions.

; SDN Control to Data-Plane Interface (CDPI)

: The SDN CDPI is the interface defined between an SDN controller and an SDN datapath, which provides at least programmatic control of all forwarding operations, capabilities advertisement, statistics reporting, and event notification. One value of SDN lies in the expectation that the CDPI is implemented in an open, vendor-neutral and interoperable way.

; SDN Northbound Interfaces (NBI)

: SDN NBIs are interfaces between SDN applications and SDN controllers and typically provide abstract network views and enable direct expression of network behavior and requirements. This may occur at any level of abstraction and across different sets of functionality.

The following list defines and explains the SDN architectural components:

; SDN application

: SDN applications are programs that communicate their network requirements and desired network behavior to the SDN controller via a northbound interface (NBI). In addition, they may consume an abstracted view of the network for their internal decision-making purposes. An SDN Application consists of SDN application logic and one or more NBI drivers. SDN applications may themselves expose another layer of abstracted network control, thus offering one or more higher-level NBIs through respective NBI agents.

; SDN controller

: The SDN controller is a logically centralized entity in charge of (i) translating the requirements from the SDN application layer down to the SDN datapaths and (ii) providing the SDN applications with an abstract view of the network (which may include statistics and events). An SDN controller consists of one or more NBI agents, the SDN control logic, and the control to data-plane interface (CDPI) driver. The controller's definition as a logically centralized entity neither prescribes nor precludes implementation architectures such as the federation of multiple controllers, the hierarchical connection of controllers, communication interfaces between controllers, nor virtualization or slicing of network resources.

; SDN datapath

: The SDN datapath is a logical network device that exposes visibility and uncontested control over its advertised forwarding and data processing capabilities. The logical representation may encompass all or a subset of the capabilities. An SDN datapath consists of a CDPI agent and a set of one or more traffic forwarding engines and zero or more traffic processing functions. These engines and functions may include simple forwarding between the datapath's external interfaces or internal traffic processing or termination functions. One or more SDN datapaths may be contained in a single (physical) network element—an integrated physical combination of communications resources, managed as a unit. An SDN datapath may also be defined across multiple physical network elements. This logical definition neither prescribes nor precludes implementation details such as the logical to physical mapping, management of shared physical resources, virtualization or slicing of the SDN datapath, interoperability with non-SDN networking, nor the data processing functionality, which can include OSI layer 4-7 functions.

; SDN Control to Data-Plane Interface (CDPI)

: The SDN CDPI is the interface defined between an SDN controller and an SDN datapath, which provides at least programmatic control of all forwarding operations, capabilities advertisement, statistics reporting, and event notification. One value of SDN lies in the expectation that the CDPI is implemented in an open, vendor-neutral and interoperable way.

; SDN Northbound Interfaces (NBI)

: SDN NBIs are interfaces between SDN applications and SDN controllers and typically provide abstract network views and enable direct expression of network behavior and requirements. This may occur at any level of abstraction and across different sets of functionality.

network management

Network management is the process of administering and managing computer networks. Services provided by this discipline include fault analysis, performance management, provisioning of networks and maintaining quality of service. Network managem ...

that uses abstraction to enable dynamic and programmatically efficient network configuration to create grouping and segmentation while improving network performance and monitoring in a manner more akin to cloud computing

Cloud computing is "a paradigm for enabling network access to a scalable and elastic pool of shareable physical or virtual resources with self-service provisioning and administration on-demand," according to International Organization for ...

than to traditional network management. SDN is meant to improve the static architecture of traditional networks and may be employed to centralize network intelligence in one network component by disassociating the forwarding process of network packet

In telecommunications and computer networking, a network packet is a formatted unit of Data (computing), data carried by a packet-switched network. A packet consists of control information and user data; the latter is also known as the ''Payload ...

s (data plane

In routing, the data plane, sometimes called the forwarding plane or user plane, defines the part of the router architecture that decides what to do with packets arriving on an inbound interface. Most commonly, it refers to a table in which the ...

) from the routing process (control plane

In network routing, the control plane is the part of the router architecture that is concerned with establishing the network topology, or the information in a routing table that defines what to do with incoming packets. Control plane functions, ...

). The control plane consists of one or more controllers, which are considered the brains of the SDN network, where the whole intelligence is incorporated. However, centralization has certain drawbacks related to security, scalability and elasticity.

SDN was commonly associated with the OpenFlow

OpenFlow is a communications protocol that gives access to the forwarding plane of a network switch or router (computing), router over the network.

Description

OpenFlow enables network controllers to determine the path of network packets across ...

protocol for remote communication with network plane elements to determine the path of network packet

In telecommunications and computer networking, a network packet is a formatted unit of Data (computing), data carried by a packet-switched network. A packet consists of control information and user data; the latter is also known as the ''Payload ...

s across network switch

A network switch (also called switching hub, bridging hub, Ethernet switch, and, by the IEEE, MAC bridge) is networking hardware that connects devices on a computer network by using packet switching to receive and forward data to the destinat ...

es since OpenFlow's emergence in 2011. However, since 2012, proprietary systems have also used the term. These include Cisco Systems

Cisco Systems, Inc. (using the trademark Cisco) is an American multinational corporation, multinational digital communications technology conglomerate (company), conglomerate corporation headquartered in San Jose, California. Cisco develops, m ...

' Open Network Environment and Nicira's network virtualization platform.

SD-WAN applies similar technology to a wide area network

A wide area network (WAN) is a telecommunications network that extends over a large geographic area. Wide area networks are often established with leased telecommunication circuits.

Businesses, as well as schools and government entities, use ...

(WAN).

History

The history of SDN principles can be traced back to the separation of the control and data plane first used in public switched telephone networks. This provided a manner of simplifying provisioning and management years before the architecture was used in data networks. TheInternet Engineering Task Force

The Internet Engineering Task Force (IETF) is a standards organization for the Internet standard, Internet and is responsible for the technical standards that make up the Internet protocol suite (TCP/IP). It has no formal membership roster ...

(IETF) began considering various ways to decouple the control and data forwarding functions in a proposed interface standard published in 2004 named Forwarding and Control Element Separation (ForCES). The ForCES Working Group also proposed a companion SoftRouter architecture. Additional early standards from the IETF that pursued separating control from data include the Linux Netlink as an IP services protocol and a path computation element (PCE)-based architecture.

These early attempts failed to gain traction. One reason is that many in the Internet community viewed separating control from data to be risky, especially given the potential for failure in the control plane. Another reason is that vendors were concerned that creating standard application programming interfaces (APIs) between the control and data planes would result in increased competition.

The use of open-source software in these separated architectures traces its roots to the Ethane project at Stanford

Leland Stanford Junior University, commonly referred to as Stanford University, is a private research university in Stanford, California, United States. It was founded in 1885 by railroad magnate Leland Stanford (the eighth governor of and th ...

's computer science department. Ethane's simple switch design led to the creation of OpenFlow, and an API for OpenFlow was first created in 2008. In that same year, NOX, an operating system for networks, was created.

SDN research included emulator

In computing, an emulator is Computer hardware, hardware or software that enables one computer system (called the ''host'') to behave like another computer system (called the ''guest''). An emulator typically enables the host system to run sof ...

s such as vSDNEmul, EstiNet, and Mininet.

Work on OpenFlow continued at Stanford, including with the creation of testbeds to evaluate the use of the protocol in a single campus network, as well as across the WAN as a backbone for connecting multiple campuses. In academic settings, there were several research and production networks based on OpenFlow switches from NEC

is a Japanese multinational information technology and electronics corporation, headquartered at the NEC Supertower in Minato, Tokyo, Japan. It provides IT and network solutions, including cloud computing, artificial intelligence (AI), Inte ...

and Hewlett-Packard

The Hewlett-Packard Company, commonly shortened to Hewlett-Packard ( ) or HP, was an American multinational information technology company. It was founded by Bill Hewlett and David Packard in 1939 in a one-car garage in Palo Alto, California ...

, as well as those based on Quanta Computer

Quanta Computer Incorporated () () is a Taiwan-based contracted manufacturer of electronic hardware.

Quanta's business extends to enterprise network systems, home entertainment, mobile communication, automotive electronics, and digital home ma ...

whiteboxes starting in about 2009.

Beyond academia, the first deployments were by Nicira in 2010 to control OVS from Onix, codeveloped with NTT and Google. A notable deployment was Google

Google LLC (, ) is an American multinational corporation and technology company focusing on online advertising, search engine technology, cloud computing, computer software, quantum computing, e-commerce, consumer electronics, and artificial ...

's B4 in 2012. Later, Google announced the first OpenFlow/Onix deployments in is datacenters. Another large deployment exists at China Mobile

China Mobile is the trade name of both China Mobile Limited and its ultimate controlling shareholder, China Mobile Communications Group Co., Ltd., a Chinese State-owned enterprises of China, state-owned telecommunicationsStrait deals ''The Econ ...

.

The Open Networking Foundation

The Open Networking Foundation (ONF) was a non-profit operator-led consortium. It used an open source business model aimed at promoting networking through software-defined networking (SDN) and standardizing the OpenFlow protocol and related te ...

was founded in 2011 to promote SDN and OpenFlow.

At the 2014 Interop and Tech Field Day, software-defined networking was demonstrated by Avaya

Avaya LLC(), formerly Avaya Inc., is an American multinational technology company headquartered in Morristown, New Jersey, that provides cloud communications and workstream collaboration services. The company's platform includes unified commun ...

using shortest-path bridging ( IEEE 802.1aq) and OpenStack

OpenStack is a free, open standard cloud computing platform. It is mostly deployed as infrastructure-as-a-service (IaaS) in both public and private clouds where virtual servers and other resources are made available to users. The software pla ...

as an automated campus, extending automation from the data center to the end device and removing manual provisioning from service delivery.

Concept

SDN architectures decouple network control (control plane

In network routing, the control plane is the part of the router architecture that is concerned with establishing the network topology, or the information in a routing table that defines what to do with incoming packets. Control plane functions, ...

) and forwarding (data plane

In routing, the data plane, sometimes called the forwarding plane or user plane, defines the part of the router architecture that decides what to do with packets arriving on an inbound interface. Most commonly, it refers to a table in which the ...

) functions, enabling the network control to become directly programmable and the underlying infrastructure to be abstracted from applications and network services.

The OpenFlow protocol can be used in SDN technologies. The SDN architecture is:

* Directly programmable: Network control is directly programmable because it is decoupled from forwarding functions.

* Agile: Abstracting control from forwarding lets administrators dynamically adjust network-wide traffic flow

In transportation engineering, traffic flow is the study of interactions between travellers (including pedestrians, cyclists, drivers, and their vehicles) and infrastructure (including highways, signage, and traffic control devices), with the ai ...

to meet changing needs.

* Centrally managed: Network intelligence is (logically) centralized in software-based SDN controllers that maintain a global view of the network, which appears to applications and policy engines as a single, logical switch.

* Programmatically configured: SDN lets network managers configure, manage, secure, and optimize network resources very quickly via dynamic, automated SDN programs, which they can write themselves because the programs do not depend on proprietary software.

* Open standards-based and vendor-neutral: When implemented through open standards, SDN simplifies network design and operation because instructions are provided by SDN controllers instead of multiple, vendor-specific devices and protocols.

New network architecture

The explosion of mobile devices and content, server virtualization, and the advent of cloud services are among the trends driving the networking industry to re-examine traditional network architectures. Many conventional networks are hierarchical, built with tiers of Ethernet switches arranged in a tree structure. This design made sense when client-server computing was dominant, but such a static architecture may be ill-suited to the dynamic computing and storage needs of today's enterprise data centers, campuses, and carrier environments. Some of the key computing trends driving the need for a new network paradigm include: ; Changing traffic patterns : Within the enterprise data center, traffic patterns have changed significantly. In contrast to client-server applications where the bulk of the communication occurs between one client and one server, today's applications access different databases and servers, creating a flurry of east-west machine-to-machine traffic before returning data to the end user device in the classicnorth-south traffic

In computer networking, north-south traffic is network traffic flowing into and out of a data center.

Traffic

Based on the most commonly deployed network topology of systems within a data center, north–south traffic typically indicates data flow ...

pattern. At the same time, users are changing network traffic patterns as they push for access to corporate content and applications from any type of device, connecting from anywhere, at any time. Finally, many enterprise data center managers are deploying a utility computing model, which may include a private cloud, public cloud, or some mix of both, resulting in additional traffic across the wide-area network.

; The consumerization of IT

: Users are increasingly employing mobile personal devices such as smartphones, tablets, and notebooks to access the corporate network. IT is under pressure to accommodate these personal devices in a fine-grained manner while protecting corporate data and intellectual property and meeting compliance mandates.

; The rise of cloud services

: Enterprises have enthusiastically embraced both public and private cloud services, resulting in unprecedented growth of these services. Many enterprise businesses want the agility to access applications, infrastructure and other IT resources on demand and discretely. IT planning for cloud services must be performed in an environment of increased security, compliance and auditing requirements, along with business reorganizations, consolidations and mergers that can rapidly change assumptions. Providing self-service provisioning, whether in a private or public cloud, requires elastic scaling of computing, storage and network resources, ideally from a common viewpoint and with a common suite of tools.

; Big data means more bandwidth

: Handling today's big data

Big data primarily refers to data sets that are too large or complex to be dealt with by traditional data processing, data-processing application software, software. Data with many entries (rows) offer greater statistical power, while data with ...

requires massive parallel processing on thousands of servers, all of which need direct connections to each other. The rise of these large data set

A data set (or dataset) is a collection of data. In the case of tabular data, a data set corresponds to one or more table (database), database tables, where every column (database), column of a table represents a particular Variable (computer sci ...

s is fueling a constant demand for additional network capacity in the data center. Operators of hyperscale data center networks face the daunting task of scaling the network to previously unimaginable size, maintaining any-to-any connectivity within a limited budget.

; Energy use in large data centers

: As Internet of things

Internet of things (IoT) describes devices with sensors, processing ability, software and other technologies that connect and exchange data with other devices and systems over the Internet or other communication networks. The IoT encompasse ...

, cloud computing

Cloud computing is "a paradigm for enabling network access to a scalable and elastic pool of shareable physical or virtual resources with self-service provisioning and administration on-demand," according to International Organization for ...

and SaaS

Software as a service (SaaS ) is a cloud computing service model where the provider offers use of application software to a client and manages all needed physical and software resources. SaaS is usually accessed via a web application. Unlike oth ...

emerged, the need for larger data centers has increased the energy consumption of those facilities. Many researchers have improved SDN's energy efficiency Energy efficiency may refer to:

* Energy efficiency (physics), the ratio between the useful output and input of an energy conversion process

** Electrical efficiency, useful power output per electrical power consumed

** Mechanical efficiency, a rat ...

applying existing routing techniques to dynamically adjust the network data plane to save energy. Also techniques to improve control plane energy efficiency are being researched.

Architectural components

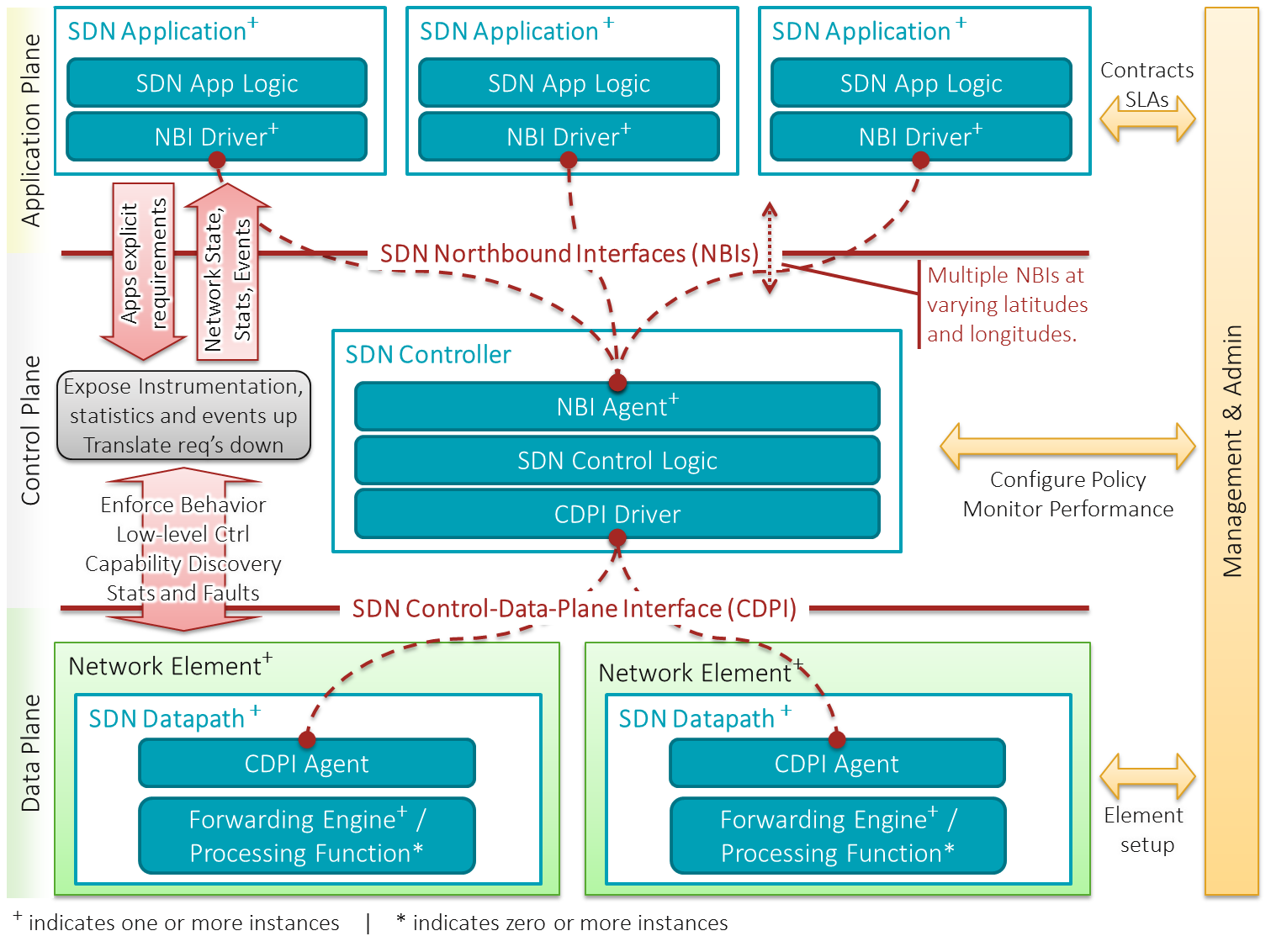

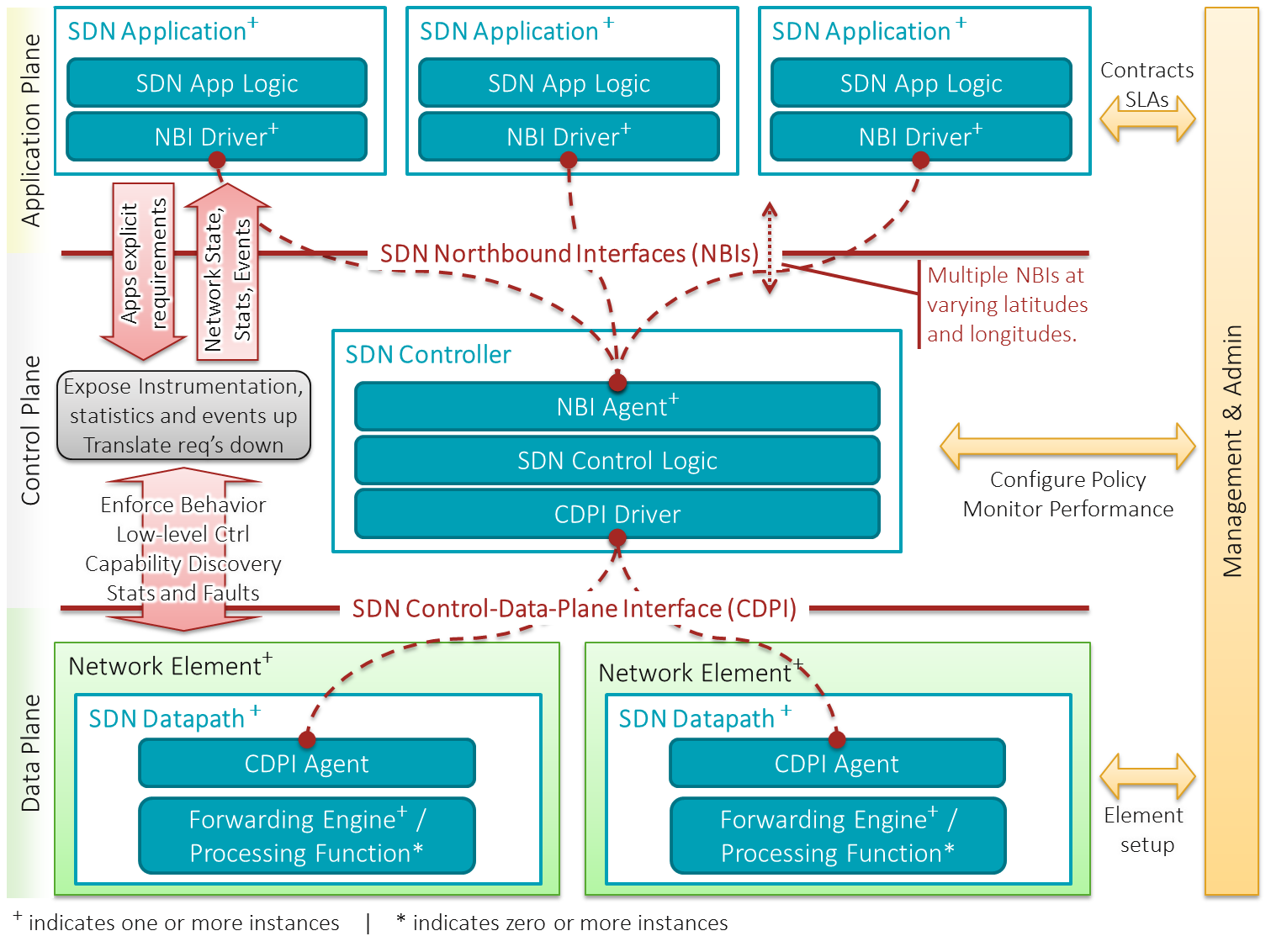

The following list defines and explains the SDN architectural components:

; SDN application

: SDN applications are programs that communicate their network requirements and desired network behavior to the SDN controller via a northbound interface (NBI). In addition, they may consume an abstracted view of the network for their internal decision-making purposes. An SDN Application consists of SDN application logic and one or more NBI drivers. SDN applications may themselves expose another layer of abstracted network control, thus offering one or more higher-level NBIs through respective NBI agents.

; SDN controller

: The SDN controller is a logically centralized entity in charge of (i) translating the requirements from the SDN application layer down to the SDN datapaths and (ii) providing the SDN applications with an abstract view of the network (which may include statistics and events). An SDN controller consists of one or more NBI agents, the SDN control logic, and the control to data-plane interface (CDPI) driver. The controller's definition as a logically centralized entity neither prescribes nor precludes implementation architectures such as the federation of multiple controllers, the hierarchical connection of controllers, communication interfaces between controllers, nor virtualization or slicing of network resources.

; SDN datapath

: The SDN datapath is a logical network device that exposes visibility and uncontested control over its advertised forwarding and data processing capabilities. The logical representation may encompass all or a subset of the capabilities. An SDN datapath consists of a CDPI agent and a set of one or more traffic forwarding engines and zero or more traffic processing functions. These engines and functions may include simple forwarding between the datapath's external interfaces or internal traffic processing or termination functions. One or more SDN datapaths may be contained in a single (physical) network element—an integrated physical combination of communications resources, managed as a unit. An SDN datapath may also be defined across multiple physical network elements. This logical definition neither prescribes nor precludes implementation details such as the logical to physical mapping, management of shared physical resources, virtualization or slicing of the SDN datapath, interoperability with non-SDN networking, nor the data processing functionality, which can include OSI layer 4-7 functions.

; SDN Control to Data-Plane Interface (CDPI)

: The SDN CDPI is the interface defined between an SDN controller and an SDN datapath, which provides at least programmatic control of all forwarding operations, capabilities advertisement, statistics reporting, and event notification. One value of SDN lies in the expectation that the CDPI is implemented in an open, vendor-neutral and interoperable way.

; SDN Northbound Interfaces (NBI)

: SDN NBIs are interfaces between SDN applications and SDN controllers and typically provide abstract network views and enable direct expression of network behavior and requirements. This may occur at any level of abstraction and across different sets of functionality.

The following list defines and explains the SDN architectural components:

; SDN application

: SDN applications are programs that communicate their network requirements and desired network behavior to the SDN controller via a northbound interface (NBI). In addition, they may consume an abstracted view of the network for their internal decision-making purposes. An SDN Application consists of SDN application logic and one or more NBI drivers. SDN applications may themselves expose another layer of abstracted network control, thus offering one or more higher-level NBIs through respective NBI agents.

; SDN controller

: The SDN controller is a logically centralized entity in charge of (i) translating the requirements from the SDN application layer down to the SDN datapaths and (ii) providing the SDN applications with an abstract view of the network (which may include statistics and events). An SDN controller consists of one or more NBI agents, the SDN control logic, and the control to data-plane interface (CDPI) driver. The controller's definition as a logically centralized entity neither prescribes nor precludes implementation architectures such as the federation of multiple controllers, the hierarchical connection of controllers, communication interfaces between controllers, nor virtualization or slicing of network resources.

; SDN datapath

: The SDN datapath is a logical network device that exposes visibility and uncontested control over its advertised forwarding and data processing capabilities. The logical representation may encompass all or a subset of the capabilities. An SDN datapath consists of a CDPI agent and a set of one or more traffic forwarding engines and zero or more traffic processing functions. These engines and functions may include simple forwarding between the datapath's external interfaces or internal traffic processing or termination functions. One or more SDN datapaths may be contained in a single (physical) network element—an integrated physical combination of communications resources, managed as a unit. An SDN datapath may also be defined across multiple physical network elements. This logical definition neither prescribes nor precludes implementation details such as the logical to physical mapping, management of shared physical resources, virtualization or slicing of the SDN datapath, interoperability with non-SDN networking, nor the data processing functionality, which can include OSI layer 4-7 functions.

; SDN Control to Data-Plane Interface (CDPI)

: The SDN CDPI is the interface defined between an SDN controller and an SDN datapath, which provides at least programmatic control of all forwarding operations, capabilities advertisement, statistics reporting, and event notification. One value of SDN lies in the expectation that the CDPI is implemented in an open, vendor-neutral and interoperable way.

; SDN Northbound Interfaces (NBI)

: SDN NBIs are interfaces between SDN applications and SDN controllers and typically provide abstract network views and enable direct expression of network behavior and requirements. This may occur at any level of abstraction and across different sets of functionality.

SDN control plane

The implementation of the SDN control plane can follow a centralized, hierarchical, or decentralized design. Initial SDN control plane proposals focused on a centralized solution, where a single control entity had a global view of the network. While this simplifies the implementation of the control logic, it has scalability limitations as the size and dynamics of the network increase. To overcome these limitations, hierarchical and distributed approaches have been proposed. In hierarchical solutions, controllers operate on a partitioned network view, while decisions that require network-wide knowledge are taken by a logically centralized root controller. In distributed approaches, controllers operate on their local view or they may exchange synchronization messages to enhance their knowledge. Distributed solutions are more suitable for supporting adaptive SDN applications. A key issue when designing a distributed SDN control plane is to decide on the number and placement of control entities. An important parameter to consider while doing so is the propagation delay between the controllers and the network devices, especially in the context of large networks. Other objectives that have been considered involve control path reliability, fault tolerance, and application requirements.SDN Data Plane

In SDN, the data plane is responsible for processing data-carrying packets using a set of rules specified by the control plane. The data plane may be implemented in physical hardware switches or in software implementations, such as Open vSwitch. The memory capacity of hardware switches may limit the number of rules that can be stored where as software implementations may have higher capacity. The location of the SDN data plane and agent can be used to classify SDN implementations: * ''Hardware Switch-based SDNs:'' This approach implements the data plane processing inside a physical device. OpenFlow switches may use TCAM tables to route packet sequences (flows). These switches may use anASIC

An application-specific integrated circuit (ASIC ) is an integrated circuit (IC) chip customized for a particular use, rather than intended for general-purpose use, such as a chip designed to run in a digital voice recorder or a high-efficien ...

for its implementation.

* ''Software Switch-Based SDNs:'' Some physical switches may implement SDN support using software on the device, such as Open vSwitch, to populate flow tables and to act as the SDN agent when communicating with the controller. Hypervisor

A hypervisor, also known as a virtual machine monitor (VMM) or virtualizer, is a type of computer software, firmware or hardware that creates and runs virtual machines. A computer on which a hypervisor runs one or more virtual machines is called ...

s may likewise use software implementations to support SDN protocols in the virtual switches used to support their virtual machine

In computing, a virtual machine (VM) is the virtualization or emulator, emulation of a computer system. Virtual machines are based on computer architectures and provide the functionality of a physical computer. Their implementations may involve ...

s.

* ''Host-Based SDNs:'' Rather than deploying the data plane and SDN agent in network infrastructure, host-based SDNs deploy the SDN agent inside the operating system of the communicating endpoints. Such implementations can provide additional context about the application, user, and activity associated with network flows. To achieve the same traffic engineering capabilities of switch-based SDNs, host-based SDNs may require the use of carefully designed VLAN

A virtual local area network (VLAN) is any broadcast domain that is partitioned and isolated in a computer network at the data link layer ( OSI layer 2).IEEE 802.1Q-2011, ''1.4 VLAN aims and benefits'' In this context, virtual refers to a ...

and spanning tree

In the mathematical field of graph theory, a spanning tree ''T'' of an undirected graph ''G'' is a subgraph that is a tree which includes all of the vertices of ''G''. In general, a graph may have several spanning trees, but a graph that is no ...

assignments.

Flow table entries may be populated in a proactive, reactive, or hybrid fashion. In the proactive mode, the controller populates flow table entries for all possible traffic matches possible for this switch in advance. This mode can be compared with typical routing table entries today, where all static entries are installed ahead of time. Following this, no request is sent to the controller since all incoming flows will find a matching entry. A major advantage in proactive mode is that all packets are forwarded in line rate (considering all flow table entries in TCAM) and no delay is added. In the reactive mode, entries are populated on demand. If a packet arrives without a corresponding match rule in the flow table, the SDN agent sends a request to the controller for further instruction it the reactive mode. The controller examines the SDN agent requests and provides instructions, installing a rule in the flow table for the corresponding packet if necessary. The hybrid mode uses the low-latency proactive forwarding mode for a portion of traffic while relying on the flexibility of reactive mode processing for the remaining traffic.

Applications

SDMN

Software-defined mobile networking (SDMN) is an approach to the design of mobile networks where all protocol-specific features are implemented in software, maximizing the use of generic and commodity hardware and software in both thecore network

A backbone or core network is a part of a computer network which interconnects networks, providing a path for the exchange of information between different LANs or subnetworks. A backbone can tie together diverse networks in the same buildi ...

and radio access network

A radio access network (RAN) is part of a mobile telecommunication system implementing a radio access technology (RAT). Conceptually, it resides between a device such as a mobile phone, a computer, or any remotely controlled machine and provi ...

. It is proposed as an extension of SDN paradigm to incorporate mobile network

A cellular network or mobile network is a telecommunications network where the link to and from end nodes is wireless and the network is distributed over land areas called ''cells'', each served by at least one fixed-location transceiver (suc ...

specific functionalities. Since 3GPP Rel.14, a Control User Plane Separation was introduced in the Mobile Core Network architectures with the PFCP protocol.

SD-WAN

An SD-WAN is a WAN managed using the principles of software-defined networking. The main driver of SD-WAN is to lower WAN costs using more affordable and commercially available leased lines, as an alternative or partial replacement of more expensiveMPLS

Multiprotocol Label Switching (MPLS) is a routing technique in telecommunications networks that directs data from one node to the next based on labels rather than network addresses. Whereas network addresses identify endpoints, the labels identi ...

lines. Control and management is administered separately from the hardware with central controllers allowing for easier configuration and administration.

SD-LAN

An SD-LAN is aLocal area network

A local area network (LAN) is a computer network that interconnects computers within a limited area such as a residence, campus, or building, and has its network equipment and interconnects locally managed. LANs facilitate the distribution of da ...

(LAN) built around the principles of software-defined networking, though there are key differences in topology, network security, application visibility and control, management and quality of service. SD-LAN decouples control management, and data planes to enable a policy driven architecture for wired and wireless LANs. SD-LANs are characterized by their use of a cloud management system and wireless connectivity without the presence of a physical controller.

Security using the SDN paradigm

SDN architecture may enable, facilitate or enhance network-related security applications due to the controller's central view of the network, and its capacity to reprogram the data plane at any time. While the security of SDN architecture itself remains an open question that has already been studied a couple of times in the research community, the following paragraphs only focus on the security applications made possible or revisited using SDN. Several research works on SDN have already investigated security applications built upon the SDN controller, with different aims in mind. Distributed Denial of Service (DDoS) detection and mitigation, as well as botnet and worm propagation, are some concrete use-cases of such applications: basically, the idea consists in periodically collecting network statistics from the forwarding plane of the network in a standardized manner (e.g. using Openflow), and then apply classification algorithms on those statistics in order to detect any network anomalies. If an anomaly is detected, the application instructs the controller how to reprogram the data plane in order to mitigate it. Another kind of security application leverages the SDN controller by implementing some moving target defense (MTD) algorithms. MTD algorithms are typically used to make any attack on a given system or network more difficult than usual by periodically hiding or changing key properties of that system or network. In traditional networks, implementing MTD algorithms is not a trivial task since it is difficult to build a central authority able of determining - for each part of the system to be protected - which key properties are hidden or changed. In an SDN network, such tasks become more straightforward thanks to the centrality of the controller. One application can for example periodically assign virtual IPs to hosts within the network, and the mapping virtual IP/real IP is then performed by the controller. Another application can simulate some fake opened/closed/filtered ports on random hosts in the network in order to add significant noise during reconnaissance phase (e.g. scanning) performed by an attacker. Additional value regarding security in SDN enabled networks can also be gained using FlowVisor and FlowChecker respectively. The former tries to use a single hardware forwarding plane sharing multiple separated logical networks. Following this approach the same hardware resources can be used for production and development purposes as well as separating monitoring, configuration and internet traffic, where each scenario can have its own logical topology which is called slice. In conjunction with this approach FlowChecker realizes the validation of new OpenFlow rules that are deployed by users using their own slice. SDN controller applications are mostly deployed in large-scale scenarios, which requires comprehensive checks of possible programming errors. A system to do this called NICE was described in 2012. Introducing an overarching security architecture requires a comprehensive and protracted approach to SDN. Since it was introduced, designers are looking at possible ways to secure SDN that do not compromise scalability. One architecture called SN-SECA (SDN+NFV) Security Architecture.Group Data Delivery Using SDN

Distributed applications that run across datacenters usually replicate data for the purpose of synchronization, fault resiliency, load balancing and getting data closer to users (which reduces latency to users and increases their perceived throughput). Also, many applications, such as Hadoop, replicate data within a datacenter across multiple racks to increase fault tolerance and make data recovery easier. All of these operations require data delivery from one machine or datacenter to multiple machines or datacenters. The process of reliably delivering data from one machine to multiple machines is referred to as Reliable Group Data Delivery (RGDD). SDN switches can be used for RGDD via installation of rules that allow forwarding to multiple outgoing ports. For example, OpenFlow provides support for Group Tables since version 1.1 which makes this possible. Using SDN, a central controller can carefully and intelligently setup forwarding trees for RGDD. Such trees can be built while paying attention to network congestion/load status to improve performance. For example, MCTCP is a scheme for delivery to many nodes inside datacenters that relies on regular and structured topologies of datacenter networks while DCCast and QuickCast are approaches for fast and efficient data and content replication across datacenters over private WANs.Relationship to NFV

Network Function Virtualization

Network functions virtualization (NFV) is a network architecture concept that leverages IT virtualization technologies to virtualize entire classes of network node functions into building blocks that may connect, or chain together, to create and de ...

, or NFV for short, is a concept that complements SDN. Thus, NFV is not dependent on SDN or SDN concepts. NFV separates software from hardware to enable flexible network deployment and dynamic operation. NFV deployments typically use commodity servers to run network services software versions that previously were hardware-based. These software-based services that run in an NFV environment are called Virtual Network Functions (VNF). SDN-NFV hybrid program was provided for high efficiency, elastic and scalable capabilities NFV aimed at accelerating service innovation and provisioning using standard IT virtualization technologies. SDN provides the agility of controlling the generic forwarding devices such as the routers and switches by using SDN controllers. On the other hand, NFV agility is provided for the network applications by using virtualized servers. It is entirely possible to implement a virtualized network function (VNF) as a standalone entity using existing networking and orchestration paradigms. However, there are inherent benefits in leveraging SDN concepts to implement and manage an NFV infrastructure, particularly when looking at the management and orchestration of VNFs, and that's why multivendor platforms are being defined that incorporate SDN and NFV in concerted ecosystems.

Relationship to DPI

Deep packet inspection

Deep packet inspection (DPI) is a type of data processing that inspects in detail the data (Network packet, packets) being sent over a computer network, and may take actions such as alerting, blocking, re-routing, or logging it accordingly. Deep ...

(DPI) provides network with application-awareness, while SDN provides applications with network-awareness. Although SDN will radically change the generic network architectures, it should cope with working with traditional network architectures to offer high interoperability. The new SDN based network architecture should consider all the capabilities that are currently provided in separate devices or software other than the main forwarding devices (routers and switches) such as the DPI, security appliances

Quality of Experience (QoE) estimation using SDN

When using an SDN based model for transmitting multimedia traffic, an important aspect to take account is the QoE estimation. To estimate the QoE, first we have to be able to classify the traffic and then, it's recommended that the system can solve critical problems on its own by analyzing the traffic.See also

* Active networking * Frenetic (programming language) * IEEE 802.1aq * Intel Data Plane Development Kit (DPDK) * List of SDN controller software *Network functions virtualization

Network functions virtualization (NFV) is a network architecture concept that leverages IT virtualization technologies to virtualize entire classes of network node functions into building blocks that may connect, or chain together, to create and de ...

* Network virtualization

* ONOS fa:کاربر:CSF-404/ONOS

:fa:کاربر:CSF-404/ONOS, The ONOS (Open Network Operating System) project is an open source community hosted by The Linux Foundation. The goal of the project is to create a software-defined networking (SDN) operatin ...

* OpenDaylight Project

* SD-WAN

* Software-defined data center

* Software-defined mobile network

* Software-defined protection

* Virtual Distributed Ethernet

Virtual Distributed Ethernet (VDE) is a set of programs to provide virtual software-defined Ethernet network interface controllers (NICs) across multiple devices, typically computers, which are either virtual or physical. It forms part of the Vir ...

References

{{Authority control Configuration management Network architecture