F-score

In statistical analysis of binary classification and information retrieval systems, the F-score or F-measure is a measure of predictive performance. It is calculated from the precision and recall of the test, where the precision is the number of true positive results divided by the number of all samples predicted to be positive, including those not identified correctly, and the recall is the number of true positive results divided by the number of all samples that should have been identified as positive. Precision is also known as positive predictive value, and recall is also known as sensitivity in diagnostic binary classification.

The F1 score is the harmonic mean of the precision and recall. It thus symmetrically represents both precision and recall in one metric. The more generic $F_\beta$ score applies additional weights, valuing one of precision or recall more than the other.

The highest possible value of an F-score is 1.0, indicating perfect precision and recall, and the lowest possible value is 0, if the precision or the recall is zero.

Etymology

The name F-measure is believed to derive from a different F function in Van Rijsbergen's book, adopted when the measure was introduced to the Fourth Message Understanding Conference (MUC-4, 1992).

Definition

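The traditional F-measure or balanced F-score (F1 score) is the harmonic mean of precision and recall:

$$F_1 = \frac{2}{\mathrm{recall}^{-1} + \mathrm{precision}^{-1}} = 2\,\frac{\mathrm{precision} \cdot \mathrm{recall}}{\mathrm{precision} + \mathrm{recall}} = \frac{2\,\mathrm{TP}}{2\,\mathrm{TP} + \mathrm{FP} + \mathrm{FN}}$$

Here TP, FP, and FN are the numbers of true positives, false positives, and false negatives. With $\mathrm{precision} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FP}}$ and $\mathrm{recall} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FN}}$, it follows that the numerator of $F_1$ is the sum of their numerators and the denominator of $F_1$ is the sum of their denominators.

To see it as a harmonic mean, note that $F_1^{-1} = \frac{1}{2}\left(\mathrm{recall}^{-1} + \mathrm{precision}^{-1}\right)$.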
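As a minimal sketch of the definition above, the following Python computes precision, recall, and F1 from confusion-matrix counts and checks that the count-based form agrees with the harmonic mean. The function name, the example counts, and the choice to report undefined ratios as 0.0 are assumptions made for illustration.

```python
def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Return (precision, recall, F1) from confusion-matrix counts.

    Ratios with a zero denominator are reported as 0.0. That is a
    convention assumed for this sketch, not something the text prescribes.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical counts: 8 true positives, 2 false positives, 4 false negatives.
p, r, f1 = precision_recall_f1(tp=8, fp=2, fn=4)

# Harmonic mean of precision and recall, computed directly.
harmonic = 2 / (1 / p + 1 / r)

print(f"precision={p:.4f} recall={r:.4f} F1={f1:.4f} harmonic={harmonic:.4f}")
```

Both routes give the same value (16/22 for these counts), which is the identity stated in the text.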

Fβ score

A more general F score, $F_\beta$, that uses a positive real factor $\beta$, where $\beta$ is chosen such that recall is considered $\beta$ times as important as precision, is:

$$F_\beta = (1 + \beta^2) \cdot \frac{\mathrm{precision} \cdot \mathrm{recall}}{\beta^2 \cdot \mathrm{precision} + \mathrm{recall}}$$

To see it as a weighted harmonic mean, note that $F_\beta^{-1} = \frac{1}{\beta^2 + 1} \cdot \mathrm{precision}^{-1} + \frac{\beta^2}{\beta^2 + 1} \cdot \mathrm{recall}^{-1}$.

In terms of Type I and type II errors this becomes:

$$F_\beta = \frac{(1 + \beta^2) \cdot \mathrm{TP}}{(1 + \beta^2) \cdot \mathrm{TP} + \beta^2 \cdot \mathrm{FN} + \mathrm{FP}}$$

Two commonly used values for $\beta$ are 2, which weighs recall higher than precision, and 1/2, which weighs recall lower than precision.
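The weighting can be illustrated with a short Python sketch that evaluates the general score both from precision and recall and from error counts. The function names and the example counts are hypothetical, and returning 0.0 for a zero denominator is an assumed convention.

```python
def fbeta(precision: float, recall: float, beta: float = 1.0) -> float:
    """F-beta from precision and recall; beta must be a positive real."""
    if beta <= 0:
        raise ValueError("beta must be positive")
    b2 = beta * beta
    denom = b2 * precision + recall
    return (1 + b2) * precision * recall / denom if denom else 0.0


def fbeta_from_counts(tp: int, fp: int, fn: int, beta: float = 1.0) -> float:
    """F-beta written in terms of true positives and the two error types."""
    if beta <= 0:
        raise ValueError("beta must be positive")
    b2 = beta * beta
    denom = (1 + b2) * tp + b2 * fn + fp
    return (1 + b2) * tp / denom if denom else 0.0


# Hypothetical counts giving precision 0.8 and recall 0.5.
tp, fp, fn = 4, 1, 4
precision, recall = tp / (tp + fp), tp / (tp + fn)

for beta in (0.5, 1.0, 2.0):
    print(beta, fbeta(precision, recall, beta), fbeta_from_counts(tp, fp, fn, beta))
```

Because recall is the weaker of the two quantities in this example, the score drops as beta grows from 1/2 to 2 and recall receives more weight.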

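The F-measure was derived so that $F_\beta$ "measures the effectiveness of retrieval with respect to a user who attaches $\beta$ times as much importance to recall as precision". It is based on Van Rijsbergen's effectiveness measure

$$E = 1 - \left(\frac{\alpha}{p} + \frac{1 - \alpha}{r}\right)^{-1}$$

where $p$ is precision and $r$ is recall. Their relationship is $F_\beta = 1 - E$, where $\alpha = \frac{1}{1 + \beta^2}$.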
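The relationship between the two measures can be checked numerically. The sketch below is only an illustration: the function names and the example precision and recall values are assumptions, and both inputs are taken to be strictly positive so that the reciprocals exist.

```python
def fbeta(precision: float, recall: float, beta: float) -> float:
    """General F-score for strictly positive precision and recall."""
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


def e_measure(precision: float, recall: float, alpha: float) -> float:
    """Van Rijsbergen's effectiveness measure E for a weight alpha in (0, 1)."""
    return 1.0 - 1.0 / (alpha / precision + (1.0 - alpha) / recall)


precision, recall = 0.8, 0.5  # hypothetical values
for beta in (0.5, 1.0, 2.0):
    alpha = 1.0 / (1.0 + beta * beta)
    f = fbeta(precision, recall, beta)
    e = e_measure(precision, recall, alpha)
    # The two printed numbers should coincide up to rounding.
    print(beta, f, 1.0 - e)
```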

Diagnostic testing

This is related to the field of binary classification, where recall is often termed "sensitivity".

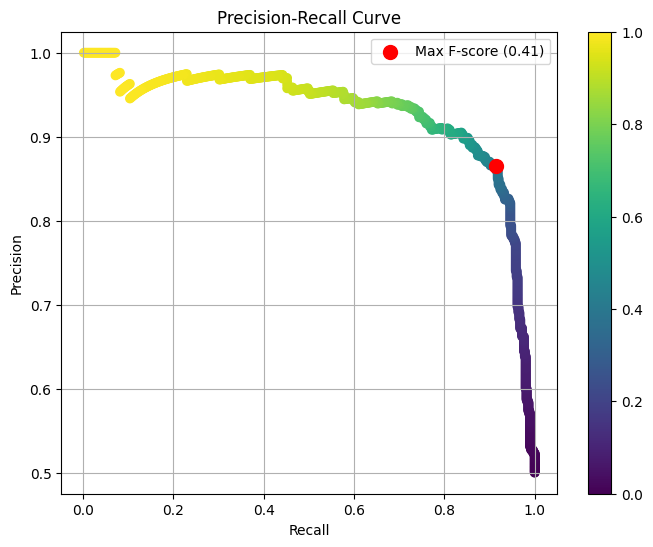

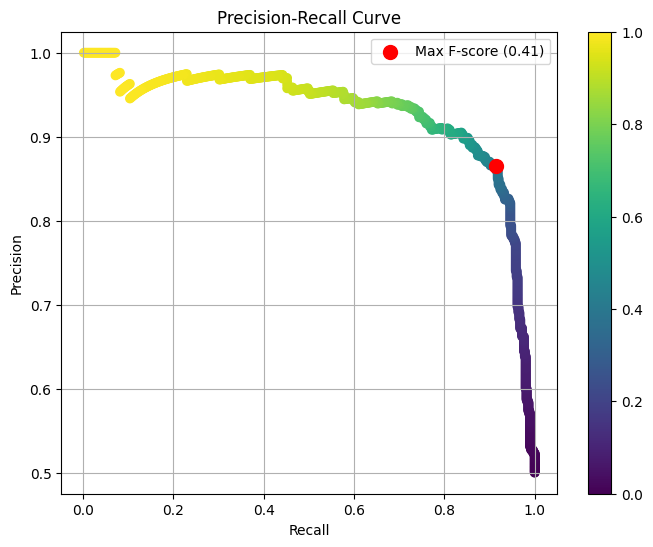

Dependence of the F-score on class imbalance

The precision-recall curve, and thus the $F_\beta$ score, explicitly depends on the ratio of positive to negative test cases. This means that comparing F-scores across problems with differing class ratios is problematic. One way to address this issue (see e.g., Siblini et al., 2020) is to use a standard class ratio when making such comparisons.

Applications

The F-score is often used in the field of information retrieval for measuring search, document classification, and query classification performance. It is particularly relevant in applications which are primarily concerned with the positive class and where the positive class is rare relative to the negative class.

Earlier works focused primarily on the F1 score, but with the proliferation of large-scale search engines, performance goals changed to place more emphasis on either precision or recall, and so $F_\beta$ is seen in wide application.

The F-score is also used in machine learning. However, the F-measures do not take true negatives into account, hence measures such as the Matthews correlation coefficient, Informedness or Cohen's kappa may be preferred to assess the performance of a binary classifier.
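To make the point about true negatives concrete, the following sketch holds the true positives and both error counts fixed and varies only the number of true negatives. The counts are hypothetical and the function names are chosen for this example; the Matthews correlation coefficient is computed from its usual confusion-matrix formula.

```python
import math


def f1_from_counts(tp: int, fp: int, fn: int) -> float:
    """F1 uses only true positives, false positives and false negatives."""
    return 2 * tp / (2 * tp + fp + fn)


def mcc(tp: int, fp: int, fn: int, tn: int) -> float:
    """Matthews correlation coefficient; 0.0 if the denominator vanishes."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0


tp, fp, fn = 90, 5, 5  # hypothetical counts, kept fixed
for tn in (0, 10, 1000):
    # F1 is identical on every row; MCC changes with the true negatives.
    print(tn, f1_from_counts(tp, fp, fn), mcc(tp, fp, fn, tn))
```

With no true negatives at all the MCC is slightly negative for these counts, while the F1 score stays at the same high value throughout.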

The F-score has been widely used in the natural language processing literature, such as in the evaluation of named entity recognition and word segmentation.

Properties

* The F1 score is the Dice coefficient of the set of retrieved items and the set of relevant items.

* The F1 score of a classifier which always predicts the positive class converges to 1 as the probability of the positive class increases.

* The F1 score of a classifier which always predicts the positive class is equal to 2 * proportion_of_positive_class / (1 + proportion_of_positive_class), since the recall is 1 and the precision is equal to the proportion of the positive class.

* If the scoring model is uninformative (cannot distinguish between the positive and negative class), then the optimal threshold is 0, so that the positive class is always predicted.

* The F1 score is concave in the true positive rate.
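The closed form for the always-positive classifier can be checked with a few lines of Python. This is a sketch under the assumption of a hypothetical data set of 100 samples; the function name is invented for the example.

```python
def f1_always_positive(n_pos: int, n_neg: int) -> float:
    """F1 of a classifier that labels every sample as positive.

    Every positive is a true positive, every negative is a false
    positive, and there are no false negatives.
    """
    tp, fp, fn = n_pos, n_neg, 0
    return 2 * tp / (2 * tp + fp + fn)


total = 100  # hypothetical data set size
for n_pos in (10, 50, 90, 99):
    p = n_pos / total  # proportion of the positive class
    closed_form = 2 * p / (1 + p)
    # The two printed values agree, and both approach 1 as p grows.
    print(p, f1_always_positive(n_pos, total - n_pos), closed_form)
```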

Criticism

David Hand and others criticize the widespread use of the F1 score since it gives equal importance to precision and recall. In practice, different types of misclassification incur different costs; in other words, the relative importance of precision and recall is an aspect of the problem.

According to Davide Chicco and Giuseppe Jurman, the F1 score is less truthful and informative than the Matthews correlation coefficient (MCC) in the evaluation of binary classification.

David M. W. Powers has pointed out that F1 ignores the true negatives and is thus misleading for unbalanced classes, while kappa and correlation measures are symmetric and assess both directions of predictability: the classifier predicting the true class and the true class predicting the classifier prediction. He proposes separate multiclass measures, Informedness and Markedness, for the two directions, noting that their geometric mean is correlation.

Another source of critique of F1 is its lack of symmetry: its value may change when the dataset labeling is swapped, that is, when the "positive" samples are renamed "negative" and vice versa.

This criticism is met by the P4 metric definition, which is sometimes indicated as a symmetrical extension of F1.
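The lack of symmetry is easy to reproduce. In the sketch below, which uses hypothetical counts for a rare positive class and invented function names, the same set of predictions is scored twice, once with the original labeling and once with the two class names exchanged.

```python
def f1_from_counts(tp: int, fp: int, fn: int) -> float:
    """F1 from true positives, false positives and false negatives."""
    return 2 * tp / (2 * tp + fp + fn)


def swap_labels(tp: int, fp: int, fn: int, tn: int) -> tuple[int, int, int, int]:
    """Confusion-matrix counts after renaming positive <-> negative.

    Old true negatives become true positives, old false negatives become
    false positives, and so on. Returns the new (tp, fp, fn, tn).
    """
    return tn, fn, fp, tp


# Hypothetical counts with a rare positive class.
tp, fp, fn, tn = 10, 5, 5, 980

original = f1_from_counts(tp, fp, fn)
s_tp, s_fp, s_fn, _ = swap_labels(tp, fp, fn, tn)
swapped = f1_from_counts(s_tp, s_fp, s_fn)

# Same predictions, different F1 depending on which class is called positive.
print(original, swapped)
```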

Finally, Ferrer and Dyrland et al. argue that the expected cost (or its counterpart, the expected utility) is the only principled metric for evaluation of classification decisions, having various advantages over the F-score and the MCC. Both works show that the F-score can result in wrong conclusions about the absolute and relative quality of systems.

Difference from Fowlkes–Mallows index

While the F-measure is the harmonic mean of recall and precision, the Fowlkes–Mallows index is their geometric mean.
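The difference between the two averages can be seen on a single hypothetical pair of values, here using the `harmonic_mean` and `geometric_mean` functions from Python's standard `statistics` module (the latter requires Python 3.8 or newer). The precision and recall values are assumptions chosen for illustration.

```python
from statistics import geometric_mean, harmonic_mean

precision, recall = 0.8, 0.5  # hypothetical, strictly positive values

f1 = harmonic_mean([precision, recall])               # F-measure
fowlkes_mallows = geometric_mean([precision, recall])  # Fowlkes-Mallows index

# For positive inputs the harmonic mean never exceeds the geometric mean,
# so F1 penalises a gap between precision and recall more strongly.
print(f1, fowlkes_mallows)
```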

Extension to multi-class classification

The F-score is also used for evaluating classification problems with more than two classes (multiclass classification). A common method is to average the F-score over each class, aiming at a balanced measurement of performance.

Macro F1

Macro F1 is a macro-averaged F1 score aiming at a balanced performance measurement. To calculate macro F1, two different averaging formulas have been used: the F1 score of the (arithmetic) class-wise precision and recall means, or the arithmetic mean of the class-wise F1 scores, where the latter exhibits more desirable properties.

Micro F1

Micro F1 is the harmonic mean of micro precision and micro recall. In single-label multi-class classification, micro precision equals micro recall, thus micro F1 is equal to both. However, contrary to a common misconception, micro F1 does not generally equal accuracy, because accuracy takes true negatives into account while micro F1 does not.

See also

* BLEU

* Confusion matrix

* Hypothesis tests for accuracy

* METEOR

* NIST (metric)

* Receiver operating characteristic

* ROUGE (metric)

* Uncertainty coefficient, aka Proficiency

* Word error rate

* LEPOR
