Digital image processing


```matlab
img = checkerboard(20);                  % generate a checkerboard test image

% ************************** SPATIAL DOMAIN ***************************
klaplace = [0 -1 0; -1 5 -1; 0 -1 0];    % 3x3 Laplacian sharpening kernel
X = conv2(img, klaplace);                % convolve the test image with the
                                         % 3x3 Laplacian kernel
figure()
imshow(X, [])                            % show the Laplacian-filtered image
title('Laplacian Edge Detection')
```

A Brief, Early History of Computer Graphics in Film, Larry Yaeger, 16 August 2002 (last update), retrieved 24 March 2010.

Image processing is also widely used to produce the

Face detection can be implemented with mathematical morphology, the


In discrete time, the area of gray level histogram is (see figure 1) while the area of uniform distribution is (see figure 2). It is clear that the area will not change, so .

From the uniform distribution, the probability of is while the

In continuous time, the equation is .

Moreover, based on the definition of a function, the Gray level histogram method is like finding a function that satisfies f(p)=q.

{, class="wikitable"

, -

! Improvement method

! Issue

! Before improvement

! Process

! After improvement

, -

, -

, Smoothing method

, noise

with Matlab, salt & pepper with 0.01 parameter is added

In discrete time, the area of gray level histogram is (see figure 1) while the area of uniform distribution is (see figure 2). It is clear that the area will not change, so .

From the uniform distribution, the probability of is while the

In continuous time, the equation is .

Moreover, based on the definition of a function, the Gray level histogram method is like finding a function that satisfies f(p)=q.

{, class="wikitable"

, -

! Improvement method

! Issue

! Before improvement

! Process

! After improvement

, -

, -

, Smoothing method

, noise

with Matlab, salt & pepper with 0.01 parameter is added

to the original image in order to create a noisy image. , ,

# read image and convert image into grayscale

# convolution the graysale image with the mask

# denoisy image will be the result of step 2.

,

,

# read image and convert image into grayscale

# convolution the graysale image with the mask

# denoisy image will be the result of step 2.

,  , -

, -

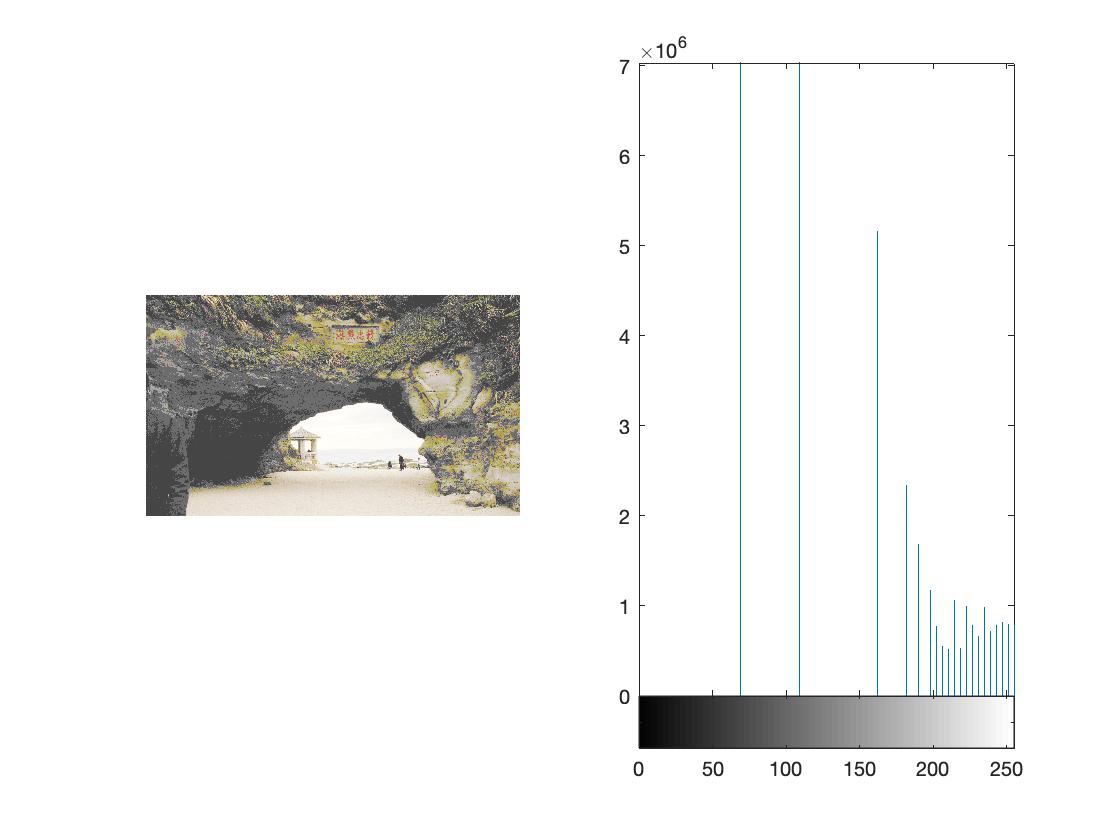

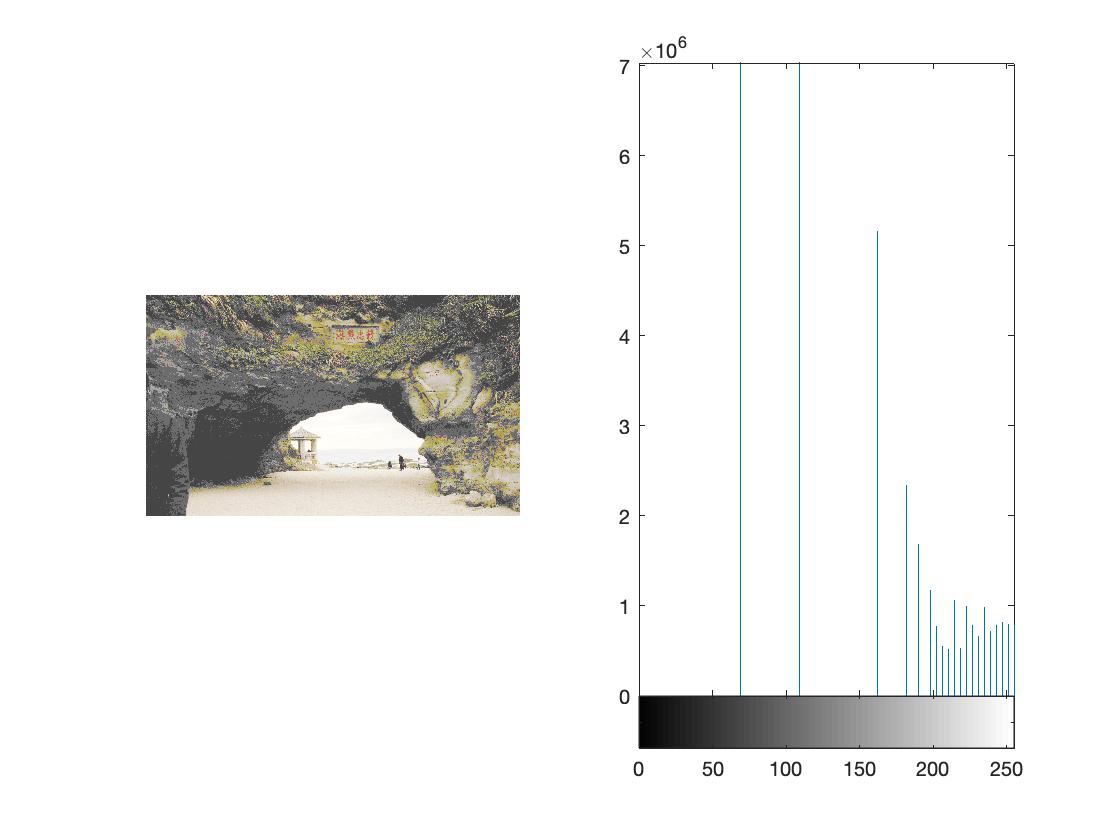

, Histogram Equalization

, Gray level distribution too centralized

,

, -

, -

, Histogram Equalization

, Gray level distribution too centralized

,  , Refer to the Histogram equalization

,

, Refer to the Histogram equalization

,  , -

, -
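The discrete mapping described above can be sketched in Python using only the standard library. This is a minimal illustration under stated assumptions, not a reference implementation:

- The name `equalize_histogram` is hypothetical.
- The image is assumed to be a list of rows of integer gray levels from 0 to 255.
- It uses the common variant that rescales the cumulative histogram (the running area up to each level). The darkest occupied level maps to 0 and the brightest to the top of the range.

```python
def equalize_histogram(img, levels=256):
    """Histogram-equalize img, a list of rows of integer gray levels in [0, levels - 1]."""
    # Histogram: number of pixels at each gray level.
    hist = [0] * levels
    for row in img:
        for p in row:
            hist[p] += 1

    # Cumulative histogram: the area under the histogram up to each level.
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)

    total = cdf[-1]                          # total number of pixels (the full area)
    cdf_min = next(c for c in cdf if c > 0)  # cumulative count at the darkest occupied level
    if total == cdf_min:
        # Only one gray level is present, so there is nothing to spread out.
        return [row[:] for row in img]

    # f(p) = q: rescale the cumulative area linearly onto the full output range.
    mapping = [
        round((c - cdf_min) * (levels - 1) / (total - cdf_min)) if c > 0 else 0
        for c in cdf
    ]
    return [[mapping[p] for p in row] for row in img]
```

For example, the 2×2 image [[52, 55], [61, 59]] is spread to [[0, 85], [255, 170]].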

Lectures on Image Processing, by Alan Peters, Vanderbilt University. Updated 7 January 2016.


Digital image processing is the use of a digital computer to process digital images through an algorithm. As a subcategory or field of digital signal processing, digital image processing has many advantages over analog image processing. It allows a much wider range of algorithms to be applied to the input data and can avoid problems such as the build-up of noise and distortion during processing. Since images are defined over two dimensions (perhaps more), digital image processing may be modeled in the form of multidimensional systems. The generation and development of digital image processing have been driven mainly by three factors:

1. the development of computers;
2. the development of mathematics (especially the creation and improvement of discrete mathematics theory);
3. the growing demand for a wide range of applications in environment, agriculture, military, industry and medical science.

History

Many of the techniques of digital image processing, or digital picture processing as it was often called, were developed in the 1960s. This work took place at Bell Laboratories, the Jet Propulsion Laboratory, the Massachusetts Institute of Technology, the University of Maryland, and a few other research facilities. It was applied to satellite imagery, wire-photo standards conversion, medical imaging, videophone, character recognition, and photograph enhancement.

The purpose of early image processing was to improve the quality of the image, and with it the visual effect for human viewers. In this kind of processing, the input is a low-quality image and the output is an image with improved quality. Common image processing operations include image enhancement, restoration, encoding, and compression.

The first successful application was at the American Jet Propulsion Laboratory (JPL). In 1964, JPL processed the thousands of lunar photos sent back by the Ranger 7 space probe. The techniques included geometric correction, gradation transformation and noise removal, and the processing took into account the position of the Sun and the environment of the Moon. This computer-based mapping of the Moon's surface was a success. Later, more complex image processing was performed on the nearly 100,000 photos sent back by the spacecraft, producing a topographic map, a color map and a panoramic mosaic of the Moon. This work achieved extraordinary results and laid a solid foundation for human landing on the Moon.

The cost of processing was fairly high, however, with the computing equipment of that era. That changed in the 1970s, when digital image processing proliferated as cheaper computers and dedicated hardware became available. This led to images being processed in real-time, for some dedicated problems such as television standards conversion. As general-purpose computers became faster, they started to take over the role of dedicated hardware for all but the most specialized and computer-intensive operations. With the fast computers and signal processors available in the 2000s, digital image processing has become the most common form of image processing, and is generally used because it is not only the most versatile method, but also the cheapest.

Image sensors

The basis for modern image sensors is metal–oxide–semiconductor (MOS) technology, invented at Bell Labs between 1955 and 1960. This led to the development of digital semiconductor image sensors, including the charge-coupled device (CCD) and later the CMOS sensor.

The charge-coupled device was invented by Willard S. Boyle and George E. Smith at Bell Labs in 1969. While researching MOS technology, they realized that an electric charge was analogous to the magnetic bubble and that it could be stored on a tiny MOS capacitor. Because it was fairly straightforward to fabricate a series of MOS capacitors in a row, they connected a suitable voltage to them so that the charge could be stepped along from one to the next. The CCD is a semiconductor circuit that was later used in the first digital video cameras for television broadcasting.

The NMOS active-pixel sensor (APS) was invented by Olympus in Japan during the mid-1980s. This was enabled by advances in MOS semiconductor device fabrication, with MOSFET scaling reaching smaller micron and then sub-micron levels. The NMOS APS was fabricated by Tsutomu Nakamura's team at Olympus in 1985. The CMOS active-pixel sensor (CMOS sensor) was later developed by Eric Fossum's team at the NASA Jet Propulsion Laboratory in 1993. By 2007, sales of CMOS sensors had surpassed those of CCD sensors.

MOS image sensors are widely used in optical mouse technology. The first optical mouse, invented by Richard F. Lyon at Xerox in 1980, used a 5 μm NMOS integrated circuit sensor chip. Since the first commercial optical mouse, the IntelliMouse introduced in 1999, most optical mouse devices have used CMOS sensors.

Image compression

An important development in digital image compression technology was the discrete cosine transform (DCT), a lossy compression technique first proposed by Nasir Ahmed in 1972. DCT compression became the basis for JPEG, which was introduced by the Joint Photographic Experts Group in 1992. JPEG compresses images down to much smaller file sizes and has become the most widely used image file format on the Internet. Its highly efficient DCT compression algorithm was largely responsible for the wide proliferation of digital images and digital photos, with several billion JPEG images produced every day.
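The transform at the core of JPEG can be sketched directly from its definition. JPEG applies it to 8×8 blocks of pixels.

The Python below is a minimal, deliberately naive orthonormal 2D DCT-II. The function name `dct2` is hypothetical, and real codecs use fast factorizations rather than this quadruple loop. For a smooth block, most of the energy lands in the few low-frequency coefficients near index (0, 0). This concentration is what makes the later quantization step effective.

```python
import math


def dct2(block):
    """Orthonormal 2D DCT-II of a square block given as a list of N rows of N numbers."""
    n = len(block)

    def alpha(k):
        # Normalization factors that make the transform orthonormal.
        return math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)

    coeffs = [[0.0] * n for _ in range(n)]
    for u in range(n):          # vertical frequency
        for v in range(n):      # horizontal frequency
            s = 0.0
            for x in range(n):
                for y in range(n):
                    s += (
                        block[x][y]
                        * math.cos((2 * x + 1) * u * math.pi / (2 * n))
                        * math.cos((2 * y + 1) * v * math.pi / (2 * n))
                    )
            coeffs[u][v] = alpha(u) * alpha(v) * s
    return coeffs
```

For a constant 8×8 block of value 10, only the DC coefficient is non-zero; with this normalization it equals 80.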

Medical imaging techniques produce very large amounts of data, especially from CT, MRI and PET modalities. As a result, storage and communication of electronic image data are prohibitive without the use of compression. JPEG 2000 image compression is used by the DICOM standard for storage and transmission of medical images. The cost and feasibility of accessing large image data sets over low or varying bandwidths are further addressed by another DICOM standard, called JPIP, which enables efficient streaming of the JPEG 2000 compressed image data.

Digital signal processor (DSP)

Electronic signal processing was revolutionized by the wide adoption of MOS technology in the 1970s. MOS integrated circuit technology was the basis for the first single-chip microprocessors and microcontrollers in the early 1970s, and then the first single-chip digital signal processor (DSP) chips in the late 1970s. DSP chips have since been widely used in digital image processing.

The discrete cosine transform (DCT) image compression algorithm has been widely implemented in DSP chips, with many companies developing DSP chips based on DCT technology. DCTs are widely used for:

* encoding and decoding;
* video coding and audio coding;
* multiplexing, control signals, signaling and analog-to-digital conversion;
* formatting luminance and color differences, and color formats such as YUV444 and YUV411.

DCTs are also used for encoding operations such as motion estimation, motion compensation, inter-frame prediction, quantization, perceptual weighting, entropy encoding, variable encoding, and motion vectors. They are used for decoding operations too, such as the inverse operation between different color formats (YIQ, YUV and RGB) for display purposes. DCTs are also commonly used in high-definition television (HDTV) encoder/decoder chips.
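The luminance and color-difference formats mentioned above can be illustrated with the conversion between RGB and YUV. The sketch below is not itself a DCT operation. It only shows what splitting a pixel into luminance and two color differences looks like, and how that split is inverted for display.

Assumptions:

- RGB components are scaled to [0, 1].
- The weights are those commonly quoted for analog YUV derived from ITU-R BT.601: 0.299, 0.587 and 0.114 for luminance, and 0.492 and 0.877 for the color differences.
- The function names are hypothetical.

```python
# Weights commonly quoted for analog YUV derived from ITU-R BT.601 (assumed here).
KR, KG, KB = 0.299, 0.587, 0.114   # luminance weights for R, G, B
U_SCALE, V_SCALE = 0.492, 0.877    # scale factors for the two color differences


def rgb_to_yuv(r, g, b):
    """Split an RGB triple (components in [0, 1]) into luminance and two color differences."""
    y = KR * r + KG * g + KB * b   # luminance
    u = U_SCALE * (b - y)          # blue color difference
    v = V_SCALE * (r - y)          # red color difference
    return y, u, v


def yuv_to_rgb(y, u, v):
    """Invert rgb_to_yuv, e.g. to turn decoded YUV data back into RGB for display."""
    b = y + u / U_SCALE
    r = y + v / V_SCALE
    g = (y - KR * r - KB * b) / KG  # solve the luminance equation for green
    return r, g, b
```

A format such as YUV411 then stores the two color-difference channels at a quarter of the horizontal resolution of the luminance channel.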

Tasks

Digital image processing allows the use of much more complex algorithms. It can therefore offer both more sophisticated performance at simple tasks and methods that would be impossible by analogue means. In particular, digital image processing is a concrete application of, and a practical technology based on:

* Classification
* Feature extraction
* Multi-scale signal analysis
* Pattern recognition
* Projection

Some techniques which are used in digital image processing include:

* Anisotropic diffusion
* Hidden Markov models
* Image editing
* Image restoration
* Independent component analysis
* Linear filtering
* Neural networks
* Partial differential equations
* Pixelation
* Point feature matching
* Principal components analysis
* Self-organizing maps
* Wavelets

Digital image transformations

Filtering

Digital filters are used to blur and sharpen digital images. Filtering can be performed by:

* convolution with specifically designed kernels (filter array) in the spatial domain
* masking specific frequency regions in the frequency (Fourier) domain
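As a rough illustration of the first method, spatial-domain filtering is a 2D convolution of the image with a small kernel. The Python sketch below is hypothetical, including the names `convolve2d`, `BOX` and `SHARPEN`. It treats pixels outside the image as zero and returns an output the same size as the input. It uses a 3×3 averaging kernel for blurring and the 3×3 Laplacian-based kernel [0 -1 0; -1 5 -1; 0 -1 0] for sharpening.

```python
def convolve2d(img, kernel):
    """2D convolution with zero padding; the output has the same size as img.

    img and kernel are lists of rows; the kernel is assumed to have odd dimensions.
    """
    kh, kw = len(kernel), len(kernel[0])
    ch, cw = kh // 2, kw // 2                 # offsets of the kernel centre
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for m in range(kh):
                for n in range(kw):
                    # Convolution flips the kernel: kernel[m][n] weights img[i - (m - ch)][j - (n - cw)].
                    y, x = i - (m - ch), j - (n - cw)
                    if 0 <= y < h and 0 <= x < w:   # pixels outside the image count as zero
                        acc += kernel[m][n] * img[y][x]
            out[i][j] = acc
    return out


# A 3x3 averaging (box) kernel blurs; the Laplacian-based kernel sharpens.
BOX = [[1 / 9] * 3 for _ in range(3)]
SHARPEN = [[0, -1, 0], [-1, 5, -1], [0, -1, 0]]
```

Convolving a single bright pixel with a kernel reproduces the kernel itself. This is a quick check that the kernel is flipped correctly, because correlation would return the kernel rotated by 180°.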

The following examples show both methods:

Image padding in Fourier domain filtering

Images are typically padded before being transformed to Fourier space. The highpass-filtered images below illustrate the consequences of different padding techniques. Notice that the highpass filter shows extra edges when the image is zero-padded, compared with repeated edge padding.

Filtering code examples
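The two padding schemes compared above can be sketched as follows in Python. The function names are hypothetical, and the image is assumed to be a list of rows.

- Zero padding surrounds the image with black pixels. A bright image therefore acquires an artificial step at its border, which a highpass filter reports as an extra edge.
- Repeated edge padding copies the outermost rows and columns outward, so no such step is created.

```python
def pad_zero(img, p):
    """Surround img (a list of rows) with p rows and columns of zeros."""
    w = len(img[0]) + 2 * p
    top = [[0] * w for _ in range(p)]
    middle = [[0] * p + list(row) + [0] * p for row in img]
    bottom = [[0] * w for _ in range(p)]
    return top + middle + bottom


def pad_replicate(img, p):
    """Surround img with p copies of its outermost rows and columns (repeated edge padding)."""
    rows = [[row[0]] * p + list(row) + [row[-1]] * p for row in img]
    return [rows[0][:] for _ in range(p)] + rows + [rows[-1][:] for _ in range(p)]
```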

MATLAB example for spatial domain highpass filtering.

Affine transformations

Affine transformations enable basic image transformations including scale, rotate, translate, mirror and shear, as shown in the following examples.

To apply the affine matrix to an image, the image is converted to a matrix in which each entry corresponds to the pixel intensity at that location. Each pixel's location can then be represented as a vector [x, y] indicating its coordinates, where x and y are the row and column of the pixel in the image matrix. This coordinate can be multiplied by an affine-transformation matrix, which gives the position that the pixel value will be copied to in the output image.

However, translation requires 3-dimensional homogeneous coordinates, because a 2×2 matrix always maps [0, 0] to itself and so cannot represent a shift. The third dimension is set to a non-zero constant, usually 1, so that the new coordinate is [x, y, 1]. The coordinate vector can then be multiplied by a 3×3 matrix, enabling translation shifts. Thus, the third dimension, i.e. the constant 1, allows translation.
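A minimal sketch of the forward mapping described above, in Python. Each pixel position [x, y] is extended to [x, y, 1] and multiplied by a 3×3 matrix. The pixel value is then copied to the resulting position. The function name `apply_affine`, the `fill` value for uncovered pixels, and rounding to the nearest pixel are assumptions of this sketch.

```python
def apply_affine(img, matrix, fill=0):
    """Forward-map each pixel of img through a 3x3 affine matrix in homogeneous coordinates.

    A pixel at row x, column y is treated as the vector [x, y, 1]. The output has
    the same size as img; pixels mapped outside it are dropped, and output positions
    that receive no pixel keep the value `fill`.
    """
    h, w = len(img), len(img[0])
    out = [[fill] * w for _ in range(h)]
    for x in range(h):
        for y in range(w):
            vec = (x, y, 1)
            # Multiply the 3x3 matrix by [x, y, 1].
            nx, ny, nz = (sum(matrix[r][c] * vec[c] for c in range(3)) for r in range(3))
            nx, ny = round(nx / nz), round(ny / nz)   # nz stays 1 for affine matrices
            if 0 <= nx < h and 0 <= ny < w:
                out[nx][ny] = img[x][y]
    return out


# Translation by one row: possible as a matrix product only because of the third coordinate.
SHIFT_DOWN = [[1, 0, 1],
              [0, 1, 0],
              [0, 0, 1]]
```

Forward mapping like this can leave gaps when rotating or enlarging. Practical implementations usually loop over output pixels instead, apply the inverse matrix, and interpolate.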

Because matrix multiplication is associative, multiple affine transformations can be combined into a single affine transformation by multiplying the matrices of the individual transformations. With column vectors, the matrix of the first transformation applied goes on the right, so the product is written in the reverse of the order in which the transformations are done. This results in a single matrix that, when applied to a point vector, gives the same result as all the individual transformations performed on the vector in sequence. Thus a sequence of affine transformation matrices can be reduced to a single affine transformation matrix.

For example, 2-dimensional coordinates only permit rotation about the origin $(0, 0)$. However, 3-dimensional homogeneous coordinates can be used to first translate any point to $(0, 0)$, then perform the rotation, and finally translate the origin back to the original point (the inverse of the first translation). These three affine transformations can be combined into a single matrix, which allows rotation around any point in the image.
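The following minimal sketch composes the three steps into one matrix. It assumes column vectors, so the composite is $T(c_x, c_y)\,R(\theta)\,T(-c_x, -c_y)$ with the first step rightmost. The function names are hypothetical and floating-point results are only approximately exact.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Product of two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def translation(tx: float, ty: float) -> Matrix:
    return [[1.0, 0.0, tx], [0.0, 1.0, ty], [0.0, 0.0, 1.0]]


def rotation(theta: float) -> Matrix:
    """Rotation about the origin by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def apply(m: Matrix, point: Sequence[float]) -> List[float]:
    """Apply a 3x3 homogeneous matrix to (x, y) and return the new (x, y)."""
    v = [point[0], point[1], 1.0]
    return [sum(m[r][c] * v[c] for c in range(3)) for r in range(2)]


def rotation_about(theta: float, cx: float, cy: float) -> Matrix:
    # Move (cx, cy) to the origin, rotate, then move it back.
    # With column vectors the first step is the rightmost factor.
    return matmul(translation(cx, cy),
                  matmul(rotation(theta), translation(-cx, -cy)))


m = rotation_about(math.pi / 2, 2.0, 2.0)
print(apply(m, (2.0, 2.0)))  # the centre stays at approximately [2.0, 2.0]
print(apply(m, (3.0, 2.0)))  # approximately [2.0, 3.0]
```

Because of associativity, applying `m` once gives the same point as applying the three matrices one after another, but only one matrix-vector product is needed per pixel.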

Image denoising with mathematical morphology

Mathematical morphology (MM) is a nonlinear image processing framework that analyzes shapes within images by probing local pixel neighborhoods using a small, predefined function called a structuring element. In the context of grayscale images, MM is especially useful for denoising through dilation and erosion, primitive operators that can be combined to build more complex filters.

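Suppose we have:

* A discrete grayscale image $I$:

* A structuring element $B$ with domain $D_B$:

Here, $B$ defines the neighborhood of relative coordinates $(s, t) \in D_B$ over which local operations are computed. The values of $B$ bias the image during dilation and erosion.

; Dilation : Grayscale dilation is defined as:

: $(I \oplus B)(x, y) = \max_{(s, t) \in D_B} \left[ I(x - s, y - t) + B(s, t) \right]$

:For example, the dilation at position is calculated as:

; Erosion : Grayscale erosion is defined as:

: $(I \ominus B)(x, y) = \min_{(s, t) \in D_B} \left[ I(x + s, y + t) - B(s, t) \right]$

:For example, the erosion at position is calculated as: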
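A minimal pure-Python sketch of grayscale dilation and erosion with a non-flat structuring element follows. It uses the textbook convention: dilation takes the maximum of $I(x - s, y - t) + B(s, t)$ and erosion takes the minimum of $I(x + s, y + t) - B(s, t)$. It also assumes that $B$ is a square, odd-sized array centred on its middle entry. Neighbours that fall outside the image are simply skipped, and results are not clipped to the valid gray range. Both are simplifying assumptions. The image and structuring-element values are hypothetical illustrations, not the article's example matrices.

```python
from typing import Iterator, List, Tuple

Grid = List[List[int]]


def offsets(b: Grid) -> Iterator[Tuple[int, int, int]]:
    """Yield (s, t, B(s, t)) for a square, odd-sized element centred on its middle entry."""
    r = len(b) // 2
    for a, row in enumerate(b):
        for c, value in enumerate(row):
            yield a - r, c - r, value


def dilate(img: Grid, b: Grid) -> Grid:
    """Grayscale dilation: max over (s, t) of I(x - s, y - t) + B(s, t)."""
    h, w = len(img), len(img[0])
    return [[max(img[x - s][y - t] + v
                 for s, t, v in offsets(b)
                 if 0 <= x - s < h and 0 <= y - t < w)  # skip out-of-image neighbours
             for y in range(w)]
            for x in range(h)]


def erode(img: Grid, b: Grid) -> Grid:
    """Grayscale erosion: min over (s, t) of I(x + s, y + t) - B(s, t)."""
    h, w = len(img), len(img[0])
    return [[min(img[x + s][y + t] - v
                 for s, t, v in offsets(b)
                 if 0 <= x + s < h and 0 <= y + t < w)  # skip out-of-image neighbours
             for y in range(w)]
            for x in range(h)]


# Hypothetical illustrative values.
image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
element = [[0, 1, 0],
           [1, 2, 1],
           [0, 1, 0]]
print(dilate(image, element))
print(erode(image, element))
```

Opening and closing can be built directly from these two functions, for example `dilate(erode(img, b), b)` for opening.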

Results

After applying dilation to the image:

After applying erosion to the image:

Opening and Closing

MM operations such as opening and closing are composite processes that use both dilation and erosion to modify the structure of an image. These operations are particularly useful for tasks such as noise removal, shape smoothing, and object separation.

* ''Opening'': This operation is performed by applying erosion to an image first, followed by dilation. The purpose of opening is to remove small objects or noise from the foreground while preserving the overall structure of larger objects. It is especially effective when noise appears as isolated bright pixels or small, disconnected features. For example, applying opening to an image with a structuring element first removes small details (through erosion) and then restores the main shapes (through dilation). This removes unwanted noise without significantly altering the size or shape of larger objects.
* ''Closing'': This operation is performed by applying dilation first, followed by erosion. Closing is typically used to fill small holes or gaps within objects and to connect broken parts of the foreground. It works by first expanding the boundaries of objects (through dilation) and then refining those boundaries (through erosion). For instance, applying closing to the same image fills in small gaps within objects, such as breaks in thin lines or small holes, without significantly affecting the surrounding areas.

Both opening and closing can be viewed as ways of refining the structure of an image: opening simplifies the image by removing small, unnecessary details, while closing consolidates and connects objects into more cohesive structures.

Applications

Digital camera images

Digital cameras generally include specialized digital image processing hardware, either dedicated chips or added circuitry on other chips, to convert the raw data from their image sensor into a color-corrected image in a standard image file format. Additional post-processing techniques increase edge sharpness or color saturation to create more natural-looking images.

Film

''Westworld'' (1973) was the first feature film to use digital image processing to pixellate photography to simulate an android's point of view (A Brief, Early History of Computer Graphics in Film, Larry Yaeger, 16 August 2002 (last update), retrieved 24 March 2010). Image processing is also widely used to produce the chroma key effect that replaces the background of actors with natural or artistic scenery.

Face detection

Face detection can be implemented with mathematical morphology, the discrete cosine transform (DCT), and horizontal projection.

General feature-based method

The feature-based method of face detection uses skin tone, edge detection, face shape, and facial features (such as the eyes and mouth) to detect faces. Skin tone, face shape, and the other elements unique to the human face can all be described as features.

Process explanation

# Given a batch of face images, first extract the skin tone range by sampling the face images. This skin tone range serves as a skin filter.

## The structural similarity index measure (SSIM) can be applied to compare images when extracting the skin tone.

## HSV or RGB color spaces are normally suitable for the skin filter. For example, in HSV mode the skin tone range is [0, 48, 50] to [20, 255, 255].

# After filtering the images by skin tone, morphology and the DCT are used to remove noise and fill in missing skin areas in order to obtain the face edge.

## Opening or closing can be used to fill in missing skin areas.

## The DCT is used to reject objects with a skin-like tone, since human faces tend to have more texture.

## The Sobel operator or other edge operators can be applied to detect the face edge.

# To locate facial features such as the eyes, project the image and find the peaks of the projection histogram; this also helps to locate detailed features such as the mouth, hair, and lips.

## Projection means summing the image along one direction to reveal high-frequency regions, which usually correspond to feature positions.
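The projection step above can be sketched as follows. This is a minimal illustration that assumes the input is a binary edge map (for example, a thresholded Sobel output) where 1 marks an edge pixel. It computes the horizontal projection (one sum per row) and reports rows that are strict local maxima as candidate feature rows. The function names and the small edge map are hypothetical.

```python
from typing import List

Grid = List[List[int]]


def horizontal_projection(img: Grid) -> List[int]:
    """Sum each row of the image, giving one value per row."""
    return [sum(row) for row in img]


def projection_peaks(profile: List[int]) -> List[int]:
    """Indices of interior points that are strict local maxima of the profile."""
    return [i for i in range(1, len(profile) - 1)
            if profile[i - 1] < profile[i] > profile[i + 1]]


# Hypothetical edge map: row 1 resembles an eye line, row 4 a mouth line.
edges = [[0, 0, 0, 0, 0],
         [1, 1, 0, 1, 1],
         [0, 0, 1, 0, 0],
         [0, 0, 0, 0, 0],
         [0, 1, 1, 1, 0],
         [0, 0, 0, 0, 0]]

profile = horizontal_projection(edges)
print(profile)                    # [0, 4, 1, 0, 3, 0]
print(projection_peaks(profile))  # [1, 4]
```

In practice the profile is usually smoothed before peak picking, since raw projections of real images are noisy.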

Image quality improvement methods

Image quality can be degraded by camera vibration, over-exposure, an overly concentrated gray-level distribution, noise, and so on. For example, noise can be reduced with a smoothing method, while a poorly spread gray-level distribution can be improved with histogram equalization.

Smoothing method

In drawing, if a color looks wrong, one can take the colors around it and average them. This is an intuitive way to think about the smoothing method. Smoothing can be implemented with a mask and convolution. Take the small image and mask below as an example.

The image is

$$\text{image} = \begin{bmatrix} 2 & 5 & 6 & 5 \\ 3 & 1 & 4 & 6 \\ 1 & 28 & 30 & 2 \\ 7 & 3 & 2 & 2 \end{bmatrix}$$

and the mask is

$$\text{mask} = \frac{1}{9}\begin{bmatrix} 1 & 1 & 1 \\ 1 & 1 & 1 \\ 1 & 1 & 1 \end{bmatrix}$$

After convolution and smoothing, the four interior pixels become the values below. These are the only positions where the whole 3×3 mask fits inside the image.

$$\begin{bmatrix} 9 & 10 \\ 9 & 9 \end{bmatrix}$$

Observe image[1,1], image[1,2], image[2,1], and image[2,2] (row and column indices starting at 0). Their original values are 1, 4, 28, and 30. After applying the smoothing mask, these pixels become 9, 10, 9, and 9, respectively, with each average rounded to the nearest integer:

$$\text{new image}[1,1] = \operatorname{round}\Big(\tfrac{1}{9}\big(\text{image}[0,0] + \text{image}[0,1] + \text{image}[0,2] + \text{image}[1,0] + \text{image}[1,1] + \text{image}[1,2] + \text{image}[2,0] + \text{image}[2,1] + \text{image}[2,2]\big)\Big)$$

$$\text{new image}[1,1] = \operatorname{round}\big(\tfrac{1}{9}(2+5+6+3+1+4+1+28+30)\big) = \operatorname{round}(80/9) = 9$$

$$\text{new image}[1,2] = \operatorname{round}\big(\tfrac{1}{9}(5+6+5+1+4+6+28+30+2)\big) = \operatorname{round}(87/9) = 10$$

$$\text{new image}[2,1] = \operatorname{round}\big(\tfrac{1}{9}(3+1+4+1+28+30+7+3+2)\big) = \operatorname{round}(79/9) = 9$$

$$\text{new image}[2,2] = \operatorname{round}\big(\tfrac{1}{9}(1+4+6+28+30+2+3+2+2)\big) = \operatorname{round}(78/9) = 9$$
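The worked example can be reproduced with a short mean filter. This sketch assumes a k×k averaging mask, computes only the window positions that fit entirely inside the image, and rounds half up using integer arithmetic. The function name `mean_smooth_interior` is hypothetical.

```python
from typing import List

Grid = List[List[int]]


def mean_smooth_interior(img: Grid, k: int = 3) -> Grid:
    """Average every k x k window that fits inside the image.

    Returns one value per window (the window centres), each rounded to the
    nearest integer, matching the worked example above.
    """
    n = k * k
    h, w = len(img), len(img[0])
    out = []
    for i in range(h - k + 1):
        row = []
        for j in range(w - k + 1):
            total = sum(img[i + a][j + b] for a in range(k) for b in range(k))
            # Round half up with integers: floor(total / n + 1/2).
            row.append((2 * total + n) // (2 * n))
        out.append(row)
    return out


image = [[2, 5, 6, 5],
         [3, 1, 4, 6],
         [1, 28, 30, 2],
         [7, 3, 2, 2]]
print(mean_smooth_interior(image))  # [[9, 10], [9, 9]]
```

The large values 28 and 30 are pulled toward their neighbours, which is why averaging suppresses isolated noisy pixels.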

Gray Level Histogram method

Consider the gray level histogram of an image, as below. Transforming this histogram into a uniform distribution is what is usually called histogram equalization.

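For an $N \times N$ image, let $H(p_i)$ be the number of pixels at gray level $p_i$ in the original histogram and $G(q_i)$ the number of pixels at gray level $q_i$ in the uniform (equalized) histogram, for $i = 0, \dots, k$. In discrete time, the area of the gray level histogram is $\sum_{i=0}^{k} H(p_i)$ (see figure 1), while the area of the uniform distribution is $\sum_{i=0}^{k} G(q_i)$ (see figure 2). Because equalization only reassigns pixels to new gray levels, the area does not change, so $\sum_{i=0}^{k} H(p_i) = \sum_{i=0}^{k} G(q_i) = N^2$.

From the uniform distribution, the height of the histogram at $q_i$ is $\frac{N^2}{q_k - q_0}$ for $0 < i < k$.

In continuous time, the equation is

$$\int_{q_0}^{q} \frac{N^2}{q_k - q_0}\,ds = \frac{N^2 (q - q_0)}{q_k - q_0} = \int_{p_0}^{p} H(s)\,ds.$$

Moreover, based on the definition of a function, the gray level histogram method amounts to finding a function $f$ that satisfies $f(p) = q$. Solving the equation above for $q$ gives

$$f(p) = q_0 + \frac{q_k - q_0}{N^2} \int_{p_0}^{p} H(s)\,ds.$$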
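Below is a minimal discrete sketch of histogram equalization. It assumes integer gray levels $0, \dots, L-1$ and maps each level $p$ to $q = q_0 + (q_k - q_0)\,C(p)/T$. Here $C(p)$ is the cumulative pixel count up to $p$ (the discrete counterpart of the integral), $T$ is the total number of pixels, $q_0 = 0$, $q_k = L - 1$, and the result is rounded half up. The function name and the tiny test image are hypothetical.

```python
from typing import List

Grid = List[List[int]]


def equalize(img: Grid, levels: int = 256) -> Grid:
    """Map each gray level p to round((levels - 1) * cumulative_count(p) / total)."""
    hist = [0] * levels
    for row in img:
        for p in row:
            hist[p] += 1
    total = sum(hist)

    mapping = []
    running = 0  # cumulative count C(p)
    for count in hist:
        running += count
        # Round half up with integers: floor((L - 1) * C / T + 1/2).
        mapping.append((2 * (levels - 1) * running + total) // (2 * total))

    return [[mapping[p] for p in row] for row in img]


# Hypothetical 2x2 image with 8 gray levels, bunched at levels 3 to 5.
print(equalize([[3, 3], [4, 5]], levels=8))  # [[4, 4], [5, 7]]
```

The bunched levels 3 to 5 are spread out to 4, 5, and 7, using more of the available range.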

{| class="wikitable"
|-
! Improvement method
! Issue
! Before improvement
! Process
! After improvement
|-
| Smoothing method
| Noise
| Using MATLAB, salt-and-pepper noise with a 0.01 parameter is added to the original image to create a noisy image.
|
# Read the image and convert it into grayscale.
# Convolve the grayscale image with the mask.
# The denoised image is the result of step 2.
|
|-
| Histogram equalization
| Gray level distribution too concentrated
|
| Refer to histogram equalization.
|
|}

Challenges

# Noise and Distortions: Imperfections caused by poor lighting, limited sensors, and file compression can produce unclear images that hinder accurate image conversion.
# Variability in Image Quality: Variations in image quality and resolution, including blurry images and incomplete details, can hinder uniform processing across a database.
# Object Detection and Recognition: Identifying and recognising objects within images, especially in complex scenes with multiple objects and occlusions, is a significant challenge.
# Data Annotation and Labelling: Accurately labelling large and diverse sets of images is crucial for machine recognition, since incorrect labels lead to inaccurate results.
# Computational Resource Intensity: Image processing can require substantial computational resources, which may be difficult and costly to obtain.

See also

References

External links

Lectures on Image Processing, by Alan Peters. Vanderbilt University. Updated 7 January 2016.
