Counting is the process of determining the number of elements of a finite set of objects; that is, determining the size of a set. The traditional way of counting consists of continually increasing a (mental or spoken) counter by a unit for every element of the set, in some order, while marking (or displacing) those elements to avoid visiting the same element more than once, until no unmarked elements are left; if the counter was set to one after the first object, the value after visiting the final object gives the desired number of elements. The related term ''enumeration'' refers to uniquely identifying the elements of a finite (combinatorial) set or infinite set by assigning a number to each element.
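The traditional procedure can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a standard algorithm: the function name `count_by_displacing` is hypothetical, and physical objects are modelled as list items that are moved one at a time from an "uncounted" pile to a "counted" pile in an arbitrary (randomly chosen) order. Because each object receives the counter value current when it is displaced, the same loop also yields an enumeration of the objects.

```python
import random


def count_by_displacing(objects, seed=None):
    """Hypothetical helper: count objects by displacing them one at a time.

    Returns (count, labels), where labels maps each counter value 1..n to the
    object that was displaced at that step (an enumeration of the objects).
    """
    rng = random.Random(seed)
    uncounted = list(objects)  # copy, so the caller's collection is untouched
    labels = {}
    counter = 0
    while uncounted:  # stop when no unmarked (uncounted) objects remain
        # pick any uncounted object; the order is arbitrary
        obj = uncounted.pop(rng.randrange(len(uncounted)))
        counter += 1  # the counter reads 1 after the first object
        labels[counter] = obj  # displaced objects can never be visited again
    return counter, labels
```

Whatever order the random picks produce, the returned count is the same; only the labelling (the enumeration) changes from one run to another.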

Counting sometimes involves numbers other than one; for example, when counting money, counting out change, "counting by twos" (2, 4, 6, 8, 10, 12, ...), or "counting by fives" (5, 10, 15, 20, 25, ...).

There is archaeological evidence suggesting that humans have been counting for at least 50,000 years. Counting was primarily used by ancient cultures to keep track of social and economic data such as the number of group members, prey animals, property, or debts (that is, accountancy). Notched bones were also found in the Border Caves in South Africa, which may suggest that the concept of counting was known to humans as far back as 44,000 BCE. The development of counting led to the development of mathematical notation, numeral systems, and writing.

Forms of counting

Verbal counting involves speaking sequential numbers aloud or mentally to track progress. Generally such counting is done with base 10 numbers: "1, 2, 3, 4", etc. Verbal counting is often used for objects that are currently present rather than for counting things over time, because after an interruption counting must resume from where it left off, and that number has to be recorded or remembered.

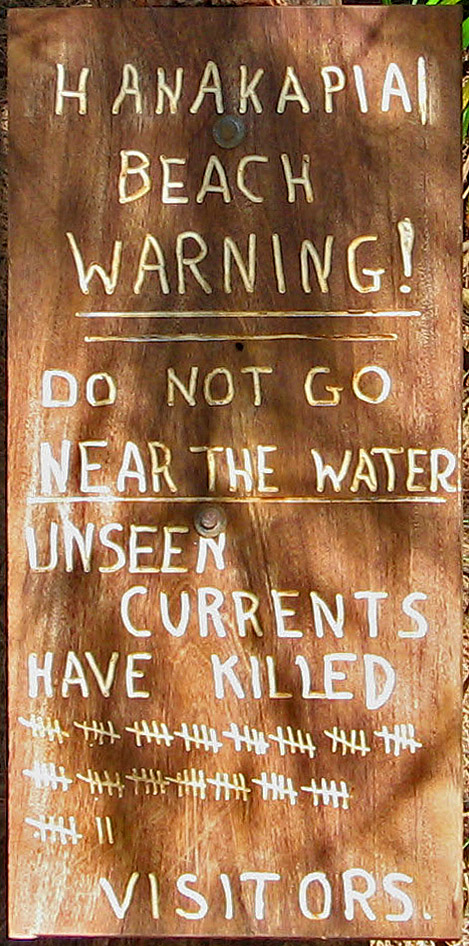

Counting a small set of objects, especially over time, can be accomplished efficiently with tally marks: making a mark for each number and then counting all of the marks when done tallying. Tallying is base 1 counting.

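Tallying as base 1 counting can be illustrated with a short sketch. The helper names `tally` and `read_tally` are hypothetical, and a single mark is represented here by the character `|`; there are no digits and no place value, only one mark per counted item.

```python
def tally(events):
    """Hypothetical helper: record events as they happen, one mark per event."""
    marks = ""
    for _ in events:
        marks += "|"  # base 1: each counted item adds exactly one mark
    return marks


def read_tally(marks):
    """When tallying is finished, count all of the marks to obtain the total."""
    return marks.count("|")
```

Because the marks persist, the tally can be interrupted and resumed at any time, which is what makes it suitable for counting over time.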

Finger counting is convenient and common for small numbers. Children count on fingers to facilitate tallying and for performing simple mathematical operations. Older finger counting methods used the four fingers and the three bones in each finger (phalanges) to count to twelve. Other hand-gesture systems are also in use, for example the Chinese system by which one can count to 10 using only gestures of one hand. With finger binary it is possible to keep a finger count up to $1023 = 2^{10} - 1$.
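Finger binary treats each of the ten fingers as one binary digit (raised = 1, lowered = 0), which is why the largest count is $2^{10} - 1 = 1023$. The sketch below assumes a hypothetical assignment in which finger number `i` stands for $2^i$; real finger-binary conventions may order the fingers differently.

```python
FINGERS = 10  # two hands; each finger is one binary digit


def to_finger_binary(n):
    """Return finger states (True = raised) for n, finger 0 being the 1s digit.

    The finger-to-digit assignment is an assumption made for illustration.
    """
    max_count = 2 ** FINGERS - 1  # 1023 with ten fingers
    if not 0 <= n <= max_count:
        raise ValueError(f"finger binary on {FINGERS} fingers covers 0..{max_count}")
    return [bool((n >> i) & 1) for i in range(FINGERS)]


def from_finger_binary(states):
    """Read the count back: a raised finger i contributes 2**i."""
    return sum(1 << i for i, raised in enumerate(states) if raised)
```

For example, `to_finger_binary(5)` raises fingers 0 and 2 (since 5 = 4 + 1), and every value from 0 to 1023 round-trips through the two functions.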

Various devices can also be used to facilitate counting, such as tally counters and abacuses.

Inclusive counting

Inclusive and exclusive counting are two different methods of counting. In exclusive counting, unit intervals are counted at the end of each interval. In inclusive counting, unit intervals are counted beginning with the start of the first interval and ending with the end of the last interval. For the same set, inclusive counting therefore always gives a count one greater than exclusive counting. The introduction of the number zero to the number line apparently resolved this difficulty; however, inclusive counting is still useful for some things. See also the fencepost error, which is a type of off-by-one error.

Modern mathematical usage in English has introduced another difficulty. Because exclusive counting is generally tacitly assumed, the term "inclusive" is generally used in reference to a set which is actually counted exclusively. For example, how many numbers are included in the set that ranges from 3 to 8, inclusive? The set is counted exclusively, once the range of the set has been made certain by the use of the word "inclusive". The answer is 6, that is, 8 − 3 + 1, where the +1 range adjustment makes the adjusted exclusive count numerically equivalent to an inclusive count, even though the range of the inclusive count does not include the number-eight unit interval. So it is necessary to distinguish between the terms "inclusive counting" and "inclusive" or "inclusively", and to recognize that the former term is not uncommonly used loosely for the latter process.

Inclusive counting is usually encountered when dealing with time in Roman calendars and the Romance languages. In the ancient Roman calendar, the ''nones'' (meaning "nine") is 8 days before the ''ides''; more generally, dates are specified as inclusively counted days up to the next named day.
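The arithmetic behind "3 to 8, inclusive" and the fencepost error can be made concrete with a small sketch. The function names are hypothetical; the fence analogy (a straight fence needs one more post than it has sections) is the usual illustration of the fencepost error rather than something taken from the text above.

```python
def count_inclusive(first, last):
    """Integers from first to last with both ends included: last - first + 1."""
    return max(last - first + 1, 0)


def count_gaps(first, last):
    """Unit intervals between first and last: last - first."""
    return max(last - first, 0)


# Fencepost illustration: posts stand at positions 3, 4, 5, 6, 7, 8 and the
# fence sections span the gaps between neighbouring posts.
posts = count_inclusive(3, 8)  # 6 posts
sections = count_gaps(3, 8)    # 5 sections
```

Python's own `range` is half-open, so `range(3, 8)` has only 5 elements; writing it when the inclusive range 3..8 is meant is a typical fencepost error, and `range(3, 8 + 1)` is the correct spelling.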

In the Christian liturgical calendar, Quinquagesima (meaning 50) is 49 days before Easter Sunday, so that, counting both Sundays, Easter is the fiftieth day. When counting "inclusively", the Sunday (the start day) will be ''day 1'' and therefore the following Sunday will be the ''eighth day''. For example, the French word for "fortnight" is ''quinzaine'' (15 days), and similar words are present in Greek (δεκαπενθήμερο, ''dekapenthímero''), Spanish (''quincena'') and Portuguese (''quinzena'').

In contrast, the English word "fortnight" itself derives from "a fourteen-night", as the archaic "sennight" does from "a seven-night"; the English words are not examples of inclusive counting. In exclusive counting languages such as English, when counting eight days "from Sunday", Monday will be ''day 1'', Tuesday ''day 2'', and the following Monday will be the ''eighth day''. For many years it was a standard practice in English law for the phrase "from a date" to mean "beginning on the day after that date": this practice is now deprecated because of the high risk of misunderstanding.
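The two conventions for counting days can be compared with Python's standard `datetime` module. The helper names are hypothetical, and 7 January 2024 is used only because it is a convenient Sunday; any Sunday would do.

```python
from datetime import date, timedelta


def nth_day_inclusive(start, n):
    """Inclusive counting: the start day itself is day 1."""
    return start + timedelta(days=n - 1)


def nth_day_exclusive(start, n):
    """Exclusive counting: the day after the start is day 1."""
    return start + timedelta(days=n)


def days_inclusive(a, b):
    """Number of days from a to b when both a and b are counted."""
    return (b - a).days + 1


sunday = date(2024, 1, 7)  # an arbitrary Sunday chosen for illustration
# weekday() numbers Monday as 0 and Sunday as 6
eighth_inclusive = nth_day_inclusive(sunday, 8)  # the following Sunday
eighth_exclusive = nth_day_exclusive(sunday, 8)  # the following Monday
```

Under inclusive counting the fifteenth day (as in ''quinzaine'') falls exactly two weeks after the start, and a Sunday 49 days before another Sunday gives an inclusive count of 50, matching the name Quinquagesima.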

Similar counting is involved in East Asian age reckoning, in which newborns are considered to be 1 at birth.

Musical terminology also uses inclusive counting of intervals between notes of the standard scale: going up one note is a second interval, going up two notes is a third interval, etc., and going up seven notes is an ''octave''.
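A small sketch of this naming rule: the interval number is the number of scale steps plus one, because both the starting and the ending note are counted. The names `interval_number` and `interval_name` are hypothetical, the sketch stops at the octave, and the name "unison" for zero steps is standard music terminology not stated above.

```python
INTERVAL_NAMES = ["unison", "second", "third", "fourth",
                  "fifth", "sixth", "seventh", "octave"]


def interval_number(steps_up):
    """Inclusive count: both the starting note and the ending note are counted."""
    return steps_up + 1


def interval_name(steps_up):
    """Name the interval reached by going up `steps_up` notes of the scale."""
    if not 0 <= steps_up <= 7:
        raise ValueError("this sketch only names intervals up to an octave")
    # list index steps_up holds the name of interval number steps_up + 1
    return INTERVAL_NAMES[steps_up]
```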

Education and development

Learning to count is an important educational/developmental milestone in most cultures of the world. Learning to count is a child's very first step into mathematics, and constitutes the most fundamental idea of that discipline. However, some cultures in Amazonia and the Australian Outback do not count, and their languages do not have number words. Many children at just 2 years of age have some skill in reciting the count list (that is, saying "one, two, three, ..."). They can also answer questions of ordinality for small numbers, for example, "What comes after ''three''?". They can even be skilled at pointing to each object in a set and reciting the words one after another. This leads many parents and educators to the conclusion that the child knows how to use counting to determine the size of a set. Research suggests that it takes about a year after learning these skills for a child to understand what they mean and why the procedures are performed (Le Corre, M., Van de Walle, G., Brannon, E. M., Carey, S. (2006). Re-visiting the competence/performance debate in the acquisition of the counting principles. Cognitive Psychology, 52(2), 130–169). In the meantime, children learn how to name cardinalities that they can subitize.

Counting in mathematics

In mathematics, the essence of counting a set and finding a result ''n'' is that it establishes a one-to-one correspondence (or bijection) of the subject set with the subset of positive integers $\{1, 2, \dots, n\}$. A fundamental fact, which can be proved by mathematical induction, is that no bijection can exist between $\{1, 2, \dots, n\}$ and $\{1, 2, \dots, m\}$ unless $n = m$; this fact (together with the fact that two bijections can be composed to give another bijection) ensures that counting the same set in different ways can never result in different numbers (unless an error is made). Indeed, if one count gives a bijection $f$ from the set onto $\{1, \dots, n\}$ and another gives a bijection $g$ onto $\{1, \dots, m\}$, then $g \circ f^{-1}$ is a bijection from $\{1, \dots, n\}$ onto $\{1, \dots, m\}$, so $n = m$. This is the fundamental mathematical theorem that gives counting its purpose: however you count a (finite) set, the answer is the same. In a broader context, the theorem is an example of a result in (finite) combinatorics, a field sometimes referred to as "the mathematics of counting."
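The argument that two counts must agree can be traced in code. In this sketch (all function names hypothetical) a count in a given order is represented as a dictionary from elements to the numbers $1, \dots, n$; counting the same set in two orders gives bijections $f$ and $g$, and the composition $g \circ f^{-1}$ is checked to be a bijection between $\{1, \dots, n\}$ and $\{1, \dots, m\}$. The elements must be distinct and sortable, which is an assumption of the sketch.

```python
def count_via_bijection(elements):
    """Count distinct elements in the given order, returning the bijection
    element -> number onto {1, ..., n} that the count establishes."""
    labels = {}
    for number, element in enumerate(elements, start=1):
        labels[element] = number
    return labels


def is_bijection(mapping, domain, codomain):
    """Check that the dict `mapping` is a bijection from domain onto codomain."""
    return (set(mapping) == set(domain)                     # defined on all of domain
            and set(mapping.values()) == set(codomain)      # surjective
            and len(set(mapping.values())) == len(mapping))  # injective


def compare_two_counts(finite_set):
    """Count a set in two orders; return (n, m, whether g∘f⁻¹ is a bijection)."""
    f = count_via_bijection(sorted(finite_set))                # one order
    g = count_via_bijection(sorted(finite_set, reverse=True))  # another order
    n, m = len(f), len(g)
    f_inverse = {number: element for element, number in f.items()}
    h = {k: g[f_inverse[k]] for k in range(1, n + 1)}          # h = g ∘ f⁻¹
    return n, m, is_bijection(h, range(1, n + 1), range(1, m + 1))
```

For example, `compare_two_counts({"a", "b", "c", "d"})` counts the letters forwards and backwards and reports the same result both times.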

Many sets that arise in mathematics do not allow a bijection to be established with $\{1, 2, \dots, n\}$ for ''any'' natural number ''n''; these are called infinite sets, while those sets for which such a bijection does exist (for some ''n'') are called finite sets. Infinite sets cannot be counted in the usual sense; for one thing, the mathematical theorems which underlie this usual sense for finite sets are false for infinite sets. Furthermore, different definitions of the concepts in terms of which these theorems are stated, while equivalent for finite sets, are inequivalent in the context of infinite sets.

The notion of counting may be extended to them in the sense of establishing (the existence of) a bijection with some well-understood set. For instance, if a set can be brought into bijection with the set of all natural numbers, then it is called "countably infinite". This kind of counting differs in a fundamental way from counting of finite sets, in that adding new elements to a set does not necessarily increase its size, because the possibility of a bijection with the original set is not excluded. For instance, the set of all integers (including negative numbers) can be brought into bijection with the set of natural numbers, and even seemingly much larger sets like that of all finite sequences of rational numbers are still (only) countably infinite. Nevertheless, there are sets, such as the set of real numbers, that can be shown to be "too large" to admit a bijection with the natural numbers, and these sets are called "uncountable". Sets for which there exists a bijection between them are said to have the same cardinality, and in the most general sense counting a set can be taken to mean determining its cardinality. Beyond the cardinalities given by each of the natural numbers, there is an infinite hierarchy of infinite cardinalities, although only very few such cardinalities occur in ordinary mathematics (that is, outside set theory, which explicitly studies possible cardinalities).
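One standard bijection between the natural numbers and the integers lists the integers as 0, 1, −1, 2, −2, and so on. The sketch below assumes the natural numbers include 0; the function names are hypothetical. Odd natural numbers go to the positive integers and even ones to zero and the negative integers, and each function undoes the other.

```python
def nat_to_int(k):
    """Bijection N -> Z (N taken to include 0): 0, 1, 2, 3, 4, ... -> 0, 1, -1, 2, -2, ..."""
    if k < 0:
        raise ValueError("k must be a natural number")
    return (k + 1) // 2 if k % 2 else -(k // 2)


def int_to_nat(z):
    """Inverse map Z -> N: positive z -> odd numbers, zero and negatives -> even numbers."""
    return 2 * z - 1 if z > 0 else -2 * z
```

Evaluating `[nat_to_int(k) for k in range(5)]` gives `[0, 1, -1, 2, -2]`; since every integer appears exactly once in this list-like order, adding the negative numbers does not make the set "larger" in the sense of cardinality.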

Counting, mostly of finite sets, has various applications in mathematics. One important principle is that if two sets ''X'' and ''Y'' have the same finite number of elements, and a function $f\colon X \to Y$ is known to be injective, then it is also surjective, and vice versa. A related fact is known as the pigeonhole principle, which states that if two sets ''X'' and ''Y'' have finite numbers of elements ''n'' and ''m'' with $n > m$, then any map $f\colon X \to Y$ is ''not'' injective (so there exist two distinct elements of ''X'' that ''f'' sends to the same element of ''Y''). This follows from the former principle: if ''f'' were injective, then so would be its restriction to a strict subset ''S'' of ''X'' with ''m'' elements, and that restriction would then be surjective onto ''Y''; but for any ''x'' in ''X'' outside ''S'', injectivity of ''f'' means that ''f''(''x'') cannot be in the image of the restriction, a contradiction. Similar counting arguments can prove the existence of certain objects without explicitly providing an example. In the case of infinite sets this can even apply in situations where it is impossible to give an example.
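The pigeonhole principle guarantees that a collision exists but does not say where; a short search finds one. In this sketch (hypothetical names) `find_collision` returns two distinct elements with the same image, or `None` when the map is injective, and `pigeonhole_holds` checks the principle exhaustively over all maps between two tiny sets, which is only feasible because there are $m^n$ such maps.

```python
import itertools


def find_collision(domain, f):
    """Return distinct x1, x2 in domain with f(x1) == f(x2), or None if f is injective."""
    first_preimage = {}  # image value -> first element seen with that image
    for x in domain:
        y = f(x)
        if y in first_preimage:
            return first_preimage[y], x
        first_preimage[y] = x
    return None


def pigeonhole_holds(n, m):
    """True if every map from an n-element set to an m-element set has a collision."""
    domain = range(n)
    # each tuple `images` lists f(0), f(1), ..., f(n - 1), so this loops over all maps
    for images in itertools.product(range(m), repeat=n):
        if find_collision(domain, lambda x: images[x]) is None:
            return False  # found an injective map
    return True
```

With five elements mapped into four values, for example by `x % 4`, the search returns the pair `(0, 4)`; with four elements and four values an injective map exists, so the principle does not apply.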

The domain of enumerative combinatorics deals with computing the number of elements of finite sets without actually counting them; actual counting is usually impossible because infinite families of finite sets are considered at once, such as the set of permutations of $\{1, 2, \dots, n\}$ for any natural number ''n''.
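The difference between counting and enumerative combinatorics shows up in the permutation example. The sketch below (hypothetical function names) counts permutations by generating every one with `itertools.permutations`, which works only for small ''n'', and compares the result with the well-known formula $n!$ computed by `math.factorial`, which answers the question for every ''n'' at once.

```python
import itertools
import math


def count_permutations_by_listing(n):
    """Actually count the permutations of {1, ..., n} by generating each one."""
    return sum(1 for _ in itertools.permutations(range(1, n + 1)))


def count_permutations_by_formula(n):
    """Count them without listing them: an n-element set has n! permutations."""
    return math.factorial(n)
```

For ''n'' = 20 the formula gives 2,432,902,008,176,640,000 immediately, whereas listing that many permutations one by one would be impractical.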

See also

* Calculation

* Card reading (bridge)

* Cardinal number

* Combinatorics

* Count data

* Counting (music)

* Counting problem (complexity)

* Counting-out game

* Developmental psychology

* Elementary arithmetic

* Finger counting

* History of mathematics

* Jeton

* Level of measurement

* List of numbers

* Mathematical quantity

* Ordinal number

* Particle number

* Subitizing and counting

* Tally mark

* Unary numeral system

* Yan tan tethera (Counting sheep in Britain)
