The history of artificial intelligence (AI) began in antiquity, with myths, stories, and rumors of artificial beings endowed with intelligence or consciousness by master craftsmen. The study of logic and formal reasoning from antiquity to the present led directly to the invention of the programmable digital computer in the 1940s, a machine based on abstract mathematical reasoning. This device and the ideas behind it inspired scientists to begin discussing the possibility of building an electronic brain.

The field of AI research was founded at a workshop held on the campus of Dartmouth College in 1956. Attendees of the workshop became the leaders of AI research for decades. Many of them predicted that machines as intelligent as humans would exist within a generation. The U.S. government provided millions of dollars with the hope of making this vision come true.

Eventually, it became obvious that researchers had grossly underestimated the difficulty of this feat. In 1974, criticism from James Lighthill and pressure from the U.S. Congress led the U.S. and British governments to stop funding undirected research into artificial intelligence. Seven years later, a visionary initiative by the Japanese government and the success of expert systems reinvigorated investment in AI, and by the late 1980s, the industry had grown into a billion-dollar enterprise. However, investors' enthusiasm waned in the 1990s, and the field was criticized in the press and avoided by industry (a period known as an "AI winter").

In the early 2000s, machine learning was applied to a wide range of problems in academia and industry. The success was due to the availability of powerful computer hardware, the collection of immense data sets, and the application of solid mathematical methods. Soon after, deep learning proved to be a breakthrough technology, eclipsing all other methods. The transformer architecture debuted in 2017 and was used to produce impressive generative AI applications, amongst other use cases.

Investment in AI boomed in the 2020s. The recent AI boom, initiated by the development of transformer architecture, led to the rapid scaling and public releases of large language models (LLMs) like ChatGPT. These models exhibit human-like traits of knowledge, attention, and creativity, and have been integrated into various sectors, fueling exponential investment in AI. However, concerns about the potential risks and ethical implications of advanced AI have also emerged, causing debate about the future of AI and its impact on society.

Precursors

Mythical, fictional, and speculative precursors

Myth and legend

In Greek mythology, Talos was a creature made of bronze who acted as guardian for the island of Crete. He would throw boulders at the ships of invaders and would complete three circuits around the island's perimeter daily. According to pseudo-Apollodorus' ''Bibliotheke'', Hephaestus forged Talos with the aid of a cyclops and presented the automaton as a gift to Minos. In the ''Argonautica'', Jason and the Argonauts defeated Talos by removing a plug near his foot, causing the vital ichor to flow out from his body and rendering him lifeless.

Pygmalion was a legendary king and sculptor of Greek mythology, famously represented in Ovid's ''Metamorphoses''. In the 10th book of Ovid's narrative poem, Pygmalion becomes disgusted with women when he witnesses the way in which the Propoetides prostitute themselves. Despite this, he makes offerings at the temple of Venus asking the goddess to bring to him a woman just like a statue he carved.

Medieval legends of artificial beings

In ''Of the Nature of Things'', the Swiss alchemist Paracelsus describes a procedure that he claims can fabricate an "artificial man". By placing the "sperm of a man" in horse dung and feeding it the "Arcanum of Mans blood" after 40 days, the concoction would, he claimed, become a living infant.

The earliest written account regarding golem-making is found in the writings of Eleazar ben Judah of Worms in the early 13th century. During the Middle Ages, it was believed that a golem could be animated by inserting a piece of paper bearing any of God's names into the mouth of the clay figure. Unlike legendary automata such as the brazen heads, a golem was unable to speak.

''Takwin'', the artificial creation of life, was a frequent topic of Ismaili alchemical manuscripts, especially those attributed to Jabir ibn Hayyan. Islamic alchemists attempted to create a broad range of life through their work, ranging from plants to animals.

In ''Faust: The Second Part of the Tragedy'' by Johann Wolfgang von Goethe, an alchemically fabricated homunculus, destined to live forever in the flask in which he was made, endeavors to be born into a full human body. Upon the initiation of this transformation, however, the flask shatters and the homunculus dies.

Modern fiction

By the 19th century, ideas about artificial men and thinking machines had become a popular theme in fiction. Notable works such as Mary Shelley's ''Frankenstein'' and Karel Čapek's ''R.U.R. (Rossum's Universal Robots)'' explored the concept of artificial life. Speculative essays, such as Samuel Butler's "Darwin among the Machines" and Edgar Allan Poe's "Maelzel's Chess Player", reflected society's growing interest in machines with artificial intelligence. AI remains a common topic in science fiction today.

Automata

Realistic humanoid automata were built by craftsmen from many civilizations, including Yan Shi, Hero of Alexandria, Al-Jazari, Haroun al-Rashid, Jacques de Vaucanson, Leonardo Torres y Quevedo, Pierre Jaquet-Droz and Wolfgang von Kempelen.

The oldest known automata were the sacred statues of ancient Egypt and Greece. The faithful believed that craftsmen had imbued these figures with very real minds, capable of wisdom and emotion; Hermes Trismegistus wrote that "by discovering the true nature of the gods, man has been able to reproduce it". English scholar Alexander Neckham asserted that the Ancient Roman poet Virgil had built a palace with automaton statues.

During the early modern period, these legendary automata were said to possess the magical ability to answer questions put to them. The late medieval alchemist and proto-Protestant Roger Bacon was purported to have fabricated a brazen head, and he acquired a legendary reputation as a wizard. These legends were similar to the Norse myth of the Head of Mímir. According to legend, Mímir was known for his intellect and wisdom, and was beheaded in the Æsir–Vanir War. Odin is said to have "embalmed" the head with herbs and spoken incantations over it so that Mímir's head remained able to speak wisdom to Odin. Odin then kept the head near him for counsel.

Formal reasoning

Artificial intelligence is based on the assumption that the process of human thought can be mechanized. The study of mechanical (or "formal") reasoning has a long history. Chinese, Indian and Greek philosophers all developed structured methods of formal deduction by the first millennium BCE. Their ideas were developed over the centuries by philosophers such as Aristotle (who gave a formal analysis of the syllogism), Euclid (whose ''Elements'' was a model of formal reasoning), al-Khwārizmī (who developed algebra and gave his name to the word ''algorithm'') and European scholastic philosophers such as William of Ockham and Duns Scotus.

Spanish philosopher Ramon Llull (1232–1315) developed several ''logical machines'' devoted to the production of knowledge by logical means. Llull described his machines as mechanical entities that could combine basic and undeniable truths through simple logical operations, carried out mechanically by the machine, so as to produce all possible knowledge. Llull's work had a great influence on Gottfried Leibniz, who redeveloped his ideas.
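Llull's idea of producing knowledge by exhaustively combining a fixed set of basic terms can be loosely illustrated with a modern combinatorial sketch. The sketch below is illustrative only and is not a reconstruction of Llull's actual figures. The nine terms and their letter labels B to K are an assumption, loosely modeled on the "dignities" of his ''Art''. The helper name `combine` is hypothetical. The code enumerates every unordered pairing (or triple) of the terms by rule, with no judgment involved.

```python
from itertools import combinations

# Illustrative term list (an assumption): nine attributes labeled B..K,
# loosely modeled on the "dignities" of Llull's Art.
DIGNITIES = {
    "B": "goodness", "C": "greatness", "D": "eternity",
    "E": "power", "F": "wisdom", "G": "will",
    "H": "virtue", "I": "truth", "K": "glory",
}

def combine(labels, k=2):
    """Mechanically enumerate every unordered k-combination of the labeled terms."""
    return [
        " + ".join(DIGNITIES[label] for label in combo)
        for combo in combinations(sorted(labels), k)
    ]

pairs = combine(DIGNITIES)   # iterating a dict yields its keys (the labels)
print(len(pairs))            # 36
print(pairs[0])              # goodness + greatness
```

With nine terms the rule yields 36 pairs and 84 triples. Each result is reached by applying one rule exhaustively, which is the "mechanical" quality the paragraph attributes to Llull's machines.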

In the 17th century, Leibniz, Thomas Hobbes and René Descartes explored the possibility that all rational thought could be made as systematic as algebra or geometry.

Hobbes famously wrote in ''Leviathan'': "For ''reason'' ... is nothing but ''reckoning'', that is adding and subtracting".

Leibniz envisioned a universal language of reasoning, the ''characteristica universalis'', which would reduce argumentation to calculation so that "there would be no more need of disputation between two philosophers than between two accountants. For it would suffice to take their pencils in hand, down to their slates, and to say each other (with a friend as witness, if they liked): ''Let us calculate''." These philosophers had begun to articulate the physical symbol system hypothesis that would become the guiding faith of AI research.
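Leibniz's "Let us calculate" can be made concrete with a modern and deliberately anachronistic sketch. Instead of debating whether an argument is valid, we compute it by checking every assignment of truth values. Propositional truth tables were developed long after Leibniz, so this is not his method. The function names `implies` and `is_valid` are hypothetical, and premises are represented simply as Python functions of the variables.

```python
from itertools import product
from typing import Callable

Formula = Callable[..., bool]

def implies(a: bool, b: bool) -> bool:
    """Material implication: false only when a is true and b is false."""
    return (not a) or b

def is_valid(premises: list[Formula], conclusion: Formula, n_vars: int) -> bool:
    """An argument is valid if no assignment of truth values makes
    every premise true while the conclusion is false."""
    for values in product([False, True], repeat=n_vars):
        if all(p(*values) for p in premises) and not conclusion(*values):
            return False  # found a counterexample row
    return True

# Hypothetical syllogism: P -> Q and Q -> R, therefore P -> R.
chain_ok = is_valid(
    [lambda p, q, r: implies(p, q), lambda p, q, r: implies(q, r)],
    lambda p, q, r: implies(p, r),
    n_vars=3,
)

# Affirming the consequent: P -> Q and Q, therefore P (a fallacy).
fallacy_ok = is_valid(
    [lambda p, q: implies(p, q), lambda p, q: q],
    lambda p, q: p,
    n_vars=2,
)

print(chain_ok)    # True
print(fallacy_ok)  # False
```

For n variables the check evaluates 2^n rows, so settling the "dispute" is pure bookkeeping. This brute-force approach only scales to small propositional arguments.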

The study of mathematical logic provided the essential breakthrough that made artificial intelligence seem plausible. The foundations had been set by such works as Boole's ''The Laws of Thought'' and Frege's ''Begriffsschrift''. Building on Frege's system, Russell and Whitehead presented a formal treatment of the foundations of mathematics in their masterpiece, the ''Principia Mathematica'' (1910–1913). Inspired by Russell's success, David Hilbert challenged mathematicians of the 1920s and 30s to answer this fundamental question: "can all of mathematical reasoning be formalized?" His question was answered by Gödel's incompleteness proof, Turing's machine and Church's lambda calculus.

Their answer was surprising in two ways. First, they proved that there were, in fact, limits to what mathematical logic could accomplish. Second, and more important for AI, their work suggested that, within these limits, ''any'' form of mathematical reasoning could be mechanized. The Church–Turing thesis implied that a mechanical device, shuffling symbols as simple as ''0'' and ''1'', could imitate any conceivable process of mathematical deduction. The key insight was the Turing machine, a simple theoretical construct that captured the essence of abstract symbol manipulation. This invention would inspire a handful of scientists to begin discussing the possibility of thinking machines.
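A Turing machine is a read/write head moving over a tape of symbols, driven by a finite table of rules, and its core can be sketched in a few lines of Python. Several choices below are illustrative assumptions rather than Turing's own notation: the rule-table format, the state names, the blank symbol `_`, and the binary-increment program `INCREMENT`. The sketch only shows that a fixed rule table, shuffling `0`s and `1`s, can carry out a small piece of arithmetic.

```python
# Each rule maps (state, symbol read) -> (symbol to write, head move, next state).
Rules = dict[tuple[str, str], tuple[str, int, str]]

BLANK = "_"

# Hypothetical example program: add 1 to a binary number written on the tape.
INCREMENT: Rules = {
    ("right", "0"): ("0", +1, "right"),      # scan right over the digits
    ("right", "1"): ("1", +1, "right"),
    ("right", BLANK): (BLANK, -1, "carry"),  # past the end: step back, start carrying
    ("carry", "1"): ("0", -1, "carry"),      # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", 0, "halt"),        # 0 + carry = 1, done
    ("carry", BLANK): ("1", 0, "halt"),      # ran off the left edge: new leading 1
}

def run(tape_input: str, rules: Rules, state: str = "right", max_steps: int = 10_000) -> str:
    """Simulate the machine until it reaches 'halt'; return the non-blank tape contents."""
    tape = dict(enumerate(tape_input))  # sparse tape: cell index -> symbol
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = tape.get(head, BLANK)
        write, move, state = rules[(state, symbol)]
        tape[head] = write
        head += move
        steps += 1
    cells = range(min(tape), max(tape) + 1)
    return "".join(tape.get(i, BLANK) for i in cells).strip(BLANK)

print(run("1011", INCREMENT))  # 1100
print(run("111", INCREMENT))   # 1000
```

Nothing in `run` knows about arithmetic. All of the "meaning" lives in the rule table, and this is the sense in which simple symbol shuffling can imitate a process of deduction.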

Computer science

Calculating machines were designed or built in antiquity and throughout history by many people, including Gottfried Leibniz, Joseph Marie Jacquard, Charles Babbage, Percy Ludgate, Leonardo Torres Quevedo and Vannevar Bush.

Ada Lovelace speculated that Babbage's machine was "a thinking or ... reasoning machine", but warned "It is desirable to guard against the possibility of exaggerated ideas that arise as to the powers" of the machine.

The first modern computers were the massive machines of the Second World War (such as Konrad Zuse's Z3, the Heath Robinson and Colossus machines at Bletchley Park, John Atanasoff and Clifford Berry's ABC, and ENIAC at the University of Pennsylvania).

ENIAC was based on the theoretical foundation laid by Alan Turing and developed by John von Neumann, and it proved to be the most influential.

Birth of artificial intelligence (1941-56)

The earliest research into thinking machines was inspired by a confluence of ideas that became prevalent in the late 1930s, 1940s, and early 1950s. Recent research in neurology had shown that the brain was an electrical network of neurons that fired in all-or-nothing pulses. Norbert Wiener's cybernetics described control and stability in electrical networks. Claude Shannon's information theory described digital signals (i.e., all-or-nothing signals). Alan Turing's theory of computation showed that any form of computation could be described digitally. The close relationship between these ideas suggested that it might be possible to construct an "electronic brain".

In the 1940s and 1950s, a handful of scientists from a variety of fields (mathematics, psychology, engineering, economics and political science) explored several research directions that would be vital to later AI research. Alan Turing was among the first people to seriously investigate the theoretical possibility of "machine intelligence". The field of "artificial intelligence research" was founded as an academic discipline in 1956.

Turing Test

In 1950 Turing published a landmark paper, "Computing Machinery and Intelligence", in which he speculated about the possibility of creating machines that think. In the paper, he noted that "thinking" is difficult to define and devised his famous Turing Test: if a machine could carry on a conversation (over a teleprinter) that was indistinguishable from a conversation with a human being, then it was reasonable to say that the machine was "thinking". This simplified version of the problem allowed Turing to argue convincingly that a "thinking machine" was at least plausible, and the paper answered all the most common objections to the proposition. The Turing Test was the first serious proposal in the philosophy of artificial intelligence.

Neuroscience and Hebbian theory

Donald Hebb was a Canadian psychologist whose work laid the foundation for modern neuroscience, particularly in understanding learning, memory, and neural plasticity. His most influential book, The Organization of Behavior (1949), introduced the concept of Hebbian learning, often summarized as "cells that fire together wire together."

Hebb began formulating the foundational ideas for this book in the early 1940s, particularly during his time at the Yerkes Laboratories of Primate Biology from 1942 to 1947. He made extensive notes between June 1944 and March 1945 and sent a complete draft to his mentor Karl Lashley in 1946. The manuscript for The Organization of Behavior wasn’t published until 1949. The delay was due to various factors, including World War II and shifts in academic focus. By the time it was published, several of his peers had already published related ideas, making Hebb’s work seem less groundbreaking at first glance. However, his synthesis of psychological and neurophysiological principles became a cornerstone of neuroscience and machine learning.
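In later computational work, Hebb's principle is commonly written as the update rule Δw_i = η·x_i·y: the weight from input i grows when that input and the unit's output are active at the same time. The Python sketch below applies this plain rule to a single unit. The learning rate `eta`, the function name `hebbian_update` and the toy activity values are illustrative assumptions; The Organization of Behavior stated the principle in words rather than as this formula.

```python
from typing import List


def hebbian_update(weights: List[float], x: List[float], y: float,
                   eta: float = 0.1) -> List[float]:
    """One plain Hebbian step: w_i <- w_i + eta * x_i * y."""
    return [w + eta * xi * y for w, xi in zip(weights, x)]


# Hypothetical toy run: input 0 is active whenever the output fires,
# input 1 never is, so only the first connection is strengthened.
weights = [0.0, 0.0]
for _ in range(5):
    pre = [1.0, 0.0]  # presynaptic (input) activity
    post = 1.0        # postsynaptic (output) activity
    weights = hebbian_update(weights, pre, post)

print(weights)
```

Because this plain rule only ever strengthens co-active connections, the weights can grow without bound; normalized variants such as Oja's rule were introduced later to keep them in check.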

Artificial neural networks

Walter Pitts and Warren McCulloch analyzed networks of idealized artificial neurons and showed in 1943 how they might perform simple logical functions. They were the first to describe what later researchers would call a neural network. Their paper was influenced by Turing's 1936 paper 'On Computable Numbers', which used similar two-state Boolean 'neurons', but it was the first to apply the idea to neuronal function. One of the students inspired by Pitts and McCulloch was Marvin Minsky, then a 24-year-old graduate student. In 1951 Minsky and Dean Edmonds built the first neural net machine, the SNARC. Minsky would later become one of the most important leaders and innovators in AI.
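A McCulloch-Pitts unit takes binary inputs and fires (outputs 1) only when their weighted sum reaches a threshold. The Python sketch below uses weights and thresholds chosen here for illustration to show single units computing AND, OR and NOT, and a small network of units computing exclusive-or, which no single threshold unit can. Treating inhibition as a negative weight is a modern simplification; in the 1943 paper an active inhibitory input blocked firing outright.

```python
def mp_unit(inputs, weights, threshold):
    """Fire (return 1) if the weighted sum of binary inputs reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def and_gate(a, b):
    return mp_unit([a, b], [1, 1], threshold=2)


def or_gate(a, b):
    return mp_unit([a, b], [1, 1], threshold=1)


def not_gate(a):
    # A negative weight stands in for inhibition (a modern simplification).
    return mp_unit([a], [-1], threshold=0)


def xor_gate(a, b):
    # A small network of units: "a or b, but not both".
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))


for a in (0, 1):
    for b in (0, 1):
        print(a, b, and_gate(a, b), or_gate(a, b), xor_gate(a, b))
```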

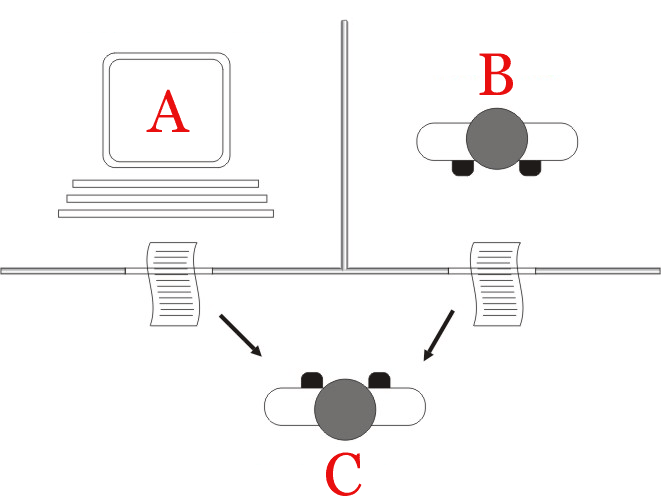

Cybernetic robots

Experimental robots such as W. Grey Walter's turtles and the Johns Hopkins Beast were built in the 1950s. These machines did not use computers, digital electronics or symbolic reasoning; they were controlled entirely by analog circuitry.

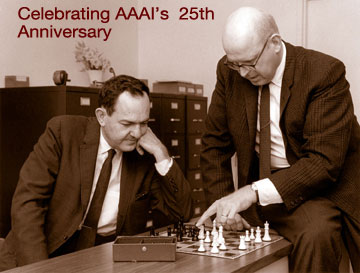

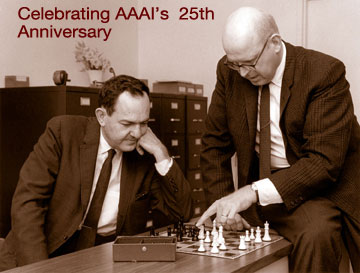

Game AI

In 1951, using the Ferranti Mark 1 machine of the University of Manchester, Christopher Strachey wrote a checkers program and Dietrich Prinz wrote one for chess.

Arthur Samuel's checkers program, the subject of his 1959 paper "Some Studies in Machine Learning Using the Game of Checkers", eventually achieved sufficient skill to challenge a respectable amateur. Samuel's program was among the first uses of what would later be called machine learning.

Game AI would continue to be used as a measure of progress in AI throughout its history.

Symbolic reasoning and the Logic Theorist

When access to digital computers became possible in the mid-1950s, a few scientists instinctively recognized that a machine that could manipulate numbers could also manipulate symbols, and that the manipulation of symbols could well be the essence of human thought. This was a new approach to creating thinking machines.

In 1955, Allen Newell and future Nobel laureate Herbert A. Simon created the "Logic Theorist", with help from J. C. Shaw. The program would eventually prove 38 of the first 52 theorems in Russell and Whitehead's Principia Mathematica, and find new and more elegant proofs for some. Simon said that they had "solved the venerable mind/body problem, explaining how a system composed of matter can have the properties of mind." The symbolic reasoning paradigm they introduced would dominate AI research and funding until the mid-1990s, as well as inspire the cognitive revolution.

Dartmouth Workshop

The Dartmouth workshop of 1956 was a pivotal event that marked the formal inception of AI as an academic discipline.


It was organized by Marvin Minsky and John McCarthy, with the support of two senior scientists, Claude Shannon and Nathan Rochester of IBM. The proposal for the conference stated that they intended to test the assertion that "every aspect of learning or any other feature of intelligence can be so precisely described that a machine can be made to simulate it". The term "artificial intelligence" was coined by John McCarthy for the workshop.

The participants included Ray Solomonoff, Oliver Selfridge, Trenchard More, Arthur Samuel, Allen Newell and Herbert A. Simon, all of whom would create important programs during the first decades of AI research. At the workshop, Newell and Simon debuted the "Logic Theorist". The workshop was the moment that AI gained its name, its mission, its first major success and its key players, and it is widely considered the birth of AI.

Cognitive revolution

In the autumn of 1956, Newell and Simon also presented the Logic Theorist at a meeting of the Special Interest Group in Information Theory at the Massachusetts Institute of Technology (MIT). At the same meeting, Noam Chomsky discussed his generative grammar, and George Miller described his landmark paper "The Magical Number Seven, Plus or Minus Two". Miller wrote: "I left the symposium with a conviction, more intuitive than rational, that experimental psychology, theoretical linguistics, and the computer simulation of cognitive processes were all pieces from a larger whole."

This meeting was the beginning of the "cognitive revolution", an interdisciplinary paradigm shift in psychology, philosophy, computer science and neuroscience. It inspired the creation of the subfields of symbolic artificial intelligence, generative linguistics, cognitive science, cognitive psychology and cognitive neuroscience, and of the philosophical schools of computationalism and functionalism. All these fields used related tools to model the mind, and results discovered in one field were relevant to the others.

The cognitive approach allowed researchers to consider "mental objects" like thoughts, plans, goals, facts or memories, often analyzed using high-level symbols in functional networks. These objects had been forbidden as "unobservable" by earlier paradigms such as behaviorism. Symbolic mental objects would become the major focus of AI research and funding for the next several decades.

Early successes (1956-1974)

The programs developed in the years after the Dartmouth Workshop were, to most people, simply "astonishing": computers were solving algebra word problems, proving theorems in geometry and learning to speak English. Few at the time would have believed that such "intelligent" behavior by machines was possible at all. Researchers expressed intense optimism in private and in print, predicting that a fully intelligent machine would be built in less than 20 years. Government agencies like the Defense Advanced Research Projects Agency (DARPA, then known as "ARPA") poured money into the field. Artificial intelligence laboratories were set up at a number of British and US universities in the late 1950s and early 1960s.

Approaches

There were many successful programs and new directions in the late 1950s and 1960s. Among the most influential were these:

Reasoning, planning and problem solving as search

Many early AI programs used the same basic algorithm. To achieve some goal (like winning a game or proving a theorem), they proceeded step by step towards it (by making a move or a deduction) as if searching through a maze, backtracking whenever they reached a dead end. The principal difficulty was that, for many problems, the number of possible paths through the "maze" was astronomical (a situation known as a "combinatorial explosion"). Researchers would reduce the search space by using heuristics that eliminated paths unlikely to lead to a solution.
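The maze metaphor can be made concrete with a small depth-first search that steps from cell to cell and backs up whenever a branch dead-ends. In this Python sketch the maze, the function names and the Manhattan-distance heuristic are illustrative assumptions, not one of the historical programs. The heuristic here only orders the moves so that promising ones are tried first, a simpler variant of heuristics that eliminate unpromising paths outright.

```python
from typing import List, Optional, Set, Tuple

Cell = Tuple[int, int]

# Hypothetical toy maze: 'S' start, 'G' goal, '#' wall, '.' open floor.
MAZE = [
    "S.#.....",
    ".##.###.",
    "....#...",
    ".##...#G",
]


def locate(symbol: str) -> Cell:
    """Return the (row, column) of the first occurrence of symbol."""
    for r, row in enumerate(MAZE):
        c = row.find(symbol)
        if c != -1:
            return (r, c)
    raise ValueError(f"{symbol!r} not in maze")


def open_neighbours(cell: Cell) -> List[Cell]:
    """Cells one step up, down, left or right that are inside the maze and not walls."""
    r, c = cell
    result = []
    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < len(MAZE) and 0 <= nc < len(MAZE[nr]) and MAZE[nr][nc] != "#":
            result.append((nr, nc))
    return result


def distance(a: Cell, b: Cell) -> int:
    """Manhattan distance: the heuristic estimate of how far a cell is from the goal."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def search(cell: Cell, goal: Cell, visited: Set[Cell]) -> Optional[List[Cell]]:
    """Depth-first search with backtracking; returns a path from cell to goal, or None."""
    if cell == goal:
        return [cell]
    # Heuristic: try the moves that look closest to the goal first.
    for nxt in sorted(open_neighbours(cell), key=lambda n: distance(n, goal)):
        if nxt in visited:
            continue
        visited.add(nxt)
        rest = search(nxt, goal, visited)
        if rest is not None:
            return [cell] + rest
        # Dead end below nxt: fall through and try the next move (backtracking).
    return None


start, goal = locate("S"), locate("G")
path = search(start, goal, {start})
print(path)
```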

Newell and Simon tried to capture a general version of this algorithm in a program called the "General Problem Solver". Other "searching" programs were able to accomplish impressive tasks like solving problems in geometry and algebra, such as Herbert Gelernter's Geometry Theorem Prover (1958) and the Symbolic Automatic Integrator (SAINT), written by Minsky's student James Slagle in 1961. Other programs searched through goals and subgoals to plan actions, like the STRIPS system developed at the Stanford Research Institute to control the behavior of the robot Shakey.

Natural language

An important goal of AI research is to allow computers to communicate in natural languages like English. An early success was Daniel Bobrow's program STUDENT, which could solve high school algebra word problems.

A semantic net represents concepts (e.g. "house", "door") as nodes, and relations among concepts as links between the nodes (e.g. "has-a"). The first AI program to use a semantic net was written by Ross Quillian, and the most successful (and controversial) version was Roger Schank's conceptual dependency theory.
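A semantic net of this kind maps naturally onto a graph of labelled edges. The Python sketch below stores (relation, target) links for each concept and answers queries by also following "is-a" links, so a concept inherits properties from more general ones, an idea usually associated with Quillian's work. The class name, relation labels and example concepts are hypothetical, chosen to echo the "house"/"door"/"has-a" example in the text.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple


class SemanticNet:
    """Concepts are nodes; labelled links such as 'is-a' and 'has-a' join them."""

    def __init__(self) -> None:
        self.links: Dict[str, List[Tuple[str, str]]] = defaultdict(list)

    def add(self, source: str, relation: str, target: str) -> None:
        """Add a directed link: source --relation--> target."""
        self.links[source].append((relation, target))

    def related(self, concept: str, relation: str) -> Set[str]:
        """Targets reachable from concept via relation, inherited along 'is-a' links."""
        found: Set[str] = set()
        seen: Set[str] = set()
        stack = [concept]
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            for rel, target in self.links.get(node, []):
                if rel == relation:
                    found.add(target)
                if rel == "is-a":
                    stack.append(target)  # climb to the more general concept
        return found


net = SemanticNet()
net.add("house", "has-a", "door")
net.add("house", "has-a", "roof")
net.add("cottage", "is-a", "house")
net.add("cottage", "has-a", "garden")

print(sorted(net.related("cottage", "has-a")))  # ['door', 'garden', 'roof']
```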

Joseph Weizenbaum's ELIZA could carry out conversations that were so realistic that users occasionally were fooled into thinking they were communicating with a human being and not a computer program (see ELIZA effect). In fact, ELIZA simply gave a canned response or repeated back what was said to it, rephrasing its response with a few grammar rules. ELIZA was the first chatbot.
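The mechanism described above (match the input against a pattern, then either reassemble a reply from the user's own words or fall back to a canned response) can be sketched in a few lines of Python. This is an illustrative toy, not Weizenbaum's program or its original script; the rules, the pronoun table and the `respond`/`reflect` helpers are all hypothetical.

```python
import re

# Pronoun swaps used to "reflect" the user's words back at them (hypothetical table).
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are",
               "you": "I", "your": "my"}

# (pattern, reply template) pairs tried in order; {0} receives the reflected
# text captured by the pattern. These rules are hypothetical examples.
RULES = [
    (re.compile(r"i need (.*)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r".*\bmother\b.*", re.IGNORECASE), "Tell me more about your family."),
]
DEFAULT = "Please go on."  # canned response when no rule matches


def reflect(fragment):
    """Swap first- and second-person words, e.g. 'my job' -> 'your job'."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(utterance):
    """Return the reply from the first matching rule, or the canned default."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.fullmatch(text)
        if match:
            return template.format(*(reflect(group) for group in match.groups()))
    return DEFAULT


print(respond("I need a break from my job."))  # Why do you need a break from your job?
print(respond("My mother called."))            # Tell me more about your family.
print(respond("The weather is nice."))         # Please go on.
```

Nothing in the program models meaning: the "understanding" a user perceives comes entirely from echoing their own words with the pronouns swapped.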

Micro-worlds

In the late 1960s, Marvin Minsky and Seymour Papert of the MIT AI Laboratory proposed that AI research should focus on artificially simple situations known as micro-worlds. They pointed out that in successful sciences like physics, basic principles were often best understood using simplified models like frictionless planes or perfectly rigid bodies. Much of the research focused on a "blocks world," which consists of colored blocks of various shapes and sizes arrayed on a flat surface.

This paradigm led to innovative work in machine vision by Gerald Sussman, Adolfo Guzman, David Waltz (who invented "constraint propagation"), and especially Patrick Winston. At the same time, Minsky and Papert built a robot arm that could stack blocks, bringing the blocks world to life.

Terry Winograd's SHRDLU could communicate in ordinary English sentences about the micro-world, plan operations and execute them.

Perceptrons and early neural networks

In the 1960s, funding was primarily directed towards laboratories researching symbolic AI; however, several people still pursued research in neural networks.

The perceptron, a single-layer neural network, was introduced in 1958 by Frank Rosenblatt (who had been a schoolmate of Marvin Minsky at the Bronx High School of Science). Like most AI researchers, he was optimistic about their power, predicting that a perceptron "may eventually be able to learn, make decisions, and translate languages." Rosenblatt was primarily funded by the Office of Naval Research.
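The perceptron learns by error correction: when it misclassifies an example, each weight is nudged in the direction that would have made the answer right. A minimal software sketch of that rule follows. It is not a reconstruction of Rosenblatt's machine; the zero initial weights, the learning rate of 1, the 0/1 targets and the logical-AND training set are assumptions chosen for the example.

```python
def predict(weights, bias, x):
    """Threshold unit: output 1 if the weighted sum plus bias exceeds 0, else 0."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, lr=1.0, max_epochs=100):
    """Perceptron error-correction rule: adjust weights only on misclassified samples."""
    n_inputs = len(samples[0][0])
    weights, bias = [0.0] * n_inputs, 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            if error:
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
                errors += 1
        if errors == 0:  # a full pass with no mistakes: training has converged
            break
    return weights, bias


# Logical AND is linearly separable, so the rule is guaranteed to converge.
AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(AND)
print([predict(w, b, x) for x, _ in AND])  # [0, 0, 0, 1]
```

Because only misclassified examples change the weights, training stops by itself once a separating line is found.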

Bernard Widrow and his student Ted Hoff built ADALINE (1960) and MADALINE (1962), which had up to 1000 adjustable weights. A group at Stanford Research Institute led by Charles A. Rosen and Alfred E. (Ted) Brain built two neural network machines named MINOS I (1960) and II (1963), mainly funded by the U.S. Army Signal Corps. MINOS II had 6600 adjustable weights and was controlled with an SDS 910 computer in a configuration named MINOS III (1968), which could classify symbols on army maps and recognize hand-printed characters on Fortran coding sheets. Most neural network research during this early period involved building and using bespoke hardware, rather than simulation on digital computers.

However, partly due to a lack of results and partly due to competition from symbolic AI research, the MINOS project ran out of funding in 1966. Rosenblatt failed to secure continued funding in the 1960s. In 1969, research came to a sudden halt with the publication of Minsky and Papert's book ''Perceptrons''. It suggested that there were severe limitations to what perceptrons could do and that Rosenblatt's predictions had been grossly exaggerated. The effect of the book was that virtually no research in connectionism was funded for 10 years. The competition for government funding ended with the victory of symbolic AI approaches over neural networks.
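The passage does not spell out what those limitations were. One standard textbook illustration (not a summary of the book's own argument) is exclusive-or (XOR). A single threshold unit outputs 1 when w1*x1 + w2*x2 + b > 0, so computing XOR would require b ≤ 0 and w1 + w2 + b ≤ 0 (inputs 00 and 11 give 0) together with w1 + b > 0 and w2 + b > 0 (inputs 10 and 01 give 1). Adding each pair gives both w1 + w2 + 2b ≤ 0 and w1 + w2 + 2b > 0, a contradiction, so no weights work. The sketch below, with hypothetical helper names and an arbitrary epoch limit, shows the perceptron rule converging on AND but never on XOR.

```python
def predict(weights, bias, x):
    """Threshold unit: output 1 if the weighted sum plus bias exceeds 0, else 0."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def epochs_to_converge(samples, max_epochs=1000):
    """Run the perceptron rule; return the epoch with zero errors, or None if none occurs."""
    weights, bias = [0.0] * len(samples[0][0]), 0.0
    for epoch in range(1, max_epochs + 1):
        errors = 0
        for x, target in samples:
            error = target - predict(weights, bias, x)
            if error:
                weights = [w + error * xi for w, xi in zip(weights, x)]
                bias += error
                errors += 1
        if errors == 0:
            return epoch
    return None  # never classified every sample correctly


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

print(epochs_to_converge(AND) is not None)  # True: AND is linearly separable
print(epochs_to_converge(XOR))              # None: no single threshold unit computes XOR
```

The XOR result does not depend on the epoch limit: by the argument above, an error-free pass is impossible for any weights.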

Minsky (who had worked on SNARC) became a staunch objector to pure connectionist AI. Widrow (who had worked on ADALINE) turned to adaptive signal processing. The SRI group (which worked on MINOS) turned to symbolic AI and robotics.

The main problem was the inability to train multilayered networks (versions of backpropagation had already been used in other fields, but they were unknown to these researchers). The AI community became aware of backpropagation in the 1980s, and in the 21st century neural networks would become enormously successful, fulfilling all of Rosenblatt's optimistic predictions. Rosenblatt did not live to see this, however; he died in a boating accident in 1971.
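Backpropagation addresses the problem named above: it applies the chain rule to pass each output error back through the hidden layer, so the hidden weights can be trained as well. The sketch below trains a small network of sigmoid units on exclusive-or (XOR), which no single-layer perceptron can represent. It uses a modern textbook formulation (online gradient descent on squared error), not any historical implementation; the three hidden units, learning rate, seed and epoch count are assumptions, and a network this small can occasionally stall in a poor local minimum with other settings.

```python
import math
import random


def sigmoid(z):
    """Logistic function, written to avoid overflow for large negative z."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
H = 3                    # number of hidden units (assumption)
LR = 0.5                 # learning rate (assumption)
rng = random.Random(0)   # fixed seed so runs are repeatable

w_hidden = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(H)]
b_hidden = [rng.uniform(-1, 1) for _ in range(H)]
w_out = [rng.uniform(-1, 1) for _ in range(H)]
b_out = rng.uniform(-1, 1)


def forward(x):
    """Return the hidden activations and the network output for input x."""
    h = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
         for ws, b in zip(w_hidden, b_hidden)]
    y = sigmoid(sum(w * hj for w, hj in zip(w_out, h)) + b_out)
    return h, y


def loss():
    """Squared error E = sum of (y - t)^2 / 2 over the four XOR cases."""
    return sum((forward(x)[1] - t) ** 2 for x, t in XOR) / 2


initial_loss = loss()
for epoch in range(10000):
    for x, t in XOR:
        h, y = forward(x)
        # Output delta: dE/dz for the output unit (sigmoid derivative is y * (1 - y)).
        delta_out = (y - t) * y * (1 - y)
        # Hidden deltas: chain rule back through the (not yet updated) output weights.
        delta_h = [delta_out * w_out[j] * h[j] * (1 - h[j]) for j in range(H)]
        # Gradient-descent step for every weight and bias.
        for j in range(H):
            w_out[j] -= LR * delta_out * h[j]
            for i in range(2):
                w_hidden[j][i] -= LR * delta_h[j] * x[i]
            b_hidden[j] -= LR * delta_h[j]
        b_out -= LR * delta_out

print(f"loss: {initial_loss:.3f} -> {loss():.3f}")
print([round(forward(x)[1], 2) for x, _ in XOR])
```

The hidden deltas are computed before any output weight changes, so every update in a step uses gradients from the same set of weights.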

Optimism

The first generation of AI researchers made these predictions about their work:

* 1958, H. A. Simon and Allen Newell: "within ten years a digital computer will be the world's chess champion" and "within ten years a digital computer will discover and prove an important new mathematical theorem."

* 1965, H. A. Simon: "machines will be capable, within twenty years, of doing any work a man can do."

* 1967, Marvin Minsky: "Within a generation... the problem of creating 'artificial intelligence' will substantially be solved."

* 1970, Marvin Minsky (in ''Life'' magazine): "In from three to eight years we will have a machine with the general intelligence of an average human being."

Financing

In June 1963, MIT received a $2.2 million grant from the newly created Advanced Research Projects Agency (ARPA, later known as DARPA