
Shifting (syntax)

In syntax, shifting occurs when two or more constituents appearing on the same side of their common head exchange positions in a sense to obtain non-canonical order. The most widely acknowledged type of shifting is heavy NP shift, but shifting involving a heavy NP is just one manifestation of the shifting mechanism. Shifting occurs in most if not all European languages, and it may in fact be possible in all natural languages, including sign languages. Shifting is not inversion, and inversion is not shifting, but the two mechanisms are similar insofar as they are both present in languages like English that have relatively strict word order. The theoretical analysis of shifting varies in part depending on the theory of sentence structure that one adopts. If one assumes relatively flat structures, shifting does not result in a discontinuity. Shifting is often motivated by t ...
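As a rough illustration of the flat-structure view, where shifting is simply a reordering of sister constituents on the same side of the head, the following Python sketch reorders the dependents that follow a verb. The function name `shift`, the example phrases, and the rule that lighter constituents precede heavier ones (with weight measured crudely as word count) are assumptions of this sketch, not an analysis taken from the text above.

```python
def shift(head: str, dependents: list[str]) -> list[str]:
    """Order the constituents that follow `head`, lighter ones first.

    Assumption of this sketch: a constituent's "weight" is its word count.
    sorted() is stable, so constituents of equal weight keep their input
    (canonical) order.
    """
    ordered = sorted(dependents, key=lambda d: len(d.split()))
    return [head] + ordered


# Canonical order: the light object precedes the prepositional phrase.
print(shift("gave", ["the book", "to her sister"]))
# Shifted order: the heavy object ends up after the prepositional phrase.
print(shift("gave", ["the book that she had ordered last spring", "to her sister"]))
```

The second call mimics heavy NP shift: the long object and *to her sister* exchange positions, both still following the verb. Shifting in actual usage is optional and not determined by length alone, so this is only a caricature of the mechanism.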

Syntax

In linguistics, syntax is the study of how words and morphemes combine to form larger units such as phrases and sentences. Central concerns of syntax include word order, grammatical relations, hierarchical sentence structure (constituency), agreement, the nature of crosslinguistic variation, and the relationship between form and meaning (semantics). Diverse approaches, such as generative grammar and functional grammar, offer unique perspectives on syntax, reflecting its complexity and centrality to understanding human language.

Etymology

The word *syntax* comes from the ancient Greek word σύνταξις, meaning an orderly or systematic arrangement, which consists of σύν- (*syn-*, "together" or "alike") and τάξις (*táxis*, "arrangement"). In Hellenistic Greek, this also specifically developed a use referring to the grammatical order of words, with a slightly altered spelling. The English term, which first appeared in 1548, is partly borrowed from Latin (*syntaxis*) and Greek, though the L ...

Phrase Structure Grammar

The term phrase structure grammar was originally introduced by Noam Chomsky as the term for grammar studied previously by Emil Post and Axel Thue (Post canonical systems). Some authors, however, reserve the term for more restricted grammars in the Chomsky hierarchy: context-sensitive grammars or context-free grammars. In a broader sense, phrase structure grammars are also known as *constituency grammars*. The defining character of phrase structure grammars is thus their adherence to the constituency relation, as opposed to the dependency relation of dependency grammars.

History

In 1956, Chomsky wrote, "A phrase-structure grammar is defined by a finite vocabulary (alphabet) Vp, and a finite set Σ of initial strings in Vp, and a finite set F of rules of the form: X → Y, where X and Y are strings in Vp."

Constituency relation

In linguistics, phrase structure grammars are all those grammars that are based on the constituency relation, as opposed to the dependency relation ...
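Chomsky's 1956 definition can be sketched directly as string rewriting: a derivation starts from an initial string and repeatedly replaces some occurrence of X with Y. In the minimal Python sketch below, the function names, the choice to rewrite the leftmost occurrence, the single initial string `S`, and the toy rule set are all illustrative assumptions. Because X may be any tuple of symbols, `rewrite` also accepts multi-symbol left-hand sides, not just the context-free case used in the example.

```python
from typing import Sequence, Tuple

Symbol = str
String = Tuple[Symbol, ...]  # a string over the vocabulary Vp
Rule = Tuple[String, String]  # a rule X -> Y


def rewrite(current: String, rule: Rule) -> String:
    """Apply X -> Y once, at the leftmost occurrence of X in `current`."""
    x, y = rule
    for i in range(len(current) - len(x) + 1):
        if current[i:i + len(x)] == x:
            return current[:i] + y + current[i + len(x):]
    raise ValueError(f"rule {x} -> {y} does not apply to {current}")


def derive(initial: String, rules: Sequence[Rule]) -> list[String]:
    """Apply the rules in order, returning each line of the derivation."""
    lines = [initial]
    for rule in rules:
        lines.append(rewrite(lines[-1], rule))
    return lines


# Hypothetical toy rules. Every left-hand side is a single symbol,
# so this particular rule set is context-free.
TOY_RULES: list[Rule] = [
    (("S",), ("NP", "VP")),
    (("NP",), ("the", "N")),
    (("N",), ("dog",)),
    (("VP",), ("V", "NP")),
    (("V",), ("saw",)),
    (("NP",), ("the", "N")),
    (("N",), ("cat",)),
]

for line in derive(("S",), TOY_RULES):
    print(" ".join(line))
```

The printed derivation runs from `S` to `the dog saw the cat`, one rule application per line.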

Wh-movement

In linguistics, wh-movement (also known as wh-fronting, wh-extraction, or wh-raising) is the formation of syntactic dependencies involving interrogative words. An example in English is the dependency formed between *what* and the object position of *doing* in "What are you doing?". Interrogative words are sometimes called *wh-words* in English linguistics; they include *what, when, where, who*, and *why*, as well as other interrogative words such as *how*. This dependency has been used as a diagnostic tool in syntactic studies because it can be observed to interact with other grammatical constraints. In languages with wh-movement, sentences or clauses with a wh-word show a non-canonical word order that places the wh-word (or phrase containing the wh-word) at or near the front of the sentence or clause ("*Whom* are you thinking about?") instead of the canonical position later in the sentence ("I am thinking about *you*"). Leaving the wh-word in its canonical pos ...
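The filler-gap dependency described above can be sketched as a token-level operation: move the wh-word to the front and record which position it is linked to. The sketch uses an embedded question such as "(I wonder) what you are doing", because English does not invert subject and auxiliary in embedded questions, so fronting is the only reordering involved. The `front_wh` function and the `__` gap marker are hypothetical illustrations, not a theory's formal mechanism.

```python
GAP = "__"  # hypothetical marker for the canonical position left empty


def front_wh(tokens: list[str], wh_index: int) -> tuple[list[str], tuple[int, int]]:
    """Move the wh-word at `wh_index` to the front of the clause.

    Returns the new token list and a (filler, gap) pair of positions in it,
    recording the dependency between the fronted wh-word and its canonical slot.
    """
    wh = tokens[wh_index]
    moved = [wh] + tokens[:wh_index] + [GAP] + tokens[wh_index + 1:]
    # Everything before the gap shifted one place right, so the gap sits at wh_index + 1.
    return moved, (0, wh_index + 1)


# Embedded clause in canonical order: "... you are doing what"
clause, dep = front_wh(["you", "are", "doing", "what"], 3)
print(" ".join(clause))  # what you are doing __
print(dep)               # (0, 4)
```

The returned pair is what makes this a dependency rather than a mere reordering: the wh-word at position 0 is interpreted as the object of *doing* at the gap.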

Ray Jackendoff

Ray Jackendoff (born January 23, 1945) is an American linguist. He is professor of philosophy, Seth Merrin Chair in the Humanities and, with Daniel Dennett, co-director of the Center for Cognitive Studies at Tufts University. He has always straddled the boundary between generative linguistics and cognitive linguistics, committed both to the existence of an innate universal grammar (an important thesis of generative linguistics) and to giving an account of language that is consistent with the current understanding of the human mind and cognition (the main purpose of cognitive linguistics). Jackendoff's research deals with the semantics of natural language, its bearing on the formal structure of cognition, and its lexical and syntactic expression. He has conducted extensive research on the relationship between conscious awareness and the computational theory of mind, on syntactic theory, and, with Fred Lerdahl, on musical cognition, culminating in their generative theory of t ...

Dependency Grammar

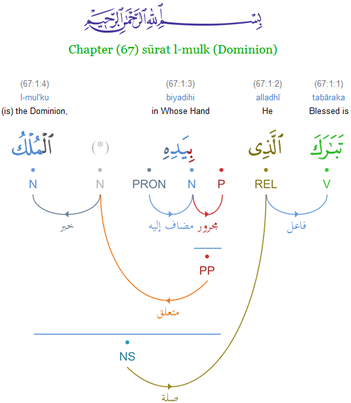

Dependency grammar (DG) is a class of modern grammatical theories that are all based on the dependency relation (as opposed to the *constituency relation* of phrase structure) and that can be traced back primarily to the work of Lucien Tesnière. Dependency is the notion that linguistic units, e.g. words, are connected to each other by directed links. The (finite) verb is taken to be the structural center of clause structure. All other syntactic units (words) are either directly or indirectly connected to the verb in terms of the directed links, which are called *dependencies*. Dependency grammar differs from phrase structure grammar in that, while it can identify phrases, it tends to overlook phrasal nodes. A dependency structure is determined by the relation between a word (a head) and its dependents. Dependency structures are flatter than phrase structures in part because they lack a finite verb phrase constit ...
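The description above translates naturally into a data structure: each word stores the index of its head, and the finite verb is the one word with no head. The minimal Python sketch below assumes a hypothetical analysis of "The dog chased a cat"; the names `root`, `dependents`, and `path_to_root` are illustrative, not taken from any dependency-grammar toolkit.

```python
from typing import Optional

# Hypothetical example: "The dog chased a cat".
# HEADS[i] is the index of word i's head; None marks the root (the finite verb).
WORDS = ["The", "dog", "chased", "a", "cat"]
HEADS: list[Optional[int]] = [1, 2, None, 4, 2]


def root(heads: list[Optional[int]]) -> int:
    """Return the index of the single word that has no head."""
    roots = [i for i, h in enumerate(heads) if h is None]
    if len(roots) != 1:
        raise ValueError("a dependency tree needs exactly one root")
    return roots[0]


def dependents(heads: list[Optional[int]], head: int) -> list[int]:
    """Indices of the words directly linked to `head`."""
    return [i for i, h in enumerate(heads) if h == head]


def path_to_root(heads: list[Optional[int]], word: int) -> list[int]:
    """Follow head links upward from `word`; fails on a cycle."""
    path = [word]
    while heads[path[-1]] is not None:
        nxt = heads[path[-1]]
        if nxt in path:
            raise ValueError("cycle in head links")
        path.append(nxt)
    return path


verb = root(HEADS)
print(WORDS[verb])                                  # chased
print([WORDS[i] for i in dependents(HEADS, verb)])  # ['dog', 'cat']
# Every word reaches the verb, directly or indirectly.
print(all(path_to_root(HEADS, i)[-1] == verb for i in range(len(WORDS))))  # True
```

Note that the structure has exactly one node per word and no phrasal nodes, which is one concrete sense in which dependency structures are flatter than phrase structures.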

Head-final

In linguistics, head directionality is a proposed parameter that classifies languages according to whether they are head-initial (the head of a phrase precedes its complements) or head-final (the head follows its complements). The head is the element that determines the category of a phrase: for example, in a verb phrase, the head is a verb. Therefore, at the level of the verb phrase, head-initial languages would be "VO" (verb–object) languages and head-final languages would be "OV" (object–verb) languages. Some languages are consistently head-initial or head-final at all phrasal levels. English is considered to be mainly head-initial (verbs precede their objects, for example), while Japanese is an example of a language that is consistently head-final. In certain other languages, such as German and Gbe, examples of both types of head directionality occu ...
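The parameter can be sketched as a single switch applied at every phrasal level. In the Python sketch below, the `Phrase` class, the `linearize` function, and the one-complement-per-head simplification are assumptions for illustration; English words stand in as glosses. The head-final output mirrors the order of a consistently head-final language such as Japanese, where the adposition follows its noun and the verb follows its complement.

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass
class Phrase:
    """A head plus at most one complement, which may itself be a phrase."""
    head: str
    complement: Optional[Union["Phrase", str]] = None


def linearize(node: Union[Phrase, str], head_initial: bool) -> list[str]:
    """Spell out a phrase, placing every head before or after its complement."""
    if isinstance(node, str):
        return [node]
    if node.complement is None:
        return [node.head]
    comp = linearize(node.complement, head_initial)
    return [node.head] + comp if head_initial else comp + [node.head]


# A verb phrase whose complement is an adpositional phrase (English glosses).
vp = Phrase("go", Phrase("to", "school"))
print(linearize(vp, head_initial=True))   # ['go', 'to', 'school']
print(linearize(vp, head_initial=False))  # ['school', 'to', 'go']
```

Because the same flag is used at every level, the sketch models only consistently head-initial or head-final languages; mixed languages such as German would need a per-category setting.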

Constituent (linguistics)

In syntactic analysis, a constituent is a word or a group of words that functions as a single unit within a hierarchical structure. The constituent structure of sentences is identified using *tests for constituents*. These tests apply to a portion of a sentence, and the results provide evidence about the constituent structure of the sentence. Many constituents are phrases. A phrase is a sequence of one or more words (in some theories two or more) built around a head lexical item and working as a unit within a sentence. A word sequence is shown to be a phrase/constituent if it exhibits one or more of the behaviors discussed below. The analysis of constituent structure is associated mainly with phrase structure grammars, although dependency grammars also allow sentence structure to be broken down into constituent parts.

Tests for constituents in English

Tests for constituents are diagnostics used to identify sentence structure. There are numerous tests for constituents that are ...
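Given a particular hierarchical analysis, whether a word sequence is a constituent reduces to whether some node spans exactly those words. The Python sketch below assumes a hypothetical bracketing of "the dog chased a cat" written as nested tuples; it checks spans against that one analysis and does not implement any of the empirical tests for constituents, which rely on speakers' judgments.

```python
from typing import Union

# A tree is either a word (str) or a tuple: (label, child, child, ...).
Tree = Union[str, tuple]


def constituent_spans(tree: Tree) -> set[tuple[int, int]]:
    """Return the (start, end) word spans of every node in the tree.

    Spans are half-open: (0, 2) covers words 0 and 1.
    """
    spans: set[tuple[int, int]] = set()

    def walk(node: Tree, start: int) -> int:
        if isinstance(node, str):  # a single word is a (trivial) constituent
            spans.add((start, start + 1))
            return start + 1
        end = start
        for child in node[1:]:  # node[0] is the category label
            end = walk(child, end)
        spans.add((start, end))
        return end

    walk(tree, 0)
    return spans


# Hypothetical analysis of "the dog chased a cat".
TREE = ("S", ("NP", "the", "dog"), ("VP", "chased", ("NP", "a", "cat")))
SPANS = constituent_spans(TREE)
print((2, 5) in SPANS)  # "chased a cat" -> True
print((1, 3) in SPANS)  # "dog chased"   -> False
```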

Syntactic Category

A syntactic category is a syntactic unit that theories of syntax assume. Word classes, largely corresponding to traditional parts of speech (e.g. noun, verb, preposition, etc.), are syntactic categories. In phrase structure grammars, the *phrasal categories* (e.g. noun phrase, verb phrase, prepositional phrase, etc.) are also syntactic categories. Dependency grammars, however, do not acknowledge phrasal categories (at least not in the traditional sense). Word classes considered as syntactic categories may be called *lexical categories*, as distinct from phrasal categories. The terminology is somewhat inconsistent between the theoretical models of different linguists. However, many grammars also draw a distinction between *lexical categories* (which tend to consist of content words, or phrases headed by them) and *functional categories* (which tend to consist of function words or abstract functional elements, or phrases headed by them). The term *lexical category* the ...

Semantics

Semantics is the study of linguistic meaning. It examines what meaning is, how words get their meaning, and how the meaning of a complex expression depends on its parts. Part of this inquiry involves the distinction between sense and reference. Sense is given by the ideas and concepts associated with an expression, while reference is the object to which an expression points. Semantics contrasts with syntax, which studies the rules that dictate how to create grammatically correct sentences, and pragmatics, which investigates how people use language in communication. Lexical semantics is the branch of semantics that studies word meaning. It examines whether words have one or several meanings and in what lexical relations they stand to one another. Phrasal semantics studies the meaning of sentences by exploring the phenomenon of compositionality, or how new meanings can be created by arranging words. Formal semantics relies o ...
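Compositionality can be illustrated with a toy model-theoretic sketch in Python: names denote individuals, intransitive verbs denote sets of individuals, and transitive verbs denote sets of (subject, object) pairs, so a sentence's truth value is computed from the meanings of its parts and how they are combined. The two-individual domain, the lexicon, and the function names are hypothetical simplifications, not the machinery of any particular formal semantics framework.

```python
# Hypothetical toy model: the domain of individuals and what each word denotes.
DOMAIN = {"alice", "bob"}
LEXICON = {
    "Alice": "alice",            # a name denotes an individual
    "Bob": "bob",
    "sleeps": {"alice"},         # an intransitive verb denotes a set of individuals
    "sees": {("alice", "bob")},  # a transitive verb denotes (subject, object) pairs
}


def vp_meaning(vp: tuple[str, ...]) -> set[str]:
    """The set of individuals of whom the verb phrase is true."""
    verb, *rest = vp
    if not rest:  # intransitive: the verb's set as is
        return set(LEXICON[verb])
    obj = LEXICON[rest[0]]  # transitive: combine the verb with its object
    return {x for x in DOMAIN if (x, obj) in LEXICON[verb]}


def sentence_meaning(subject: str, vp: tuple[str, ...]) -> bool:
    """A subject-predicate sentence is true iff the subject is in the VP's set."""
    return LEXICON[subject] in vp_meaning(vp)


print(sentence_meaning("Alice", ("sees", "Bob")))  # True
print(sentence_meaning("Bob", ("sees", "Alice")))  # False: same words, different arrangement
print(sentence_meaning("Bob", ("sleeps",)))        # False
```

The first two calls use the same three words, yet arranging them differently yields different truth values, which is the point about compositionality made above.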