Operational Data Store

An operational data store (ODS) is used for operational reporting and as a source of data for the enterprise data warehouse (EDW). It complements an EDW in a decision support environment: the ODS serves operational reporting, controls, and decision making, whereas the EDW serves tactical and strategic decision support. An ODS is a database designed to integrate data from multiple sources for additional operations on the data, for reporting, controls and operational decision support. Unlike a production master data store, the data is not passed back to operational systems. It may be passed on for further operations and to the data warehouse for reporting. An ODS should not be confused with an enterprise data hub (EDH). An operational data store takes transactional data from one or more production systems and loosely integrates it; in some respects it is still subject oriented, integrated and time variant, but without the volatility constr ...

Enterprise Data Warehouse

In computing, a data warehouse (DW or DWH), also known as an enterprise data warehouse (EDW), is a system used for reporting and data analysis and is a core component of business intelligence. Data warehouses are central repositories of data integrated from disparate sources. They store current and historical data organized in a way that is optimized for data analysis, report generation, and the development of insights across the integrated data. They are intended to be used by analysts and managers to help make organizational decisions. The data stored in the warehouse is uploaded from operational systems (such as marketing or sales). The data may pass through an operational data store and may require data cleansing for additional operations to ensure data quality before it is used in the data warehouse for reporting. The two main workflows for building a data warehouse system are extract, transform, load (ETL) and extract, load, transform (ELT). The environment ...

Data Management

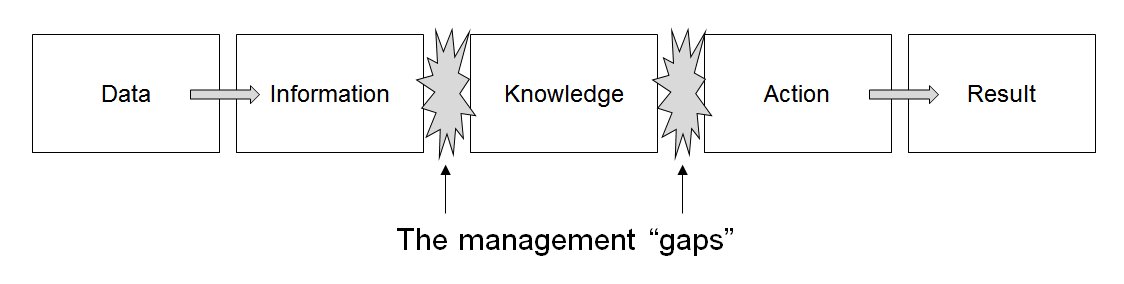

Data management comprises all disciplines related to handling data as a valuable resource; it is the practice of managing an organization's data so it can be analyzed for decision making. The concept of data management emerged alongside the evolution of computing technology. In the 1950s, as computers became more prevalent, organizations began to grapple with the challenge of organizing and storing data efficiently. Early methods relied on punch cards and manual sorting, which were labor-intensive and prone to errors. The introduction of database management systems in the 1970s marked a significant milestone, enabling structured storage and retrieval of data. By the 1980s, relational database models revolutionized data management, emphasizing the importance of data as an asset and fostering a data-centric mindset in business. This era also saw the rise of data governance practices, which prioritized the organization and regulation of data to ensure quality and complian ...

John Wiley & Sons

John Wiley & Sons, Inc., commonly known as Wiley, is an American multinational publishing company that focuses on academic publishing and instructional materials. The company was founded in 1807 and produces books, journals, and encyclopedias, in print and electronically, as well as online products and services, training materials, and educational materials for undergraduate, graduate, and continuing education students. The company was established in 1807 when Charles Wiley opened a print shop in Manhattan. The company was the publisher of 19th-century American literary figures like James Fenimore Cooper, Washington Irving, Herman Melville, and Edgar Allan Poe, as well as of legal, religious, and other non-fiction titles. The firm took its current name in 1865. Wiley later shifted its focus to scientific, technical, and engineering subject areas, abandoning its literary interests. Wiley's son Joh ...

Third Normal Form

Third normal form (3NF) is a database schema design approach for relational databases which uses normalizing principles to reduce the duplication of data, avoid data anomalies, ensure referential integrity, and simplify data management. It was defined in 1971 by Edgar F. Codd, an English computer scientist who invented the relational model for database management. A database relation (e.g. a database table) is said to meet third normal form standards if all the attributes (e.g. database columns) are functionally dependent solely on a key, except in the case of a functional dependency whose right-hand side is a prime attribute (an attribute that belongs to some candidate key). Codd defined this as a relation in second normal form where all non-prime attributes depend only on the candidate keys and do not have a transitive dependency on another key. A hypothetical example of a failure to meet third normal form would be a hospital database having a table of patients which ...
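The transitive-dependency condition can be illustrated with a small sketch. The employee table, its column names, and the helper functions `holds` and `project` below are hypothetical and chosen only for illustration; they are not taken from the source. The sketch checks whether a functional dependency holds in sample rows and then splits the table so that each non-prime attribute depends only on a key.

```python
def holds(rows, lhs, rhs):
    """Return True if the functional dependency lhs -> rhs holds in rows."""
    seen = {}
    for row in rows:
        key = tuple(row[a] for a in lhs)
        val = tuple(row[a] for a in rhs)
        # The first value seen for a key is remembered; any later mismatch breaks the dependency.
        if seen.setdefault(key, val) != val:
            return False
    return True


def project(rows, attrs):
    """Project rows onto attrs, dropping duplicates while preserving order."""
    out = []
    for row in rows:
        item = {a: row[a] for a in attrs}
        if item not in out:
            out.append(item)
    return out


# Hypothetical table with key emp_id. dept_name depends on dept_id, which is
# not a key, so emp_id -> dept_id -> dept_name is a transitive dependency.
EMPLOYEES = [
    {"emp_id": 1, "name": "Ann", "dept_id": 10, "dept_name": "Sales"},
    {"emp_id": 2, "name": "Bo", "dept_id": 10, "dept_name": "Sales"},
    {"emp_id": 3, "name": "Cy", "dept_id": 20, "dept_name": "Audit"},
]

# Decomposition: department facts move to their own relation keyed by dept_id.
employees_3nf = project(EMPLOYEES, ["emp_id", "name", "dept_id"])
departments_3nf = project(EMPLOYEES, ["dept_id", "dept_name"])
```

After the split, the department name is stored once per department rather than once per employee, which is the duplication that 3NF is meant to remove.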

Enterprise Architecture

Enterprise architecture (EA) is a business function concerned with the structures and behaviours of a business, especially business roles and processes that create and use business data. The international definition according to the Federation of Enterprise Architecture Professional Organizations is "a well-defined practice for conducting enterprise analysis, design, planning, and implementation, using a comprehensive approach at all times, for the successful development and execution of strategy. Enterprise architecture applies architecture principles and practices to guide organizations through the business, information, process, and technology changes necessary to execute their strategies. These practices utilize the various aspects of an enterprise to identify, motivate, and achieve these changes." The United States Federal Government is an example of an organization that practices EA, in this case with its Capital Planning an ...

Architectural Pattern (Computer Science)

A software architecture pattern is a reusable, proven solution to a specific, recurring problem in architectural design, and it can be applied within various architectural styles. Examples of architectural patterns include the publish–subscribe pattern and the message broker. See also: list of software architecture styles and patterns, Process Driven Messaging Service, enterprise architecture, and common layers in an information system logical architecture.

Extract, Transform, Load

Extract, transform, load (ETL) is a three-phase computing process where data is extracted from an input source, transformed (including cleaning), and loaded into an output data container. The data can be collected from one or more sources and it can also be output to one or more destinations. ETL processing is typically executed using software applications but it can also be done manually by system operators. ETL software typically automates the entire process and can be run manually or on recurring schedules either as single jobs or aggregated into a batch of jobs. A properly designed ETL system extracts data from source systems, enforces data type and data validity standards, and ensures the data conforms structurally to the requirements of the output. Some ETL systems can also deliver data in a presentation-ready format so that application developers can build applications and end users can make decisions. The ETL process is often used in data warehousing. ETL sys ...
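The three phases can be sketched in a few lines of Python using only the standard library. This is a minimal illustration under stated assumptions, not a production pipeline: the CSV content, the `orders` table, and the function names `extract`, `transform`, `load`, and `run_etl` are all hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical input source: a small CSV export with one malformed amount.
RAW_CSV = (
    "order_id,customer,amount\n"
    "1, Alice ,10.50\n"
    "2,Bob,not-a-number\n"
    "3,carol,7\n"
)


def extract(text):
    """Extract: read rows from the CSV source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: enforce types, tidy names, and drop rows that fail validation."""
    clean = []
    for row in rows:
        try:
            order_id = int(row["order_id"])
            amount = float(row["amount"])
        except ValueError:
            continue  # invalid type: reject the row instead of loading bad data
        clean.append((order_id, row["customer"].strip().title(), amount))
    return clean


def load(rows, conn):
    """Load: write the cleaned rows into the output table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, customer TEXT NOT NULL, amount REAL NOT NULL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_etl(text, conn):
    """Run the three phases in order and return the loaded rows."""
    load(transform(extract(text)), conn)
    query = "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
    return conn.execute(query).fetchall()
```

Calling `run_etl(RAW_CSV, sqlite3.connect(":memory:"))` loads only the rows that pass validation; the row with the non-numeric amount is dropped during the transform phase.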

Federated Database System

A federated database system (FDBS) is a type of meta-database management system (DBMS) that transparently maps multiple autonomous database systems into a single federated database. The constituent databases are interconnected via a computer network and may be geographically decentralized. Since the constituent database systems remain autonomous, a federated database system is a contrastable alternative to the (sometimes daunting) task of merging several disparate databases. A federated database, or virtual database, is a composite of all constituent databases in a federated database system. There is no actual data integration in the constituent disparate databases as a result of data federation. Through data abstraction, federated database systems can provide a uniform user interface, enabling users and clients to store and retrieve data from multiple noncontiguous databases with a single Informati ...
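As a rough sketch of the idea, the following Python example places a thin mediator in front of two independent in-memory SQLite databases. The class `FederatedCustomers`, the helper `make_source`, and the `customers` schema are hypothetical; a real federated system would also handle schema mapping, networking, and query planning, none of which is shown here.

```python
import sqlite3


def make_source(rows):
    """Create one autonomous constituent database holding a customers table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", rows)
    return conn


class FederatedCustomers:
    """Uniform query interface over several independent databases.

    No data is copied or merged: each query is sent to every source and
    the partial results are combined on the way back.
    """

    def __init__(self, sources):
        self.sources = sources  # mapping: source name -> sqlite3 connection

    def find_by_name(self, name):
        results = []
        for source_name, conn in sorted(self.sources.items()):
            cursor = conn.execute(
                "SELECT id, name FROM customers WHERE name = ?", (name,)
            )
            # Tag each row with the source it came from.
            results.extend((source_name, cid, cname) for cid, cname in cursor)
        return results
```

A caller issues a single `find_by_name` call and does not need to know how many constituent databases exist or where they are; the sources themselves stay unchanged and independently usable.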

Data Integrity

Data integrity is the maintenance of, and the assurance of, data accuracy and consistency over its entire life-cycle. It is a critical aspect of the design, implementation, and usage of any system that stores, processes, or retrieves data. The term is broad in scope and may have widely different meanings depending on the specific context even under the same general umbrella of computing. It is at times used as a proxy term for data quality, while data validation is a prerequisite for data integrity. Data integrity is the opposite of data corruption. The overall intent of any data integrity technique is the same: ensure data is recorded exactly as intended (such as a database correctly rejecting mutually exclusive possibilities). Moreover, upon later retrieval, ensure the data is the same as when it was originally recorded. In short, data integrity aims to prevent unintentional changes to information. Data integrity is no ...
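Both goals, rejecting invalid data at write time and detecting changes at read time, can be sketched with the Python standard library. The `documents` table, the allowed status values, and the helpers `checksum`, `store`, and `verify` are hypothetical names used only for this illustration; a SHA-256 digest is one possible choice of check, not the only one.

```python
import hashlib
import sqlite3


def checksum(payload: bytes) -> str:
    """Return a SHA-256 digest that is stored alongside the payload."""
    return hashlib.sha256(payload).hexdigest()


conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE documents (
        id INTEGER PRIMARY KEY,
        -- The CHECK constraint makes the database reject any other status.
        status TEXT NOT NULL CHECK (status IN ('draft', 'published')),
        body BLOB NOT NULL,
        digest TEXT NOT NULL
    )
    """
)


def store(doc_id, status, body):
    """Record a document together with the digest of its body."""
    conn.execute(
        "INSERT INTO documents VALUES (?, ?, ?, ?)",
        (doc_id, status, body, checksum(body)),
    )


def verify(doc_id):
    """Return True if the stored body still matches its recorded digest."""
    body, digest = conn.execute(
        "SELECT body, digest FROM documents WHERE id = ?", (doc_id,)
    ).fetchone()
    return checksum(body) == digest
```

An insert with a status outside the allowed set raises `sqlite3.IntegrityError`, and `verify` returns `False` if the body is modified without the digest being updated.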

Decision Support

A decision support system (DSS) is an information system that supports business or organizational decision-making activities. DSSs serve the management, operations and planning levels of an organization (usually mid and higher management) and help people make decisions about problems that may be rapidly changing and not easily specified in advance, i.e., unstructured and semi-structured decision problems. Decision support systems can be fully computerized, human-powered, or a combination of both. While academics have perceived DSS as a tool to support decision-making processes, DSS users see DSS as a tool to facilitate organizational processes. Some authors have extended the definition of DSS to include any system that might support decision making, and some DSS include a decision-making software component; Sprague (1980) [Sprague, R. (1980). "A Framework for the Development of Decision Support Systems". MIS Quarterly, Vol. 4, No. 4, pp. 1–25.] defines a properly termed DSS ...