
Non-negative Matrix Factorization

Non-negative matrix factorization (NMF or NNMF), also called non-negative matrix approximation, is a group of algorithms in multivariate analysis and linear algebra where a matrix ''V'' is factorized into (usually) two matrices ''W'' and ''H'', with the property that all three matrices have no negative elements. This non-negativity makes the resulting matrices easier to inspect. Also, in applications such as processing of audio spectrograms or muscular activity, non-negativity is inherent to the data being considered. Since the problem is not exactly solvable in general, it is commonly approximated numerically. NMF finds applications in such fields as astronomy, computer vision, document clustering, missing data imputation, chemometrics, audio signal processing, recommender systems, and bioinformatics. History: In chemometrics, non-negative matrix factorization has a long history under the name "self modeling curve resolution". In this framework the vectors in the right matrix are continuous ...
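The numerical approximation mentioned above can be sketched in a few lines. The snippet does not say which algorithm is used, so this example assumes the multiplicative update rule of Lee and Seung for the Frobenius-norm objective; the names `nmf`, `frobenius_error`, `v`, `w`, `h` and the small constant `eps` are illustrative choices, not part of the source.

```python
import random


def matmul(a, b):
    """Plain-Python matrix product of two lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    return [list(col) for col in zip(*a)]


def frobenius_error(v, w, h):
    """Frobenius norm of the residual V - WH."""
    wh = matmul(w, h)
    return sum((v[i][j] - wh[i][j]) ** 2
               for i in range(len(v)) for j in range(len(v[0]))) ** 0.5


def nmf(v, rank, iterations=200, seed=0, eps=1e-9):
    """Approximate V (m x n, non-negative) by W (m x rank) times H (rank x n).

    Assumption: multiplicative updates (Lee and Seung). Entries start
    positive and are only ever multiplied by non-negative ratios, so
    W and H stay non-negative throughout.
    """
    rng = random.Random(seed)
    m, n = len(v), len(v[0])
    w = [[rng.random() + 0.1 for _ in range(rank)] for _ in range(m)]
    h = [[rng.random() + 0.1 for _ in range(n)] for _ in range(rank)]
    for _ in range(iterations):
        # H <- H * (W^T V) / (W^T W H), element-wise
        wt = transpose(w)
        num = matmul(wt, v)
        den = matmul(matmul(wt, w), h)
        h = [[h[a][j] * num[a][j] / (den[a][j] + eps) for j in range(n)]
             for a in range(rank)]
        # W <- W * (V H^T) / (W H H^T), element-wise
        ht = transpose(h)
        num = matmul(v, ht)
        den = matmul(w, matmul(h, ht))
        w = [[w[i][a] * num[i][a] / (den[i][a] + eps) for a in range(rank)]
             for i in range(m)]
    return w, h


v = [[1, 2, 3], [2, 4, 6], [3, 1, 2], [4, 3, 5]]
w, h = nmf(v, rank=2)
print(frobenius_error(v, w, h))
```

The result depends on the random starting point, which is one reason such methods are described as approximations rather than exact solvers.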

Algorithm

In mathematics and computer science, an algorithm is a finite sequence of mathematically rigorous instructions, typically used to solve a class of specific problems or to perform a computation. Algorithms are used as specifications for performing calculations and data processing. More advanced algorithms can use conditionals to divert the code execution through various routes (referred to as automated decision-making) and deduce valid inferences (referred to as automated reasoning). In contrast, a heuristic is an approach to solving problems without well-defined correct or optimal results (David A. Grossman, Ophir Frieder, ''Information Retrieval: Algorithms and Heuristics'', 2nd edition, 2004). For example, although social media recommender systems are commonly called "algorithms", they actually rely on heuristics as there is no truly "correct" recommendation. As an e ...

MIT Press

The MIT Press is the university press of the Massachusetts Institute of Technology (MIT), a private research university in Cambridge, Massachusetts. The MIT Press publishes a number of academic journals and has been a pioneer in the Open Access movement in academic publishing. History: MIT Press traces its origins back to 1926 when MIT published a lecture series entitled ''Problems of Atomic Dynamics'' given by the visiting German physicist and later Nobel Prize winner, Max Born. In 1932, MIT's publishing operations were first formally instituted by the creation of an imprint called Technology Press. This imprint was founded by James R. Killian, Jr., at the time editor of MIT's alumni magazine and later to become MIT president. Technology Press published eight titles independently, then in 1937 entered into an arrangement with John Wiley & Sons in which Wiley took over marketing and editorial responsibilities. In 1961, the centennial of MIT's founding charter, the ...

Lasso (statistics)

In statistics and machine learning, lasso (least absolute shrinkage and selection operator; also Lasso, LASSO or L1 regularization) is a regression analysis method that performs both variable selection and regularization in order to enhance the prediction accuracy and interpretability of the resulting statistical model. The lasso method assumes that the coefficients of the linear model are sparse, meaning that few of them are non-zero. It was originally introduced in geophysics, and later by Robert Tibshirani, who coined the term. Lasso was originally formulated for linear regression models. This simple case reveals a substantial amount about the estimator. These include its relationship to ridge regression and best subset selection and the connections between lasso coefficient estimates and so-called soft thresholding. It also reveals that (like standard linear regression) the coefficient estimates do not need to be unique if covariates are collinear ...
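The soft-thresholding connection mentioned above can be illustrated with a short sketch. It assumes the special case of an orthonormal design, where each lasso coefficient is the soft-thresholded ordinary least-squares coefficient; the function name `soft_threshold` and the threshold `lam` are illustrative, and how `lam` relates to the penalty weight depends on how the objective is scaled.

```python
def soft_threshold(z, lam):
    """Shrink z toward zero by lam; values within [-lam, lam] become 0."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


# Hypothetical least-squares coefficients, for illustration only.
ols_coefficients = [3.0, -0.5, 0.2, -2.5]
lasso_coefficients = [soft_threshold(b, 1.0) for b in ols_coefficients]
print(lasso_coefficients)  # small coefficients are set exactly to zero
```

Setting small coefficients exactly to zero is what gives the method its variable-selection behaviour, in contrast to ridge regression, which only shrinks them.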

Neurocomputing (journal)

''Neurocomputing'' is a peer-reviewed scientific journal covering research on artificial intelligence, machine learning, and neural computation. It was established in 1989 and is published by Elsevier. The editor-in-chief is Zidong Wang (Brunel University London). Independent scientometric studies noted that despite being one of the most productive journals in the field, it has kept its reputation intact across the years and plays an important role in leading the research in the area. The journal is abstracted and indexed in Scopus and Science Citation Index Expanded. According to the ''Journal Citation Reports'', its 2023 impact factor is 5.5.

Total Variation Norm

In mathematics, the total variation identifies several slightly different concepts, related to the (local or global) structure of the codomain of a function or a measure. For a real-valued continuous function ''f'', defined on an interval [''a'', ''b''] ⊂ R, its total variation on the interval of definition is a measure of the one-dimensional arclength of the curve with parametric equation ''x'' ↦ ''f''(''x''), for ''x'' ∈ [''a'', ''b'']. Functions whose total variation is finite are called ''functions of bounded variation''. Historical note: The concept of total variation for functions of one real variable was first introduced by Camille Jordan. He used the new concept in order to prove a convergence theorem for Fourier series of discontinuous periodic functions whose variation is bounded. The extension of the concept to functions of more than one variable however is not simple for various reasons. Definitions: Total variation for functions of one real variab ...
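For intuition, the one-variable case can be sketched on sampled values. This assumes the standard definition as the supremum, over partitions of the interval, of the summed absolute increments of ''f''; on a fixed grid of samples the sum below is therefore a lower bound on the true total variation. The function name `total_variation` is illustrative.

```python
def total_variation(samples):
    """Sum of absolute differences between consecutive sampled values."""
    return sum(abs(b - a) for a, b in zip(samples, samples[1:]))


# Values of a hypothetical function sampled left to right on [a, b].
print(total_variation([0, 1, 0, 2]))  # up 1, down 1, up 2
```

A monotone function has total variation equal to the absolute difference of its endpoint values, while an oscillating one accumulates every up and down movement.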

Frobenius Norm

In the field of mathematics, norms are defined for elements within a vector space. Specifically, when the vector space comprises matrices, such norms are referred to as matrix norms. Matrix norms differ from vector norms in that they must also interact with matrix multiplication. Preliminaries: Given a field K of either real or complex numbers (or any complete subset thereof), let K^{m \times n} be the K-vector space of matrices with m rows and n columns and entries in the field K. A matrix norm is a norm on K^{m \times n}. Norms are often expressed with double vertical bars (like so: \|A\|). Thus, the matrix norm is a function \|\cdot\| : K^{m \times n} \to \R^{0+} that must satisfy the following properties. For all scalars \alpha \in K and matrices A, B \in K^{m \times n}:
* \|A\| \ge 0 (''positive-valued'')
* \|A\| = 0 \iff A = 0_{m,n} (''definite'')
* \|\alpha A\| = |\alpha| \|A\| (''absolutely homogeneous'')
* \|A + B\| \le \|A\| + \| ...
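As a concrete instance of a matrix norm, the sketch below computes the Frobenius norm named in the heading, defined as the square root of the sum of squared entries, and spot-checks the norm properties on two small real matrices. The helper names and the example matrices are illustrative, and a numerical check on one example is of course not a proof.

```python
import math


def frobenius_norm(a):
    """Square root of the sum of squared entries (real matrices only)."""
    return math.sqrt(sum(x * x for row in a for x in row))


def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def scale(alpha, a):
    return [[alpha * x for x in row] for row in a]


a = [[3.0, 4.0], [0.0, 0.0]]
b = [[1.0, -2.0], [2.0, 0.0]]

print(frobenius_norm(a))                       # positive-valued
print(frobenius_norm([[0.0, 0.0]]))            # zero only for the zero matrix
print(frobenius_norm(scale(-2.0, a)))          # equals |-2| times the norm of a
print(frobenius_norm(add(a, b)) <= frobenius_norm(a) + frobenius_norm(b))
```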

Regularization (mathematics)

In mathematics, statistics, finance, and computer science, particularly in machine learning and inverse problems, regularization is a process that converts the answer to a problem to a simpler one. It is often used in solving ill-posed problems or to prevent overfitting. Although regularization procedures can be divided in many ways, the following delineation is particularly helpful:
* Explicit regularization is regularization whenever one explicitly adds a term to the optimization problem. These terms could be priors, penalties, or constraints. Explicit regularization is commonly employed with ill-posed optimization problems. The regularization term, or penalty, imposes a cost on the optimization function to make the optimal solution unique.
* Implicit regularization is all other forms of regularization. This includes, for example, early stopping, using a robust loss function, and discarding outliers. Implicit regularizat ...
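A minimal sketch of explicit regularization, assuming a one-parameter least-squares fit with a squared (ridge-style) penalty: minimizing sum((y - w*x)^2) + lam * w^2 gives w = sum(x*y) / (sum(x^2) + lam). The function name `regularized_slope` and the data are illustrative. With degenerate data the unpenalized problem has no unique answer, and the penalty term restores uniqueness.

```python
def regularized_slope(xs, ys, lam):
    """Minimizer of sum((y - w*x)**2) + lam * w**2 over the scalar w.

    Returns None when the minimizer is not unique (all x are zero and
    no penalty is applied).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    if sxx + lam == 0:
        return None
    return sxy / (sxx + lam)


print(regularized_slope([1, 2], [2, 4], 0.0))   # plain least squares
print(regularized_slope([1, 2], [2, 4], 5.0))   # penalty shrinks the slope
print(regularized_slope([0, 0], [1, 2], 0.0))   # ill-posed: any w fits equally
print(regularized_slope([0, 0], [1, 2], 1.0))   # penalty picks a unique w
```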

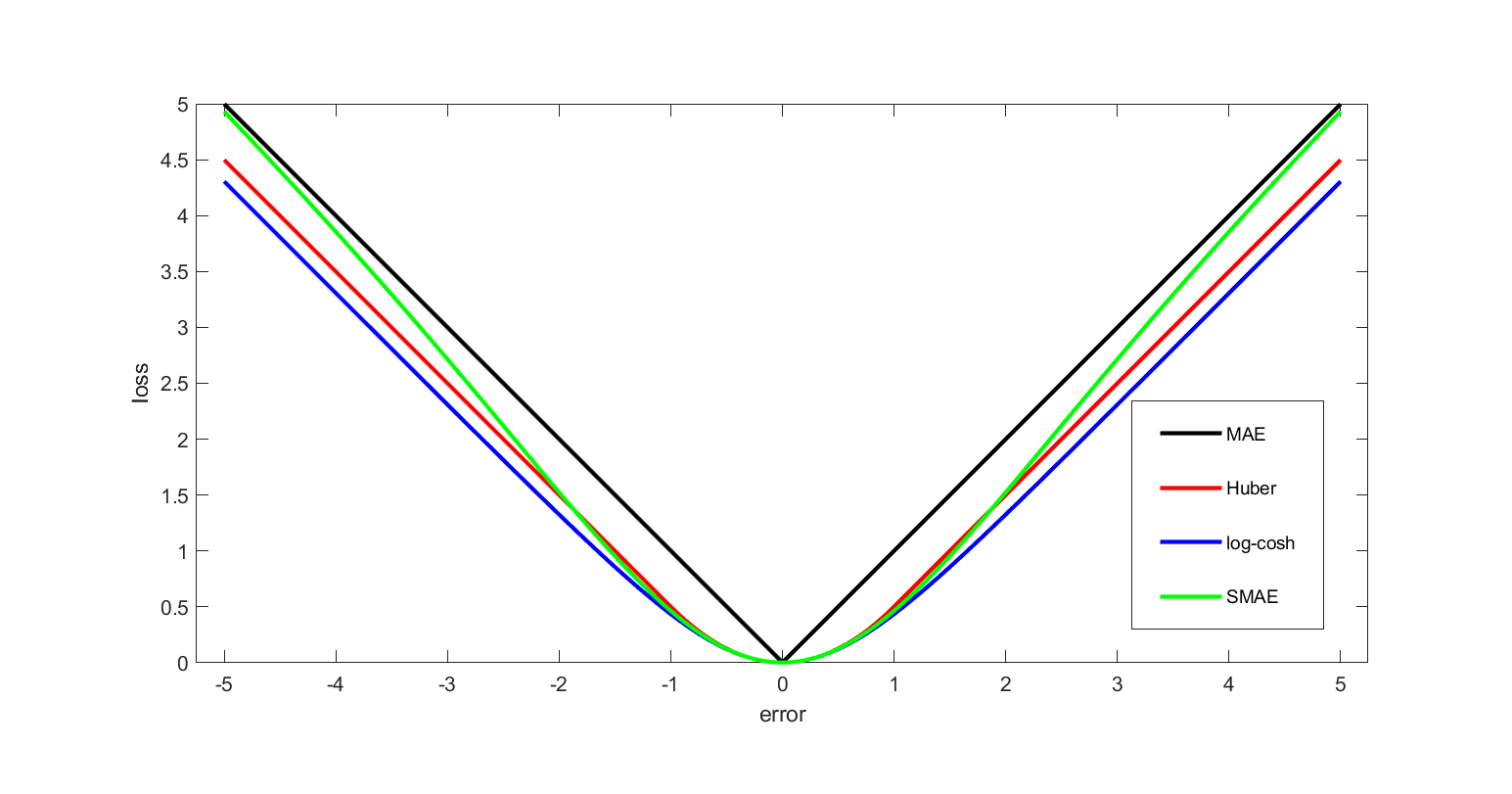

Loss Function

In mathematical optimization and decision theory, a loss function or cost function (sometimes also called an error function) is a function that maps an event or values of one or more variables onto a real number intuitively representing some "cost" associated with the event. An optimization problem seeks to minimize a loss function. An objective function is either a loss function or its opposite (in specific domains, variously called a reward function, a profit function, a utility function, a fitness function, etc.), in which case it is to be maximized. The loss function could include terms from several levels of the hierarchy. In statistics, typically a loss function is used for parameter estimation, and the event in question is some function of the difference between estimated and true values for an instance of data. The concept, as old as Laplace, was reintroduced in statistics by Abraham Wald in the middle of the 20th century. In the context of economi ...
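Two common loss functions of the difference between estimated and true values can be sketched as follows. Squared error and absolute error are chosen here purely as familiar examples; the snippet above does not single out any particular loss, and the function names are illustrative.

```python
def mean_squared_error(estimates, truths):
    """Average of squared differences; penalizes large errors heavily."""
    return sum((e - t) ** 2 for e, t in zip(estimates, truths)) / len(truths)


def mean_absolute_error(estimates, truths):
    """Average of absolute differences; less sensitive to outliers."""
    return sum(abs(e - t) for e, t in zip(estimates, truths)) / len(truths)


estimates = [1.0, 2.0]
truths = [1.0, 4.0]
print(mean_squared_error(estimates, truths))
print(mean_absolute_error(estimates, truths))
```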

Nonnegative Rank (Linear Algebra)

In linear algebra, the nonnegative rank of a nonnegative matrix is a concept similar to the usual linear rank of a real matrix, but adding the requirement that certain coefficients and entries of vectors/matrices have to be nonnegative. For example, the linear rank of a matrix is the smallest number of vectors, such that every column of the matrix can be written as a linear combination of those vectors. For the nonnegative rank, it is required that the vectors must have nonnegative entries, and also that the coefficients in the linear combinations are nonnegative. Formal definition: There are several equivalent definitions, all modifying the definition of the linear rank slightly. Apart from the definition given above, there is the following: The nonnegative rank of a nonnegative ''m×n''-matrix ''A'' is equal to the smallest number ''q'' such that there exists a nonnegative ''m×q''-matrix ''B'' and a nonnegative ''q×n''-matrix ''C'' such that ''A = BC'' (the usual matrix product ...
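The factorization-based definition suggests a simple check: any nonnegative pair ''B'', ''C'' with ''A = BC'' certifies that the nonnegative rank of ''A'' is at most the inner dimension ''q''. The sketch below verifies such a certificate; it does not compute the nonnegative rank itself, which requires finding the smallest such ''q''. The function name and the example matrices are illustrative.

```python
def nonnegative_rank_upper_bound(a, b, c, tol=1e-12):
    """Return q if B (m x q) and C (q x n) are nonnegative and BC equals A.

    Returns None if the pair is not a valid nonnegative factorization.
    """
    if any(x < 0 for row in b for x in row):
        return None
    if any(x < 0 for row in c for x in row):
        return None
    q = len(c)
    for i, row in enumerate(a):
        for j, target in enumerate(row):
            product = sum(b[i][k] * c[k][j] for k in range(q))
            if abs(product - target) > tol:
                return None
    return q


a = [[1, 2, 3], [2, 4, 6], [3, 1, 2], [4, 3, 5]]
b = [[1, 0], [2, 0], [0, 1], [1, 1]]
c = [[1, 2, 3], [3, 1, 2]]
print(nonnegative_rank_upper_bound(a, b, c))
```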

Convex Combination

In convex geometry and vector algebra, a convex combination is a linear combination of points (which can be vectors, scalars, or more generally points in an affine space) where all coefficients are non-negative and sum to 1. In other words, the operation is equivalent to a standard weighted average, but whose weights are expressed as a percent of the total weight, instead of as a fraction of the ''count'' of the weights as in a standard weighted average. Formal definition: More formally, given a finite number of points x_1, x_2, \dots, x_n in a real vector space, a convex combination of these points is a point of the form : \alpha_1x_1+\alpha_2x_2+\cdots+\alpha_nx_n where the real numbers \alpha_i satisfy \alpha_i\ge 0 and \alpha_1+\alpha_2+\cdots+\alpha_n=1. As a particular example, every convex combination of two points lies on the line segment between the points. A set is convex if it ...
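The formal definition translates directly into code. This sketch represents points as tuples of coordinates and rejects weights that are negative or do not sum to 1; the function name `convex_combination` and the tolerance are illustrative choices.

```python
def convex_combination(points, weights, tol=1e-12):
    """Weighted sum of points with non-negative weights that sum to 1."""
    if len(points) != len(weights):
        raise ValueError("need exactly one weight per point")
    if any(w < 0 for w in weights):
        raise ValueError("weights must be non-negative")
    if abs(sum(weights) - 1.0) > tol:
        raise ValueError("weights must sum to 1")
    dim = len(points[0])
    return tuple(sum(w * p[i] for w, p in zip(weights, points))
                 for i in range(dim))


# The midpoint of two points lies on the segment between them.
print(convex_combination([(0, 0), (2, 4)], [0.5, 0.5]))
```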

Probabilistic Latent Semantic Analysis

Probabilistic latent semantic analysis (PLSA), also known as probabilistic latent semantic indexing (PLSI, especially in information retrieval circles) is a statistical technique for the analysis of two-mode and co-occurrence data. In effect, one can derive a low-dimensional representation of the observed variables in terms of their affinity to certain hidden variables, just as in latent semantic analysis, from which PLSA evolved. Compared to standard latent semantic analysis which stems from linear algebra and downsizes the occurrence tables (usually via a singular value decomposition), probabilistic latent semantic analysis is based on a mixture decomposition derived from a latent class model. Model: Considering observations in the form of co-occurrences (w,d) of words and documents, PLSA models the probability of each co-occurrence as a mixture of conditionally independent multinomial distributions: : P(w,d) = \sum_c P(c) P(d|c) P(w|c) = P(d) \sum_c P(c|d) P(w|c) with ...
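The two forms of the mixture can be checked numerically on a toy model. The probability tables below are made-up values for two latent classes, two documents and two words; the second form follows from the first by Bayes' rule, P(c|d) = P(c) P(d|c) / P(d). All variable and function names are illustrative.

```python
# Hypothetical model parameters, indexed by latent class c first.
p_c = [0.4, 0.6]                              # P(c)
p_d_given_c = [[0.5, 0.5], [0.2, 0.8]]        # P(d|c)
p_w_given_c = [[0.7, 0.3], [0.1, 0.9]]        # P(w|c)
classes = range(len(p_c))


def joint_symmetric(w, d):
    """P(w,d) = sum_c P(c) P(d|c) P(w|c)."""
    return sum(p_c[c] * p_d_given_c[c][d] * p_w_given_c[c][w] for c in classes)


def joint_asymmetric(w, d):
    """P(w,d) = P(d) sum_c P(c|d) P(w|c), with P(c|d) from Bayes' rule."""
    p_d = sum(p_c[c] * p_d_given_c[c][d] for c in classes)
    return p_d * sum((p_c[c] * p_d_given_c[c][d] / p_d) * p_w_given_c[c][w]
                     for c in classes)


for w in range(2):
    for d in range(2):
        print(w, d, joint_symmetric(w, d), joint_asymmetric(w, d))
```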