|

Noisy Channel Model

The noisy channel model is a framework used in spell checkers, question answering, speech recognition, and machine translation. In this model, the goal is to find the intended word given a word where the letters have been scrambled in some manner. In spell-checking See Chapter B of. Given an alphabet \Sigma, let \Sigma^* be the set of all finite strings over \Sigma. Let the dictionary D of valid words be some subset of \Sigma^*, i.e., D\subseteq\Sigma^*. The noisy channel is the matrix :\Gamma_ = \Pr(s, w), where w\in D is the intended word and s\in\Sigma^* is the scrambled word that was actually received. The goal of the noisy channel model is to find the intended word given the scrambled word that was received. The decision function \sigma : \Sigma^* \to D is a function that, given a scrambled word, returns the intended word. Methods of constructing a decision function include the maximum likelihood rule, the maximum a posteriori rule, and the minimum distance rule. In ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

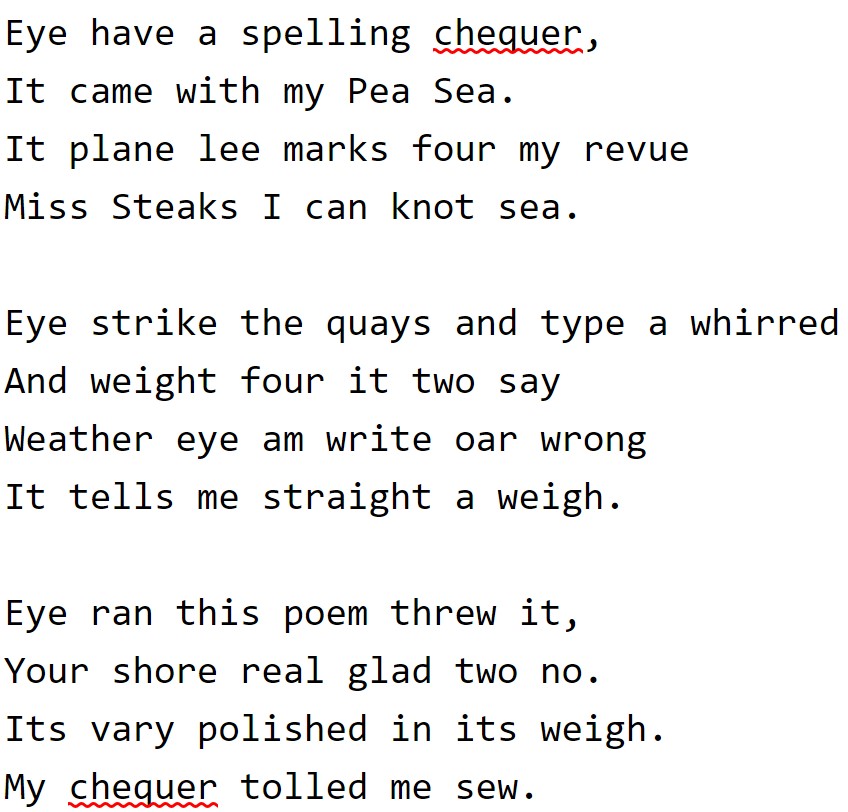

Spell Checker

In software, a spell checker (or spelling checker or spell check) is a software feature that checks for misspellings in a text. Spell-checking features are often embedded in software or services, such as a word processor, email client, electronic dictionary, or search engine. Design A basic spell checker carries out the following processes: * It scans the text and extracts the words contained in it. * It then compares each word with a known list of correctly spelled words (i.e. a dictionary). This might contain just a list of words, or it might also contain additional information, such as hyphenation points or lexical and grammatical attributes. * An additional step is a language-dependent algorithm for handling morphology. Even for a lightly inflected language like English, the spell checker will need to consider different forms of the same word, such as plurals, verbal forms, contractions, and possessives. For many other languages, such as those featuring agglutination and ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Question Answering

Question answering (QA) is a computer science discipline within the fields of information retrieval and natural language processing (NLP) that is concerned with building systems that automatically answer questions that are posed by humans in a natural language. Overview A question-answering implementation, usually a computer program, may construct its answers by querying a structured database of knowledge or information, usually a knowledge base. More commonly, question-answering systems can pull answers from an unstructured collection of natural language documents. Some examples of natural language document collections used for question answering systems include: * a collection of reference texts * internal organization documents and web pages * compiled newswire reports * a set of Wikipedia pages * a subset of World Wide Web pages Types of question answering Question-answering research attempts to develop ways of answering a wide range of question types, including fact, li ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Speech Recognition

Speech recognition is an interdisciplinary subfield of computer science and computational linguistics that develops methodologies and technologies that enable the recognition and translation of spoken language into text by computers. It is also known as automatic speech recognition (ASR), computer speech recognition or speech-to-text (STT). It incorporates knowledge and research in the computer science, linguistics and computer engineering fields. The reverse process is speech synthesis. Some speech recognition systems require "training" (also called "enrollment") where an individual speaker reads text or isolated vocabulary into the system. The system analyzes the person's specific voice and uses it to fine-tune the recognition of that person's speech, resulting in increased accuracy. Systems that do not use training are called "speaker-independent" systems. Systems that use training are called "speaker dependent". Speech recognition applications include voice user interfaces ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Machine Translation

Machine translation is use of computational techniques to translate text or speech from one language to another, including the contextual, idiomatic and pragmatic nuances of both languages. Early approaches were mostly rule-based or statistical. These methods have since been superseded by neural machine translation and large language models. History Origins The origins of machine translation can be traced back to the work of Al-Kindi, a ninth-century Arabic cryptographer who developed techniques for systemic language translation, including cryptanalysis, frequency analysis, and probability and statistics, which are used in modern machine translation. The idea of machine translation later appeared in the 17th century. In 1629, René Descartes proposed a universal language, with equivalent ideas in different tongues sharing one symbol. The idea of using digital computers for translation of natural languages was proposed as early as 1947 by England's A. D. Booth and Warr ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Maximum Likelihood

In statistics, maximum likelihood estimation (MLE) is a method of estimating the parameters of an assumed probability distribution, given some observed data. This is achieved by maximizing a likelihood function so that, under the assumed statistical model, the observed data is most probable. The point in the parameter space that maximizes the likelihood function is called the maximum likelihood estimate. The logic of maximum likelihood is both intuitive and flexible, and as such the method has become a dominant means of statistical inference. If the likelihood function is differentiable, the derivative test for finding maxima can be applied. In some cases, the first-order conditions of the likelihood function can be solved analytically; for instance, the ordinary least squares estimator for a linear regression model maximizes the likelihood when the random errors are assumed to have normal distributions with the same variance. From the perspective of Bayesian inference, ML ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Maximum A Posteriori

An estimation procedure that is often claimed to be part of Bayesian statistics is the maximum a posteriori (MAP) estimate of an unknown quantity, that equals the mode of the posterior density with respect to some reference measure, typically the Lebesgue measure. The MAP can be used to obtain a point estimate of an unobserved quantity on the basis of empirical data. It is closely related to the method of maximum likelihood (ML) estimation, but employs an augmented optimization objective which incorporates a prior density over the quantity one wants to estimate. MAP estimation is therefore a regularization of maximum likelihood estimation, so is not a well-defined statistic of the Bayesian posterior distribution. Description Assume that we want to estimate an unobserved population parameter \theta on the basis of observations x. Let f be the sampling distribution of x, so that f(x\mid\theta) is the probability of x when the underlying population parameter is \theta. T ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Minimum Distance Estimation

Minimum-distance estimation (MDE) is a conceptual method for fitting a statistical model to data, usually the empirical distribution. Often-used estimators such as ordinary least squares can be thought of as special cases of minimum-distance estimation. While consistent and asymptotically normal, minimum-distance estimators are generally not statistically efficient when compared to maximum likelihood estimators, because they omit the Jacobian usually present in the likelihood function. This, however, substantially reduces the computational complexity of the optimization problem. Definition Let \displaystyle X_1,\ldots,X_n be an independent and identically distributed (iid) random sample from a population with distribution F(x;\theta)\colon \theta\in\Theta and \Theta\subseteq\mathbb^k (k\geq 1). Let \displaystyle F_n(x) be the empirical distribution function based on the sample. Let \hat be an estimator for \displaystyle \theta. Then F(x;\hat) is an estimator for \displaysty ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Schönfinkeling

In mathematics and computer science, currying is the technique of translating a function that takes multiple arguments into a sequence of families of functions, each taking a single argument. In the prototypical example, one begins with a function f:(X\times Y)\to Z that takes two arguments, one from X and one from Y, and produces objects in Z. The curried form of this function treats the first argument as a parameter, so as to create a family of functions f_x :Y\to Z. The family is arranged so that for each object x in X, there is exactly one function f_x. In this example, \mbox itself becomes a function that takes f as an argument, and returns a function that maps each x to f_x. The proper notation for expressing this is verbose. The function f belongs to the set of functions (X\times Y)\to Z. Meanwhile, f_x belongs to the set of functions Y\to Z. Thus, something that maps x to f_x will be of the type X\to \to Z With this notation, \mbox is a function that takes objects from ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |