
Information Theory

Information theory is the mathematical study of the quantification, storage, and communication of information. The field was established and formalized by Claude Shannon in the 1940s, though early contributions were made in the 1920s through the works of Harry Nyquist and Ralph Hartley. It is at the intersection of electronic engineering, mathematics, statistics, computer science, neurobiology, physics, and electrical engineering. A key measure in information theory is entropy. Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a fair coin flip (which has two equally likely outcomes) provides less information (lower entropy, less uncertainty) than identifying the outcome from a roll of a die (which has six equally likely outcomes). Some other important measu ...
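The coin-versus-die comparison can be checked numerically. The following is a minimal sketch, assuming entropy is measured in bits (base-2 logarithm); the function name `shannon_entropy` is chosen here for illustration and does not come from the excerpt.

```python
import math

def shannon_entropy(probabilities):
    """Return the Shannon entropy, in bits, of a discrete distribution."""
    # Outcomes with zero probability contribute nothing to the sum.
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

coin = [1 / 2] * 2  # fair coin: two equally likely outcomes
die = [1 / 6] * 6   # fair die: six equally likely outcomes

print(f"coin: {shannon_entropy(coin):.3f} bits")  # coin: 1.000 bits
print(f"die:  {shannon_entropy(die):.3f} bits")   # die:  2.585 bits
```

The die has the higher entropy because its outcome is spread over more equally likely values.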

Quantification (science)

In mathematics and empirical science, quantification (or quantitation) is the act of counting and measuring that maps human sense observations and experiences into quantities. Quantification in this sense is fundamental to the scientific method. Some measure of the undisputed general importance of quantification in the natural sciences can be gleaned from the following comments:

* "these are mere facts, but they are quantitative facts and the basis of science."
* It seems to be held as universally true that "the foundation of quantification is measurement."
* There is little doubt that "quantification provided a basis for the objectivity of science."
* In ancient times, "musicians and artists ... rejected quantification, but merchants, by definition, quantified their affairs, in order to survive, made them visible on parchment and paper."
* Any reasonable "comparison between Aristotle and Galileo shows clearly that there can be no unique lawfulness discov ...

Fair Coin

In probability theory and statistics, a sequence of independent Bernoulli trials with probability 1/2 of success on each trial is metaphorically called a fair coin. One for which the probability is not 1/2 is called a biased or unfair coin. In theoretical studies, the assumption that a coin is fair is often made by referring to an ideal coin. John Edmund Kerrich performed experiments in coin flipping and found that a coin made from a wooden disk about the size of a crown and coated on one side with lead landed heads (wooden side up) 679 times out of 1000. In this experiment the coin was tossed by balancing it on the forefinger and flipping it with the thumb so that it spun through the air for about a foot before landing on a flat cloth spread over a table. Edwin Thompson Jaynes claimed that when a coin is caught in the hand, instead of being allowed to bounce, the physical bias in the coin is insignificant compared to the met ...

Error Detection And Correction

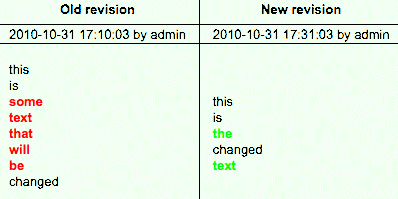

In information theory and coding theory, with applications in computer science and telecommunications, error detection and correction (EDAC) or error control are techniques that enable reliable delivery of digital data over unreliable communication channels. Many communication channels are subject to channel noise, and thus errors may be introduced during transmission from the source to a receiver. Error detection techniques allow detecting such errors, while error correction enables reconstruction of the original data in many cases.

*Error detection* is the detection of errors caused by noise or other impairments during transmission from the transmitter to the receiver. *Error correction* is the detection of errors and reconstruction of the original, error-free data.

In classical antiquity, copyists of the Hebrew Bible were paid for their work according to the number of stichs (lines of verse). As the prose books of the Bible were hardly ever w ...
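As an illustration of error detection, here is a minimal sketch of a single even-parity bit, one of the simplest detection schemes. It is not a technique named in the excerpt, and the helper names `add_parity` and `has_error` are hypothetical.

```python
def add_parity(bits):
    """Append an even-parity bit so the total number of 1s is even."""
    return bits + [sum(bits) % 2]

def has_error(codeword):
    """Return True if the codeword fails the even-parity check."""
    return sum(codeword) % 2 != 0

sent = add_parity([1, 0, 1, 1])
received = sent.copy()
received[2] ^= 1  # simulate channel noise flipping one bit

print(has_error(sent))      # False
print(has_error(received))  # True
```

A single parity bit only detects an odd number of flipped bits and cannot say which bit was corrupted, so it provides detection without correction.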

ZIP (file Format)

ZIP is an archive file format that supports lossless data compression. A ZIP file may contain one or more files or directories that may have been compressed. The ZIP file format permits a number of compression algorithms, though DEFLATE is the most common. This format was originally created in 1989 and was first implemented in PKWARE, Inc.'s PKZIP utility, as a replacement for the previous ARC compression format by Thom Henderson. The ZIP format was then quickly supported by many software utilities other than PKZIP. Microsoft has included built-in ZIP support (under the name "compressed folders") in versions of Microsoft Windows since 1998 via the "Plus! 98" addon for Windows 98. Native support was added as of the year 2000 in Windows ME. Apple has included built-in ZIP support in Mac OS X 10.3 (via BOMArchiveHelper, now Archive Utility) and later. Most free operating s ...
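A ZIP archive using DEFLATE can be produced with Python's standard `zipfile` module. This is a minimal sketch that writes the archive to an in-memory buffer; the member name `notes/hello.txt` and its contents are made up for the example.

```python
import io
import zipfile

buffer = io.BytesIO()

# Write one member into the archive, compressed with DEFLATE.
with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
    archive.writestr("notes/hello.txt", "hello " * 200)

# Reopen the same buffer for reading and inspect the stored member.
with zipfile.ZipFile(buffer) as archive:
    info = archive.getinfo("notes/hello.txt")
    text = archive.read("notes/hello.txt").decode("utf-8")

print(info.file_size, info.compress_size)
```

The `compress_size` field reports how many bytes the member occupies inside the archive, which for this repetitive input is far smaller than `file_size`.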

Data Compression

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding: encoding is done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, for error detection and correction, or line coding, the means for mapping data onto a sig ...
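The lossless case can be demonstrated with a round trip through Python's standard `zlib` module. This is a minimal sketch; the input is deliberately redundant so that the size reduction is easy to see.

```python
import zlib

original = b"abcabcabc" * 100          # highly redundant input (900 bytes)
compressed = zlib.compress(original)   # encoder
restored = zlib.decompress(compressed) # decoder

print(len(original), len(compressed))
print(restored == original)  # True: no information was lost
```

Input with little statistical redundancy, such as random bytes, would not shrink this way.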

Information-theoretic Security

A cryptosystem is considered to have information-theoretic security (also called unconditional security) if the system is secure against adversaries with unlimited computing resources and time. In contrast, a system which depends on the computational cost of cryptanalysis to be secure (and thus can be broken by an attack with unlimited computation) is called computationally secure or conditionally secure.

An encryption protocol with information-theoretic security is impossible to break even with infinite computational power. Protocols proven to be information-theoretically secure are resistant to future developments in computing. The concept of information-theoretically secure communication was introduced in 1949 by American mathematician Claude Shannon, one of the founders of classical information theory, who used it to prove the one-time pad system was secure. Information-theoretically secure cryptosystems have been used for the most sensitive governmental communic ...
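The one-time pad mentioned above can be sketched in a few lines. The sketch assumes the standard conditions for its security: the key is truly random, as long as the message, kept secret, and never reused. The helper name `xor_bytes` and the sample message are illustrative only.

```python
import secrets

def xor_bytes(data, key):
    """XOR two equal-length byte strings."""
    if len(data) != len(key):
        raise ValueError("key must be exactly as long as the data")
    return bytes(d ^ k for d, k in zip(data, key))

message = b"ATTACK AT DAWN"
key = secrets.token_bytes(len(message))  # fresh random key, used once

ciphertext = xor_bytes(message, key)     # encryption
recovered = xor_bytes(ciphertext, key)   # decryption is the same operation

print(recovered == message)  # True
```

Because every plaintext of the same length is equally consistent with a given ciphertext, additional computing power gives an attacker no advantage.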

Algorithmic Complexity Theory

In algorithmic information theory (a subfield of computer science and mathematics), the Kolmogorov complexity of an object, such as a piece of text, is the length of a shortest computer program (in a predetermined programming language) that produces the object as output. It is a measure of the computational resources needed to specify the object, and is also known as algorithmic complexity, Solomonoff–Kolmogorov–Chaitin complexity, program-size complexity, descriptive complexity, or algorithmic entropy. It is named after Andrey Kolmogorov, who first published on the subject in 1963, and is a generalization of classical information theory. The notion of Kolmogorov complexity can be used to state and prove impossibility results akin to Cantor's diagonal argument, Gödel's incompleteness theorem, and Turing's halting problem. In particular, no program *P* computing a lower bound for each text's Kolmogorov complexity can return a value essentially larger than *P*'s own len ...
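Kolmogorov complexity itself is not computable, but the length of a compressed encoding gives a rough upper-bound proxy, up to the constant size of the decompressor. The sketch below uses `zlib` for that purpose as a heuristic; it is not a method described in the excerpt, and the function name `compressed_length` is hypothetical.

```python
import os
import zlib

def compressed_length(data):
    """Length in bytes of the zlib encoding: a crude upper-bound proxy."""
    return len(zlib.compress(data, 9))

regular = b"ab" * 500          # 1000 bytes with an obvious short description
random_like = os.urandom(1000) # 1000 bytes with no pattern zlib can exploit

print(compressed_length(regular), compressed_length(random_like))
```

The regular string compresses to a small fraction of its size, while the random bytes do not shrink, matching the intuition that the former has a short description and the latter does not.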

Source Coding

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding: encoding is done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, for error detection and correction, or line coding, the means for mapping data onto a sign ...

Relative Entropy

Relative may refer to:

General use:

* Kinship and family, the principle binding the most basic social units of society. If two people are connected by circumstances of birth, they are said to be *relatives*.

Philosophy:

* Relativism, the concept that points of view have no absolute truth or validity, having only relative, subjective value according to differences in perception and consideration, or relatively, as in the relative value of an object to a person
* Relative value (philosophy)

Economics:

* Relative value (economics)

Popular culture, film and television:

* *Relatively Speaking*, 1965 British play
* *Relatively Speaking*, late 1980s television game show
* *Everything's Relative*, 2000 Japanese anime *Yu-Gi-Oh! Duel Monsters* episode
* *Relative Values*, 2000 film based on the play of the same name
* *It's All Relative*, 2003-4 comedy television series
* *Intelligence is Relative*, tag lin ...

Error Exponent

In information theory, the error exponent of a channel code or source code over the block length of the code is the rate at which the error probability decays exponentially with the block length of the code. Formally, it is defined as the limiting ratio of the negative logarithm of the error probability to the block length of the code for large block lengths. For example, if the probability of error $P_{\mathrm{error}}$ of a decoder drops as $e^{-n\alpha}$, where $n$ is the block length, the error exponent is $\alpha$. In this example, $\frac{-\ln P_{\mathrm{error}}}{n}$ approaches $\alpha$ for large $n$. Many of the information-theoretic theorems are of asymptotic nature; for example, the channel coding theorem states that for any rate less than the channel capacity, the probability of error of the channel code can be made to go to zero as the block length goes to infinity. In practical situations, there are limitations to the delay of the communication and the block length must be finite. Therefore, it is important to study how the prob ...
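The limiting ratio in the definition can be observed numerically. The sketch below is an illustration chosen here, not an example from the excerpt: it assumes a repetition code of odd block length n sent over a binary symmetric channel with bit-flip probability p and decoded by majority vote. For that setup the negative log of the error probability, divided by n, should approach the Chernoff exponent -ln(2·sqrt(p(1-p))). The function names are hypothetical.

```python
import math

def majority_error_probability(n, p):
    """Exact probability that more than half of n bits are flipped (n odd)."""
    return sum(
        math.comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )

def empirical_exponent(n, p):
    """Negative natural log of the error probability, per unit block length."""
    return -math.log(majority_error_probability(n, p)) / n

p = 0.1
limit = -math.log(2 * math.sqrt(p * (1 - p)))  # assumed asymptotic exponent

for n in (11, 51, 201):
    print(n, round(empirical_exponent(n, p), 4))
print("limit", round(limit, 4))
```

The printed ratios decrease toward the limit as n grows, which is the finite-block-length behavior the definition abstracts away.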

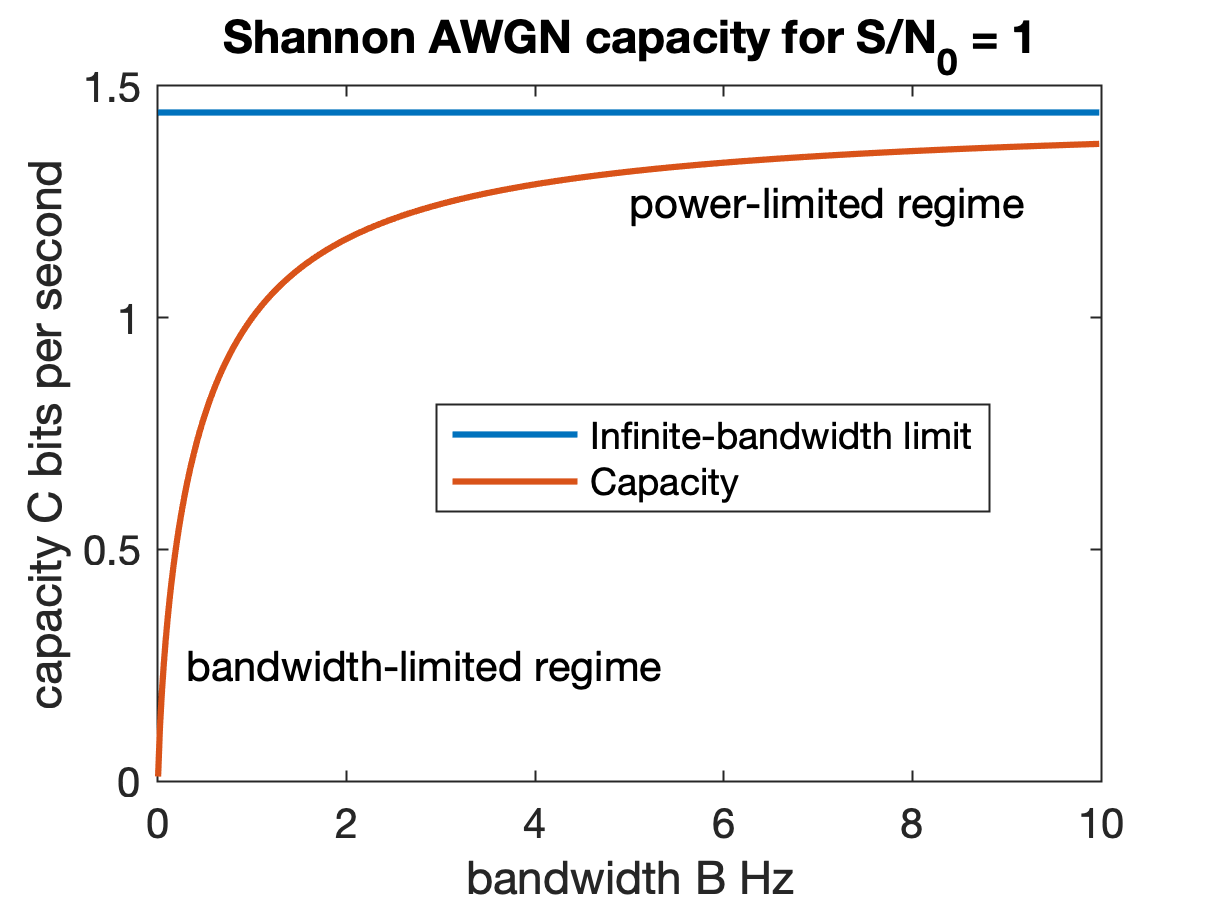

Channel Capacity

Channel capacity, in electrical engineering, computer science, and information theory, is the theoretical maximum rate at which information can be reliably transmitted over a communication channel. Following the terms of the noisy-channel coding theorem, the channel capacity of a given channel is the highest information rate (in units of information per unit time) that can be achieved with arbitrarily small error probability. Information theory, developed by Claude E. Shannon in 1948, defines the notion of channel capacity and provides a mathematical model by which it may be computed. The key result states that the capacity of the channel, as defined above, is given by the maximum of the mutual information between the input and output of the channel, where the maximization is with respect to the input distribution. The notion of channel capacity has been central to the development of modern wireline and wireless communication system ...
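The maximization over input distributions can be sketched for a simple case. The example assumes a binary symmetric channel with crossover probability p (a choice made here, not in the excerpt), measures capacity in bits per channel use rather than per unit time, and replaces exact optimization with a grid search. The function names are hypothetical.

```python
import math

def binary_entropy(x):
    """Entropy in bits of a binary variable with P(1) = x."""
    if x in (0.0, 1.0):
        return 0.0
    return -x * math.log2(x) - (1 - x) * math.log2(1 - x)

def mutual_information(q, p):
    """I(X;Y) in bits for a binary symmetric channel, with P(X=1) = q."""
    y1 = q * (1 - p) + (1 - q) * p  # P(Y=1) at the channel output
    return binary_entropy(y1) - binary_entropy(p)  # H(Y) - H(Y|X)

p = 0.11
grid = [i / 1000 for i in range(1001)]  # candidate input distributions
best_q = max(grid, key=lambda q: mutual_information(q, p))
capacity = mutual_information(best_q, p)

print(best_q, round(capacity, 4))
```

The search settles on the uniform input distribution, and the resulting value agrees with the known closed form 1 - H(p) for this channel.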