Error Exponent

In information theory, the error exponent of a channel code or source code is the rate at which the error probability decays exponentially with the block length of the code. Formally, it is defined as the limiting ratio of the negative logarithm of the error probability to the block length of the code for large block lengths. For example, if the probability of error $P_{\mathrm{error}}$ of a decoder drops as $e^{-n\alpha}$, where $n$ is the block length, the error exponent is $\alpha$. In this example, $\frac{-\ln P_{\mathrm{error}}}{n}$ approaches $\alpha$ for large $n$. Many information-theoretic theorems are asymptotic in nature; for example, the channel coding theorem states that for any rate less than the channel capacity, the probability of error of the channel code can be made to go to zero as the block length goes to infinity. In practical situations, there are limits on the delay of the communication, and the block length must be finite. Therefore, it is important to study how the prob ...
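As a rough numerical illustration of this definition, the sketch below computes the negative log error probability divided by the block length for an n-fold repetition code with majority decoding on a binary symmetric channel. The choice of code, the crossover probability p = 0.1, and all function names are assumptions made for illustration. The closed-form limit used for comparison is the Chernoff exponent for this specific setup, not a general formula for arbitrary codes.

```python
import math


def majority_error_probability(n: int, p: float) -> float:
    """Exact error probability of an n-fold repetition code (n odd) on a
    binary symmetric channel with crossover probability p, decoded by majority vote."""
    # Decoding fails when more than half of the n transmitted copies are flipped.
    return sum(
        math.comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range((n + 1) // 2, n + 1)
    )


def empirical_exponent(n: int, p: float) -> float:
    """Negative log error probability divided by the block length n (in nats)."""
    return -math.log(majority_error_probability(n, p)) / n


def limiting_exponent(p: float) -> float:
    """Large-n limit for this setup: the KL divergence D(1/2 || p) in nats."""
    return -math.log(2 * math.sqrt(p * (1 - p)))


if __name__ == "__main__":
    p = 0.1  # assumed crossover probability
    for n in (11, 51, 201):
        print(n, round(empirical_exponent(n, p), 4))
    print("limit", round(limiting_exponent(p), 4))
```

The finite-n values sit above the limit and move toward it as n grows. Note that the rate of a repetition code shrinks as 1/n, so this shows only how the ratio converges for one code family, not the best exponent achievable at a fixed positive rate.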

Information Theory

Information theory is the mathematical study of the quantification, storage, and communication of information. The field was established and formalized by Claude Shannon in the 1940s, though early contributions were made in the 1920s through the works of Harry Nyquist and Ralph Hartley. It is at the intersection of electronic engineering, mathematics, statistics, computer science, neurobiology, physics, and electrical engineering. A key measure in information theory is entropy. Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a fair coin flip (which has two equally likely outcomes) provides less information (lower entropy, less uncertainty) than identifying the outcome from a roll of a die (which has six equally likely outcomes). Some other important measu ...
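The coin-versus-die comparison can be checked directly from the definition of Shannon entropy. The following is a minimal sketch; the function name `entropy_bits` and the variable names are illustrative assumptions.

```python
import math


def entropy_bits(pmf):
    """Shannon entropy in bits of a distribution given as a sequence of probabilities."""
    # Zero-probability outcomes contribute nothing, so they are skipped.
    return -sum(p * math.log2(p) for p in pmf if p > 0)


fair_coin = [1 / 2] * 2  # two equally likely outcomes
fair_die = [1 / 6] * 6   # six equally likely outcomes

if __name__ == "__main__":
    print("coin:", entropy_bits(fair_coin), "bits")
    print("die: ", entropy_bits(fair_die), "bits")
```

For a uniform distribution over k outcomes the entropy is log2(k) bits, so the coin gives 1 bit and the die gives log2(6) bits.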

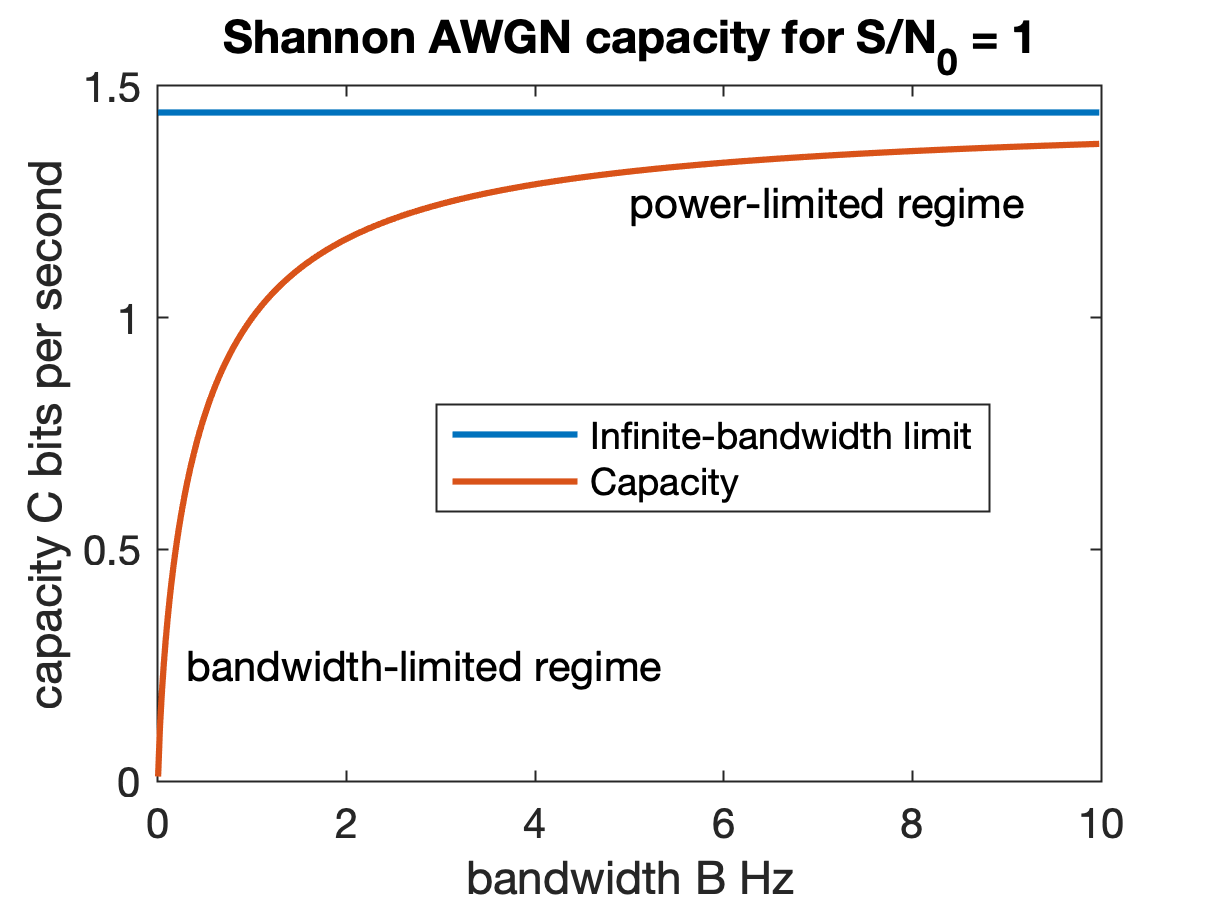

Channel Capacity

Channel capacity, in electrical engineering, computer science, and information theory, is the theoretical maximum rate at which information can be reliably transmitted over a communication channel. Following the terms of the noisy-channel coding theorem, the channel capacity of a given channel is the highest information rate (in units of information per unit time) that can be achieved with arbitrarily small error probability. Information theory, developed by Claude E. Shannon in 1948, defines the notion of channel capacity and provides a mathematical model by which it may be computed. The key result states that the capacity of the channel, as defined above, is given by the maximum of the mutual information between the input and output of the channel, where the maximization is with respect to the input distribution. The notion of channel capacity has been central to the development of modern wireline and wireless communication system ...
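To make the "maximum of the mutual information over the input distribution" concrete, the sketch below does a simple grid search for a binary symmetric channel. The choice of channel, the grid resolution, and the function names are assumptions for illustration. For this channel the search should agree with the known closed form 1 - h(p), where h is the binary entropy function.

```python
import math


def binary_entropy(x: float) -> float:
    """Binary entropy h(x) in bits, with h(0) = h(1) = 0."""
    if x in (0.0, 1.0):
        return 0.0
    return -x * math.log2(x) - (1 - x) * math.log2(1 - x)


def bsc_mutual_information(q: float, p: float) -> float:
    """I(X;Y) in bits for a binary symmetric channel with crossover p when P(X = 1) = q."""
    prob_y1 = q * (1 - p) + (1 - q) * p  # P(Y = 1)
    # I(X;Y) = H(Y) - H(Y|X), and H(Y|X) = h(p) for every input distribution.
    return binary_entropy(prob_y1) - binary_entropy(p)


def bsc_capacity_by_search(p: float, steps: int = 1000) -> float:
    """Maximize the mutual information over a grid of input distributions."""
    return max(bsc_mutual_information(i / steps, p) for i in range(steps + 1))


if __name__ == "__main__":
    p = 0.1  # assumed crossover probability
    print("grid search:", bsc_capacity_by_search(p))
    print("closed form:", 1 - binary_entropy(p))
```

The maximum is attained at the uniform input, q = 1/2. A grid search is used here only because it mirrors the definition; it is not an efficient method for larger input alphabets.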

Data Compression

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding: encoding is done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, for error detection and correction or line coding, the means for mapping data onto a sig ...
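A small round trip shows what "lossless" means in practice. This sketch uses Python's standard `zlib` module (a DEFLATE implementation) as one example of a lossless encoder and decoder pair. The sample data and variable names are assumptions chosen to be highly redundant.

```python
import zlib

# Highly repetitive input: 2000 bytes with obvious statistical redundancy.
redundant = b"abab" * 500

compressed = zlib.compress(redundant)   # encoder
restored = zlib.decompress(compressed)  # decoder

if __name__ == "__main__":
    print("original bytes:  ", len(redundant))
    print("compressed bytes:", len(compressed))
    print("exact round trip:", restored == redundant)
```

The decoder recovers the input byte for byte, which is the defining property of lossless compression. A lossy scheme would give up that guarantee in exchange for a smaller output.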

Codec

A codec is a computer hardware or software component that encodes or decodes a data stream or signal. Codec is a portmanteau of coder/decoder. In electronic communications, an endec is a device that acts as both an encoder and a decoder on a signal or data stream, and hence is a type of codec. Endec is a portmanteau of encoder/decoder. A coder or encoder encodes a data stream or a signal for transmission or storage, possibly in encrypted form, and the decoder function reverses the encoding for playback or editing. Codecs are used in videoconferencing, streaming media, and video editing applications.

History: Originally, in the mid-20th century, a codec was a hardware device that coded analog signals into digital form using pulse-code modulation (PCM). Later, the term was also applied to software for converting between digital signal formats, including companding functions.

Examples: An audio codec converts analog audio signals into digital signals for transmissi ...

Noisy Channel Coding Theorem

In information theory, the noisy-channel coding theorem (sometimes Shannon's theorem or Shannon's limit) establishes that for any given degree of noise contamination of a communication channel, it is possible (in theory) to communicate discrete data (digital information) nearly error-free up to a computable maximum rate through the channel. This result was presented by Claude Shannon in 1948 and was based in part on earlier work and ideas of Harry Nyquist and Ralph Hartley. The Shannon limit or Shannon capacity of a communication channel refers to the maximum rate of error-free data that can theoretically be transferred over the channel if the link is subject to random data transmission errors, for a particular noise level. It was first described by Shannon (1948), and shortly after published in a book by Shannon and Warren Weaver entitled The Mathematical Theory of Communication (1949). This founded the modern discipline of information theory.

Overview: Stated by Claud ...

Probability Mass Function

In probability and statistics, a probability mass function (sometimes called probability function or frequency function) is a function that gives the probability that a discrete random variable is exactly equal to some value. Sometimes it is also known as the discrete probability density function. The probability mass function is often the primary means of defining a discrete probability distribution, and such functions exist for either scalar or multivariate random variables whose domain is discrete. A probability mass function differs from a continuous probability density function (PDF) in that the latter is associated with continuous rather than discrete random variables. A continuous PDF must be integrated over an interval to yield a probability. The value of the random variable having the largest probability mass is called the mode.

Formal definition: The probability mass function is the probability distribution of a discrete random variable, and provides the p ...
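As a small worked example of a probability mass function and its mode, the sketch below tabulates the distribution of the sum of two fair six-sided dice with exact fractions. The dice example and the names `two_dice_pmf`, `pmf`, and `mode` are illustrative assumptions.

```python
from collections import Counter
from fractions import Fraction
from itertools import product


def two_dice_pmf():
    """Map each possible sum of two fair dice to its exact probability."""
    # Count how many of the 36 equally likely ordered rolls produce each sum.
    counts = Counter(a + b for a, b in product(range(1, 7), repeat=2))
    return {total: Fraction(c, 36) for total, c in sorted(counts.items())}


pmf = two_dice_pmf()
mode = max(pmf, key=pmf.get)  # value with the largest probability mass

if __name__ == "__main__":
    for total, prob in pmf.items():
        print(total, prob)
    print("sum of all masses:", sum(pmf.values()))
    print("mode:", mode)
```

Because the variable is discrete, each probability is read off directly from the function and the masses add up to exactly 1. No integration over an interval is needed, unlike with a continuous density.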

Source Coding

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding: encoding is done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, for error detection and correction or line coding, the means for mapping data onto a sign ...

Entropy

Entropy is a scientific concept, most commonly associated with states of disorder, randomness, or uncertainty. The term and the concept are used in diverse fields, from classical thermodynamics, where it was first recognized, to the microscopic description of nature in statistical physics, and to the principles of information theory. It has found far-ranging applications in chemistry and physics, in biological systems and their relation to life, in cosmology, economics, sociology, weather science, climate change and information systems including the transmission of information in telecommunication. Entropy is central to the second law of thermodynamics, which states that the entropy of an isolated system left to spontaneous evolution cannot decrease with time. As a result, isolated systems evolve toward thermodynamic equilibrium, where the entropy is highest. A consequence of the second law of thermodynamics is that certain processes are irreversible. The thermodynami ...

Channel Coding

In computing, telecommunication, information theory, and coding theory, forward error correction (FEC) or channel coding is a technique used for controlling errors in data transmission over unreliable or noisy communication channels. The central idea is that the sender encodes the message in a redundant way, most often by using an error correction code, or error correcting code (ECC). The redundancy allows the receiver not only to detect errors that may occur anywhere in the message, but often to correct a limited number of errors. Therefore a reverse channel to request re-transmission may not be needed. The cost is a fixed, higher forward channel bandwidth. The American mathematician Richard Hamming pioneered this field in the 1940s and invented the first error-correcting code in 1950: the Hamming (7,4) code. FEC can be applied in situations where re-transmissions are costly or impossible, such as one-way communic ...
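The Hamming (7,4) code mentioned above is small enough to sketch in full. The version below assumes the common layout with parity bits at positions 1, 2, and 4 of the codeword. The function names and the list-of-bits representation are illustrative choices, not a reference implementation.

```python
def hamming74_encode(data):
    """Encode 4 data bits into a 7-bit codeword laid out as [p1, p2, d1, p3, d2, d3, d4]."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # covers codeword positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers codeword positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # covers codeword positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(received):
    """Correct at most one flipped bit and return the 4 data bits."""
    c = list(received)
    # Each syndrome bit re-checks one parity group.
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    # Read as a binary number, the syndrome is the 1-based position of the flipped bit.
    error_position = s1 + 2 * s2 + 4 * s3  # 0 means no error detected
    if error_position:
        c[error_position - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


if __name__ == "__main__":
    message = [1, 0, 1, 1]
    codeword = hamming74_encode(message)
    corrupted = list(codeword)
    corrupted[4] ^= 1  # flip one bit in transit
    print("sent:     ", codeword)
    print("received: ", corrupted)
    print("recovered:", hamming74_decode(corrupted))
```

The three extra bits are the redundancy: the receiver can repair any single flipped bit without asking for a re-transmission. Two or more flipped bits exceed what this code can correct and would be decoded to the wrong message.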
