
Heavy-tailed

In probability theory, heavy-tailed distributions are probability distributions whose tails are not exponentially bounded: that is, they have heavier tails than the exponential distribution. Roughly speaking, “heavy-tailed” means the distribution decreases more slowly than an exponential distribution, so extreme values are more likely. In many applications it is the right tail of the distribution that is of interest, but a distribution may have a heavy left tail, or both tails may be heavy. There are three important subclasses of heavy-tailed distributions: the fat-tailed distributions, the long-tailed distributions, and the subexponential distributions. In practice, all commonly used heavy-tailed distributions belong to the subexponential class, introduced by Jozef Teugels. There is still some discrepancy over the use of the term heavy-tailed. There are two other definitions in use. Some authors use the term to refer to those distributions which do not have all their po ...
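
The phrase "not exponentially bounded" can be illustrated numerically. The sketch below assumes the usual formalization (not spelled out in the excerpt above) that a right tail is heavy when e^{t x} P(X > x) grows without bound as x increases, for every t > 0. The Pareto distribution and the parameter values are illustrative choices, not taken from the text.

```python
import math

def exponential_survival(x, rate=1.0):
    """P(X > x) for an exponential distribution with the given rate."""
    return math.exp(-rate * x)

def pareto_survival(x, alpha=2.0, x_min=1.0):
    """P(X > x) for a Pareto distribution, a standard heavy-tailed example."""
    return 1.0 if x < x_min else (x_min / x) ** alpha

def tilted_tail(survival, x, t):
    """e^{t x} * P(X > x); grows without bound in x when the right tail is heavy."""
    return math.exp(t * x) * survival(x)

# Compare the two tails at a few increasingly large thresholds (t = 0.1).
for x in (10, 50, 100):
    print(x,
          tilted_tail(pareto_survival, x, 0.1),
          tilted_tail(exponential_survival, x, 0.1))
```

For the Pareto tail the printed quantity keeps increasing, while for the exponential tail with rate 1 it shrinks toward zero, since any t below the rate is dominated by the exponential decay.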

Fat-tailed Distribution

A fat-tailed distribution is a probability distribution that exhibits a large skewness or kurtosis, relative to that of either a normal distribution or an exponential distribution. In common usage, the terms fat-tailed and heavy-tailed are sometimes synonymous; fat-tailed is sometimes also defined as a subset of heavy-tailed. Different research communities favor one or the other largely for historical reasons, and may have differences in the precise definition of either. Fat-tailed distributions have been empirically encountered in a variety of areas: physics, earth sciences, economics and political science. The class of fat-tailed distributions includes those whose tails decay like a power law, which is a common point of reference in their use in the scientific literature. However, fat-tailed distributions also include other slowly-decaying distributions, such as the log-normal distribution. The extreme case: a power-law distribution The ...

Stable Distributions

In probability theory, a distribution is said to be stable if a linear combination of two independent random variables with this distribution has the same distribution, up to location and scale parameters. A random variable is said to be stable if its distribution is stable. The stable distribution family is also sometimes referred to as the Lévy alpha-stable distribution, after Paul Lévy, the first mathematician to have studied it. Of the four parameters defining the family, most attention has been focused on the stability parameter, \alpha. Stable distributions have 0 < \alpha \leq 2, with the upper bound corresponding to the normal distribution, and \alpha = 1 to the Cauchy distribution.

Lévy Distribution

In probability theory and statistics, the Lévy distribution, named after Paul Lévy, is a continuous probability distribution for a non-negative random variable. In spectroscopy, this distribution, with frequency as the dependent variable, is known as a van der Waals profile. It is a special case of the inverse-gamma distribution. It is a stable distribution. Definition The probability density function of the Lévy distribution over the domain x \ge \mu is : f(x; \mu, c) = \sqrt{\frac{c}{2\pi}} \, \frac{e^{-\frac{c}{2(x-\mu)}}}{(x-\mu)^{3/2}}, where \mu is the location parameter, and c is the scale parameter. The cumulative distribution function is : F(x; \mu, ...
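
As a rough check of the definition, the sketch below codes the density f(x; \mu, c) = \sqrt{c/(2\pi)} \, e^{-c/(2(x-\mu))} / (x-\mu)^{3/2} and compares it with a numerical derivative of the cumulative distribution function. The closed form F(x; \mu, c) = \operatorname{erfc}(\sqrt{c/(2(x-\mu))}) is the standard expression and is an assumption here, since the excerpt truncates before stating it. All function names are illustrative.

```python
import math

def levy_pdf(x, mu=0.0, c=1.0):
    """Lévy density; zero at and below the location parameter mu."""
    if x <= mu:
        return 0.0
    u = x - mu
    return math.sqrt(c / (2.0 * math.pi)) * math.exp(-c / (2.0 * u)) / u ** 1.5

def levy_cdf(x, mu=0.0, c=1.0):
    """Assumed closed form of the Lévy CDF via the complementary error function."""
    if x <= mu:
        return 0.0
    return math.erfc(math.sqrt(c / (2.0 * (x - mu))))

def central_difference(f, x, h=1e-5):
    """Symmetric finite-difference approximation of f'(x)."""
    return (f(x + h) - f(x - h)) / (2.0 * h)

# The derivative of the CDF should agree with the density.
print(central_difference(levy_cdf, 1.5), levy_pdf(1.5))
```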

Log-Cauchy Distribution

In probability theory, a log-Cauchy distribution is a probability distribution of a random variable whose logarithm is distributed in accordance with a Cauchy distribution. If ''X'' is a random variable with a Cauchy distribution, then ''Y'' = exp(''X'') has a log-Cauchy distribution; likewise, if ''Y'' has a log-Cauchy distribution, then ''X'' = log(''Y'') has a Cauchy distribution. Characterization The log-Cauchy distribution is a special case of the log-t distribution where the degrees of freedom parameter is equal to 1. Probability density function The log-Cauchy distribution has the probability density function: :\begin{align} f(x; \mu,\sigma) & = \frac{1}{x\pi\sigma \left[1 + \left(\frac{\ln x - \mu}{\sigma}\right)^2\right]}, \ \ x>0 \\ & = \frac{1}{x\pi} \left[ \frac{\sigma}{(\ln x - \mu)^2 + \sigma^2} \right], \ \ x>0 \end{align} where \mu is a real number and \sigma >0. If \sigma is known, the scale parameter is e^{\mu}. \mu and \sigma correspond to the location parameter and scale parameter of the associated Cauchy distribution. Some authors define \mu and \sigma as the location and scale parameter ...
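
The relationship ''Y'' = exp(''X'') can be made concrete with a change of variables: the density of ''Y'' is the Cauchy density evaluated at log(''y''), divided by ''y''. The sketch below assumes the standard Cauchy density 1 / (\pi\sigma[1 + ((x-\mu)/\sigma)^2]) and the arctangent form of its CDF; the function names are illustrative.

```python
import math

def cauchy_pdf(x, mu=0.0, sigma=1.0):
    """Density of a Cauchy distribution with location mu and scale sigma."""
    z = (x - mu) / sigma
    return 1.0 / (math.pi * sigma * (1.0 + z * z))

def log_cauchy_pdf(y, mu=0.0, sigma=1.0):
    """Density of Y = exp(X), X Cauchy: change of variables gives f_X(ln y) / y."""
    if y <= 0.0:
        return 0.0
    return cauchy_pdf(math.log(y), mu, sigma) / y

def log_cauchy_cdf(y, mu=0.0, sigma=1.0):
    """CDF of Y, obtained from the Cauchy CDF evaluated at ln y."""
    if y <= 0.0:
        return 0.0
    return 0.5 + math.atan((math.log(y) - mu) / sigma) / math.pi

# The median of Y sits at exp(mu), which is why exp(mu) acts as a scale parameter.
print(log_cauchy_cdf(math.exp(0.7), mu=0.7, sigma=2.0))
```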

Variance

In probability theory and statistics, variance is the expected value of the squared deviation from the mean of a random variable. The standard deviation (SD) is obtained as the square root of the variance. Variance is a measure of dispersion, meaning it is a measure of how far a set of numbers is spread out from their average value. It is the second central moment of a distribution, and the covariance of the random variable with itself, and it is often represented by \sigma^2, s^2, \operatorname{Var}(X), V(X), or \mathbb{V}(X). An advantage of variance as a measure of dispersion is that it is more amenable to algebraic manipulation than other measures of dispersion such as the expected absolute deviation; for example, the variance of a sum of uncorrelated random variables is equal to the sum of their variances. A disadvantage of the variance for practical applications is that, unlike the standard deviation, its units differ from the random variable, which is why the standard devi ...
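
The additivity property for uncorrelated variables can be checked exactly on a small example. The sketch below uses a hypothetical pair (X, Y) with X uniform on {-1, 0, 1} and Y = X^2, which are uncorrelated although clearly dependent; exact rational arithmetic avoids rounding noise.

```python
from fractions import Fraction

def mean(values):
    """Arithmetic mean as an exact fraction."""
    return sum(values, Fraction(0)) / len(values)

def variance(values):
    """Population variance: mean squared deviation from the mean."""
    m = mean(values)
    return mean([(v - m) ** 2 for v in values])

def covariance(xs, ys):
    """Population covariance of paired, equally likely outcomes."""
    mx, my = mean(xs), mean(ys)
    return mean([(x - mx) * (y - my) for x, y in zip(xs, ys)])

xs = [-1, 0, 1]                      # equally likely outcomes of X
ys = [x * x for x in xs]             # Y = X^2, dependent on X but uncorrelated with it
sums = [x + y for x, y in zip(xs, ys)]

print(covariance(xs, ys))            # zero covariance means uncorrelated
print(variance(sums), variance(xs) + variance(ys))
```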

Long-tailed Distribution

In statistics and business, a long tail of some distributions of numbers is the portion of the distribution having many occurrences far from the "head" or central part of the distribution. The distribution could involve popularities, random numbers of occurrences of events with various probabilities, etc. The term is often used loosely, with no definition or an arbitrary definition, but precise definitions are possible. In statistics, the term ''long-tailed distribution'' has a narrow technical meaning, and is a subtype of heavy-tailed distribution. Intuitively, a distribution is (right) long-tailed if, for any fixed amount, when a quantity exceeds a high level, it almost certainly exceeds it by at least that amount: large quantities are probably even larger. Note that there is no sense of ''the'' "long tail" of a distribution, but only the ''property'' of a distribution being long-tailed. In business, the term ''long tail'' is applied to rank-size distributions or rank-fre ...
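
The intuitive description above corresponds to the conditional probability P(X > x + t | X > x) approaching 1 as x grows, for any fixed t > 0. The sketch below evaluates that ratio for a Pareto tail (an illustrative long-tailed choice) and for an exponential tail, which is not long-tailed; the parameter values are assumptions made for the example.

```python
import math

def pareto_survival(x, alpha=2.0, x_min=1.0):
    """P(X > x) for a Pareto distribution."""
    return 1.0 if x < x_min else (x_min / x) ** alpha

def exponential_survival(x, rate=1.0):
    """P(X > x) for an exponential distribution."""
    return math.exp(-rate * x)

def conditional_exceedance(survival, x, t):
    """P(X > x + t | X > x) = P(X > x + t) / P(X > x)."""
    return survival(x + t) / survival(x)

# For the Pareto tail the ratio climbs toward 1; for the exponential it stays at exp(-t).
for x in (10.0, 1e3, 1e6):
    print(x,
          conditional_exceedance(pareto_survival, x, 5.0),
          conditional_exceedance(exponential_survival, min(x, 100.0), 5.0))
```

The exponential threshold is capped at 100 in the loop only to keep its survival probability from underflowing to zero in floating point.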

Logarithmic Growth

In mathematics, logarithmic growth describes a phenomenon whose size or cost can be described as a logarithm function of some input, e.g. ''y'' = ''C'' log(''x''). Any logarithm base can be used, since one can be converted to another by multiplying by a fixed constant. Logarithmic growth is the inverse of exponential growth and is very slow. A familiar example of logarithmic growth is a number, ''N'', in positional notation, which grows as log<sub>''b''</sub>(''N''), where ''b'' is the base of the number system used, e.g. 10 for decimal arithmetic. In more advanced mathematics, the partial sums of the harmonic series :1+\frac{1}{2}+\frac{1}{3}+\frac{1}{4}+\frac{1}{5}+\cdots grow logarithmically. In the design of computer algorithms, logarithmic growth, and related variants, such as log-linear, or linearithmic, growth are very desirable indications of efficiency, and occur in the time complexity analysis of algorithms such as binary search. Logarithmic growth can lead to apparent paradoxes ...
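
The claim about the harmonic series can be checked directly: the difference between the n-th partial sum and ln(n) settles near the Euler–Mascheroni constant (about 0.5772), a standard fact that is not stated in the excerpt. The helper name below is illustrative.

```python
import math

def harmonic(n):
    """n-th partial sum of the harmonic series 1 + 1/2 + ... + 1/n."""
    return sum(1.0 / k for k in range(1, n + 1))

# Multiplying n by 10 adds roughly ln(10) to the partial sum: logarithmic growth.
for n in (10, 1_000, 100_000):
    print(n, harmonic(n), harmonic(n) - math.log(n))
```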

Q-Gaussian Distribution

The ''q''-Gaussian is a probability distribution arising from the maximization of the Tsallis entropy under appropriate constraints. It is one example of a Tsallis distribution. The ''q''-Gaussian is a generalization of the Gaussian in the same way that Tsallis entropy is a generalization of standard Boltzmann–Gibbs entropy or Shannon entropy. The normal distribution is recovered as ''q'' → 1. The ''q''-Gaussian has been applied to problems in the fields of statistical mechanics, geology, anatomy, astronomy, economics, finance, and machine learning. The distribution is often favored for its heavy tails in comparison to the Gaussian for 1 < ''q'' < 3. For ''q'' < 1 the ''q''-Gaussian distribution is the PDF of a bounded random variable. In biology and other domains, this makes the ''q''-Gaussian distribution more suitable than the Gaussian distribution to model the effect of external stochast ...

Indicator Function

In mathematics, an indicator function or a characteristic function of a subset of a set is a function that maps elements of the subset to one, and all other elements to zero. That is, if A is a subset of some set X, then the indicator function of A is the function \mathbf{1}_A defined by \mathbf{1}_A(x) = 1 if x \in A, and \mathbf{1}_A(x) = 0 otherwise. Other common notations include \chi_A. The indicator function of A is the Iverson bracket of the property of belonging to A; that is, \mathbf{1}_A(x) = [x \in A]. For example, the Dirichlet function is the indicator function of the rational numbers as a subset of the real numbers. Definition Given an arbitrary set X, the indicator function of a subset A of X is the function \mathbf{1}_A \colon X \to \{0, 1\} defined by \mathbf{1}_A(x) = \begin{cases} 1 & \text{if } x \in A \\ 0 & \text{if } x \notin A. \end{cases} The Iverson bracket provides the equivalent notation [x \in A] that can be used instead of \mathbf{1}_A ...
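
A minimal sketch of the definition in code, assuming the subset is given as a Python set (the names are illustrative). It also checks one standard identity not stated in the excerpt: the indicator of an intersection is the product of the indicators.

```python
def indicator(subset):
    """Return the indicator function of `subset`: 1 on members, 0 elsewhere."""
    def one_A(x):
        return 1 if x in subset else 0
    return one_A

universe = range(10)
evens = {x for x in universe if x % 2 == 0}
small = {x for x in universe if x < 4}

one_evens = indicator(evens)
one_small = indicator(small)
one_both = indicator(evens & small)

# Indicator of the intersection equals the pointwise product of the indicators.
print([one_both(x) for x in universe])
print([one_evens(x) * one_small(x) for x in universe])
```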

Fréchet Distribution

The Fréchet distribution, also known as inverse Weibull distribution, is a special case of the generalized extreme value distribution. It has the cumulative distribution function : \Pr(X \le x) = e^{-x^{-\alpha}} \text{ if } x > 0, where \alpha > 0 is a shape parameter. It can be generalised to include a location parameter m (the minimum) and a scale parameter s > 0 with the cumulative distribution function : \Pr(X \le x) = \exp\left[ -\left( \tfrac{x-m}{s} \right)^{-\alpha} \right] \text{ if } x > m. It is named for Maurice Fréchet, who wrote a related paper in 1927; further work was done by Fisher and Tippett in 1928 and by Gumbel in 1958. Characteristics The single-parameter Fréchet, with parameter \alpha, has raw moments : \mu_k = \int_0^\infty x^k f(x) \, \mathrm{d}x = \int_0^\infty t^{-k/\alpha} e^{-t} \, \mathrm{d}t (with t = x^{-\alpha}), defined only for k < \alpha, in which case \mu_k = \Gamma\left(1-\tfrac{k}{\alpha}\right). In particular: * For \alpha>1 the expectation is E[X]=\Gamma(1-\tfrac{1}{\alpha}) * For \alpha>2 the variance is \text{Var}(X)=\Gamma( ...
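
The sketch below codes the three-parameter Fréchet CDF, \exp[-((x-m)/s)^{-\alpha}] for x > m, together with its inverse and the mean for \alpha > 1. The quantile formula m + s(-\ln p)^{-1/\alpha} follows from inverting that CDF; the mean m + s\,\Gamma(1 - 1/\alpha) is the shifted and scaled form of the single-parameter expectation. Function names and default values are illustrative.

```python
import math

def frechet_cdf(x, alpha, m=0.0, s=1.0):
    """Fréchet CDF with shape alpha, location m (the minimum) and scale s."""
    if x <= m:
        return 0.0
    return math.exp(-(((x - m) / s) ** (-alpha)))

def frechet_quantile(p, alpha, m=0.0, s=1.0):
    """Inverse CDF for 0 < p < 1, obtained by solving frechet_cdf(x) = p for x."""
    return m + s * (-math.log(p)) ** (-1.0 / alpha)

def frechet_mean(alpha, m=0.0, s=1.0):
    """Expectation, finite only when alpha > 1."""
    if alpha <= 1.0:
        raise ValueError("the mean is infinite for alpha <= 1")
    return m + s * math.gamma(1.0 - 1.0 / alpha)

# Round trip: the quantile at p maps back to p under the CDF.
x_median = frechet_quantile(0.5, alpha=2.0)
print(x_median, frechet_cdf(x_median, alpha=2.0), frechet_mean(2.0))
```

The quantile function also gives a simple way to draw samples by inverse transform, by feeding it uniform random numbers in (0, 1).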

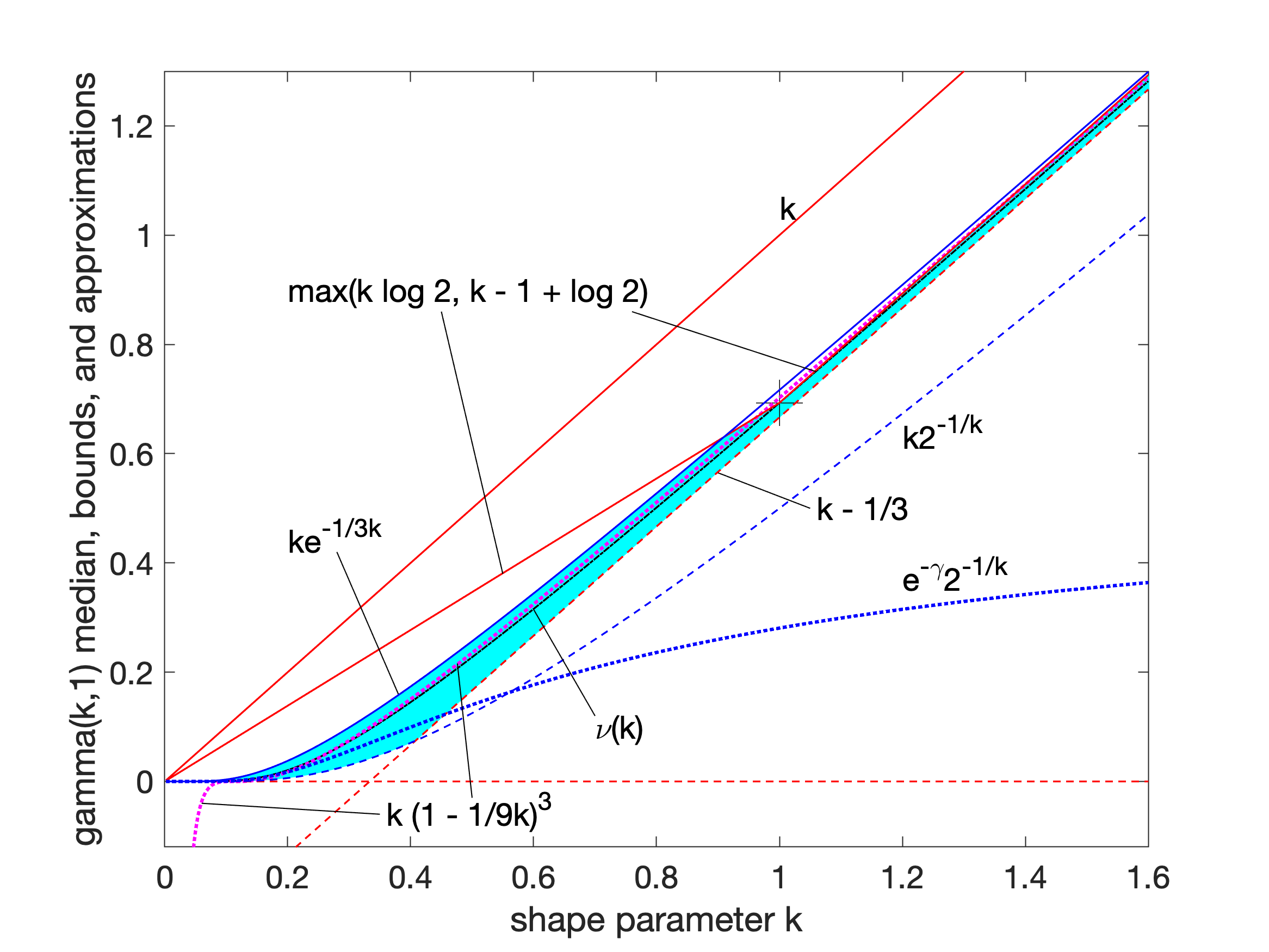

Gamma Distribution

In probability theory and statistics, the gamma distribution is a versatile two-parameter family of continuous probability distributions. The exponential distribution, Erlang distribution, and chi-squared distribution are special cases of the gamma distribution. There are two equivalent parameterizations in common use: (1) with a shape parameter ''α'' and a scale parameter ''θ''; (2) with a shape parameter ''α'' and a rate parameter ''λ'' = 1/''θ''. In each of these forms, both parameters are positive real numbers. The distribution has important applications in various fields, including econometrics, Bayesian statistics, and life testing. In econometrics, the (''α'', ''θ'') parameterization is common for modeling waiting times, such as the time until death, where it often takes the form of an Erlang distribution for integer ''α'' values. Bayesian statisticians prefer the (''α'', ''λ'') parameterization, utilizing the gamma distribution as a conjugate prior for several inverse scale parameters, facilit ...
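
The equivalence of the two parameterizations can be seen by writing both densities and substituting ''λ'' = 1/''θ''. The sketch below assumes the standard gamma density in each form; the function names are illustrative.

```python
import math

def gamma_pdf_scale(x, alpha, theta):
    """Gamma density with shape alpha and scale theta, for x > 0."""
    return x ** (alpha - 1) * math.exp(-x / theta) / (math.gamma(alpha) * theta ** alpha)

def gamma_pdf_rate(x, alpha, lam):
    """Gamma density with shape alpha and rate lam, for x > 0."""
    return lam ** alpha * x ** (alpha - 1) * math.exp(-lam * x) / math.gamma(alpha)

# Same distribution, two parameterizations: scale theta = 2 is rate lam = 0.5.
print(gamma_pdf_scale(3.0, alpha=2.5, theta=2.0), gamma_pdf_rate(3.0, alpha=2.5, lam=0.5))

# With alpha = 1 the rate form reduces to the exponential density lam * exp(-lam * x).
print(gamma_pdf_rate(3.0, alpha=1.0, lam=0.5), 0.5 * math.exp(-1.5))
```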

Log-logistic Distribution

In probability and statistics, the log-logistic distribution (known as the Fisk distribution in economics) is a continuous probability distribution for a non-negative random variable. It is used in survival analysis as a parametric model for events whose rate increases initially and decreases later, as, for example, mortality rate from cancer following diagnosis or treatment. It has also been used in hydrology to model stream flow and precipitation, in economics as a simple model of the distribution of wealth or income, and in networking to model the transmission times of data considering both the network and the software. The log-logistic distribution is the probability distribution of a random variable whose logarithm has a logistic distribution. It is similar in shape to the log-normal distribution but has heavier tails. Unlike the log-normal, its cumulative distribution function can be written in closed form. Characterization There are several different parameterizations of ...