
Variational Bayes

Variational Bayesian methods are a family of techniques for approximating intractable integrals arising in Bayesian inference and machine learning. They are typically used in complex statistical models consisting of observed variables (usually termed "data") as well as unknown parameters and latent variables, with various sorts of relationships among the three types of random variables, as might be described by a graphical model. As is typical in Bayesian inference, the parameters and latent variables are grouped together as "unobserved variables". Variational Bayesian methods are primarily used for two purposes: (1) to provide an analytical approximation to the posterior probability of the unobserved variables, in order to do statistical inference over these variables; and (2) to derive a lower bound for the marginal likelihood (sometimes called the "evidence") of the observed data (i.e. the marginal probability of the data given the model, with marginalization performed over unobserved ...
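The lower bound on the marginal likelihood mentioned above is often called the evidence lower bound (ELBO). The following is a minimal sketch, assuming a hypothetical toy model with one binary latent variable and made-up joint probabilities. It illustrates that the bound never exceeds the log evidence and that the gap equals the KL divergence from the approximation to the exact posterior. The names `joint`, `elbo`, and `kl_to_posterior` are illustrative only.

```python
import math

# Hypothetical toy model: latent z in {0, 1} and one fixed observation x.
# joint[z] holds p(x, z) for that observation (values chosen for illustration).
joint = [0.3, 0.1]


def log_evidence(joint):
    """log p(x) = log sum_z p(x, z)."""
    return math.log(sum(joint))


def elbo(q, joint):
    """E_q[log p(x, z) - log q(z)], a lower bound on log p(x)."""
    return sum(
        qz * (math.log(pz) - math.log(qz))
        for qz, pz in zip(q, joint)
        if qz > 0
    )


def kl_to_posterior(q, joint):
    """KL(q || p(z | x)), using the exact posterior p(z | x) = p(x, z) / p(x)."""
    evidence = sum(joint)
    return sum(
        qz * math.log(qz / (pz / evidence))
        for qz, pz in zip(q, joint)
        if qz > 0
    )


q = [0.5, 0.5]  # an arbitrary approximating distribution over z
print("ELBO:        ", elbo(q, joint))
print("log evidence:", log_evidence(joint))
print("gap (KL):    ", kl_to_posterior(q, joint))
```

In this toy case the exact posterior is available, so setting `q` to it closes the gap. In realistic models the posterior is intractable and `q` is restricted to a tractable family.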

Integral

In mathematics, an integral is the continuous analog of a sum, which is used to calculate areas, volumes, and their generalizations. Integration, the process of computing an integral, is one of the two fundamental operations of calculus, the other being differentiation. Integration was initially used to solve problems in mathematics and physics, such as finding the area under a curve, or determining displacement from velocity. Usage of integration expanded to a wide variety of scientific fields thereafter. A definite integral computes the signed area of the region in the plane that is bounded by the graph of a given function between two points in the real line. Conventionally, areas above the horizontal axis of the plane are positive while areas below are n ...
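The signed-area reading of a definite integral can be checked numerically. The sketch below uses a simple midpoint rule, one of many possible quadrature schemes. The function name `midpoint_integral` and the step count are assumptions made for illustration.

```python
import math


def midpoint_integral(f, a, b, n=1000):
    """Approximate the definite integral of f over [a, b] with n midpoint rectangles."""
    width = (b - a) / n
    return width * sum(f(a + (i + 0.5) * width) for i in range(n))


# Over [0, pi] the sine curve lies above the axis, so the area is positive.
above = midpoint_integral(math.sin, 0.0, math.pi)

# Over [0, 2*pi] the positive and negative lobes cancel in the signed area.
signed = midpoint_integral(math.sin, 0.0, 2.0 * math.pi)

print(above, signed)
```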

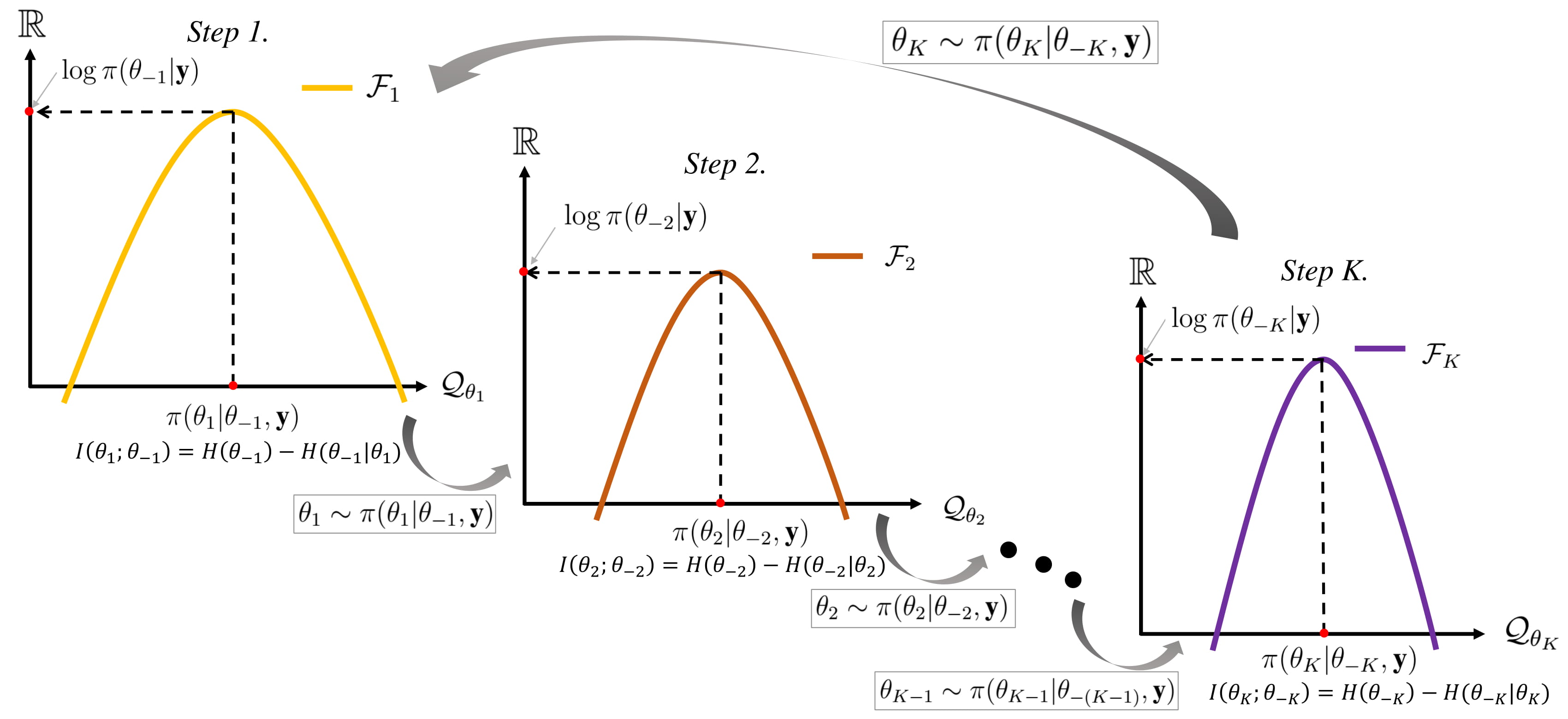

Gibbs Sampling

In statistics, Gibbs sampling or a Gibbs sampler is a Markov chain Monte Carlo (MCMC) algorithm for sampling from a specified multivariate probability distribution when direct sampling from the joint distribution is difficult, but sampling from the conditional distribution of each variable is more practical. The resulting sequence of samples can be used to approximate the joint distribution (e.g., to generate a histogram of the distribution); to approximate the marginal distribution of one of the variables, or some subset of the variables (for example, the unknown parameters or latent variables); or to compute an integral (such as the expected value of one of the variables). Typically, some of the variables correspond to observations whose values are known, and hence do not need to be sampled. Gibbs sampling is commonly used as a means of statistical inference, especially Bayesian inference. It is a randomized algorithm (i.e. an algorithm that makes ...
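As a minimal sketch of the idea, assume the target is a bivariate standard normal with correlation `rho`. Each conditional is then itself normal, with mean `rho` times the other variable and variance `1 - rho**2`. The function name, burn-in length, and seed are illustrative assumptions, not part of any particular library.

```python
import math
import random


def gibbs_bivariate_normal(rho, n_samples, burn_in=500, seed=0):
    """Draw (x, y) pairs by alternately sampling x | y and y | x."""
    rng = random.Random(seed)
    cond_sd = math.sqrt(1.0 - rho * rho)  # std. dev. of each conditional
    x, y = 0.0, 0.0  # arbitrary starting point
    samples = []
    for i in range(burn_in + n_samples):
        x = rng.gauss(rho * y, cond_sd)  # sample x given the current y
        y = rng.gauss(rho * x, cond_sd)  # sample y given the new x
        if i >= burn_in:  # discard early draws that depend on the start
            samples.append((x, y))
    return samples


samples = gibbs_bivariate_normal(rho=0.8, n_samples=20000)
mean_x = sum(x for x, _ in samples) / len(samples)
corr_est = sum(x * y for x, y in samples) / len(samples)  # estimates E[xy] = rho
print(mean_x, corr_est)
```

Successive draws are correlated, so estimates converge more slowly than with independent samples. That is the usual trade-off for only needing the conditionals.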

Entropy (Information Theory)

In information theory, the entropy of a random variable quantifies the average level of uncertainty or information associated with the variable's potential states or possible outcomes. This measures the expected amount of information needed to describe the state of the variable, considering the distribution of probabilities across all potential states. Given a discrete random variable X, which may be any member x within the set \mathcal{X} and is distributed according to p\colon \mathcal{X}\to[0, 1], the entropy is \mathrm{H}(X) := -\sum_{x \in \mathcal{X}} p(x) \log p(x), where \Sigma denotes the sum over the variable's possible values. The choice of base for \log, the logarithm, varies for different applications. Base 2 gives the unit of bits (or "shannons"), while base e gives "natural units" (nats), and base 10 gives units of "dits", "bans", or "hartleys". An equivalent definition of entropy is the expected value of the self-information of a v ...
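The definition above translates directly into a few lines of code. This is a small sketch in which the function name `entropy` and its input validation are assumptions. Terms with zero probability are skipped, following the usual convention that 0 log 0 is taken as 0.

```python
import math


def entropy(probs, base=2.0):
    """Shannon entropy of a discrete distribution given as a list of probabilities."""
    if any(p < 0 for p in probs) or abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probs must be non-negative and sum to 1")
    # Zero-probability outcomes contribute nothing to the sum.
    return -sum(p * math.log(p, base) for p in probs if p > 0)


fair_coin_bits = entropy([0.5, 0.5])            # base 2: measured in bits
fair_coin_nats = entropy([0.5, 0.5], math.e)    # base e: measured in nats
biased_coin_bits = entropy([0.9, 0.1])          # less uncertain than a fair coin
print(fair_coin_bits, fair_coin_nats, biased_coin_bits)
```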

Thermodynamic Free Energy

In thermodynamics, the thermodynamic free energy is one of the state functions of a thermodynamic system. The change in the free energy is the maximum amount of work that the system can perform in a process at constant temperature, and its sign indicates whether the process is thermodynamically favorable or forbidden. Since free energy usually contains potential energy, it is not absolute but depends on the choice of a zero point. Therefore, only relative free energy values, or changes in free energy, are physically meaningful. The free energy is the portion of any first-law energy that is available to perform thermodynamic work at constant temperature, i.e., work mediated by thermal energy. Free energy is subject to irreversible loss in the course of such work. Since first-law energy is always conserved, it is evident that free energy is an expendable, second-law kind of energy. Several free energy functions may be formulated based on system criteria. Free energy ...

Model Evidence

A marginal likelihood is a likelihood function that has been integrated over the parameter space. In Bayesian statistics, it represents the probability of generating the observed sample for all possible values of the parameters; it can be understood as the probability of the model itself and is therefore often referred to as model evidence or simply evidence. Due to the integration over the parameter space, the marginal likelihood does not directly depend upon the parameters. If the focus is not on model comparison, the marginal likelihood is simply the normalizing constant that ensures that the posterior is a proper probability. It is related to the partition function in statistical mechanics. Given a set of independent identically distributed data points \mathbf{X}=(x_1,\ldots,x_n), where x_i \sim p(x \mid \theta) according to some probability distribution parameterized by \theta, where \theta itself is a random variable described by a distribution, i.e. \theta \sim p(\the ...

Expected Value

In probability theory, the expected value (also called expectation, expectancy, expectation operator, mathematical expectation, mean, expectation value, or first moment) is a generalization of the weighted average. Informally, the expected value is the mean of the possible values a random variable can take, weighted by the probability of those outcomes. Since it is obtained through arithmetic, the expected value may not even be one of the values in the sample data set; it is not necessarily a value one would expect to observe in reality. The expected value of a random variable with a finite number of outcomes is a weighted average of all possible outcomes. In the case of a continuum of possible outcomes, the expectation is defined by integration. In the axiomatic foundation for probability provided by measure theory, the expectation is given by Lebesgue integration. The expected value of a random variable X is often denoted by \operatorname{E}(X), \operatorname{E}[X], or \operatorname{E}X, with a ...
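For a finite number of outcomes, the weighted average described above can be computed exactly. The sketch below uses a fair six-sided die as an illustrative example, with `expected_value` as a hypothetical helper name. It also shows that the result need not be an attainable outcome.

```python
from fractions import Fraction


def expected_value(outcomes, probs):
    """Probability-weighted average of a finite set of outcomes."""
    return sum(x * p for x, p in zip(outcomes, probs))


die_faces = [1, 2, 3, 4, 5, 6]
die_probs = [Fraction(1, 6)] * 6  # fair die: each face equally likely

ev = expected_value(die_faces, die_probs)
print(ev)               # 7/2
print(ev in die_faces)  # False: 3.5 is not a face of the die
```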

Expectation Propagation

Expectation propagation (EP) is a technique in Bayesian machine learning. EP finds approximations to a probability distribution. It uses an iterative approach that exploits the factorization structure of the target distribution. It differs from other Bayesian approximation approaches such as variational Bayesian methods. More specifically, suppose we wish to approximate an intractable probability distribution p(\mathbf{x}) with a tractable distribution q(\mathbf{x}). Expectation propagation achieves this approximation by minimizing the Kullback-Leibler divergence \mathrm{KL}(p \| q). Variational Bayesian methods minimize \mathrm{KL}(q \| p) instead. If q(\mathbf{x}) is a Gaussian \mathcal{N}(\mathbf{x} \mid \mu, \Sigma), then \mathrm{KL}(p \| q) is minimized with \mu and \Sigma being equal to the mean of p(\mathbf{x}) and the covariance of p(\mathbf{x}), respectively; this is called moment matching. Expectation propagation via moment matching plays a vital role in approximation for indicator functions ...

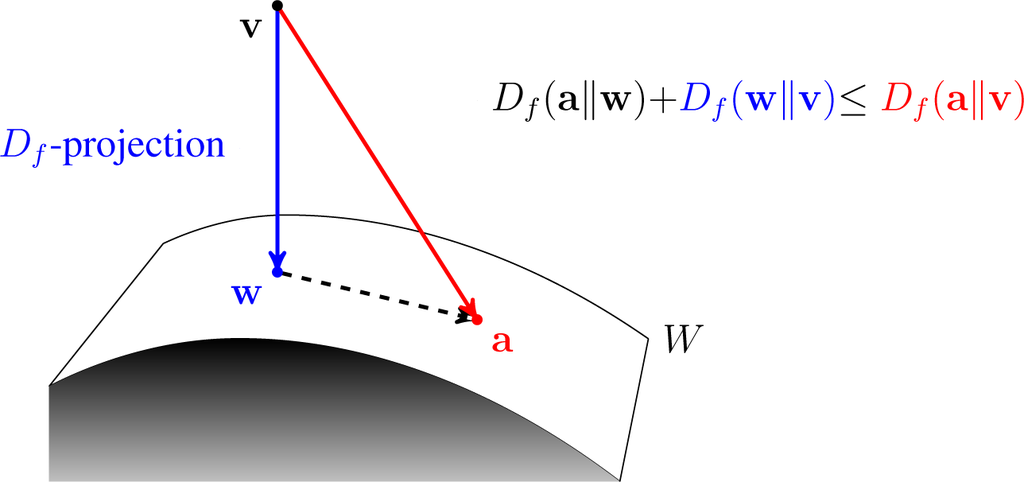

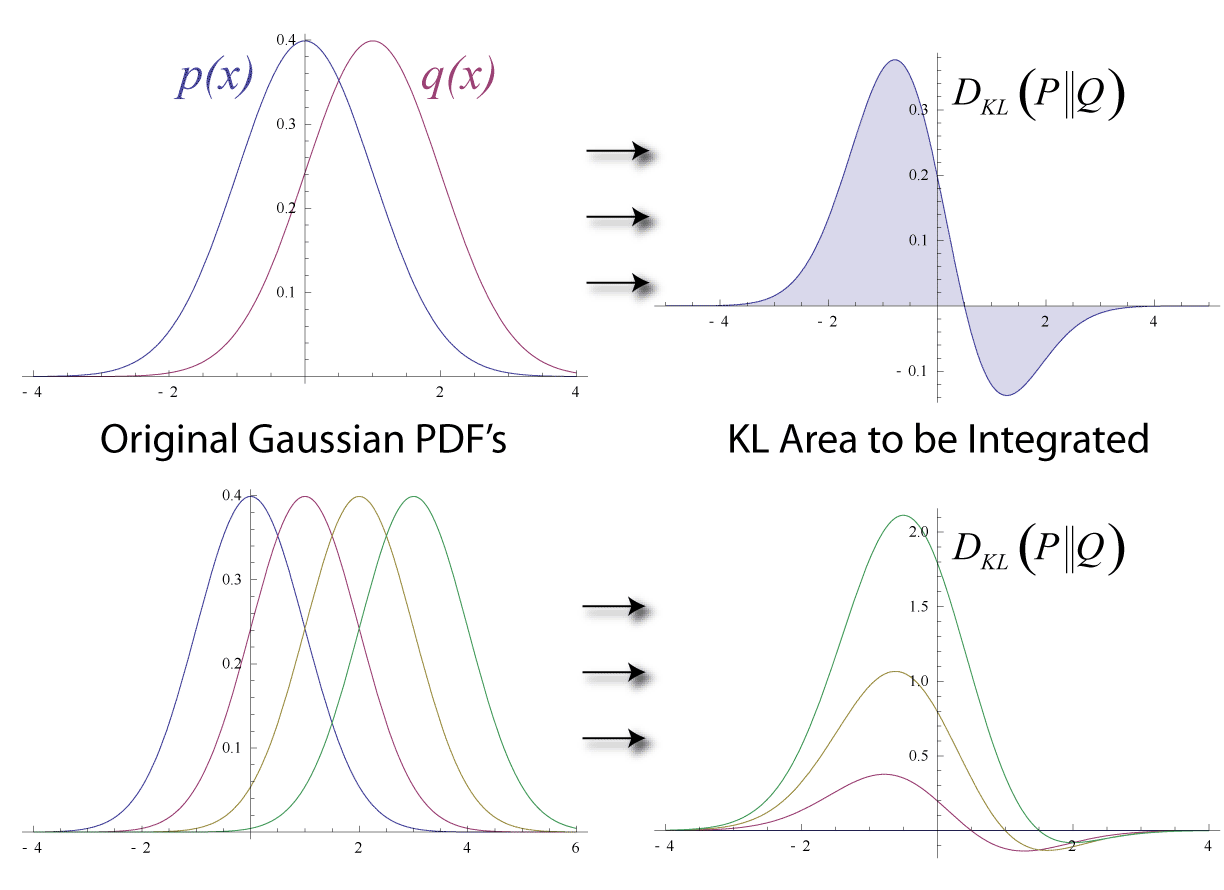

Kullback–Leibler Divergence

In mathematical statistics, the Kullback–Leibler (KL) divergence (also called relative entropy and I-divergence), denoted D_\text{KL}(P \parallel Q), is a type of statistical distance: a measure of how much a model probability distribution Q is different from a true probability distribution P. Mathematically, it is defined as D_\text{KL}(P \parallel Q) = \sum_{x \in \mathcal{X}} P(x) \, \log \frac{P(x)}{Q(x)}. A simple interpretation of the KL divergence of P from Q is the expected excess surprise from using Q as a model instead of P when the actual distribution is P. While it is a measure of how different two distributions are and is thus a distance in some sense, it is not actually a metric, which is the most familiar and formal type of distance. In particular, it is not symmetric in the two distributions (in contrast to variation of information), and does not satisfy the triangle inequality. Instead, in terms of information geometry, it is a type of divergence, a generalization of squared distance, and for cer ...
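The asymmetry noted above is easy to see numerically. The following sketch implements the discrete sum with natural logarithms. The function name `kl_divergence` and the two example distributions are illustrative assumptions.

```python
import math


def kl_divergence(p, q):
    """D_KL(P || Q) in nats for two discrete distributions on the same support."""
    total = 0.0
    for px, qx in zip(p, q):
        if px == 0:
            continue  # terms with P(x) = 0 contribute nothing
        if qx == 0:
            return math.inf  # P puts mass where Q has none
        total += px * math.log(px / qx)
    return total


p = [0.5, 0.5]
q = [0.9, 0.1]

forward = kl_divergence(p, q)  # P as the true distribution, Q as the model
reverse = kl_divergence(q, p)  # roles swapped
print(forward, reverse)
```

The two directions give different values, which is why the choice between minimizing one or the other leads to different approximations.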

Calculus Of Variations

The calculus of variations (or variational calculus) is a field of mathematical analysis that uses variations, which are small changes in functions and functionals, to find maxima and minima of functionals: mappings from a set of functions to the real numbers. Functionals are often expressed as definite integrals involving functions and their derivatives. Functions that maximize or minimize functionals may be found using the Euler–Lagrange equation of the calculus of variations. A simple example of such a problem is to find the curve of shortest length connecting two points. If there are no constraints, the solution is a straight line between the points. However, if the curve is constrained to lie on a surface in space, then the solution is less obvious, and possibly many solutions may exist. Such solutions are known as geodesics. A related problem is posed by Fermat's principle: li ...

Posterior Distribution

The posterior probability is a type of conditional probability that results from updating the prior probability with information summarized by the likelihood via an application of Bayes' rule. From an epistemological perspective, the posterior probability contains everything there is to know about an uncertain proposition (such as a scientific hypothesis, or parameter values), given prior knowledge and a mathematical model describing the observations available at a particular time. After the arrival of new information, the current posterior probability may serve as the prior in another round of Bayesian updating. In the context of Bayesian statistics, the posterior probability distribution usually describes the epistemic uncertainty about statistical parameters conditional on a collection of observed data. From a given posterior distribution, various point and interval estimates can be derived, such as the maximum a posteriori (MAP) or the highest posterior density interval ...

Maximum A Posteriori Estimation

An estimation procedure that is often claimed to be part of Bayesian statistics is the maximum a posteriori (MAP) estimate of an unknown quantity, which equals the mode of the posterior density with respect to some reference measure, typically the Lebesgue measure. The MAP can be used to obtain a point estimate of an unobserved quantity on the basis of empirical data. It is closely related to the method of maximum likelihood (ML) estimation, but employs an augmented optimization objective which incorporates a prior density over the quantity one wants to estimate. MAP estimation is therefore a regularization of maximum likelihood estimation, so it is not a well-defined statistic of the Bayesian posterior distribution. Assume that we want to estimate an unobserved population parameter \theta on the basis of observations x. Let f be the sampling distribution of x, so that f(x\mid\theta) is the probability of x when the underlying population parameter is \theta. Then t ...
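To make the relationship to maximum likelihood concrete, here is a minimal sketch for a hypothetical coin-flip setting: `k` successes in `n` Bernoulli trials with a Beta(`a`, `b`) prior on the success probability. The model choice and function names are assumptions made for illustration. The posterior is Beta(`a + k`, `b + n - k`), and the MAP estimate is its mode.

```python
def bernoulli_mle(k, n):
    """Maximum likelihood estimate of the success probability."""
    return k / n


def bernoulli_map(k, n, a, b):
    """Mode of the Beta(a + k, b + n - k) posterior under a Beta(a, b) prior."""
    post_a = a + k
    post_b = b + (n - k)
    if post_a <= 1 or post_b <= 1:
        raise ValueError("posterior mode is not interior for these parameters")
    return (post_a - 1) / (post_a + post_b - 2)


k, n = 7, 10
mle = bernoulli_mle(k, n)
map_informative = bernoulli_map(k, n, a=2, b=2)  # prior pulls toward 0.5
map_flat = bernoulli_map(k, n, a=1, b=1)         # uniform prior
print(mle, map_informative, map_flat)
```

With the uniform prior the MAP estimate coincides with the maximum likelihood estimate. The Beta(2, 2) prior acts as a regularizer that shrinks the estimate toward 0.5.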

Maximum Likelihood Estimation

In statistics, maximum likelihood estimation (MLE) is a method of estimating the parameters of an assumed probability distribution, given some observed data. This is achieved by maximizing a likelihood function so that, under the assumed statistical model, the observed data is most probable. The point in the parameter space that maximizes the likelihood function is called the maximum likelihood estimate. The logic of maximum likelihood is both intuitive and flexible, and as such the method has become a dominant means of statistical inference. If the likelihood function is differentiable, the derivative test for finding maxima can be applied. In some cases, the first-order conditions of the likelihood function can be solved analytically; for instance, the ordinary least squares estimator for a linear regression model maximizes the likelihood when ...
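A case where the first-order conditions can be solved analytically is an assumed Gaussian model with unknown mean and variance. The maximizers are the sample mean and the (biased, divide-by-n) sample variance. The sketch below uses made-up data and hypothetical function names, and compares the log-likelihood at the closed-form estimate with a perturbed parameter setting.

```python
import math


def gaussian_log_likelihood(data, mu, sigma2):
    """Log-likelihood of i.i.d. data under a normal model N(mu, sigma2)."""
    n = len(data)
    sq_err = sum((x - mu) ** 2 for x in data)
    return -0.5 * n * math.log(2.0 * math.pi * sigma2) - sq_err / (2.0 * sigma2)


def gaussian_mle(data):
    """Closed-form maximizers: sample mean and divide-by-n variance."""
    n = len(data)
    mu = sum(data) / n
    sigma2 = sum((x - mu) ** 2 for x in data) / n
    return mu, sigma2


data = [2.1, 1.9, 2.4, 2.0, 1.6]  # illustrative observations
mu_hat, sigma2_hat = gaussian_mle(data)

ll_at_mle = gaussian_log_likelihood(data, mu_hat, sigma2_hat)
ll_perturbed = gaussian_log_likelihood(data, mu_hat + 0.1, sigma2_hat * 1.5)
print(mu_hat, sigma2_hat, ll_at_mle, ll_perturbed)
```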