
Surrogate Data Testing

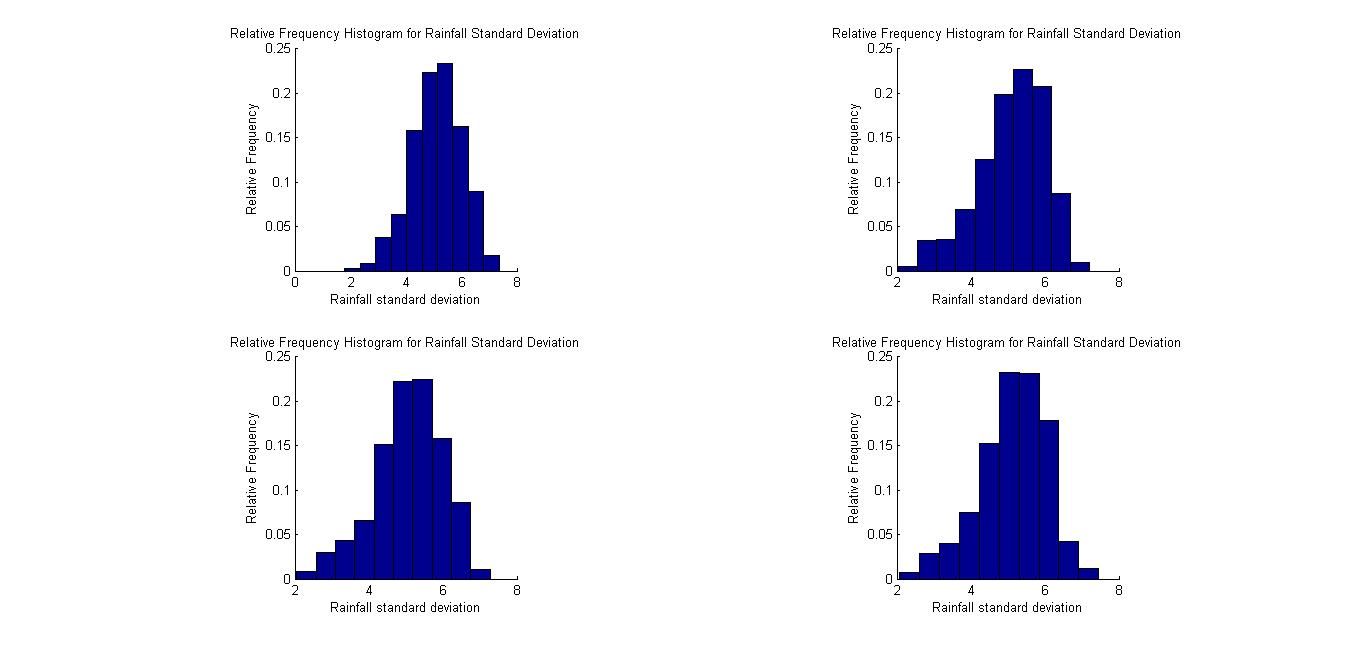

Surrogate data testing (or the ''method of surrogate data'') is a statistical proof-by-contradiction technique similar to permutation tests and parametric bootstrapping. It is used to detect non-linearity in a time series. The technique involves specifying a null hypothesis H_0 describing a linear process and then generating several surrogate data sets according to H_0 using Monte Carlo methods. A discriminating statistic is then calculated for the original time series and for each surrogate data set. If the value of the statistic for the original series differs significantly from its values for the surrogate sets, the null hypothesis is rejected and non-linearity is assumed. The particular surrogate data testing method to be used is directly related to the null hypothesis. Usually this is similar to the following: ''The data is a realization of a stationary linear system, whose output has possibly been measured by a monotonically increasing, possibly nonlinear (but static) function''. Here ''li ...
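The procedure described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the method of any particular reference: surrogates are generated by Fourier phase randomization (one common choice for a linear Gaussian null hypothesis), the discriminating statistic is a time-reversal asymmetry measure, and the names `phase_randomized_surrogate`, `time_reversal_asymmetry` and `surrogate_test` are hypothetical. A naive O(n^2) transform is used so that the sketch needs only the standard library.

```python
import cmath
import math
import random


def dft(x):
    """Naive O(n^2) discrete Fourier transform (adequate for short series)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft_real(X):
    """Inverse DFT, keeping only the real part of each sample."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)).real / n
            for t in range(n)]


def phase_randomized_surrogate(x, rng):
    """Assumed surrogate generator: keep the amplitude spectrum, randomize phases."""
    n = len(x)
    X = dft(x)
    Y = list(X)  # bin 0 (and the Nyquist bin for even n) stay unchanged
    for k in range(1, (n + 1) // 2):
        phi = rng.uniform(0.0, 2.0 * math.pi)
        Y[k] = abs(X[k]) * cmath.exp(1j * phi)
        Y[n - k] = Y[k].conjugate()  # Hermitian symmetry gives a real series
    return idft_real(Y)


def time_reversal_asymmetry(x, lag=1):
    """Assumed discriminating statistic: mean cubed increment of the series."""
    d = [(x[t] - x[t - lag]) ** 3 for t in range(lag, len(x))]
    return sum(d) / len(d)


def surrogate_test(x, n_surrogates=19, seed=0):
    """Return the statistic of x, the surrogate statistics, and a rank-based p-value."""
    rng = random.Random(seed)
    t0 = abs(time_reversal_asymmetry(x))
    ts = [abs(time_reversal_asymmetry(phase_randomized_surrogate(x, rng)))
          for _ in range(n_surrogates)]
    # Fraction of all values (original included) at least as extreme as t0
    p = (1 + sum(t >= t0 for t in ts)) / (n_surrogates + 1)
    return t0, ts, p
```

With 19 surrogates the smallest attainable p-value is 1/20, so rejecting H_0 only when the original statistic exceeds every surrogate value corresponds to a 5% one-sided test.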

Proof By Contradiction

In logic, proof by contradiction is a form of proof that establishes the truth or the validity of a proposition by showing that assuming the proposition to be false leads to a contradiction. Although it is quite freely used in mathematical proofs, not every school of mathematical thought accepts this kind of nonconstructive proof as universally valid. More broadly, proof by contradiction is any form of argument that establishes a statement by arriving at a contradiction, even when the initial assumption is not the negation of the statement to be proved. In this general sense, proof by contradiction is also known as indirect proof, proof by assuming the opposite, and ''reductio ad impossibile''. A mathematical proof employing proof by contradiction usually proceeds as follows: (1) The proposition to be proved is ''P''. (2) We assume ''P'' to be false, i.e., we assume ''¬P''. (3) It is then shown that ''¬P'' implies falsehood. This is typically accomplished by deriving two mutually ...

ARMA Model

In the statistical analysis of time series, autoregressive–moving-average (ARMA) models are a way to describe a (weakly) stationary stochastic process using autoregression (AR) and a moving average (MA), each with a polynomial. They are a tool for understanding a series and predicting future values. AR involves regressing the variable on its own lagged (i.e., past) values. MA involves modeling the error as a linear combination of error terms occurring contemporaneously and at various times in the past. The model is usually denoted ARMA(''p'', ''q''), where ''p'' is the order of AR and ''q'' is the order of MA. The general ARMA model was described in the 1951 thesis of Peter Whittle, ''Hypothesis testing in time series analysis'', and it was popularized in the 1970 book by George E. P. Box and Gwilym Jenkins. ARMA models can be estimated by using the Box–Jenkins method. Mathematical formulation: Autoregressive model. The notation AR(''p'') refers to the autoregressive ...
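To make the AR and MA parts concrete, the following sketch simulates an ARMA(''p'', ''q'') series from the recursion X_t = \sum_i \varphi_i X_{t-i} + \varepsilon_t + \sum_j \theta_j \varepsilon_{t-j}. It assumes Gaussian white noise and a zero mean; the function name `simulate_arma` and the `burn_in` parameter (initial samples discarded so the start-up transient fades) are illustrative choices, not part of the text above.

```python
import random


def simulate_arma(phi, theta, n, sigma=1.0, burn_in=200, seed=0):
    """Simulate n samples of an ARMA(len(phi), len(theta)) process.

    phi[i] multiplies X_{t-1-i} (AR part); theta[j] multiplies eps_{t-1-j} (MA part).
    """
    rng = random.Random(seed)
    p, q = len(phi), len(theta)
    x, eps = [], []
    for t in range(n + burn_in):
        e = rng.gauss(0.0, sigma)  # current innovation
        ar = sum(phi[i] * x[t - 1 - i] for i in range(p) if t - 1 - i >= 0)
        ma = sum(theta[j] * eps[t - 1 - j] for j in range(q) if t - 1 - j >= 0)
        x.append(ar + e + ma)
        eps.append(e)
    return x[burn_in:]  # drop the start-up transient
```

For example, `simulate_arma([0.5], [0.4], 500)` draws 500 samples from an ARMA(1, 1) process; the AR coefficients must be chosen so that the process is stationary, which this sketch does not check.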

Permutation Test

A permutation test (also called re-randomization test or shuffle test) is an exact statistical hypothesis test. A permutation test involves two or more samples. The (possibly counterfactual) null hypothesis is that all samples come from the same distribution H_0: F=G. Under the null hypothesis, the distribution of the test statistic is obtained by calculating all possible values of the test statistic under possible rearrangements of the observed data. Permutation tests are, therefore, a form of resampling. Permutation tests can be understood as surrogate data testing where the surrogate data under the null hypothesis are obtained through permutations of the original data. In other words, the method by which treatments are allocated to subjects in an experimental design is mirrored in the analysis of that design. If the labels are exchangeable under the null hypothesis, then the resulting tests yield exact significance levels; see also exchangeability. Confidence intervals can ...
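As a small illustration of enumerating every rearrangement, the sketch below runs an exact two-sample permutation test. The choice of test statistic (absolute difference in means) and the name `exact_permutation_test` are assumptions made for the example; the enumeration is only practical for small samples, since the number of relabelings grows combinatorially.

```python
from itertools import combinations


def exact_permutation_test(a, b):
    """Two-sided exact permutation test for a difference in means."""
    pooled = list(a) + list(b)
    n, k = len(pooled), len(a)
    total = sum(pooled)

    def mean_diff(sum_a):
        # Difference in means when the first group has sum sum_a
        return sum_a / k - (total - sum_a) / (n - k)

    observed = abs(mean_diff(sum(a)))
    count = extreme = 0
    # Every way of assigning k of the n pooled values to the first group
    for idx in combinations(range(n), k):
        stat = abs(mean_diff(sum(pooled[i] for i in idx)))
        count += 1
        extreme += stat >= observed - 1e-12  # small tolerance for float ties
    return extreme / count
```

For `a = [1, 2, 3]` and `b = [10, 11, 12]` there are 20 relabelings, of which only the observed split and its mirror image are as extreme, giving a p-value of 2/20 = 0.1.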

Resampling (statistics)

In statistics, resampling is the creation of new samples based on one observed sample. Resampling methods are: (1) permutation tests (also re-randomization tests) for generating counterfactual samples, (2) bootstrapping, (3) cross-validation, and (4) the jackknife. Permutation tests: Permutation tests rely on resampling the original data assuming the null hypothesis. Based on the resampled data it can be concluded how likely the original data is to occur under the null hypothesis. Bootstrap: Bootstrapping is a statistical method for estimating the sampling distribution of an estimator by sampling with replacement from the original sample, most often with the purpose of deriving robust estimates of standard errors and confidence intervals of a population parameter like a mean, median, proportion, odds ratio, correlation coefficient or regression coefficient. It has been called the plug-in principle (Logan, J. David and Wolesensky, Willian R. Mathematical methods in biology. Pure and Ap ...
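The bootstrap idea of sampling with replacement from the original sample can be sketched as follows. This is a minimal example assuming a percentile confidence interval at the 95% level; the function name `bootstrap` and its parameters are illustrative, and other interval constructions exist.

```python
import random
import statistics


def bootstrap(sample, estimator=statistics.mean, n_resamples=2000, seed=0):
    """Bootstrap standard error and 95% percentile interval of an estimator."""
    rng = random.Random(seed)
    n = len(sample)
    # Each replicate: draw n values with replacement, then re-apply the estimator
    reps = sorted(estimator(rng.choices(sample, k=n)) for _ in range(n_resamples))
    se = statistics.stdev(reps)
    lo = reps[int(0.025 * n_resamples)]
    hi = reps[int(0.975 * n_resamples) - 1]
    return se, (lo, hi)
```

Passing `estimator=statistics.median` gives the same summary for the median without any change to the resampling loop.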

Chaos, Solitons & Fractals

Elsevier is a Dutch academic publishing company specializing in scientific, technical, and medical content. Its products include journals such as ''The Lancet'', ''Cell'', the ScienceDirect collection of electronic journals, ''Trends'', the ''Current Opinion'' series, the online citation database Scopus, the SciVal tool for measuring research performance, the ClinicalKey search engine for clinicians, and the ClinicalPath evidence-based cancer care service. Elsevier's products and services include digital tools for data management, instruction, research analytics, and assessment. Elsevier is part of the RELX Group, known until 2015 as Reed Elsevier, a publicly traded company. According to RELX reports, in 2022 Elsevier published more than 600,000 articles annually in over 2,800 journals. As of 2018, its archives contained over 17 million documents and 40,000 e-books, with over one billion annual downloads. Researchers have criticized Elsevier for its high profit margins an ...

Fourier Transform

In mathematics, the Fourier transform (FT) is an integral transform that takes a function as input and outputs another function that describes the extent to which various frequencies are present in the original function. The output of the transform is a complex-valued function of frequency. The term ''Fourier transform'' refers to both this complex-valued function and the mathematical operation. When a distinction needs to be made, the output of the operation is sometimes called the frequency domain representation of the original function. The Fourier transform is analogous to decomposing the sound of a musical chord into the intensities of its constituent pitches. Functions that are localized in the time domain have Fourier transforms that are spread out across the frequency domain and vice versa, a phenomenon known as the uncertainty principle. The critical case for this principle is the Gaussian function, of substantial importance in probability theory and statist ...

Periodogram

In signal processing, a periodogram is an estimate of the spectral density of a signal. The term was coined by Arthur Schuster in 1898. Today, the periodogram is a component of more sophisticated methods (see spectral estimation). It is the most common tool for examining the amplitude vs frequency characteristics of FIR filters and window functions. FFT spectrum analyzers are also implemented as a time-sequence of periodograms. Definition: There are at least two different definitions in use today. One of them involves time-averaging, and one does not. Time-averaging is also the purview of other articles (Bartlett's method and Welch's method). This article is not about time-averaging. The definition of interest here is that the power spectral density of a continuous function, x(t), is the Fourier transform of its auto-correlation function (see Cross-correlation theorem, Spectral density, and Wiener–Khinchin theorem): \mathcal{F}\{x(t) \ast x^*(-t)\} = X(f) \cdot X^*(f) = \left| X(f) \right|^2, where \ast denotes convolution. Computa ...
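A discrete analogue of this definition can be checked numerically. The sketch below assumes a finite real sequence, the discrete Fourier transform in place of the continuous one, and a circular (periodic) autocorrelation; the function names are illustrative and the periodogram is left unnormalized.

```python
import cmath
import math


def dft(x):
    """Naive O(n^2) discrete Fourier transform."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def periodogram(x):
    """Unnormalized periodogram |X[k]|^2 for each DFT bin k."""
    return [abs(X) ** 2 for X in dft(x)]


def circular_autocorrelation(x):
    """r[m] = sum_t x[t] * x[(t - m) mod n] for a real sequence x."""
    n = len(x)
    return [sum(x[t] * x[(t - m) % n] for t in range(n)) for m in range(n)]
```

Under these assumptions, `dft(circular_autocorrelation(x))` agrees with `periodogram(x)` bin by bin up to rounding error, which is the discrete counterpart of the identity above.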

Autocorrelation Function

Autocorrelation, sometimes known as serial correlation in the discrete time case, measures the correlation of a signal with a delayed copy of itself. Essentially, it quantifies the similarity between observations of a random variable at different points in time. The analysis of autocorrelation is a mathematical tool for identifying repeating patterns or hidden periodicities within a signal obscured by noise. Autocorrelation is widely used in signal processing, time domain and time series analysis to understand the behavior of data over time. Different fields of study define autocorrelation differently, and not all of these definitions are equivalent. In some fields, the term is used interchangeably with autocovariance. Various time series models incorporate autocorrelation, such as unit root processes, trend-stationary processes, autoregressive processes, and moving average processes. Autocorrelation of stochastic processes: In statistics, the autocorrelation of a real or ...
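One of the several conventions mentioned above is the sample autocorrelation normalized by the lag-0 value, sketched here. The normalization (dividing every lag by the same lag-0 sum of squared deviations) and the name `sample_autocorrelation` are assumptions for this example; other fields normalize differently.

```python
def sample_autocorrelation(x, max_lag):
    """Sample autocorrelation at lags 0..max_lag, normalized so lag 0 equals 1."""
    n = len(x)
    mean = sum(x) / n
    dev = [v - mean for v in x]
    c0 = sum(d * d for d in dev)  # lag-0 sum of squared deviations
    return [sum(dev[t] * dev[t + k] for t in range(n - k)) / c0
            for k in range(max_lag + 1)]
```

For a signal that alternates between +1 and -1, the result alternates in sign from lag to lag, which is how a repeating pattern of period 2 shows up in the autocorrelation.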

Monte Carlo Method

Monte Carlo methods, or Monte Carlo experiments, are a broad class of computational algorithms that rely on repeated random sampling to obtain numerical results. The underlying concept is to use randomness to solve problems that might be deterministic in principle. The name comes from the Monte Carlo Casino in Monaco, where the primary developer of the method, mathematician Stanisław Ulam, was inspired by his uncle's gambling habits. Monte Carlo methods are mainly used in three distinct problem classes: optimization, numerical integration, and generating draws from a probability distribution. They can also be used to model phenomena with significant uncertainty in inputs, such as calculating the risk of a nuclear power plant failure. Monte Carlo methods are often implemented using computer simulations, and they can provide approximate solutions to problems that are otherwise intractable or too complex to analyze mathematically. Monte Carlo methods are widely used in va ...
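A standard textbook illustration of the numerical-integration use case is estimating π from random points in the unit square. The sketch below is one such example, chosen here for brevity; the function name `estimate_pi` is illustrative.

```python
import random


def estimate_pi(n_samples, seed=0):
    """Estimate pi as 4 times the fraction of random points inside the quarter circle."""
    rng = random.Random(seed)
    inside = 0
    for _ in range(n_samples):
        x, y = rng.random(), rng.random()  # uniform point in the unit square
        if x * x + y * y <= 1.0:
            inside += 1
    return 4.0 * inside / n_samples
```

The problem is deterministic in principle, yet the random estimate converges to the answer, with an error that shrinks roughly in proportion to one over the square root of the number of samples.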

Surrogate Data

Surrogate data, sometimes known as analogous data, usually refers to time series data that is produced using well-defined (linear) models like ARMA processes that reproduce various statistical properties like the autocorrelation structure of a measured data set. The resulting surrogate data can then, for example, be used for testing for non-linear structure in the empirical data; this is called surrogate data testing. Surrogate or analogous data also refers to data used to supplement available data from which a mathematical model is built. Under this definition, it may be generated (i.e., synthetic data) or transformed from another source. Uses: Surrogate data is used in environmental and laboratory settings, when study data from one source is used in estimation of characteristics of another source. For example, it has been used to model population trends in animal species. It can also be used to model biodiversity, as it would be difficult to gather actual data on all species in a ...

Linear Model

In statistics, the term linear model refers to any model which assumes linearity in the system. The most common occurrence is in connection with regression models and the term is often taken as synonymous with linear regression model. However, the term is also used in time series analysis with a different meaning. In each case, the designation "linear" is used to identify a subclass of models for which substantial reduction in the complexity of the related statistical theory is possible. Linear regression models: For the regression case, the statistical model is as follows. Given a (random) sample (Y_i, X_{i1}, \ldots, X_{ip}), \, i = 1, \ldots, n, the relation between the observations Y_i and the independent variables X_{ij} is formulated as Y_i = \beta_0 + \beta_1 \phi_1(X_{i1}) + \cdots + \beta_p \phi_p(X_{ip}) + \varepsilon_i \qquad i = 1, \ldots, n where \phi_1, \ldots, \phi_p may be nonlinear functions. In the above, the quantities \varepsilon_i are random variables representing err ...
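The point that the model stays linear in the coefficients even when the functions \phi_j are nonlinear can be seen in a small least-squares sketch. It assumes ordinary least squares via the normal equations, which is adequate for a small, well-conditioned example; the helper names `solve` and `fit_linear_model` are illustrative.

```python
import math


def solve(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[piv] = M[piv], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for j in range(c, n + 1):
                M[r][j] -= f * M[c][j]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        z[r] = (M[r][n] - sum(M[r][j] * z[j] for j in range(r + 1, n))) / M[r][r]
    return z


def fit_linear_model(X, y, phis):
    """Least-squares fit of y_i = b0 + sum_j b_j * phis[j](X[i][j]) + error."""
    # Design matrix: a column of ones, then each transformed predictor
    D = [[1.0] + [phi(v) for phi, v in zip(phis, row)] for row in X]
    p = len(D[0])
    AtA = [[sum(d[a] * d[b] for d in D) for b in range(p)] for a in range(p)]
    Aty = [sum(d[a] * yi for d, yi in zip(D, y)) for a in range(p)]
    return solve(AtA, Aty)  # [b0, b1, ..., bp]
```

For instance, with `phis = [math.log, lambda v: v * v]` the fitted relation is nonlinear in the two predictors, but the coefficients are still found by solving a linear system.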