
Stochastic Context-free Grammar

In theoretical linguistics and computational linguistics, probabilistic context-free grammars (PCFGs) extend context-free grammars, similar to how hidden Markov models extend regular grammars. Each production is assigned a probability. The probability of a derivation (parse) is the product of the probabilities of the productions used in that derivation. These probabilities can be viewed as parameters of the model, and for large problems it is convenient to learn these parameters via machine learning. A probabilistic grammar's validity is constrained by the context of its training dataset. PCFGs originated from grammar theory and have applications in areas as diverse as natural language processing, the study of the structure of RNA molecules, and the design of programming languages. Designing efficient PCFGs requires weighing scalability against generality. Issues such as grammar ambiguity must be resolved. The grammar design affects the accuracy of results. Gr ...
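The product rule for derivations can be illustrated with a minimal sketch. The toy grammar, its probabilities, and the names `RULES`, `derivation_probability`, and `derivation_log_probability` are assumptions made for illustration only; they do not come from the article.

```python
from math import exp, log, prod

# Hypothetical toy PCFG: each (lhs, rhs) production maps to its probability.
# Probabilities of productions sharing a left-hand side sum to 1.
RULES = {
    ("S", ("NP", "VP")): 1.0,
    ("NP", ("she",)): 0.6,
    ("NP", ("fish",)): 0.4,
    ("VP", ("V", "NP")): 0.7,
    ("VP", ("sleeps",)): 0.3,
    ("V", ("eats",)): 1.0,
}


def derivation_probability(productions):
    """Multiply the probabilities of the productions used in a derivation."""
    return prod(RULES[p] for p in productions)


def derivation_log_probability(productions):
    """Same quantity in log space, which avoids underflow on long derivations."""
    return sum(log(RULES[p]) for p in productions)


# One derivation of the sentence "she eats fish".
derivation = [
    ("S", ("NP", "VP")),
    ("NP", ("she",)),
    ("VP", ("V", "NP")),
    ("V", ("eats",)),
    ("NP", ("fish",)),
]

p = derivation_probability(derivation)
log_p = derivation_log_probability(derivation)
```

Here `p` is the product 1.0 × 0.6 × 0.7 × 1.0 × 0.4, and `exp(log_p)` recovers the same value up to floating-point rounding. Working in log space is a common design choice once derivations grow long.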

Theoretical Linguistics

Theoretical linguistics is a term in linguistics that, like the related term general linguistics, can be understood in different ways. Both can be taken as a reference to the theory of language, or the branch of linguistics that inquires into the nature of language and seeks to answer fundamental questions as to what language is, or what the common ground of all languages is. The goal of theoretical linguistics can also be the construction of a general theoretical framework for the description of language. Another use of the term depends on the organisation of linguistics into different sub-fields. The term 'theoretical linguistics' is commonly juxtaposed with applied linguistics. This perspective implies that the aspiring language professional, e.g. a student, must first learn the *theory*, i.e. properties of the linguistic system, or what Ferdinand de Saussure called *internal linguistics*. This is followed by *practice*, or studies in the applied field. The dichotomy is ...

Inside–outside Algorithm

For parsing algorithms in computer science, the inside–outside algorithm is a way of re-estimating production probabilities in a probabilistic context-free grammar. It was introduced by James K. Baker in 1979 as a generalization of the forward–backward algorithm for parameter estimation on hidden Markov models to stochastic context-free grammars. It is used to compute expectations, for example as part of the expectation–maximization algorithm (an unsupervised learning algorithm). Inside and outside probabilities: The inside probability \beta_j(p,q) is the total probability of generating words w_p \cdots w_q, given the root nonterminal N^j and a grammar G: :\beta_j(p,q) = P(w_{pq} \mid N^j_{pq}, G) The outside probability \alpha_j(p,q) is the total probability of beginning with the start symbol N^1 and generating the nonterminal N^j_{pq} and all the words outside w_p \cdots w_q, given a grammar G: :\alpha_j(p,q) = P(w_{1(p-1)}, N^j_{pq}, w_{(q+1)m} \mid G) where m is the length of the sentence. Computing inside probabilities: Base Case: \beta_j( ...
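The inside probabilities can be computed bottom-up over spans of increasing length. The sketch below assumes a grammar in Chomsky normal form and uses the standard textbook recursion (a lexical base case for single words, then a sum over binary rules and split points). The grammar, its probabilities, and the names `BINARY`, `LEXICAL`, and `inside_probabilities` are hypothetical. Indices are 0-based and inclusive here, whereas the text above uses 1-based positions.

```python
from collections import defaultdict

# Hypothetical grammar in Chomsky normal form.
BINARY = {  # (parent, left child, right child) -> rule probability
    ("S", "NP", "VP"): 1.0,
    ("VP", "V", "NP"): 1.0,
}
LEXICAL = {  # (parent, word) -> rule probability
    ("NP", "she"): 0.6,
    ("NP", "fish"): 0.4,
    ("V", "eats"): 1.0,
}


def inside_probabilities(words):
    """Return beta[(symbol, p, q)]: probability that symbol yields words[p..q]."""
    n = len(words)
    beta = defaultdict(float)

    # Base case: a nonterminal rewrites directly to a single word.
    for k, word in enumerate(words):
        for (parent, terminal), prob in LEXICAL.items():
            if terminal == word:
                beta[parent, k, k] += prob

    # Recursion: combine two adjacent sub-spans under a binary rule,
    # summing over every rule and every split point d.
    for span in range(2, n + 1):
        for p in range(n - span + 1):
            q = p + span - 1
            for (parent, left, right), prob in BINARY.items():
                for d in range(p, q):
                    beta[parent, p, q] += (
                        prob * beta[left, p, d] * beta[right, d + 1, q]
                    )
    return beta


beta = inside_probabilities(["she", "eats", "fish"])
sentence_probability = beta["S", 0, 2]
```

The inside probability of the start symbol over the whole sentence is the total probability of the sentence under the grammar, summed over all of its parses. In this toy grammar there is only one parse, so the sum has a single term.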

Probability Distribution

In probability theory and statistics, a probability distribution is a function that gives the probabilities of occurrence of possible events for an experiment. It is a mathematical description of a random phenomenon in terms of its sample space and the probabilities of events (subsets of the sample space). For instance, if X is used to denote the outcome of a coin toss ("the experiment"), then the probability distribution of X would take the value 0.5 (1 in 2 or 1/2) for X = heads, and 0.5 for X = tails (assuming that the coin is fair). More commonly, probability distributions are used to compare the relative occurrence of many different random values. Probability distributions can be defined in different ways and for discrete or for continuous variables. Distributions with special properties or for especially important applications are given specific names. Introduction: A prob ...

Probability

Probability is a branch of mathematics and statistics concerning events and numerical descriptions of how likely they are to occur. The probability of an event is a number between 0 and 1; the larger the probability, the more likely an event is to occur (Alan Stuart and Keith Ord, *Kendall's Advanced Theory of Statistics, Volume 1: Distribution Theory*, 6th ed., 2009; William Feller, *An Introduction to Probability Theory and Its Applications*, vol. 1, 3rd ed., 1968, Wiley). This number is often expressed as a percentage (%), ranging from 0% to 100%. A simple example is the tossing of a fair (unbiased) coin. Since the coin is fair, the two outcomes ("heads" and "tails") are equally probable; and since no other outcomes are possible, "heads" and "tails" each have probability 1/2 (which could also be written as 0.5 or 50%). These concepts have been given an axiomatic mathematical formaliza ...

Logarithm

In mathematics, the logarithm of a number is the exponent by which another fixed value, the base, must be raised to produce that number. For example, the logarithm of 1000 to base 10 is 3, because 1000 is 10 to the 3rd power: 1000 = 10^3 = 10 \times 10 \times 10. More generally, if x = b^y, then y is the logarithm of x to base b, written \log_b x, so \log_{10} 1000 = 3. As a single-variable function, the logarithm to base b is the inverse of exponentiation with base b. The logarithm base 10 is called the *decimal* or *common* logarithm and is commonly used in science and engineering. The *natural* logarithm has the number e \approx 2.718 as its base; its use is widespread in mathematics and physics because of its very simple derivative. The *binary* logarithm uses base 2 and is widely used in computer science, information theory, music theory, and photography. When the base is unambiguous from the context or irrelevant it is often omitted, and the logarithm is written \log x. Logarithms were introduced by John Napier in 1614 as a means of simplifying calculation ...

Parse Tree

A parse tree or parsing tree (also known as a derivation tree or concrete syntax tree) is an ordered, rooted tree that represents the syntactic structure of a string according to some context-free grammar. The term *parse tree* itself is used primarily in computational linguistics; in theoretical syntax, the term *syntax tree* is more common. Concrete syntax trees reflect the syntax of the input language, making them distinct from the abstract syntax trees used in computer programming. Unlike Reed-Kellogg sentence diagrams used for teaching grammar, parse trees do not use distinct symbol shapes for different types of constituents. Parse trees are usually constructed based on either the constituency relation of constituency grammars (phrase structure grammars) or the dependency relation of dependency grammars. Parse trees may be generated for sentences in natural languages (see natural language processing), as well as during processing of computer languages, such a ...

Historical Linguistics

Historical linguistics, also known as diachronic linguistics, is the scientific study of how languages change over time. It seeks to understand the nature and causes of linguistic change and to trace the evolution of languages. Historical linguistics involves several key areas of study, including the reconstruction of ancestral languages, the classification of languages into families (comparative linguistics), and the analysis of the cultural and social influences on language development. This field is grounded in the uniformitarian principle, which posits that the processes of language change observed today were also at work in the past, unless there is clear evidence to suggest otherwise. Historical linguists aim to describe and explain changes in individual languages, explore the history of speech communities, and study the origins and meanings of words (etymology). Development: Modern historical linguistics dates to the late 18th century, having originally grown o ...

Pāṇini

Pāṇini was a Sanskrit grammarian, logician, philologist, and revered scholar in ancient India during the mid-1st millennium BCE, dated variously by most scholars between the 6th–5th and 4th century BCE. The historical facts of his life are unknown, except for what can be inferred from his works and from legends recorded long after. His most notable work, the *Aṣṭādhyāyī*, is conventionally taken to mark the start of Classical Sanskrit. His work formally codified Classical Sanskrit as a refined and standardized language, making use of a technical metalanguage consisting of a syntax, morphology, and lexicon, organised according to a series of meta-rules. Since the exposure of European scholars to his *Aṣṭādhyāyī* in the nineteenth century, Pāṇini has been considered the "first descriptive linguist" (François & Ponsonnet 2013: 184) and even labelled as "the father of linguistics". His approach to grammar influenced such ...

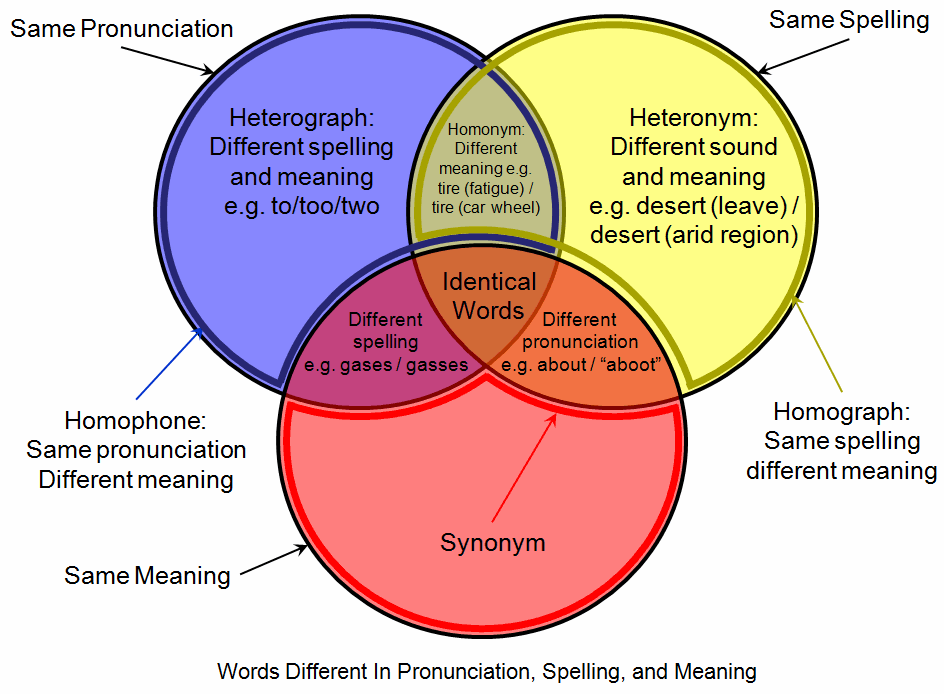

Homograph

A homograph (from the Greek *homós*, 'same', and *gráphō*, 'write') is a word that shares the same written form as another word but has a different meaning. However, some dictionaries insist that the words must also be pronounced differently, while the Oxford English Dictionary says that the words should also be of "different origin". In this vein, *The Oxford Guide to Practical Lexicography* lists various types of homographs, including those in which the words are discriminated by being in a different *word class*, such as *hit*, the verb *to strike*, and *hit*, the noun *a strike*. If, when spoken, the meanings may be distinguished by different pronunciations, the words are also heteronyms. Words with the same writing *and* pronunciation (i.e. words that are both homographs and homophones) are considered homonyms. However, in a broader sense the term "homonym" may be applied to words with the same writing *or* pronunciation. Homograph disambiguation is critically important in speech synthesis, natural ...

Ambiguous Grammar

In computer science, an ambiguous grammar is a context-free grammar for which there exists a string that can have more than one leftmost derivation or parse tree. Every non-empty context-free language admits an ambiguous grammar, obtained by introducing e.g. a duplicate rule. A language that only admits ambiguous grammars is called an inherently ambiguous language. Deterministic context-free grammars are always unambiguous, and are an important subclass of unambiguous grammars; there are non-deterministic unambiguous grammars, however. For computer programming languages, the reference grammar is often ambiguous, due to issues such as the dangling else problem. If present, these ambiguities are generally resolved by adding precedence rules or other context-sensitive parsing rules, so the overall phrase grammar is unambiguous. Some parsing algorithms (such as Earley or GL ...
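Ambiguity can be made concrete by counting parse trees. The sketch below uses a hypothetical grammar, E -> E '+' E | 'a', chosen only because it is a familiar ambiguous example; the function name `count_parses` is likewise an assumption. A string with more than one parse tree witnesses that the grammar is ambiguous.

```python
from functools import lru_cache


def count_parses(tokens):
    """Count parse trees of `tokens` under the grammar E -> E '+' E | 'a'."""
    tokens = tuple(tokens)

    @lru_cache(maxsize=None)
    def count(i, j):
        # Number of ways E can derive tokens[i:j].
        if j - i == 1:
            return 1 if tokens[i] == "a" else 0
        total = 0
        # Try every '+' strictly inside the span as the top-level operator.
        for k in range(i + 1, j - 1):
            if tokens[k] == "+":
                total += count(i, k) * count(k + 1, j)
        return total

    return count(0, len(tokens))


two_operands = count_parses("a+a")      # a single tree
three_operands = count_parses("a+a+a")  # (a+a)+a and a+(a+a)
```

With two operands there is one tree, and with three there are two, one for each grouping. Adding a precedence or associativity rule, as the passage above describes, is what selects a single tree in practice.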

Backus–Naur Form

In computer science, Backus–Naur form (BNF), also known as Backus normal form, is a notation system for defining the syntax of programming languages and other formal languages, developed by John Backus and Peter Naur. It is a metasyntax for context-free grammars, providing a precise way to outline the rules of a language's structure. It has been widely used in official specifications, manuals, and textbooks on programming language theory, as well as to describe document formats, instruction sets, and communication protocols. Over time, variations such as extended Backus–Naur form (EBNF) and augmented Backus–Naur form (ABNF) have emerged, building on the original framework with added features. Structure: BNF specifications outline how symbols are combined to form syntactically valid sequences. Each BNF consists of t ...

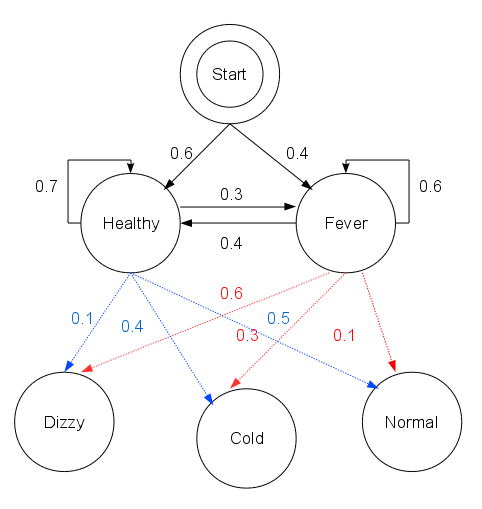

Viterbi Algorithm

The Viterbi algorithm is a dynamic programming algorithm for obtaining the maximum a posteriori probability estimate of the most likely sequence of hidden states (called the Viterbi path) that results in a sequence of observed events. This is done especially in the context of Markov information sources and hidden Markov models (HMM). The algorithm has found universal application in decoding the convolutional codes used in both CDMA and GSM digital cellular, dial-up modems, satellite, deep-space communications, and 802.11 wireless LANs. It is now also commonly used in speech recognition, speech synthesis, diarization, keyword spotting, computational linguistics, and bioinformatics. For example, in speech-to-text (speech recognition), the acoustic signal is treated as the observed sequence of events, and a string of text is considered to be the "hidden cause" of the acoustic signal. The Viterbi algorithm finds the most likely string of text given the acoustic signal. His ...
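The dynamic programme can be sketched for a small hidden Markov model. The two-state health model and all of its probabilities are illustrative assumptions, as are the names `viterbi`, `start_p`, `trans_p`, and `emit_p`. The sketch assumes a non-empty observation sequence and multiplies raw probabilities; a practical implementation would usually work in log space.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return (most likely state path, its joint probability)."""
    # best[t][s] = (probability of the best path ending in s at time t,
    #               predecessor state on that path)
    first = observations[0]
    best = [{s: (start_p[s] * emit_p[s][first], None) for s in states}]

    for obs in observations[1:]:
        prev = best[-1]
        column = {}
        for s in states:
            # Keep only the best way of reaching state s at this step.
            column[s] = max(
                (prev[r][0] * trans_p[r][s] * emit_p[s][obs], r)
                for r in states
            )
        best.append(column)

    # Trace back from the most probable final state.
    last = max(states, key=lambda s: best[-1][s][0])
    path = [last]
    for column in reversed(best[1:]):
        path.append(column[path[-1]][1])
    path.reverse()
    return path, best[-1][last][0]


# Hypothetical model: hidden health state, observed symptom.
states = ("Healthy", "Fever")
start_p = {"Healthy": 0.6, "Fever": 0.4}
trans_p = {
    "Healthy": {"Healthy": 0.7, "Fever": 0.3},
    "Fever": {"Healthy": 0.4, "Fever": 0.6},
}
emit_p = {
    "Healthy": {"normal": 0.5, "cold": 0.4, "dizzy": 0.1},
    "Fever": {"normal": 0.1, "cold": 0.3, "dizzy": 0.6},
}

path, prob = viterbi(("normal", "cold", "dizzy"), states, start_p, trans_p, emit_p)
```

For this model the recovered path is Healthy, Healthy, Fever. Each column stores only the best predecessor per state, which is what keeps the cost linear in the sequence length rather than exponential.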