
Sparse Autoencoder

An autoencoder is a type of artificial neural network used to learn efficient codings of unlabeled data (unsupervised learning). An autoencoder learns two functions: an encoding function that transforms the input data, and a decoding function that recreates the input data from the encoded representation. The autoencoder learns an efficient representation (encoding) for a set of data, typically for dimensionality reduction, to generate lower-dimensional embeddings for subsequent use by other machine learning algorithms. Variants exist which aim to make the learned representations assume useful properties. Examples are regularized autoencoders (sparse, denoising and contractive autoencoders), which are effective in learning representations for subsequent classification tasks, and variational autoencoders, which can be used as generative models. Autoencoders are applied to many problems, including facial recognition, feature detection, anomaly detection, and le ...

Multilayer Perceptron

In deep learning, a multilayer perceptron (MLP) is a name for a modern feedforward neural network consisting of fully connected neurons with nonlinear activation functions, organized in layers, notable for being able to distinguish data that is not linearly separable (Cybenko, G. 1989. "Approximation by superpositions of a sigmoidal function." Mathematics of Control, Signals, and Systems, 2(4), 303–314). Modern neural networks are trained using backpropagation (Rumelhart, David E., Geoffrey E. Hinton, and R. J. Williams. "Learning Internal Representations by Error Propagation." In David E. Rumelhart, James L. McClelland, and the PDP research group (editors), Parallel distributed processing: Explorations in the microstructure of cognition, Volume 1: Foundation. MIT Press, 1986) and are colloquially referred to as "vanilla" networks. MLPs grew out of an effort to improve single-layer perceptrons, which could only be applied to linearly separable data. A perceptron traditionally used ...
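To make the point about linear separability concrete, here is a minimal sketch of a two-layer network that computes XOR, a function no single-layer perceptron can represent. The weights are chosen by hand for illustration rather than learned by backpropagation, and the names `relu` and `xor_mlp` are hypothetical.

```python
def relu(z):
    """Nonlinear activation: pass positive values, clamp negatives to zero."""
    return max(0.0, z)


def xor_mlp(x1, x2):
    """Tiny fully connected network with hand-picked weights (an assumption).

    Hidden layer: two ReLU units, each connected to both inputs.
    Output layer: one linear unit connected to both hidden units.
    """
    h1 = relu(1.0 * x1 + 1.0 * x2 + 0.0)
    h2 = relu(1.0 * x1 + 1.0 * x2 - 1.0)
    return 1.0 * h1 - 2.0 * h2


# Evaluate the network on all four binary inputs.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_mlp(a, b))
```

The hidden layer is what makes this possible: without the nonlinearity, the two layers would collapse into a single linear map.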

Sparse Coding

Neural coding (or neural representation) is a neuroscience field concerned with characterising the hypothetical relationship between the stimulus and the neuronal responses, and the relationship among the electrical activities of the neurons in the ensemble. Based on the theory that sensory and other information is represented in the brain by networks of neurons, it is believed that neurons can encode both digital and analog information. Overview: Neurons have an ability uncommon among the cells of the body to propagate signals rapidly over large distances by generating characteristic electrical pulses called action potentials: voltage spikes that can travel down axons. Sensory neurons change their activities by firing sequences of action potentials in various temporal patterns, with the presence of external sensory stimuli, such as light, sound, taste, sm ...

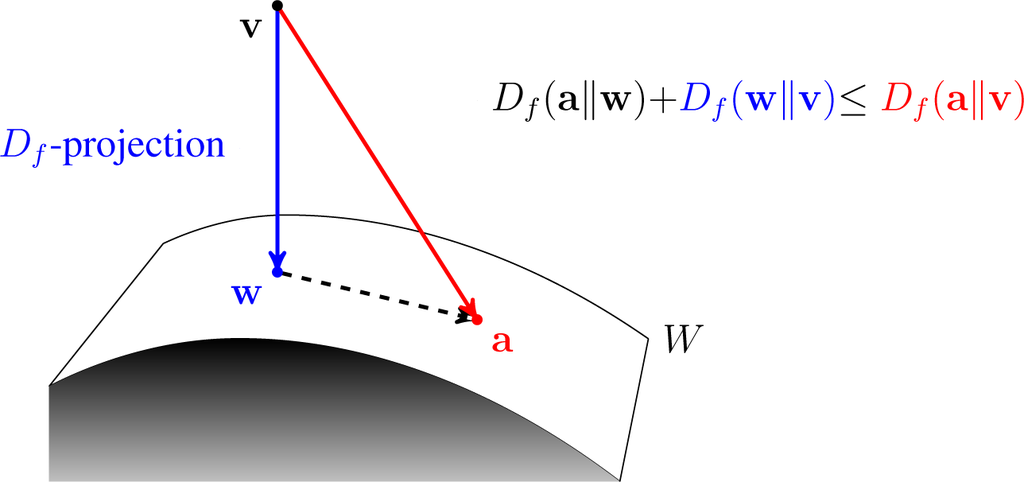

Variational Bayesian Methods

Variational Bayesian methods are a family of techniques for approximating intractable integrals arising in Bayesian inference and machine learning. They are typically used in complex statistical models consisting of observed variables (usually termed "data") as well as unknown parameters and latent variables, with various sorts of relationships among the three types of random variables, as might be described by a graphical model. As is typical in Bayesian inference, the parameters and latent variables are grouped together as "unobserved variables". Variational Bayesian methods are primarily used for two purposes:

1. To provide an analytical approximation to the posterior probability of the unobserved variables, in order to do statistical inference over these variables.
2. To derive a lower bound for the marginal likelihood (sometimes called the evidence) of the observed data (i.e. the marginal probability of the data given the model, with marginalization performed over unobserv ...

VAE Basic

Vae or VAE may refer to:

* Vae (name)
* VAE Nortrak North America, a manufacturer of railroad track components
* Validation des Acquis de l'Expérience, a procedure of granting degrees based on work experience in France
* Variational autoencoder, an artificial neural network architecture introduced by Diederik P. Kingma and Max Welling. It is part of the families of probabilistic graphical models and variational Bayesian metho ...

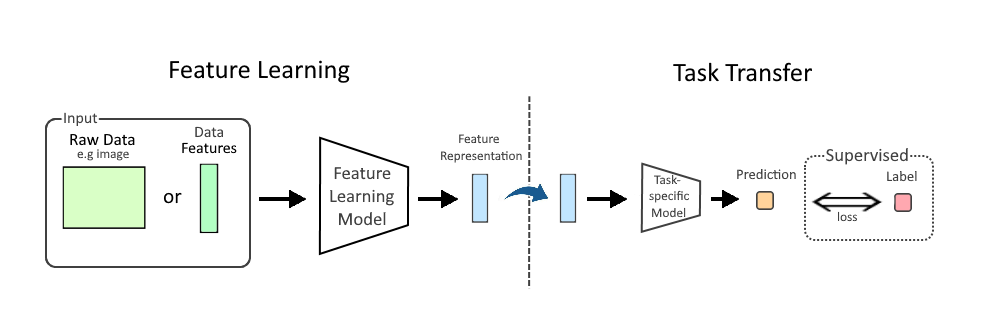

Feature Learning

In machine learning (ML), feature learning or representation learning is a set of techniques that allow a system to automatically discover the representations needed for feature detection or classification from raw data. This replaces manual feature engineering and allows a machine to both learn the features and use them to perform a specific task. Feature learning is motivated by the fact that ML tasks such as classification often require input that is mathematically and computationally convenient to process. However, real-world data, such as image, video, and sensor data, have not yielded to attempts to algorithmically define specific features. An alternative is to discover such features or representations through examination, without relying on explicit algorithms. Feature learning can be either supervised, unsupervised, or self-supervised:

* In supervised feature learning, features are learned using labeled input data. Labeled data includes input-label pairs where the inp ...

Identity Function

(Figure: graph of the identity function on the real numbers.)

In mathematics, an identity function, also called an identity relation, identity map or identity transformation, is a function that always returns the value that was used as its argument, unchanged. That is, when $f$ is the identity function, the equality $f(x) = x$ is true for all values of $x$ to which $f$ can be applied. Definition: Formally, if $X$ is a set, the identity function $f$ on $X$ is defined to be a function with $X$ as its domain and codomain, satisfying $f(x) = x$ for all elements $x$ in $X$. In other words, the function value $f(x)$ in the codomain $X$ is always the same as the input element $x$ in the domain $X$. The identity function on $X$ is clearly an injective function as well as a surjective function (its codomain is also its range), so it is bijective. The identity function $f$ on $X$ is often denoted by $\mathrm{id}_X$. In set theory, where a function is defined as a particular kind of binary relation, the identity function is given by the identity relation, or diagonal of $X$. Algebraic propert ...

Dimensionality Reduction

Dimensionality reduction, or dimension reduction, is the transformation of data from a high-dimensional space into a low-dimensional space so that the low-dimensional representation retains some meaningful properties of the original data, ideally close to its intrinsic dimension. Working in high-dimensional spaces can be undesirable for many reasons; raw data are often sparse as a consequence of the curse of dimensionality, and analyzing the data is usually computationally intractable. Dimensionality reduction is common in fields that deal with large numbers of observations and/or large numbers of variables, such as signal processing, speech recognition, neuroinformatics, and bioinformatics. Methods are commonly divided into linear and nonlinear approaches. Linear approaches can be further divided into feature selection and feature extraction. Dimensionality reduction can be used for noise reduction, data visualization, cluster analysis, or as an intermediate step to facilitat ...

Data Compression

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding: encoding is done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, for error detection and correction or line coding, the means for mapping data onto a sig ...
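As a small illustration of the lossless case, the sketch below uses Python's standard `zlib` module as the encoder and decoder. The sample payload is an assumption chosen to be highly redundant; real data will compress less dramatically.

```python
import zlib

# A deliberately redundant payload (an illustrative assumption).
data = b"abab" * 1000

# Encoder: remove statistical redundancy.
compressed = zlib.compress(data)

# Decoder: reverse the process and recover the original bytes.
restored = zlib.decompress(compressed)

print(len(data), "bytes before,", len(compressed), "bytes after")
print("round trip exact:", restored == data)
```

Because the round trip reproduces the input byte for byte, no information is lost; a lossy codec would not satisfy that equality check.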

Least Squares

The method of least squares is a mathematical optimization technique that aims to determine the best fit function by minimizing the sum of the squares of the differences between the observed values and the predicted values of the model. The method is widely used in areas such as regression analysis, curve fitting and data modeling. The least squares method can be categorized into linear and nonlinear forms, depending on the relationship between the model parameters and the observed data. The method was first proposed by Adrien-Marie Legendre in 1805 and further developed by Carl Friedrich Gauss. History (founding): The method of least squares grew out of the fields of astronomy and geodesy, as scientists and mathematicians sought to provide solutions to the challenges of navigating the Earth's oceans during the Age of Discovery. The accurate description of the behavior of celestial bodies was the key to enabling ships to sail in open seas, where sailors could no longer rely on la ...
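The minimization described above has a closed-form solution in the simplest linear case, a straight-line fit. The following is a minimal sketch under that assumption; the function names `fit_line` and `sum_squared_residuals` and the sample points are hypothetical.

```python
def fit_line(xs, ys):
    """Return (slope, intercept) minimizing the sum of squared residuals
    for the model y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def sum_squared_residuals(xs, ys, slope, intercept):
    """Objective value: squared differences between observed and predicted."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))


# Sample observations lying exactly on y = 2x + 1 (illustrative data).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
slope, intercept = fit_line(xs, ys)
print(slope, intercept, sum_squared_residuals(xs, ys, slope, intercept))
```

Any other choice of slope or intercept yields a larger sum of squared residuals on the same data, which is what makes this the best-fit line in the least-squares sense.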

Norm (mathematics)

In mathematics, a norm is a function from a real or complex vector space to the non-negative real numbers that behaves in certain ways like the distance from the origin: it commutes with scaling, obeys a form of the triangle inequality, and zero is only at the origin. In particular, the Euclidean distance in a Euclidean space is defined by a norm on the associated Euclidean vector space, called the Euclidean norm, the 2-norm, or, sometimes, the magnitude or length of the vector. This norm can be defined as the square root of the inner product of a vector with itself. A seminorm satisfies the first two properties of a norm but may be zero for vectors other than the origin. A vector space with a specified norm is called a normed vector space. In a similar manner, a vector space with a seminorm is called a seminormed vector space. The term pseudonorm has been used for several related meaning ...
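The three defining properties can be spot-checked numerically for the Euclidean norm. This is a sketch on a few sample vectors, not a proof; the helper names `dot` and `euclidean_norm` and the test vectors are assumptions made for illustration.

```python
import math


def dot(u, v):
    """Standard inner product of two real vectors of equal length."""
    return sum(a * b for a, b in zip(u, v))


def euclidean_norm(v):
    """Square root of the inner product of a vector with itself."""
    return math.sqrt(dot(v, v))


u = [3.0, 4.0]
w = [-1.0, 2.0]
c = -2.5

# Scaling: ||c * u|| equals |c| * ||u||.
scaled = euclidean_norm([c * a for a in u])
print(scaled, abs(c) * euclidean_norm(u))

# Triangle inequality: ||u + w|| <= ||u|| + ||w||.
summed = euclidean_norm([a + b for a, b in zip(u, w)])
print(summed, euclidean_norm(u) + euclidean_norm(w))

# Zero only at the origin.
print(euclidean_norm([0.0, 0.0]))
```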

Dirac Measure

In mathematics, a Dirac measure assigns a size to a set based solely on whether it contains a fixed element $x$ or not. It is one way of formalizing the idea of the Dirac delta function, an important tool in physics and other technical fields. Definition: A Dirac measure is a measure $\delta_x$ on a set $X$ (with any $\sigma$-algebra of subsets of $X$) defined for a given $x \in X$ and any (measurable) set $A \subseteq X$ by

$$\delta_x(A) = 1_A(x) = \begin{cases} 0, & x \notin A; \\ 1, & x \in A, \end{cases}$$

where $1_A$ is the indicator function of $A$. The Dirac measure is a probability measure, and in terms of probability it represents the almost sure outcome $x$ in the sample space $X$. We can also say that the measure is a single atom at $x$; however, treating the Dirac measure as an atomic measure is not correct when we consider the sequential definition of Dirac delta, as the limit of a delta sequence. The Dirac measures are the extreme points of the convex set of probability measures on $X$. The name is a back-formation from the Dirac delta fun ...
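On a finite set the definition reduces to a membership test, which the following minimal sketch makes explicit. Python sets stand in for measurable sets here, which is a simplifying assumption, and the name `dirac_measure` is hypothetical.

```python
def dirac_measure(x):
    """Return the Dirac measure concentrated at the fixed element x.

    The returned function maps a set A to 1 if x is in A and to 0 otherwise,
    i.e. it evaluates the indicator function of A at x.
    """
    def delta(A):
        return 1 if x in A else 0
    return delta


delta_2 = dirac_measure(2)
sample_space = {1, 2, 3, 4}

print(delta_2({1, 2, 3}))    # the set contains 2
print(delta_2({4}))          # the set does not contain 2
print(delta_2(sample_space)) # total mass of the whole space
```

The total mass of the sample space is 1, consistent with the Dirac measure being a probability measure.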