
Slipstream (Computer Science)

A slipstream processor is an architecture designed to reduce the length of a running program by removing its non-essential instructions. It is a form of speculative computing. Non-essential instructions include those whose results are never written to memory and compare operations that always return true. Also, because statistically most branch instructions are taken, it makes sense to assume that this will always be the case. Because of the speculation involved, slipstream processors are generally described as having two streams executing in parallel. One is the optimized, faster A-stream (advanced stream), which executes the reduced code; the other is the slower R-stream (redundant stream), which runs behind the A-stream and executes the full code. The R-stream runs faster than it would as a single stream because the A-stream prefetches data, effectively hiding memory latency, and because the A-stream assists with branch prediction. The two streams both complete faster than a ...
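The A-stream/R-stream split described above can be illustrated with a toy software model. This is only a sketch under stated assumptions, not how slipstream hardware is built: the instruction format, the `run` helper, and the hand-marked `essential` set are all hypothetical names introduced for illustration.

```python
def run(program, initial_regs):
    """Execute a list of (dest, fn) instructions against a copy of the registers."""
    regs = dict(initial_regs)
    for dest, fn in program:
        regs[dest] = fn(regs)
    return regs

# Full program, as the R-stream would execute it.
full_program = [
    ("a", lambda r: r["x"] + 1),
    ("tmp", lambda r: r["x"] * 99),  # result is never used: non-essential
    ("b", lambda r: r["a"] * 2),
]

# Assumption: the non-essential instruction has already been identified,
# so the set of essential destinations is simply given here.
essential = {"a", "b"}
reduced_program = [ins for ins in full_program if ins[0] in essential]

a_stream = run(reduced_program, {"x": 3})  # shorter, speculative run
r_stream = run(full_program, {"x": 3})     # full run that checks the A-stream

# The full run confirms that the reduced run produced the same essential values.
streams_agree = all(a_stream[k] == r_stream[k] for k in essential)
print(a_stream["b"], streams_agree)  # prints "8 True"
```

In this model the reduced program is one instruction shorter yet produces the same essential results, which is the property the full stream is there to check.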

Speculative Execution

Speculative execution is an optimization technique where a computer system performs some task that may not be needed. Work is done before it is known whether it is actually needed, so as to prevent a delay that would have to be incurred by doing the work after it is known that it is needed. If it turns out the work was not needed after all, most changes made by the work are reverted and the results are ignored. The objective is to provide more concurrency if extra resources are available. This approach is employed in a variety of areas, including branch prediction in pipelined processors, value prediction for exploiting value locality, prefetching memory and files, and optimistic concurrency control in database systems.
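As a rough software analogy of the idea, the sketch below starts both possible outcomes of a decision before the condition is known, then keeps one result and ignores the other. The function name `speculate` and its parameters are hypothetical; the sketch assumes the speculated tasks have no side effects that would need to be reverted.

```python
from concurrent.futures import ThreadPoolExecutor

def speculate(condition_fn, if_true, if_false):
    """Evaluate both branches while the condition is still being computed."""
    with ThreadPoolExecutor(max_workers=3) as pool:
        cond = pool.submit(condition_fn)
        true_work = pool.submit(if_true)    # may turn out to be unneeded
        false_work = pool.submit(if_false)  # may turn out to be unneeded
        # Keep the result that matches the condition; the other is ignored.
        return true_work.result() if cond.result() else false_work.result()

result = speculate(lambda: 2 + 2 == 4, lambda: "fast path", lambda: "slow path")
print(result)  # prints "fast path"
```

The extra work only pays off when spare workers are available, which matches the stated objective of providing more concurrency when extra resources exist.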

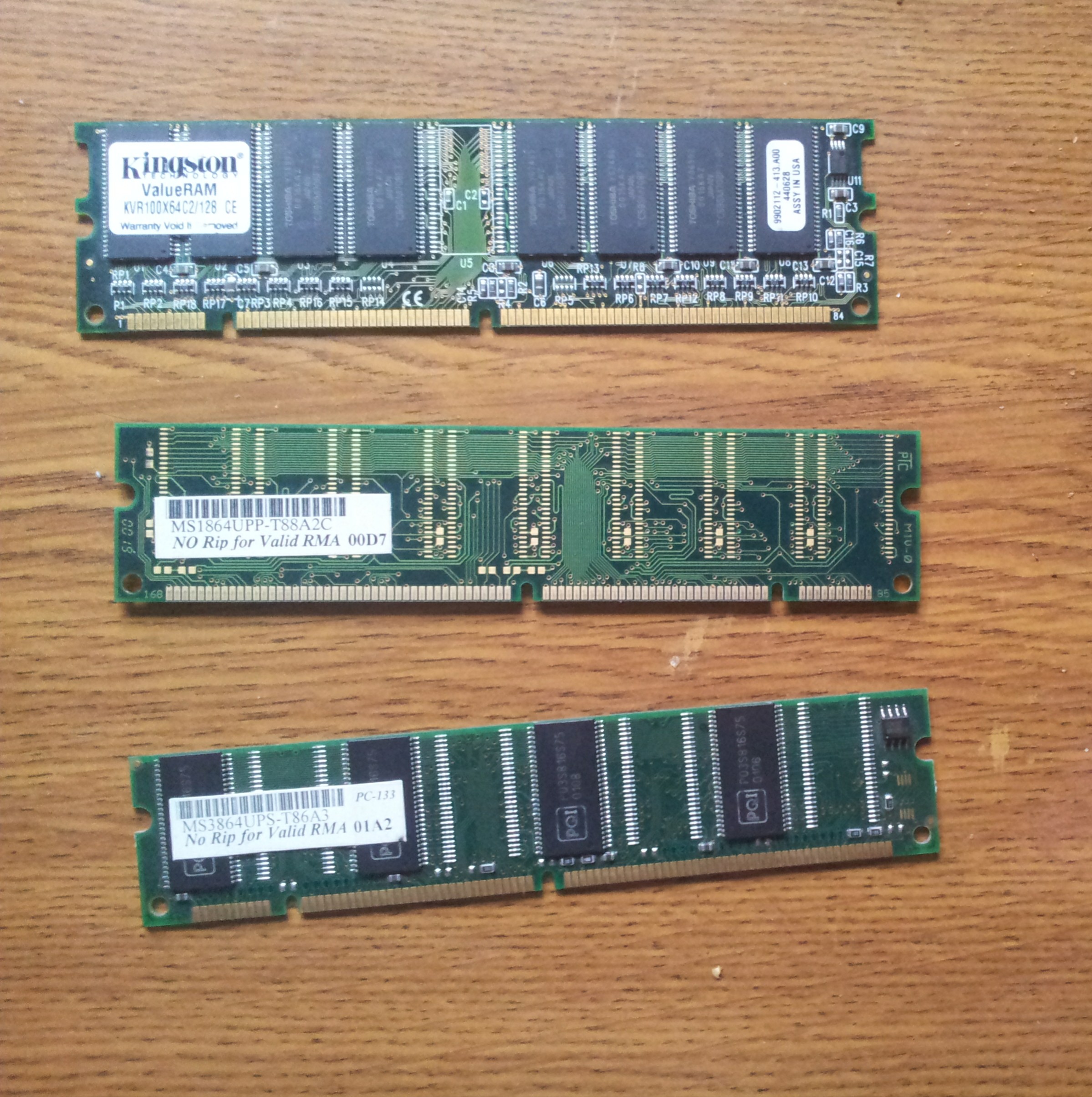

Prefetch Buffer

Synchronous dynamic random-access memory (synchronous dynamic RAM or SDRAM) is any DRAM where the operation of its external pin interface is coordinated by an externally supplied clock signal. DRAM integrated circuits (ICs) produced from the early 1970s to early 1990s used an asynchronous interface, in which input control signals have a direct effect on internal functions only delayed by the trip across its semiconductor pathways. SDRAM has a synchronous interface, whereby changes on control inputs are recognised after a rising edge of its clock input. In SDRAM families standardized by JEDEC, the clock signal controls the stepping of an internal finite-state machine that responds to incoming commands. These commands can be pipelined to improve performance, with previously started operations completing while new commands are received. The memory is divided into several equally sized but independent sections called banks, allowing the device to operate on a memory acc ...

Branch Prediction

In computer architecture, a branch predictor is a digital circuit that tries to guess which way a branch (e.g., an if–then–else structure) will go before this is known definitively. The purpose of the branch predictor is to improve the flow in the instruction pipeline. Branch predictors play a critical role in achieving high performance in many modern pipelined microprocessor architectures such as x86. Two-way branching is usually implemented with a conditional jump instruction. A conditional jump can either be "taken" and jump to a different place in program memory, or it can be "not taken" and continue execution immediately after the conditional jump. It is not known for certain whether a conditional jump will be taken or not taken until the condition has been calculated and the conditional jump has passed the execution stage in the instruction pipeline (see fig. 1). Without branch prediction, the processor would have to wait until the conditional jump instruction has ...
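To make the guessing concrete, here is a minimal sketch of one common textbook scheme, a two-bit saturating counter. The excerpt above does not name any particular scheme, so this choice is an assumption, and the class and function names are hypothetical.

```python
class TwoBitPredictor:
    """Two-bit saturating counter: states 0-1 predict not taken, 2-3 predict taken."""

    def __init__(self):
        self.state = 2  # assumption: start in the "weakly taken" state

    def predict(self):
        return self.state >= 2

    def update(self, taken):
        # Move one step toward the observed outcome, saturating at 0 and 3.
        if taken:
            self.state = min(3, self.state + 1)
        else:
            self.state = max(0, self.state - 1)

def accuracy(outcomes):
    """Fraction of branch outcomes the predictor guesses correctly."""
    predictor = TwoBitPredictor()
    correct = 0
    for taken in outcomes:
        if predictor.predict() == taken:
            correct += 1
        predictor.update(taken)
    return correct / len(outcomes)

# A loop branch taken nine times and then not taken once on loop exit.
print(accuracy([True] * 9 + [False]))  # prints 0.9
```

Only the final loop-exit branch is mispredicted here, and because the counter saturates, a single surprise does not flip the prediction for the next run of the loop.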

Cache Miss

In computing, a cache is a hardware or software component that stores data so that future requests for that data can be served faster; the data stored in a cache might be the result of an earlier computation or a copy of data stored elsewhere. A cache hit occurs when the requested data can be found in a cache, while a cache miss occurs when it cannot. Cache hits are served by reading data from the cache, which is faster than recomputing a result or reading from a slower data store; thus, the more requests that can be served from the cache, the faster the system performs. To be cost-effective and to enable efficient use of data, caches must be relatively small. Nevertheless, caches have proven themselves in many areas of computing, because typical computer applications access data with a high degree of locality of reference. Such access patterns exhibit temporal locality, where data is requested that has been recently requested already, and spatial locality, where ...
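The hit and miss behaviour can be sketched with a small software cache. The least-recently-used eviction policy, the `LRUCache` class, and the `backing_store` callable are assumptions made for illustration; the excerpt above does not prescribe a replacement policy.

```python
from collections import OrderedDict

class LRUCache:
    """Small cache that counts hits and misses and evicts the least recently used entry."""

    def __init__(self, capacity, backing_store):
        self.capacity = capacity
        self.backing_store = backing_store  # slower source consulted on a miss
        self.entries = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self.entries:
            self.hits += 1
            self.entries.move_to_end(key)  # mark as most recently used
            return self.entries[key]
        self.misses += 1
        value = self.backing_store(key)
        self.entries[key] = value
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # drop the least recently used entry
        return value

cache = LRUCache(capacity=2, backing_store=lambda k: k * k)
values = [cache.get(k) for k in [1, 2, 1, 3, 2]]
print(values, cache.hits, cache.misses)  # prints "[1, 4, 1, 9, 4] 1 4"
```

The repeated request for key 1 is a hit because of temporal locality, while key 2 misses the second time because the small capacity forced it out.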