
Remote Shell

The remote shell (rsh) is a command-line computer program that can execute shell commands as another user, and on another computer across a computer network. The remote system to which rsh connects runs the rsh daemon (rshd). The daemon typically uses the well-known Transmission Control Protocol (TCP) port number 514. Rsh originated in the BSD Unix operating system, along with rcp, as part of the rlogin package on 4.2BSD in 1983. It has since been ported to other operating systems. The rsh command has the same name as another common UNIX utility, the restricted shell, which first appeared in PWB/UNIX; in System V Release 4, the restricted shell is often located at /usr/bin/rsh. Like the other Berkeley r-commands that involve user authentication, the rsh protocol is not secure for network use, because it sends unencrypted information over the network, among other reasons. Some implementations also authenticate by sending unencrypted pass ...
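
The "unencrypted information" point can be made concrete with a minimal sketch. It assumes the handshake layout described in the BSD rshd(8) manual page (four NUL-terminated ASCII fields: an optional stderr port, the client-side user name, the server-side user name, and the command). The helper name `build_rsh_request` and the sample user names are hypothetical, and nothing is sent over the network; the code only assembles the bytes a client would write to the socket.

```python
def build_rsh_request(local_user: str, remote_user: str, command: str,
                      stderr_port: int = 0) -> bytes:
    """Assemble the opening bytes of an rsh session (illustrative only).

    Assumed layout, per the BSD rshd(8) manual page: four NUL-terminated
    ASCII fields. An empty first field means no separate stderr channel.
    """
    port_field = str(stderr_port) if stderr_port else ""
    fields = [port_field, local_user, remote_user, command]
    # Every field is plain ASCII followed by a NUL byte; nothing is encrypted.
    return b"".join(field.encode("ascii") + b"\0" for field in fields)


request = build_rsh_request("alice", "bob", "uname -a")
print(request)
```

Anyone able to observe the connection would see the user names and the command exactly as typed, which is the weakness the summary above refers to.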

C (programming Language)

C (pronounced like the letter c) is a general-purpose programming language. It was created in the 1970s by Dennis Ritchie and remains very widely used and influential. By design, C's features cleanly reflect the capabilities of the targeted CPUs. It has found lasting use in operating systems code (especially in kernels), device drivers, and protocol stacks, but its use in application software has been decreasing. C is commonly used on computer architectures that range from the largest supercomputers to the smallest microcontrollers and embedded systems. A successor to the programming language B, C was originally developed at Bell Labs by Ritchie between 1972 and 1973 to construct utilities running on Unix. It was applied to re-implementing the kernel of the Unix operating system. During the 1980s, C gradually gained popularity. It has become one of the most widely used programming langu ...

PWB/UNIX

The Programmer's Workbench (PWB/UNIX) was an early, now discontinued, version of the Unix operating system that had been created in the Bell Labs Computer Science Research Group of AT&T. Its stated goal was to provide a time-sharing working environment for large groups of programmers writing software for larger batch processing computers. Prior to 1973, Unix development at AT&T was a project of a small group of researchers in Department 1127 of Bell Labs. As the usefulness of Unix in other departments of Bell Labs was evident, the company decided to develop a version of Unix tailored to support programmers in production work, not just research. The Programmer's Workbench was started in 1973 (John R. Mashey (2004), "Languages, Levels, Libraries, and Longevity", ACM Queue 2 (9)) by Evan Ivie and Rudd Canaday to support a computer center for a 1000-employee Bell Labs division, which would be the largest Unix site for several years. PWB/UNIX was to provide tools for teams of programmers ...

Internet Protocols

The Internet protocol suite, commonly known as TCP/IP, is a framework for organizing the communication protocols used in the Internet and similar computer networks according to functional criteria. The foundational protocols in the suite are the Transmission Control Protocol (TCP), the User Datagram Protocol (UDP), and the Internet Protocol (IP). Early versions of this networking model were known as the Department of Defense (DoD) model because the research and development were funded by the United States Department of Defense through DARPA. The Internet protocol suite provides end-to-end data communication specifying how data should be packetized, addressed, transmitted, routed, and received. This functionality is organized into four abstraction layers, which classify all related protocols according to each protocol's scope of networking. An implementation of the layers for a particular application forms a protocol stack. From lowest to highest, the laye ...

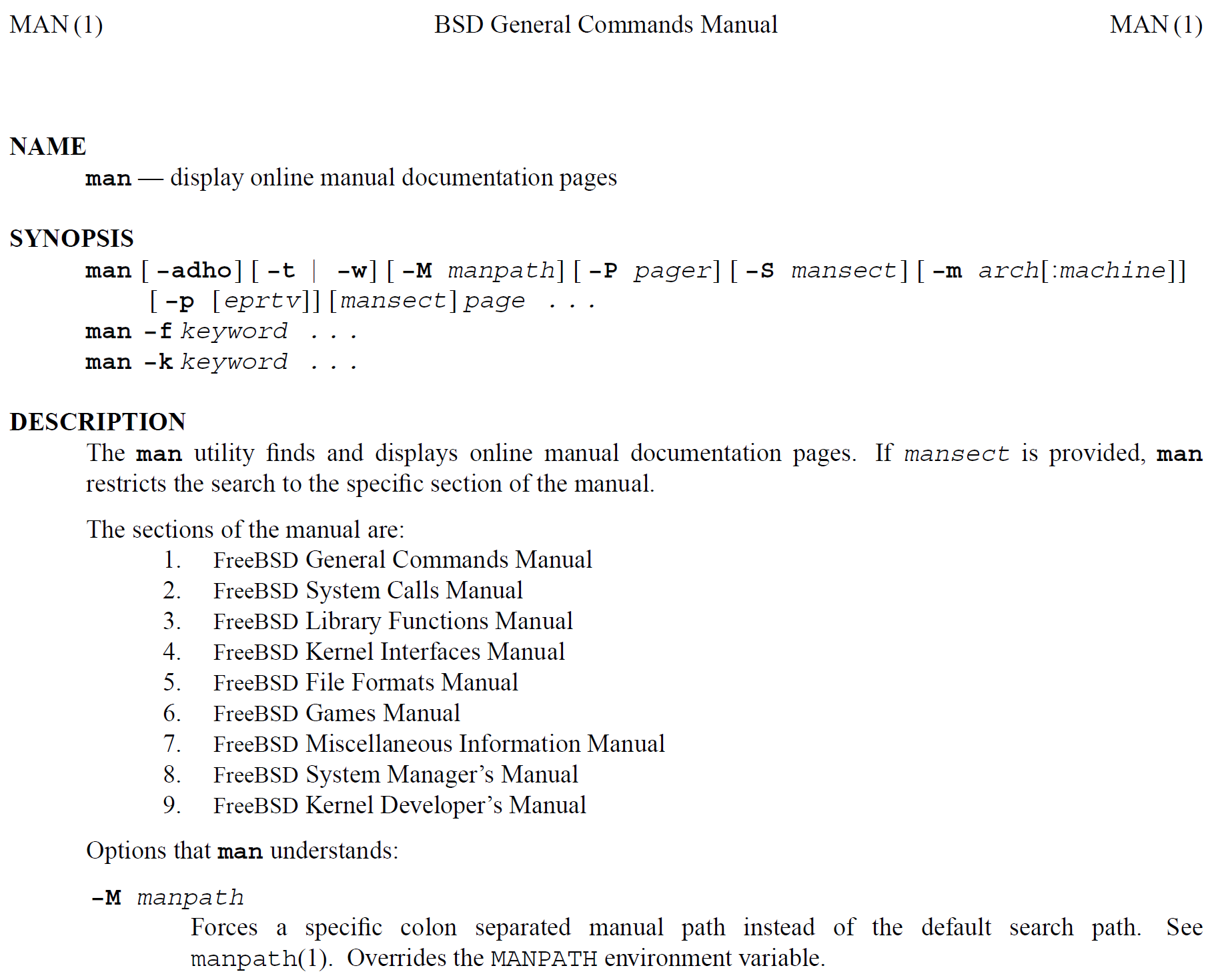

Man Page

A man page (short for manual page) is a form of software documentation found on Unix and Unix-like operating systems. Topics covered include programs, system libraries, system calls, and sometimes local system details. Local host administrators can create and install manual pages associated with the specific host. An end user may invoke a manual page by issuing the man command followed by the name of the item for which they want the documentation. These manual pages are typically requested by end users, programmers, and administrators doing real-time work, but can also be formatted for printing. By default, man typically uses a formatting program such as nroff with a macro package, or mandoc, and also a terminal pager program such as more or less to display its output on the user's screen. Man pages are often referred to as an "online" form of software documentation, even though the man command does not require internet access. The environment variable M ...

Standard Streams

In computer programming, standard streams are preconnected input and output communication channels between a computer program and its environment when it begins execution. The three input/output (I/O) connections are called standard input (stdin), standard output (stdout) and standard error (stderr). Originally, I/O happened via a physically connected system console (input via keyboard, output via monitor), but standard streams abstract this. When a command is executed via an interactive shell, the streams are typically connected to the text terminal on which the shell is running, but can be changed with redirection or a pipeline. More generally, a child process inherits the standard streams of its parent process. Users generally know standard streams as input and output channels that handle data coming from an input device, or that write data from the application. The data may be text with any encoding, or binary data. When a program is run as a daemon, its stan ...
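
As a rough illustration of redirection, the sketch below starts a child process and replaces its three standard streams with pipes instead of letting it inherit the parent's terminal. The child program text and the helper name `run_child` are assumptions made up for this example; `subprocess.run` and `sys.executable` are standard Python.

```python
import subprocess
import sys

# Program text for the child: read stdin, answer on stdout, log on stderr.
CHILD_CODE = (
    "import sys\n"
    "data = sys.stdin.read()\n"
    "sys.stdout.write(data.upper())\n"
    "sys.stderr.write('read %d characters' % len(data))\n"
)


def run_child(text: str) -> tuple[str, str]:
    """Run the child with all three standard streams redirected to pipes."""
    result = subprocess.run(
        [sys.executable, "-c", CHILD_CODE],
        input=text,            # becomes the child's standard input
        capture_output=True,   # stdout and stderr are captured separately
        text=True,
        check=True,
    )
    return result.stdout, result.stderr


out, err = run_child("hello")
print("stdout:", out)
print("stderr:", err)
```

Because stdout and stderr are distinct channels, the result and the diagnostic message arrive separately even though both would normally appear on the same terminal.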

File Descriptor

In Unix and Unix-like computer operating systems, a file descriptor (FD, less frequently fildes) is a process-unique identifier (handle) for a file or other input/output resource, such as a pipe or network socket. File descriptors typically have non-negative integer values, with negative values being reserved to indicate "no value" or error conditions. File descriptors are a part of the POSIX API. Each Unix process (except perhaps daemons) should have three standard POSIX file descriptors, corresponding to the three standard streams: standard input (0), standard output (1), and standard error (2). In the traditional implementation of Unix, file descriptors index into a per-process file descriptor table maintained by the kernel, which in turn indexes into a system-wide table of files opened by all processes, called the file table. This table records the mode with which the file (or other resource) has been opened: for reading, writing, appending, and possibly other modes. It also indexes into a third table called the inode table that describes the ac ...
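
A small sketch of descriptors as plain integers, using Python's `os` module, which wraps the underlying POSIX calls. The helper name `pipe_round_trip` is hypothetical; the descriptor numbers actually returned depend on what the process already has open.

```python
import os

# Conventional descriptor numbers for the three standard streams.
STDIN_FD, STDOUT_FD, STDERR_FD = 0, 1, 2


def pipe_round_trip(payload: bytes) -> tuple[int, int, bytes]:
    """Create a pipe, write through one descriptor, read from the other."""
    read_fd, write_fd = os.pipe()  # the kernel returns two new descriptors
    try:
        os.write(write_fd, payload)
    finally:
        os.close(write_fd)         # release the write end
    try:
        data = os.read(read_fd, len(payload))
    finally:
        os.close(read_fd)          # release the read end
    return read_fd, write_fd, data


r, w, data = pipe_round_trip(b"hello")
print(r, w, data)
```

The two values returned by `os.pipe()` are small non-negative integers that only have meaning inside the calling process, which is the "process-unique handle" idea described above.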

Domain Name System

The Domain Name System (DNS) is a hierarchical and distributed name service that provides a naming system for computers, services, and other resources on the Internet or other Internet Protocol (IP) networks. It associates various information with domain names (identification strings) assigned to each of the associated entities. Most prominently, it translates readily memorized domain names to the numerical IP addresses needed for locating and identifying computer services and devices with the underlying network protocols. The Domain Name System has been an essential component of the functionality of the Internet since 1985. The Domain Name System delegates the responsibility of assigning domain names and mapping those names to Internet resources by designating authoritative name servers for each domain. Network administrators may delegate authority over subdomains of their allocated name space to other name servers. ...
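
The hierarchy can be sketched without touching the network. The hypothetical helper `delegation_chain` below lists the successively more specific domains at which authority over a name could be delegated, starting from the top-level domain. It is only an illustration: real zone boundaries need not fall at every label, and no lookup is performed.

```python
def delegation_chain(domain: str) -> list[str]:
    """List candidate delegation points for a name, most general first."""
    # Drop an optional trailing root dot and normalise case, since DNS
    # names are compared case-insensitively.
    labels = domain.rstrip(".").lower().split(".")
    # Build suffixes from the rightmost label outward.
    return [".".join(labels[i:]) for i in range(len(labels) - 1, -1, -1)]


chain = delegation_chain("www.example.com")
print(chain)  # ['com', 'example.com', 'www.example.com']
```

Each entry is a point where an administrator may hand authority for the remaining name space to another set of name servers.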

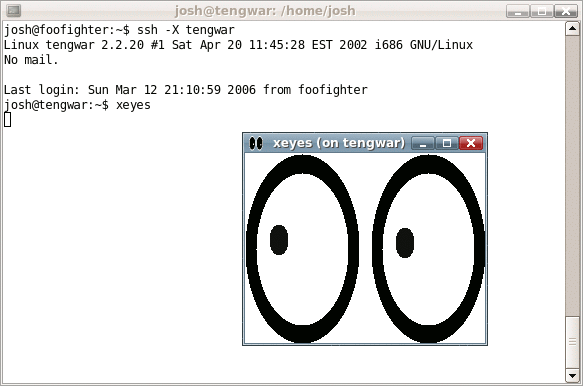

Secure Shell

The Secure Shell Protocol (SSH Protocol) is a cryptographic network protocol for operating network services securely over an unsecured network. Its most notable applications are remote login and command-line execution. SSH was designed for Unix-like operating systems as a replacement for Telnet and unsecured remote Unix shell protocols, such as the Berkeley Remote Shell (rsh) and the related rlogin and rexec protocols, which all use insecure, plaintext methods of authentication, like passwords. Since mechanisms like Telnet and Remote Shell are designed to access and operate remote computers, sending the authentication tokens (e.g. username and password) for this access across a public network in an unsecured way poses a great risk of third parties obtaining the password and achieving the same level of access to the remote system as the telnet user. Secure Shell mitigates this risk through the use of encryption mechanisms that are intended to hide th ...

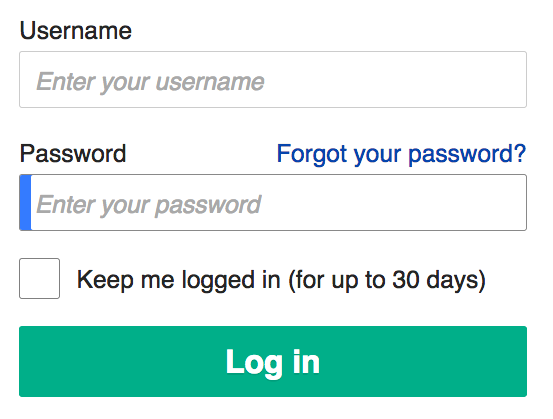

Password

A password, sometimes called a passcode, is secret data, typically a string of characters, usually used to confirm a user's identity. Traditionally, passwords were expected to be memorized, but the large number of password-protected services that a typical individual accesses can make memorization of unique passwords for each service impractical. Using the terminology of the NIST Digital Identity Guidelines, the secret is held by a party called the claimant while the party verifying the identity of the claimant is called the verifier. When the claimant successfully demonstrates knowledge of the password to the verifier through an established authentication protocol, the verifier is able to infer the claimant's identity. In general, a password is an arbitrary string of characters including letters, digits, or other symbols. If the permissible characters are constrained to be numeric, the corresponding secret is sometimes ...

Authentication

Authentication (from authentikos, "real, genuine", from αὐθέντης authentes, "author") is the act of proving an assertion, such as the identity of a computer system user. In contrast with identification, the act of indicating a person or thing's identity, authentication is the process of verifying that identity. Authentication is relevant to multiple fields. In art, antiques, and anthropology, a common problem is verifying that a given artifact was produced by a certain person, or in a certain place (i.e. to assert that it is not counterfeit), or in a given period of history (e.g. by determining the age via carbon dating). In computer science, verifying a user's identity is often required to allow access to confidential data or systems. It might involve validating personal identity documents. In art, antiques, and anthropology, authentication can be considered to be of three types: The first type of authentication is accep ...

Cryptography

Cryptography, or cryptology (from kryptós, "hidden, secret"; and graphein, "to write", or -logia, "study", respectively), is the practice and study of techniques for secure communication in the presence of adversarial behavior. More generally, cryptography is about constructing and analyzing protocols that prevent third parties or the public from reading private messages. Modern cryptography exists at the intersection of the disciplines of mathematics, computer science, information security, electrical engineering, digital signal processing, physics, and others. Core concepts related to information security (data confidentiality, data integrity, authentication, and non-repudiation) are also central to cryptography. Practical applications of cryptography include electronic commerce, chip-based payment cards, digital currencies, computer passwords, and military communications. ...

Computer Security

Computer security (also cybersecurity, digital security, or information technology (IT) security) is a subdiscipline within the field of information security. It consists of the protection of computer software, systems, and networks from threats that can lead to unauthorized information disclosure, theft of or damage to hardware, software, or data, as well as from the disruption or misdirection of the services they provide. The significance of the field stems from the expanded reliance on computer systems, the Internet, and wireless network standards. Its importance is further amplified by the growth of smart devices, including smartphones, televisions, and the various devices that constitute the Internet of things (IoT). Cybersecurity has emerged as one of the most significant new challenges facing the contemporary world, due to both the complexity of information systems and the societi ...