
Regular Estimator

Regular estimators are a class of statistical estimators that satisfy certain regularity conditions which make them amenable to asymptotic analysis. The convergence of a regular estimator's distribution is, in a sense, locally uniform. This is often considered desirable and leads to the convenient property that a small change in the parameter does not dramatically change the distribution of the estimator (van der Vaart AW. Asymptotic Statistics. Cambridge University Press; 1998).

Definition. An estimator $\hat\theta_n$ of $\psi(\theta)$ based on a sample of size $n$ is said to be regular if for every $h$: $$\sqrt{n} \left( \hat\theta_n - \psi(\theta + h/\sqrt{n}) \right) \stackrel{\theta + h/\sqrt{n}}{\rightarrow} L_\theta$$ where the convergence is in distribution under the law of $\theta + h/\sqrt{n}$. $L_\theta$ is some asymptotic distribution (usually a normal distribution with mean zero and a variance that may depend on $\theta$).

Examples of non-regular estimators. Both the Hodges' estimator and the James-Stein estimator (Beran) ...
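The definition can be checked numerically for a simple case. The sketch below assumes i.i.d. N(θ, 1) data, takes ψ as the identity, and uses the sample mean as the estimator; the function name `localized_errors` and all parameter values are illustrative choices, not part of the definition. For each h it samples under the shifted parameter θ + h/√n and summarises √n(θ̂ₙ − (θ + h/√n)); for a regular estimator the summary should look the same for every h.

```python
import math
import random
import statistics

def localized_errors(theta, h, n, reps, rng):
    """Draw `reps` values of sqrt(n) * (sample_mean - theta_n),
    where the data are sampled under theta_n = theta + h / sqrt(n)."""
    theta_n = theta + h / math.sqrt(n)
    errors = []
    for _ in range(reps):
        sample = [rng.gauss(theta_n, 1.0) for _ in range(n)]
        errors.append(math.sqrt(n) * (statistics.fmean(sample) - theta_n))
    return errors

rng = random.Random(0)  # fixed seed so the run is reproducible
summary = {}
for h in (-2.0, 0.0, 2.0):
    errs = localized_errors(theta=0.0, h=h, n=200, reps=1000, rng=rng)
    # Mean and standard deviation of the localized, rescaled error
    summary[h] = (statistics.fmean(errs), statistics.pstdev(errs))
    print(f"h={h:+.1f}  mean={summary[h][0]:+.3f}  sd={summary[h][1]:.3f}")
```

In this setting the rescaled error is exactly standard normal for every h, so the printed means should sit near 0 and the standard deviations near 1 regardless of the shift.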

Estimator

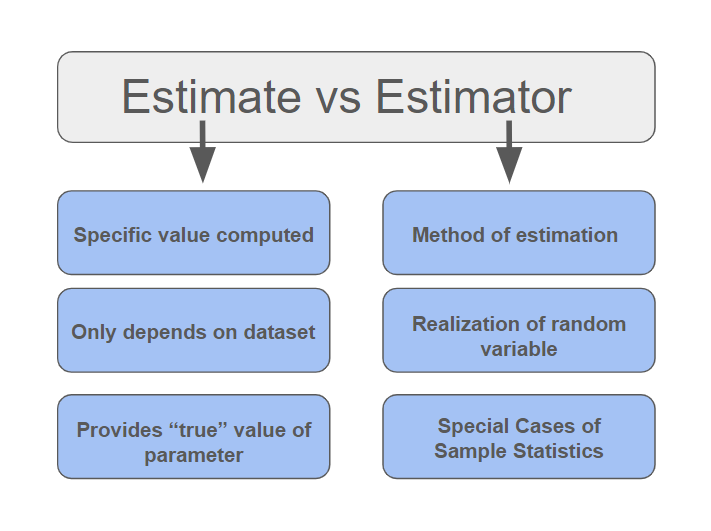

In statistics, an estimator is a rule for calculating an estimate of a given quantity based on observed data: thus the rule (the estimator), the quantity of interest (the estimand) and its result (the estimate) are distinguished. For example, the sample mean is a commonly used estimator of the population mean. There are point and interval estimators. Point estimators yield single-valued results, in contrast to interval estimators, where the result is a range of plausible values. "Single value" does not necessarily mean "single number"; it includes vector-valued or function-valued estimators. *Estimation theory* is concerned with the properties of estimators; that is, with defining properties that can be used to compare different estimators (different rules for creating estimates) for the same quantity, based on the same data. Such properties can be used to determine the best rules to use under given circumstances. Howeve ...
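The distinction between estimator, estimate, and the point/interval split can be made concrete with a small sketch. The data values, the function names, and the choice of a normal-approximation interval (z = 1.96) are all assumptions made for illustration.

```python
import math
import statistics

def sample_mean(data):
    """Point estimator: a rule mapping data to a single value."""
    return statistics.fmean(data)

def normal_interval(data, z=1.96):
    """Interval estimator: a rule mapping data to a range of plausible values.
    Uses a normal approximation (an assumption; z=1.96 targets roughly 95%)."""
    m = statistics.fmean(data)
    se = statistics.stdev(data) / math.sqrt(len(data))  # standard error of the mean
    return (m - z * se, m + z * se)

data = [4.9, 5.1, 5.0, 5.3, 4.7]   # hypothetical observations
point = sample_mean(data)           # the estimate produced by the point estimator
low, high = normal_interval(data)   # the estimate produced by the interval estimator
print(f"point estimate: {point:.3f}")
print(f"interval estimate: ({low:.3f}, {high:.3f})")
```

Here the functions are the estimators (rules), the population mean is the estimand, and the printed numbers are the estimates obtained from this particular data set.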

Asymptotic

In analytic geometry, an asymptote of a curve is a line such that the distance between the curve and the line approaches zero as one or both of the *x* or *y* coordinates tends to infinity. In projective geometry and related contexts, an asymptote of a curve is a line which is tangent to the curve at a point at infinity. The word asymptote is derived from the Greek ἀσύμπτωτος (*asumptōtos*), which means "not falling together", from ἀ priv. + σύν "together" + πτωτ-ός "fallen". The term was introduced by Apollonius of Perga in his work on conic sections, but in contrast to its modern meaning, he used it to mean any line that does not intersect the given curve. There are three kinds of asymptotes: *horizontal*, *vertical* and *oblique*. For curves given by the graph of a function $y = f(x)$, horizontal asymptotes are horizontal lines tha ...
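As a small numerical illustration of the definition, the sketch below uses the hypothetical curve f(x) = x + 1/x, which has the oblique asymptote y = x (and a vertical asymptote at x = 0). It measures the vertical gap between the curve and the line, which bounds the true point-to-line distance from above, and shows the gap shrinking as x grows.

```python
def f(x):
    """Example curve (an illustrative choice): f(x) = x + 1/x."""
    return x + 1.0 / x

def gap_to_line(x, slope=1.0, intercept=0.0):
    """Vertical gap between the curve and the line y = slope * x + intercept.
    The perpendicular distance to the line is never larger than this gap."""
    return abs(f(x) - (slope * x + intercept))

xs = [10.0, 100.0, 1000.0]
gaps = [gap_to_line(x) for x in xs]
for x, g in zip(xs, gaps):
    print(f"x={x:>7.1f}  gap={g:.6f}")
```

The gap equals 1/x here, so it tends to zero as x tends to infinity, which is the behaviour the definition requires of an asymptote.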

Asymptotic Distribution

In mathematics and statistics, an asymptotic distribution is a probability distribution that is in a sense the limiting distribution of a sequence of distributions. One of the main uses of the idea of an asymptotic distribution is in providing approximations to the cumulative distribution functions of statistical estimators.

Definition. A sequence of distributions corresponds to a sequence of random variables $Z_i$ for $i = 1, 2, \ldots$. In the simplest case, an asymptotic distribution exists if the probability distribution of $Z_i$ converges to a probability distribution (the asymptotic distribution) as $i$ increases: see convergence in distribution. A special case of an asymptotic distribution is when the random variables are always zero, or $Z_i$ converges to 0 as $i$ approaches infinity. Here the asymptotic distribution is a degenerate distribution, corresponding to the value zero. However, the most usual sense in which the term asymptotic distribution is used arises ...
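The use of an asymptotic distribution as an approximation to an estimator's cumulative distribution function can be sketched as follows. The example assumes Exponential(1) data (mean 1, variance 1) and the sample mean as the estimator, so that the standardized quantity √n(mean − 1) has a standard normal asymptotic distribution; the names `standardized_mean` and `empirical_cdf` and the sample sizes are illustrative.

```python
import math
import random
from statistics import NormalDist, fmean

def standardized_mean(n, rng):
    """sqrt(n) * (sample mean - 1) for n Exponential(1) draws."""
    xs = [rng.expovariate(1.0) for _ in range(n)]
    return math.sqrt(n) * (fmean(xs) - 1.0)

def empirical_cdf(values, t):
    """Fraction of simulated values that are <= t."""
    return sum(v <= t for v in values) / len(values)

rng = random.Random(1)  # fixed seed for reproducibility
draws = [standardized_mean(100, rng) for _ in range(2000)]

# Compare the simulated CDF with the asymptotic (standard normal) CDF
gaps = {}
for t in (-1.0, 0.0, 1.0):
    gaps[t] = abs(empirical_cdf(draws, t) - NormalDist().cdf(t))
    print(f"t={t:+.1f}  |empirical - normal| = {gaps[t]:.3f}")
```

The gaps reflect both Monte Carlo noise and the finite-sample error of the normal approximation; both shrink as the number of replications and the sample size grow.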

Normal Distribution

In probability theory and statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is $$f(x) = \frac{1}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2}.$$ The parameter $\mu$ is the mean or expectation of the distribution (and also its median and mode), while the parameter $\sigma^2$ is the variance. The standard deviation of the distribution is $\sigma$ (sigma). A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate. Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known. Their importance is partly due to the central limit theorem. It states that, under some conditions, the average of many samples (observations) of a random variable with finite mean and variance is itself a random variable whose distribution c ...
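The normal density, f(x) = exp(−½((x − μ)/σ)²) / (σ√(2π)), is straightforward to evaluate directly. The sketch below writes it out by hand under the hypothetical name `normal_pdf` and cross-checks it against `statistics.NormalDist` from the Python standard library.

```python
import math
from statistics import NormalDist

def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of N(mu, sigma^2) at x, written out from the formula."""
    z = (x - mu) / sigma  # standardized distance from the mean
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

# Cross-check against the standard library implementation
by_hand = normal_pdf(0.5, mu=1.0, sigma=2.0)
by_library = NormalDist(mu=1.0, sigma=2.0).pdf(0.5)
print(by_hand, by_library)
```

The density peaks at x = μ, where it takes the value 1/(σ√(2π)).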

Hodges' Estimator

In statistics, Hodges' estimator (or the Hodges–Le Cam estimator), named for Joseph Hodges, is a famous counterexample of an estimator which is "superefficient", i.e. it attains smaller asymptotic variance than regular efficient estimators. The existence of such a counterexample is the reason for the introduction of the notion of regular estimators. Hodges' estimator improves upon a regular estimator at a single point. In general, any superefficient estimator may surpass a regular estimator at most on a set of Lebesgue measure zero. Although Hodges discovered the estimator, he never published it; the first publication was in the doctoral thesis of Lucien Le Cam.

Construction. Suppose $\hat\theta_n$ is a "common" estimator for some parameter $\theta$: it is consistent, and converges to some asymptotic distribution $L_\theta$ (usually a normal distribution with mean zero and a variance that may depend on $\theta$) at the $\sqrt{n}$-rate: ...
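Superefficiency at a single point can be illustrated with a short simulation. The sketch assumes the usual textbook form of Hodges' estimator, which keeps the common estimator when its absolute value is at least n^(−1/4) and returns 0 otherwise, and it assumes N(θ, 1) data with the sample mean as the common estimator. As a shortcut, the sample mean is drawn directly from its exact N(θ, 1/n) distribution; the names `hodges`, `identity`, and `scaled_risk` are hypothetical.

```python
import random

def hodges(theta_hat, n):
    """Assumed textbook construction: shrink to 0 inside the n**(-1/4) threshold."""
    return theta_hat if abs(theta_hat) >= n ** -0.25 else 0.0

def identity(theta_hat, n):
    """The common estimator itself (here, the sample mean)."""
    return theta_hat

def scaled_risk(estimator, theta, n, reps, rng):
    """Monte Carlo estimate of n * E[(estimate - theta)^2]."""
    total = 0.0
    for _ in range(reps):
        # Sample mean of n draws from N(theta, 1) is exactly N(theta, 1/n)
        theta_hat = rng.gauss(theta, n ** -0.5)
        total += (estimator(theta_hat, n) - theta) ** 2
    return n * total / reps

rng = random.Random(2)  # fixed seed for reproducibility
n, reps = 400, 5000
risk_mean_at_0 = scaled_risk(identity, 0.0, n, reps, rng)
risk_hodges_at_0 = scaled_risk(hodges, 0.0, n, reps, rng)
risk_hodges_near_0 = scaled_risk(hodges, n ** -0.25, n, reps, rng)
print(risk_mean_at_0, risk_hodges_at_0, risk_hodges_near_0)
```

At θ = 0 the scaled risk of the thresholded estimator is far below that of the sample mean, while at a nearby parameter value of order n^(−1/4) it is much larger. This sensitivity to small changes in the parameter is the behaviour that regularity rules out.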
