
Qwen

Qwen (also called Tongyi Qianwen; Chinese: 通义千问) is a family of large language models developed by Alibaba Cloud. In July 2024, it was ranked as the top Chinese language model in some benchmarks and third globally behind the top models of Anthropic and OpenAI.

Models

Alibaba first launched a beta of Qwen in April 2023 under the name Tongyi Qianwen. The model's architecture was based on the Llama architecture developed by Meta AI. It was publicly released in September 2023 after receiving approval from the Chinese government. Qwen 7B was open sourced in August 2023, followed by the 72B and 1.8B models in December 2023. In June 2024 Alibaba launched Qwen 2, and in September it released some of its models as open source while keeping its most advanced models proprietary. Qwen 2 contains both dense and sparse models. In November 2024, QwQ-32B-Preview, a model focusing on reasoning similar to OpenAI's o1, was released under the Apache 2.0 Licens ...

Alibaba Cloud

Alibaba Cloud, also known as Aliyun, is a cloud computing company and a subsidiary of Alibaba Group. Alibaba Cloud provides cloud computing services to online businesses and to Alibaba's own e-commerce ecosystem. Its international operations are registered and headquartered in Singapore. Alibaba Cloud offers cloud services on a pay-as-you-go basis, including elastic compute, data storage, relational databases, big-data processing, anti-DDoS protection and content delivery networks (CDN). It is the largest cloud computing company in China and, according to Gartner, in Asia Pacific. Alibaba Cloud operates data centers in 24 regions and 74 availability zones around the globe. As of June 2017, Alibaba Cloud was placed in the Visionaries quadrant of Gartner's Magic Quadrant for cloud infrastructure as a service, worldwide.

History

* September 2009 – Alibaba Cloud is founded and R&D centers and operation centers are subsequently opened in Hangzhou, Beijing, a ...

Vision Transformer

A Vision Transformer (ViT) is a transformer that is targeted at vision processing tasks such as image recognition.

Vision Transformers

Transformers found their initial applications in natural language processing (NLP) tasks, as demonstrated by language models such as BERT and GPT-3. By contrast, the typical image processing system uses a convolutional neural network (CNN). Well-known CNN architectures include Xception, ResNet, EfficientNet, DenseNet, and Inception. Transformers measure the relationships between pairs of input tokens (words, in the case of text strings), a mechanism termed attention. The cost is quadratic in the number of tokens. For images, the basic unit of analysis is the pixel. However, computing relationships for every pixel pair in a typical image is prohibitive in terms of memory and computation. Instead, ViT computes relationships among pixels in various small sections of the image (e.g., 16×16 pixels), at a drastically reduced cost. The sections (with positional embeddings) ...
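The cost argument above can be made concrete with a little arithmetic. The following is a minimal sketch, not taken from any ViT implementation: it assumes a 224×224 input image (an illustrative size, not stated in the text), treats each 16×16 section as one token, and uses hypothetical helper names `patch_tokens` and `attention_pairs`.

```python
def patch_tokens(height: int, width: int, patch: int) -> int:
    """Number of non-overlapping patch x patch sections covering the image."""
    if height % patch or width % patch:
        raise ValueError("image size must be a multiple of the patch size")
    return (height // patch) * (width // patch)


def attention_pairs(num_tokens: int) -> int:
    """Pairwise comparisons made by attention: quadratic in the token count."""
    return num_tokens * num_tokens


# Assumed example dimensions (hypothetical, chosen only for illustration).
height, width, patch = 224, 224, 16

pixel_level = attention_pairs(height * width)                      # one token per pixel
patch_level = attention_pairs(patch_tokens(height, width, patch))  # one token per section

print(f"pixel tokens: {height * width}, pairs: {pixel_level}")
print(f"patch tokens: {patch_tokens(height, width, patch)}, pairs: {patch_level}")
print(f"reduction factor: {pixel_level // patch_level}")
```

Under these assumptions the image yields 196 section tokens instead of 50,176 pixel tokens, so the number of pairwise comparisons drops by a factor of 65,536.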

Reasoning Language Model

Reasoning language models are artificial intelligence systems that combine natural language processing with structured reasoning capabilities. These models are usually constructed by prompting, supervised finetuning (SFT), and reinforcement learning (RL) initialized with pretrained language models.

Prompting

A language model is a generative model of a training dataset of texts. Prompting means constructing a text prompt, such that, conditional on the text prompt, the language model generates a solution to the task. Prompting can be applied to a pretrained model ("base model"), a base model that has undergone SFT, or RL, or both.

Chain of thought

Chain of Thought prompting (CoT) prompts the model to answer a question by first generating a "chain of thought", i.e. steps of reasoning that mimic a train of thought. It was published in 2022 by the Brain team of Google on the PaLM-540B model. In CoT prompting, the prompt is of the form " Let's think step by step", and the mod ...

Context Window

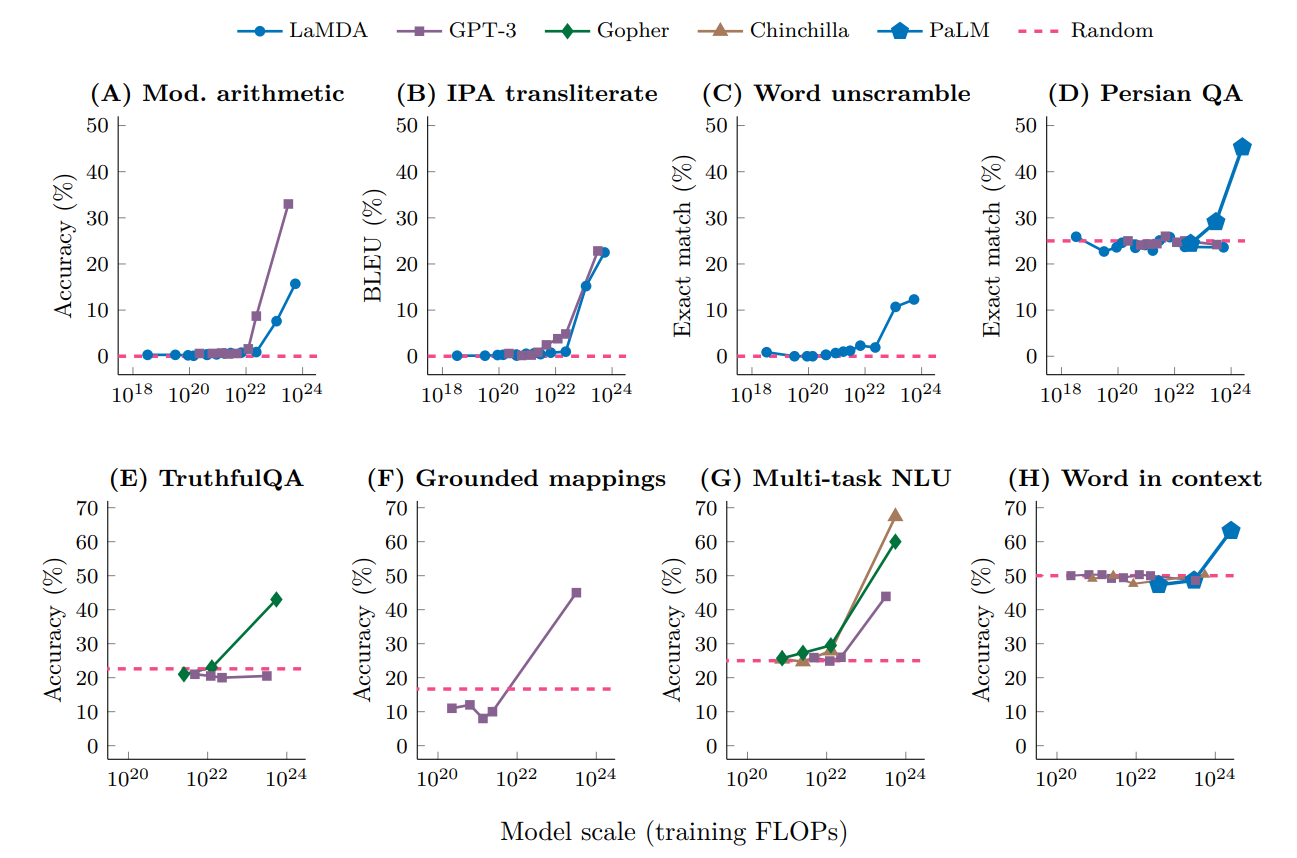

A large language model (LLM) is a language model consisting of a neural network with many parameters (typically billions of weights or more), trained on large quantities of unlabelled text using self-supervised learning. LLMs emerged around 2018 and perform well at a wide variety of tasks. This has shifted the focus of natural language processing research away from the previous paradigm of training specialized supervised models for specific tasks.

Properties

Though the term "large language model" has no formal definition, it often refers to deep learning models having a parameter count on the order of billions or more. LLMs are general purpose models which excel at a wide range of tasks, as opposed to being trained for one specific task (such as sentiment analysis, named entity recognition, or mathematical reasoning). The skill with which they accomplish tasks, and the range of tasks at which they are capable, seems to be a function of the amount of resources (data, parameter-si ...

Mixture Of Experts

Mixture of experts (MoE) refers to a machine learning technique where multiple expert networks (learners) are used to divide a problem space into homogeneous regions. It differs from ensemble techniques in that typically only one or a few expert models are run for each input, rather than combining results from all models. An example from computer vision is combining one neural network model for human detection with another for pose estimation.

Hierarchical mixture

If the output is conditioned on multiple levels of (probabilistic) gating functions, the mixture is called a hierarchical mixture of experts. A gating network decides which expert to use for each input region. Learning thus consists of learning the parameters of:

* individual learners and
* gating network.

Applications

Meta ...
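The role of the gating network described above can be sketched in a few lines. This is a toy illustration under stated assumptions, not a reference implementation: the gate is assumed to be a linear scorer followed by a softmax, only the single highest-scoring expert is run (top-1 routing), and the names `gate`, `moe_top1`, `experts` and `gate_weights` are hypothetical. The weights are fixed by hand here, whereas in practice both the experts and the gate are learned.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def gate(x, gate_weights):
    """Assumed linear gating network: one score per expert, then softmax."""
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    return softmax(scores)


def moe_top1(x, gate_weights, experts):
    """Run only the expert the gate rates highest for this input."""
    probs = gate(x, gate_weights)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, experts[best](x)


# Two toy experts and hand-picked gate weights (illustrative values only).
experts = [lambda x: sum(x), lambda x: max(x)]
gate_weights = [[1.0, 0.0], [0.0, 1.0]]

index, output = moe_top1([0.2, 3.0], gate_weights, experts)
print(index, output)
```

Because only the selected expert is evaluated, the cost per input stays close to that of a single expert, which is the contrast with ensemble methods drawn above.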

GPT-4o

GPT-4o ("o" for "omni") is a multilingual, multimodal generative pre-trained transformer developed by OpenAI and released in May 2024. GPT-4o is free, but with a usage limit that is five times higher for ChatGPT Plus subscribers. It can process and generate text, images and audio. Its application programming interface (API) is twice as fast and half the price of its predecessor, GPT-4 Turbo.

Background

Multiple versions of GPT-4o were initially launched covertly, under different names, on the Large Model Systems Organization's (LMSYS) Chatbot Arena as three different models. These three models were called gpt2-chatbot, im-a-good-gpt2-chatbot, and im-also-a-good-gpt2-chatbot. On 7 May 2024, Sam Altman tweeted "im-a-good-gpt2-chatbot", which was commonly interpreted as a confirmation that these were new OpenAI models being A/B tested.

Capabilities

GPT-4o achieved state-of-the-art results in voice, multilingual, and vision benchmarks, setting new records in audio speech recognit ...

GitHub

GitHub, Inc. is an Internet hosting service for software development and version control using Git. It provides the distributed version control of Git plus access control, bug tracking, software feature requests, task management, continuous integration, and wikis for every project. Headquartered in California, it has been a subsidiary of Microsoft since 2018. It is commonly used to host open source software development projects. As of June 2022, GitHub reported having over 83 million developers and more than 200 million repositories, including at least 28 million public repositories. It is the largest source code host.

History

GitHub.com

Development of the GitHub.com platform began on October 19, 2007. The site was launched in April 2008 by Tom Preston-Werner, Chris Wanstrath, P. J. Hyett and Scott Chacon after it had been available for a few months as a beta release. GitHub has an annual keynote called GitHub Universe. Org ...

Hugging Face

Hugging Face, Inc. is an American company that develops tools for building applications using machine learning. It is most notable for its Transformers library built for natural language processing applications and its platform that allows users to share machine learning models and datasets.

History

The company was founded in 2016 by Clément Delangue, Julien Chaumond, and Thomas Wolf, originally as a company that developed a chatbot app targeted at teenagers. After open-sourcing the model behind the chatbot, the company pivoted to focus on being a platform for democratizing machine learning. In March 2021, Hugging Face raised $40 million in a Series B funding round. On April 28, 2021, the company launched the BigScience Research Workshop in collaboration with several other research groups to release an open large language model. In 2022, the workshop concluded with the announcement of BLOOM, a multilingual large language model with 176 billion parameters. On December 21, 202 ...

Open Source

Open source is source code that is made freely available for possible modification and redistribution. Products include permission to use the source code, design documents, or content of the product. The open-source model is a decentralized software development model that encourages open collaboration. A main principle of open-source software development is peer production, with products such as source code, blueprints, and documentation freely available to the public. The open-source movement in software began as a response to the limitations of proprietary code. The model is used for projects such as open-source appropriate technology and open-source drug discovery. Open source promotes universal access via an open-source or free license to a product's design or blueprint, and universal redistribution of that design or blueprint. Before the phrase "open source" became widely adopted, developers and producers used a variety of other terms. "Open source" gai ...

Twitter

Twitter is an online social media and social networking service owned and operated by American company Twitter, Inc., on which users post and interact with messages of up to 280 characters known as "tweets". Registered users can post, like, and retweet tweets, while unregistered users can only read public tweets. Users interact with Twitter through browser or mobile frontend software, or programmatically via its APIs. Twitter was created by Jack Dorsey, Noah Glass, Biz Stone, and Evan Williams in March 2006 and launched in July of that year. Twitter, Inc. is based in San Francisco, California and has more than 25 offices around the world. More than 100 million users posted 340 million tweets a day, and the service handled an average of 1.6 billion search queries per day. In 2013, it was one of the ten most-visited websites and has been de ...

Fine-tuning (deep Learning)

In deep learning, fine-tuning is an approach to transfer learning in which the weights of a pre-trained model are trained on new data. Fine-tuning can be done on the entire neural network, or on only a subset of its layers, in which case the layers that are not being fine-tuned are "frozen" (not updated during the backpropagation step). A model may also be augmented with "adapters" that consist of far fewer parameters than the original model, and fine-tuned in a parameter-efficient way by tuning the weights of the adapters and leaving the rest of the model's weights frozen. For some architectures, such as convolutional neural networks, it is common to keep the earlier layers (those closest to the input layer) frozen because they capture lower-level features, while later layers often discern high-level features that can be more related to the task that the model is trained on. Models that are pre-trained on large and general corpora are usually fine-tuned by reusing the model's p ...
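The idea of frozen layers can be illustrated with a toy update step. This is a minimal sketch under simplifying assumptions: each "layer" is reduced to a single scalar weight, the gradients are given rather than computed by backpropagation, and the names `sgd_step`, `early_layer` and `late_layer` are hypothetical.

```python
def sgd_step(params, grads, frozen, lr=0.5):
    """Return updated parameters, leaving every name in `frozen` untouched."""
    return {
        name: value if name in frozen else value - lr * grads[name]
        for name, value in params.items()
    }


# Toy model: one scalar weight per layer (illustrative values only).
params = {"early_layer": 2.0, "late_layer": 2.0}
grads = {"early_layer": 1.0, "late_layer": 1.0}

# Freeze the layer closest to the input and fine-tune only the later one.
updated = sgd_step(params, grads, frozen={"early_layer"})
print(updated)
```

Only `late_layer` moves in this step; the frozen `early_layer` keeps its pre-trained value even though a gradient is available for it.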