
Portable Format For Analytics

The Portable Format for Analytics (PFA) is a JSON-based predictive model interchange format conceived and developed by Jim Pivarski. PFA provides a way for analytic applications to describe and exchange predictive models produced by analytics and machine learning algorithms. It supports common models such as logistic regression and decision trees. Version 0.8 was published in 2015. Subsequent versions have been developed by the Data Mining Group. As a predictive model interchange format developed by the Data Mining Group, PFA is complementary to the DMG's XML-based standard, the Predictive Model Markup Language (PMML).

Predictive Modelling

Predictive modelling uses statistics to predict outcomes. Most often the event one wants to predict is in the future, but predictive modelling can be applied to any type of unknown event, regardless of when it occurred. For example, predictive models are often used to detect crimes and identify suspects after the crime has taken place. In many cases the model is chosen on the basis of detection theory to try to guess the probability of an outcome given a set amount of input data, for example, given an email, determining how likely it is to be spam. Models can use one or more classifiers in trying to determine the probability of a set of data belonging to another set. For example, a model might be used to determine whether an email is spam or "ham" (non-spam). Depending on definitional boundaries, predictive modelling is synonymous with, or largely overlapping with, the field of machine learning, as it is more commonly referred to in academic or research and development contexts. ...

JSON

JSON (JavaScript Object Notation) is an open standard file format and data interchange format that uses human-readable text to store and transmit data objects consisting of attribute–value pairs and arrays (or other serializable values). It is a common data format with diverse uses in electronic data interchange, including that of web applications with servers. JSON is a language-independent data format. It was derived from JavaScript, but many modern programming languages include code to generate and parse JSON-format data. JSON filenames use the extension .json. Any valid JSON file is a valid JavaScript (.js) file, even though it makes no changes to a web page on its own. Douglas Crockford originally specified the JSON format in the early 2000s. He and Chip Morningstar sent the first JSON message in April 2001. Naming and pronunciation: The 2017 international standard (ECMA-404 and ISO/IEC 21778:2017) specifies "Pronounced , as in ' Jaso ...
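As a minimal sketch of generating and parsing JSON-format data, the snippet below round-trips a small object through Python's standard `json` module. The `record` contents are hypothetical and chosen only to show attribute–value pairs alongside an array.

```python
import json

# Hypothetical record: attribute-value pairs plus an array.
record = {
    "name": "example-model",
    "version": 1,
    "tags": ["demo", "json"],
    "active": True,
}

# Serialize the Python object to JSON text (keys sorted for stable output).
text = json.dumps(record, sort_keys=True)

# Parse the JSON text back into Python objects.
parsed = json.loads(text)

print(text)
print(parsed == record)
```

Python's `True` is written as JSON `true`, and the parsed result compares equal to the original object, which is what makes the format convenient for interchange between programs.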

Analytics

Analytics is the systematic computational analysis of data or statistics. It is used for the discovery, interpretation, and communication of meaningful patterns in data. It also entails applying data patterns toward effective decision-making. It can be valuable in areas rich with recorded information; analytics relies on the simultaneous application of statistics, computer programming, and operations research to quantify performance. Organizations may apply analytics to business data to describe, predict, and improve business performance. Specifically, areas within analytics include descriptive analytics, diagnostic analytics, predictive analytics, prescriptive analytics, and cognitive analytics. Analytics may apply to a variety of fields such as marketing, management, finance, online systems, information security, and software services. Since analytics can require extensive computation (see big data), the algorithms and software used for analytics harness the most current meth ...

Machine Learning

Machine learning (ML) is a field of inquiry devoted to understanding and building methods that 'learn', that is, methods that leverage data to improve performance on some set of tasks. It is seen as a part of artificial intelligence. Machine learning algorithms build a model based on sample data, known as training data, in order to make predictions or decisions without being explicitly programmed to do so. Machine learning algorithms are used in a wide variety of applications, such as in medicine, email filtering, speech recognition, agriculture, and computer vision, where it is difficult or unfeasible to develop conventional algorithms to perform the needed tasks (Hu, J.; Niu, H.; Carrasco, J.; Lennox, B.; Arvin, F., "Voronoi-Based Multi-Robot Autonomous Exploration in Unknown Environments via Deep Reinforcement Learning", IEEE Transactions on Vehicular Technology, 2020). A subset of machine learning is closely related to computational statistics, which focuses on making pred ...

Logistic Regression

In statistics, the logistic model (or logit model) is a statistical model that models the probability of an event taking place by having the log-odds for the event be a linear combination of one or more independent variables. In regression analysis, logistic regression (or logit regression) is estimating the parameters of a logistic model (the coefficients in the linear combination). Formally, in binary logistic regression there is a single binary dependent variable, coded by an indicator variable, where the two values are labeled "0" and "1", while the independent variables can each be a binary variable (two classes, coded by an indicator variable) or a continuous variable (any real value). The corresponding probability of the value labeled "1" can vary between 0 (certainly the value "0") and 1 (certainly the value "1"), hence the labeling; the function that converts log-odds to probability is the logistic function, h ...
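The relationship described above, log-odds as a linear combination of the independent variables, converted to a probability by the logistic function, can be sketched as follows. The function names, the intercept, and the coefficient values are illustrative assumptions, not fitted parameters.

```python
import math


def logistic(log_odds: float) -> float:
    """Convert log-odds to a probability in [0, 1]."""
    # Two algebraically equivalent forms, chosen to avoid overflow in exp().
    if log_odds >= 0:
        return 1.0 / (1.0 + math.exp(-log_odds))
    e = math.exp(log_odds)
    return e / (1.0 + e)


def predict_probability(intercept, coefficients, features):
    """Probability of the outcome labeled "1" under a logistic model."""
    # The log-odds are a linear combination of the independent variables.
    log_odds = intercept + sum(c * x for c, x in zip(coefficients, features))
    return logistic(log_odds)


# Hypothetical model with one independent variable.
print(predict_probability(-1.0, [2.0], [0.5]))  # log-odds of 0 gives 0.5
```

Log-odds of zero correspond to even odds, so the example prints `0.5`; large positive or negative log-odds push the probability toward 1 or 0.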

Decision Tree

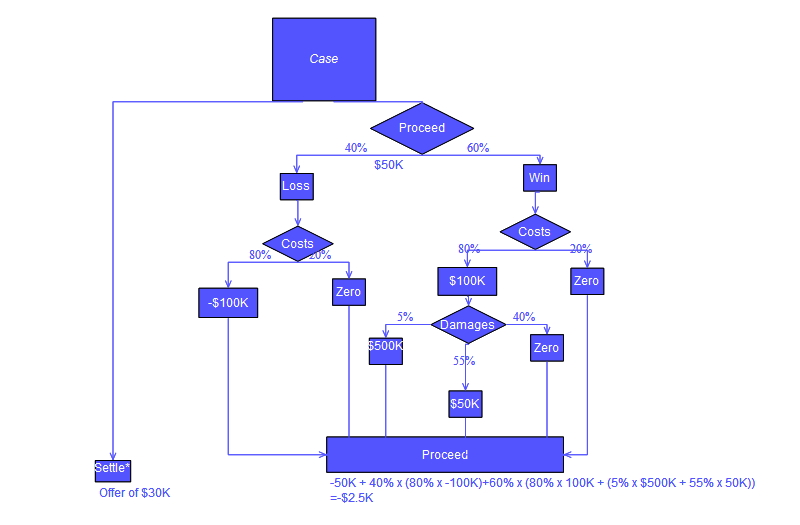

A decision tree is a decision support tool that uses a tree-like model of decisions and their possible consequences, including chance event outcomes, resource costs, and utility. It is one way to display an algorithm that only contains conditional control statements. Decision trees are commonly used in operations research, specifically in decision analysis, to help identify a strategy most likely to reach a goal, but are also a popular tool in machine learning. A decision tree is a flowchart-like structure in which each internal node represents a "test" on an attribute (e.g. whether a coin flip comes up heads or tails), each branch represents the outcome of the test, and each leaf node represents a class label (decision taken after computing all attributes). The paths from root to leaf represent classification rules. In decision analysis, a decision tree and the closely related influence diagram are used as a visual and analytical decision support tool, wh ...
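A minimal sketch of the structure described above: internal nodes test an attribute, branches carry the outcome of the test, and leaves hold a class label. The nested-dictionary layout, the attribute names, and the thresholds are all hypothetical, chosen only for illustration.

```python
def classify(node, sample):
    """Follow tests from the root until a leaf (a node with a label) is reached."""
    while "label" not in node:
        # Internal node: test one attribute against a threshold.
        passed = sample[node["attribute"]] <= node["threshold"]
        # Each branch corresponds to one outcome of the test.
        node = node["yes"] if passed else node["no"]
    return node["label"]


# Hypothetical tree: each root-to-leaf path is one classification rule.
tree = {
    "attribute": "temperature",
    "threshold": 20.0,
    "yes": {"label": "stay in"},
    "no": {
        "attribute": "wind",
        "threshold": 15.0,
        "yes": {"label": "go out"},
        "no": {"label": "stay in"},
    },
}

print(classify(tree, {"temperature": 25.0, "wind": 10.0}))  # go out
```

Because the traversal consists only of conditional tests, the same tree could equally be written as nested `if` statements; the data-driven form simply makes the tree itself easy to inspect or exchange.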

Predictive Model Markup Language

The Predictive Model Markup Language (PMML) is an XML-based predictive model interchange format conceived by Dr. Robert Lee Grossman, then the director of the National Center for Data Mining at the University of Illinois at Chicago. PMML provides a way for analytic applications to describe and exchange predictive models produced by data mining and machine learning algorithms. It supports common models such as logistic regression and other feedforward neural networks. Version 0.9 was published in 1998. Subsequent versions have been developed by the Data Mining Group. Since PMML is an XML-based standard, the specification comes in the form of an XML schema. PMML itself is a mature standard with over 30 organizations having announced products supporting PMML. A PMML file can be described by the following components: * Header: contains general information about the PMML document, su ...

Domain-specific Knowledge Representation Languages

Domain specificity is a theoretical position in cognitive science (especially modern cognitive development) that argues that many aspects of cognition are supported by specialized, presumably evolutionarily specified, learning devices. The position is a close relative of modularity of mind, but is considered more general in that it does not necessarily entail all the assumptions of Fodorian modularity (e.g., informational encapsulation). Instead, it is properly described as a variant of psychological nativism. Other cognitive scientists also hold the mind to be modular, without the modules necessarily possessing the characteristics of Fodorian modularity. Domain specificity emerged in the aftermath of the cognitive revolution as a theoretical alternative to empiricist theories that believed all learning can be driven by the operation of a few such general learning devices. Prominent examples of such domain-general views include Jean Piaget’s theory of cognitive developme ...