
Massive Multitask Language Understanding

In artificial intelligence, Measuring Massive Multitask Language Understanding (MMLU) is a benchmark for evaluating the capabilities of large language models. It consists of about 16,000 multiple-choice questions spanning 57 academic subjects including mathematics, philosophy, law, and medicine. It is one of the most commonly used benchmarks for comparing the capabilities of large language models, with over 100 million downloads as of July 2024. The MMLU was released by Dan Hendrycks and a team of researchers in 2020 and was designed to be more challenging than then-existing benchmarks such as General Language Understanding Evaluation (GLUE), on which new language models were achieving better-than-human accuracy. At the time of the MMLU's release, most existing language models performed around the level of random chance (25%), with the best-performing GPT-3 model achieving 43.9% accuracy. The developers of the MMLU estimate that human domain experts achieve around 89.8% ac ...
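The 25% random-chance figure quoted above is what one would expect if every question offers four answer options and a model guesses uniformly. The following is a minimal sketch of that scoring arithmetic, not the benchmark's official evaluation code; the names `CHOICES`, `accuracy`, and `random_guess_accuracy` are illustrative assumptions, and the four-option format is assumed from the 25% baseline.

```python
import random

# Assumption: four answer options per question, which is what a 25%
# random-chance baseline implies. These names are illustrative only.
CHOICES = ("A", "B", "C", "D")


def accuracy(predictions, answers):
    """Return the fraction of predictions that match the answer key."""
    if len(predictions) != len(answers):
        raise ValueError("predictions and answers must have the same length")
    if not answers:
        raise ValueError("at least one question is required")
    correct = sum(p == a for p, a in zip(predictions, answers))
    return correct / len(answers)


def random_guess_accuracy(answers, seed=0):
    """Score a guesser that picks one of the four options uniformly at random."""
    rng = random.Random(seed)
    guesses = [rng.choice(CHOICES) for _ in answers]
    return accuracy(guesses, answers)


if __name__ == "__main__":
    # A synthetic answer key of roughly the benchmark's size (about 16,000 items).
    key = [CHOICES[i % 4] for i in range(16000)]
    print(f"random guessing: {random_guess_accuracy(key):.3f}")
```

On a key of this size the random guesser should land close to 0.25, which is the reference point against which scores such as 43.9% are read.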

Artificial Intelligence

Artificial intelligence (AI) is intelligence demonstrated by machines (perceiving, synthesizing, and inferring information), as opposed to intelligence displayed by animals and humans. Example tasks in which this is done include speech recognition, computer vision, translation between (natural) languages, as well as other mappings of inputs. The Oxford English Dictionary of Oxford University Press defines artificial intelligence as: the theory and development of computer systems able to perform tasks that normally require human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages. AI applications include advanced web search engines (e.g., Google), recommendation systems (used by YouTube, Amazon and Netflix), understanding human speech (such as Siri and Alexa), self-driving cars (e.g., Tesla), automated decision-making and competing at the highest level in strategic game systems (such as chess and Go). ...

Benchmark (computing)

In computing, a benchmark is the act of running a computer program, a set of programs, or other operations, in order to assess the relative performance of an object, normally by running a number of standard tests and trials against it. The term "benchmark" is also commonly used for the elaborately designed benchmarking programs themselves. Benchmarking is usually associated with assessing performance characteristics of computer hardware, for example, the floating-point operation performance of a CPU, but there are circumstances when the technique is also applicable to software. Software benchmarks are, for example, run against compilers or database management systems (DBMS). Benchmarks provide a method of comparing the performance of various subsystems across different chip/system architectures. As computer architecture advanced, it became more difficult to compare the performance of various computer systems simply by looking at their specificatio ...
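As a rough illustration of running the same standard test against two candidates to compare their relative performance, the sketch below times two ways of summing integers with Python's standard `timeit` module. The helper `benchmark` and the two workloads are hypothetical examples chosen for brevity, not part of any established benchmark suite.

```python
import timeit


def benchmark(func, repeat=5, number=1000):
    """Return the best observed time per call, in seconds.

    Taking the minimum over several repeats reduces the influence of
    background noise on the measurement.
    """
    times = timeit.repeat(func, repeat=repeat, number=number)
    return min(times) / number


def sum_loop():
    """Sum 0..999 with an explicit Python loop."""
    total = 0
    for i in range(1000):
        total += i
    return total


def sum_builtin():
    """Sum 0..999 with the built-in sum()."""
    return sum(range(1000))


if __name__ == "__main__":
    for candidate in (sum_loop, sum_builtin):
        per_call = benchmark(candidate)
        print(f"{candidate.__name__}: {per_call * 1e6:.2f} microseconds per call")
```

Both candidates compute the same result, so any difference in the reported times reflects the implementation rather than the task, which is the property a benchmark relies on.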

Large Language Models

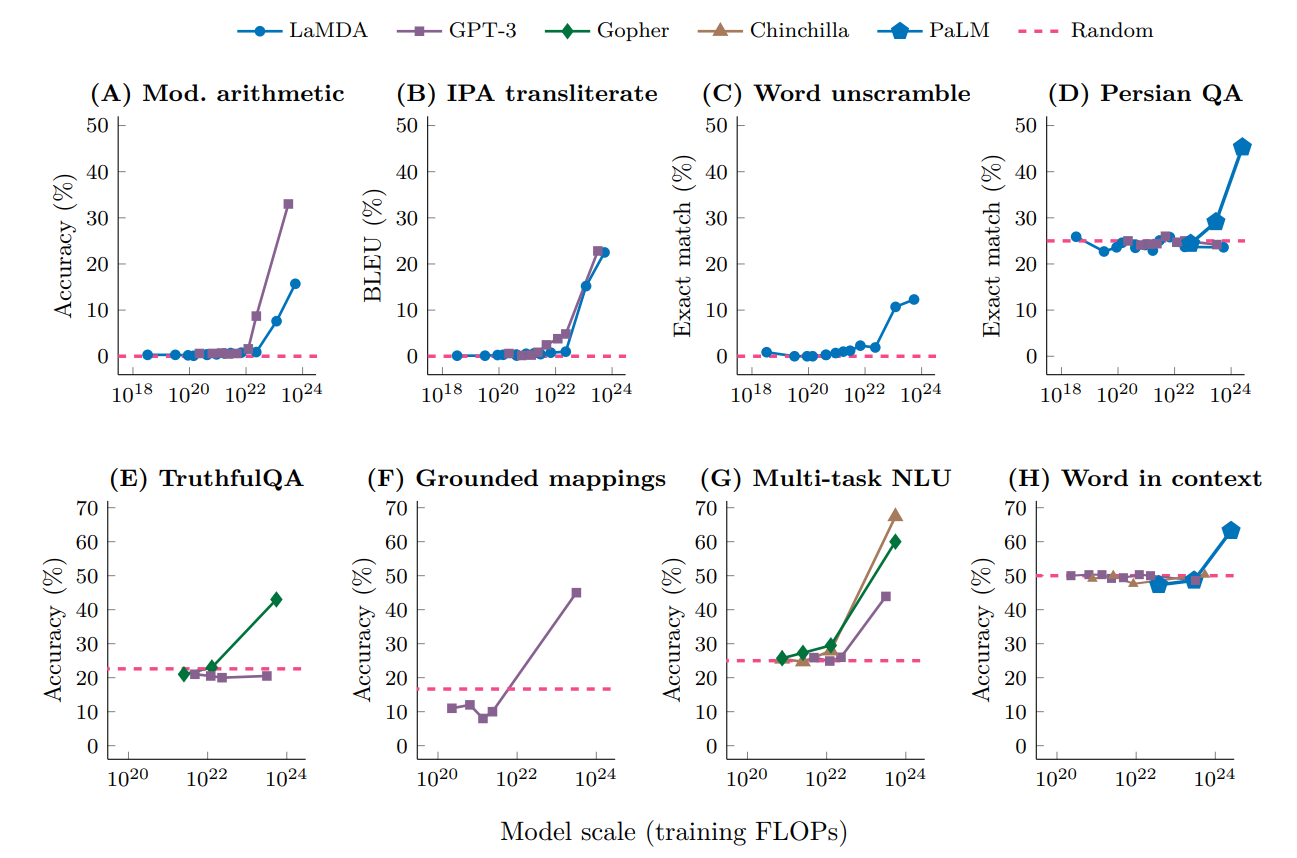

A large language model (LLM) is a language model consisting of a neural network with many parameters (typically billions of weights or more), trained on large quantities of unlabelled text using self-supervised learning. LLMs emerged around 2018 and perform well at a wide variety of tasks. This has shifted the focus of natural language processing research away from the previous paradigm of training specialized supervised models for specific tasks. Though the term "large language model" has no formal definition, it often refers to deep learning models having a parameter count on the order of billions or more. LLMs are general-purpose models which excel at a wide range of tasks, as opposed to being trained for one specific task (such as sentiment analysis, named entity recognition, or mathematical reasoning). The skill with which they accomplish tasks, and the range of tasks of which they are capable, seem to be a function of the amount of resources (data, parameter-si ...

Dan Hendrycks

Dan Hendrycks is an American machine learning researcher. He serves as the director of the Center for AI Safety. Hendrycks was raised in a Christian evangelical household in Marshfield, Missouri. He received a B.S. from the University of Chicago in 2018 and a Ph.D. in computer science from the University of California, Berkeley, in 2022. Hendrycks' research focuses on topics that include machine learning safety, machine ethics, and robustness. He credits his participation in the effective altruism (EA) movement-linked 80,000 Hours program for his career focus on AI safety, though he denied being an advocate for EA. In February 2022, Hendrycks co-authored recommendations for the US National Institute of Standards and Technology (NIST) to inform the management of risks from artificial intelligence. In September 2022, Hendrycks wrote a paper providing a framework for analyzing the impact of AI research on societal risk ...

General Language Understanding Evaluation

These datasets are used for machine learning research and have been cited in peer-reviewed academic journals. Datasets are an integral part of the field of machine learning. Major advances in this field can result from advances in learning algorithms (such as deep learning), computer hardware, and, less intuitively, the availability of high-quality training datasets. High-quality labeled training datasets for supervised and semi-supervised machine learning algorithms are usually difficult and expensive to produce because of the large amount of time needed to label the data. Although they do not need to be labeled, high-quality datasets for unsupervised learning can also be difficult and costly to produce. Image datasets consist primarily of images or videos for tasks such as object detection, facial recognition, and multi-label classification. In computer vision, face images have been used extensively to develop facial recognition system ...

GPT-3

Generative Pre-trained Transformer 3 (GPT-3) is an autoregressive language model that uses deep learning to produce human-like text. Given an initial text as prompt, it will produce text that continues the prompt. The architecture is a standard transformer network (with a few engineering tweaks) of unprecedented size, with a 2048-token context and 175 billion parameters (requiring 800 GB of storage). The training method is "generative pretraining", meaning that it is trained to predict what the next token is. The model demonstrated strong few-shot learning on many text-based tasks. It is the third-generation language prediction model in the GPT-n series (and the successor to GPT-2) created by OpenAI, a San Francisco-based artificial intelligence research laboratory. GPT-3, which was introduced in May 2020 and was in beta testing as of July 2020, is part of a trend in natural language processing (NLP) systems of pre-trained language representations. The quality of t ...

Claude 3

Claude is a family of large language models developed by Anthropic. The first model was released in March 2023. Claude 3, released in March 2024, can also analyze images. Claude models are generative pre-trained transformers. They have been pre-trained to predict the next word in large amounts of text. Claude models have then been fine-tuned with Constitutional AI with the aim of making them helpful, honest, and harmless. Constitutional AI is an approach developed by Anthropic for training AI systems, particularly language models like Claude, to be harmless and helpful without relying on extensive human feedback. The method, detailed in the paper "Constitutional AI: Harmlessness from AI Feedback", involves two phases: supervised learning and reinforcement learning. In the supervised learning phase, the model generates responses to prompts, self-critiques these responses based on a set of guiding principles (a "constitution"), and revises the respon ...

GPT-4

Generative Pre-trained Transformer 4 (GPT-4) is a multimodal large language model created by OpenAI and the fourth in its GPT series. It was released on March 14, 2023, and has been made publicly available in a limited form via ChatGPT Plus, with access to its commercial API being provided via a waitlist. As a transformer, GPT-4 was pretrained to predict the next token (using both public data and "data licensed from third-party providers"), and was then fine-tuned with reinforcement learning from human and AI feedback for human alignment and policy compliance. Observers reported the GPT-4 based version of ChatGPT to be an improvement on the previous (GPT-3.5 based) ChatGPT, with the caveat that GPT-4 retains some of the same problems. Unlike its predecessors, GPT-4 can take images as well as text as input. OpenAI has declined to reveal technical information such as the size of the GPT-4 model. OpenAI published their first paper on GPT in 2018, called "Improv ...

Abstract Algebra

In mathematics, more specifically algebra, abstract algebra or modern algebra is the study of algebraic structures. Algebraic structures include groups, rings, fields, modules, vector spaces, lattices, and algebras over a field. The term "abstract algebra" was coined in the early 20th century to distinguish this area of study from older parts of algebra, and more specifically from elementary algebra, the use of variables to represent numbers in computation and reasoning. Algebraic structures, with their associated homomorphisms, form mathematical categories. Category theory is a formalism that allows a unified way for expressing properties and constructions that are similar for various structures. Universal algebra is a related subject that studies types of algebraic structures as single objects. For example, the structure of groups is a single object in universal algebra, which is called the "variety of groups". Before the nineteenth century, alge ...

International Law

International law (also known as public international law and the law of nations) is the set of rules, norms, and standards generally recognized as binding between states. It establishes normative guidelines and a common conceptual framework for states across a broad range of domains, including war, diplomacy, economic relations, and human rights. Scholars distinguish between international legal institutions on the basis of their obligations (the extent to which states are bound to the rules), precision (the extent to which the rules are unambiguous), and delegation (the extent to which third parties have authority to interpret, apply and make rules). The sources of international law include international custom (general state practice accepted as law), treaties, and general principles of law recognized by most national legal systems. Although international law may also be reflected in international comity, the practices adopted by states to maintain good relations and mut ...

ICCPR

The International Covenant on Civil and Political Rights (ICCPR) is a multilateral treaty that commits nations to respect the civil and political rights of individuals, including the right to life, freedom of religion, freedom of speech, freedom of assembly, electoral rights and rights to due process and a fair trial. It was adopted by United Nations General Assembly Resolution 2200A (XXI) on 16 December 1966 and entered into force on 23 March 1976 after its thirty-fifth ratification or accession. The Covenant has 173 parties and six more signatories without ratification, most notably the People's Republic of China and Cuba; North Korea is the only state that has tried to withdraw. The ICCPR is considered a seminal document in the history of international law and human rights, forming part of the International Bill of Human Rights, along with the International Covenant on Economic, Social and Cultural Rights (ICESCR) and the Universal Declaration of Human Rights (UDHR). Complian ...

O1 (generative Pre-trained Transformer)

OpenAI o1 is a generative pre-trained transformer (GPT). A preview of o1 was released by OpenAI on September 12, 2024. o1 spends time "thinking" before it answers, making it better at complex reasoning tasks, science and programming than GPT-4o. The full version was released on December 5, 2024. According to leaked information, o1 was formerly known within OpenAI as "Q*", and later as "Strawberry". The codename "Q*" first surfaced in November 2023, around the time of Sam Altman's ousting and subsequent reinstatement, with rumors suggesting that this experimental model had shown promising results on mathematical benchmarks. In July 2024, Reuters reported that OpenAI was developing a generative pre-trained transformer known as "Strawberry", which later became o1. "o1-preview" and "o1-mini" were released on September 12, 2024, for ChatGPT Plus and Team users. GitHub started testing the integration of o1-preview in its Copilot service the same day. On D ...